URGENT: DataNode started but not live (dead)

- Labels: Apache Ambari, Apache Hadoop, HDFS

Created on 04-23-2021 02:19 AM - edited 04-23-2021 04:21 AM


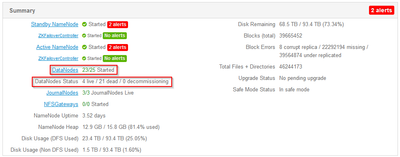

I'm facing a critical issue on an HDP cluster: the DataNodes are running but are reported as dead.

Below are the DataNode logs:

```
2021-04-22 23:30:06,457 WARN checker.StorageLocationChecker (StorageLocationChecker.java:check(209)) - Exception checking StorageLocation [DISK]file:/grid/disk11/hadoop/hdfs/data/
2021-04-22 23:30:06,553 INFO impl.MetricsConfig (MetricsConfig.java:loadFirst(112)) - loaded properties from hadoop-metrics2.properties
2021-04-22 23:30:06,700 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(82)) - Initializing Timeline metrics sink.
2021-04-22 23:30:06,702 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(102)) - Identified hostname = ovh-cnode5.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh, serviceName = datanode
2021-04-22 23:30:06,813 INFO availability.MetricSinkWriteShardHostnameHashingStrategy (MetricSinkWriteShardHostnameHashingStrategy.java:findCollectorShard(42)) - Calculated collector shard ovh-enode6.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh based on hostname: ovh-cnode5.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh
2021-04-22 23:30:06,814 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(125)) - Collector Uri: http://ovh-enode6.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh:6188/ws/v1/timeline/metrics
2021-04-22 23:30:06,814 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(126)) - Container Metrics Uri: http://ovh-enode6.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh:6188/ws/v1/timeline/containermetrics
2021-04-22 23:30:06,827 INFO impl.MetricsSinkAdapter (MetricsSinkAdapter.java:start(206)) - Sink timeline started
2021-04-22 23:30:06,896 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:startTimer(376)) - Scheduled snapshot period at 10 second(s).
2021-04-22 23:30:06,897 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:start(192)) - DataNode metrics system started
2021-04-22 23:30:06,904 INFO datanode.BlockScanner (BlockScanner.java:<init>(180)) - Initialized block scanner with targetBytesPerSec 1048576
2021-04-22 23:30:06,911 INFO common.Util (Util.java:isDiskStatsEnabled(111)) - dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling
2021-04-22 23:30:06,917 INFO datanode.DataNode (DataNode.java:<init>(444)) - File descriptor passing is enabled.
2021-04-22 23:30:06,918 INFO datanode.DataNode (DataNode.java:<init>(455)) - Configured hostname is ovh-cnode5.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh
2021-04-22 23:30:06,918 INFO common.Util (Util.java:isDiskStatsEnabled(111)) - dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling
2021-04-22 23:30:06,918 WARN conf.Configuration (Configuration.java:getTimeDurationHelper(1659)) - No unit for dfs.datanode.outliers.report.interval(1800000) assuming MILLISECONDS
2021-04-22 23:30:06,924 ERROR datanode.DataNode (DataNode.java:secureMain(2692)) - Exception in secureMain
java.lang.RuntimeException: Cannot start secure DataNode without configuring either privileged resources or SASL RPC data transfer protection and SSL for HTTP. Using privileged resources in combination with SASL RPC data transfer protection is not supported.
    at org.apache.hadoop.hdfs.server.datanode.DataNode.checkSecureConfig(DataNode.java:1354)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.startDataNode(DataNode.java:1224)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.<init>(DataNode.java:456)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:2591)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:2493)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:2540)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:2685)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:2709)
2021-04-22 23:30:06,927 INFO util.ExitUtil (ExitUtil.java:terminate(124)) - Exiting with status 1
2021-04-22 23:30:06,933 INFO datanode.DataNode (LogAdapter.java:info(47)) - SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at ovh-cnode5.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh/10.1.2.69
************************************************************/
```

Any help is welcome.

Created 04-26-2021 01:04 AM


Hi @enirys,

From the log snippet below, I suspect that the DN is running on the regular, unprivileged ports such as 50010 and 50075 (as per CM). Please confirm on your end.

```
2021-04-22 23:30:06,918 WARN conf.Configuration (Configuration.java:getTimeDurationHelper(1659)) - No unit for dfs.datanode.outliers.report.interval(1800000) assuming MILLISECONDS
2021-04-22 23:30:06,924 ERROR datanode.DataNode (DataNode.java:secureMain(2692)) - Exception in secureMain
java.lang.RuntimeException: Cannot start secure DataNode without configuring either privileged resources or SASL RPC data transfer protection and SSL for HTTP. Using privileged resources in combination with SASL RPC data transfer protection is not supported.
```

If the DN is running on those ports, note that they are unprivileged ports on the OS. Could you try using privileged ports, i.e. ports below 1024 (generally we use 1004 and 1006)?
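To make the "privileged port" distinction concrete: on Linux, by default only root (or a process with `CAP_NET_BIND_SERVICE`) can bind ports 0 to 1023. The DataNode ports come from `host:port` values such as `dfs.datanode.address` and `dfs.datanode.http.address` in hdfs-site.xml. The helper below is a hypothetical sketch, not part of Hadoop or Ambari. It only classifies the ports mentioned in this thread, assuming the default privileged range has not been changed on the host.

```python
# Hypothetical helper (not part of Hadoop/Ambari): classifies the port of a
# 'host:port' address such as the value of dfs.datanode.address.
PRIVILEGED_PORT_LIMIT = 1024  # ports 0-1023 are privileged on Linux by default


def parse_port(address: str) -> int:
    """Extract the port from a 'host:port' value such as '0.0.0.0:1019'."""
    host, sep, port = address.rpartition(":")
    if not sep or not port.isdigit():
        raise ValueError(f"not a host:port address: {address!r}")
    return int(port)


def is_privileged(address: str) -> bool:
    """True if the port can normally only be bound by root."""
    return parse_port(address) < PRIVILEGED_PORT_LIMIT


if __name__ == "__main__":
    # The suspected default ports versus the suggested privileged ones.
    for addr in ["0.0.0.0:50010", "0.0.0.0:50075", "0.0.0.0:1004", "0.0.0.0:1006"]:
        print(addr, "privileged" if is_privileged(addr) else "unprivileged")
```

Note that 1024 itself is already unprivileged, which is why the usual secure DataNode ports (such as 1004 and 1006) sit just below it.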

The other option is to enable SASL RPC data transfer protection together with TLS (HTTPS) for the DataNode's HTTP endpoint.

The first option should be the easier one. Please try it and let me know.
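The two options can be summarized as a small decision rule, taken directly from the wording of the exception: either privileged resources without SASL, or SASL data transfer protection plus HTTPS without privileged resources. The function below is a simplified, hypothetical model of that message. It is not Hadoop's `DataNode.checkSecureConfig` (whose exact logic varies between Hadoop versions), and it assumes Kerberos security is already enabled, since the check only applies to secure DataNodes. The parameter names are illustrative. `dfs.data.transfer.protection` (values `authentication`, `integrity`, `privacy`) and `dfs.http.policy` (e.g. `HTTP_ONLY`, `HTTPS_ONLY`) are the real hdfs-site.xml properties they stand for.

```python
from typing import Optional


def secure_datanode_config_ok(
    privileged_resources: bool,
    data_transfer_protection: Optional[str],
    http_policy: str,
) -> bool:
    """Simplified model of the rule stated in the secure DataNode error.

    privileged_resources: the DataNode was started with privileged ports
        (e.g. 1004/1006) bound as root.
    data_transfer_protection: value of dfs.data.transfer.protection, or None
        if SASL data transfer protection is not configured.
    http_policy: value of dfs.http.policy, e.g. 'HTTP_ONLY' or 'HTTPS_ONLY'.
    """
    sasl = data_transfer_protection is not None
    # Option 1: privileged ports, no SASL.
    if privileged_resources and not sasl:
        return True
    # Option 2: SASL data transfer protection plus HTTPS, no privileged ports.
    if sasl and http_policy == "HTTPS_ONLY" and not privileged_resources:
        return True
    # Everything else, including privileged ports combined with SASL,
    # corresponds to the "Cannot start secure DataNode" error.
    return False


if __name__ == "__main__":
    # Unprivileged ports, no SASL: the situation suspected in this thread.
    print(secure_datanode_config_ok(False, None, "HTTP_ONLY"))
    # Option 1: privileged ports only.
    print(secure_datanode_config_ok(True, None, "HTTP_ONLY"))
    # Option 2: SASL + HTTPS on unprivileged ports.
    print(secure_datanode_config_ok(False, "authentication", "HTTPS_ONLY"))
    # Unsupported mix: privileged ports + SASL.
    print(secure_datanode_config_ok(True, "privacy", "HTTPS_ONLY"))
```

The four calls print `False`, `True`, `True`, `False`, matching the reply's point that option 1 only requires moving the DataNode to privileged ports, while option 2 requires both SASL and HTTPS.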

Created 04-26-2021 01:29 AM


Created 05-03-2021 04:36 AM


Hi,

The ports are configured correctly; I just restarted my VMs and that fixed the issue.

But it has happened many times, and now I'm seeing other errors in the logs:

```
ERROR datanode.DataNode (DataXceiver.java:run(278)) - ovh-cnode19.26f5de01-5e40-4d8a-98bd-a4353b7bf5e3.datalake.ovh:1019:DataXceiver error processing WRITE_BLOCK operation src: /10.1.2.106:34306 dst: /10.1.2.171:1019
java.io.IOException: Premature EOF from inputStream
```