Unable To Upload A Table In Hive : java.sql.SQLException Error

- Labels: Apache Ambari, Apache Hadoop, Apache Hive

Created 01-09-2018 02:31 PM

I was trying to upload a database table in Hive View. After choosing the file and making the relevant settings, I clicked the UPLOAD TABLE option and got the following error:

java.sql.SQLException: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:java.security.AccessControlException: Permission denied: user=hive, path="file:/":root:root:drwxr-xr-x)

I was denied permission to upload the table. I am using the maria_dev account, which exists by default in the Ambari UI.

I tried changing the permissions with the following command so that I could try again:

hdfs dfs -chmod 777 /

Running this command in the Hortonworks Sandbox terminal produced the following error:

Exception in thread "main" java.lang.RuntimeException: core-site.xml not found
    at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2640)
    at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2566)
    at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2451)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1164)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1136)
    at org.apache.hadoop.conf.Configuration.setBoolean(Configuration.java:1472)
    at org.apache.hadoop.util.GenericOptionsParser.processGeneralOptions(GenericOptionsParser.java:321)
    at org.apache.hadoop.util.GenericOptionsParser.parseGeneralOptions(GenericOptionsParser.java:487)
    at org.apache.hadoop.util.GenericOptionsParser.<init>(GenericOptionsParser.java:170)
    at org.apache.hadoop.util.GenericOptionsParser.<init>(GenericOptionsParser.java:153)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)
    at org.apache.hadoop.fs.FsShell.main(FsShell.java:356)

I have seen a similar error before.

I was uploading the table from my laptop's desktop, i.e. the local file system. I didn't use any Hive query to upload the table. In the Hive View of the Ambari UI, there is an "UPLOAD TABLE" option. I clicked on that option, set the Field Delimiter to tab, and then clicked on "Upload Table". After this, I got the error mentioned above.

Can somebody help me sort out this error?

Created 01-09-2018 04:06 PM

I have seen a couple of questions from you that were related to missing files. There is a chance that your sandbox is corrupted. I suggest downloading the latest sandbox and using that.

For the above question, can you please list the files in the folder '/usr/hdp/current/hadoop-client/conf/' and check whether core-site.xml exists? Also check whether the folder '/etc/hadoop/conf' exists.

Thanks,

Aditya
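
The check requested above can be scripted. Below is a minimal sketch, not an official tool: `check_conf_files` is a hypothetical helper name, and the two example paths are the ones named in this thread. It only tests for presence and says nothing about whether the files are valid.

```shell
# check_conf_files DIR FILE...
# Prints one "ok"/"missing" line per path and returns non-zero if anything is missing.
# -d and -f follow symlinks, so a symlinked conf directory still counts as present.
check_conf_files() {
    ccf_dir="$1"
    shift
    if [ ! -d "$ccf_dir" ]; then
        echo "missing $ccf_dir"
        return 1
    fi
    ccf_rc=0
    for ccf_name in "$@"; do
        if [ -f "$ccf_dir/$ccf_name" ]; then
            echo "ok $ccf_dir/$ccf_name"
        else
            echo "missing $ccf_dir/$ccf_name"
            ccf_rc=1
        fi
    done
    return "$ccf_rc"
}

# Example calls with the paths from this thread (uncomment to run on the sandbox):
# check_conf_files /usr/hdp/current/hadoop-client/conf core-site.xml
# check_conf_files /etc/hadoop/conf core-site.xml
```

Any "missing" line points to the same condition behind the "core-site.xml not found" exception in the question.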

Created 01-09-2018 05:33 PM

In the folder '/usr/hdp/current/hadoop-client/conf/', I found the following files:

capacity-scheduler.xml hadoop-env.sh health_check mapred-site.xml secure yarn-site.xml commons-logging.properties hadoop-metrics2.properties log4j.properties ranger-security.xml task-log4j.properties

The file core-site.xml is missing.

Also, the folder '/etc/hadoop/conf' is missing.

Created 01-09-2018 05:48 PM

Can you please try running the commands below and see whether core-site.xml is created in the /usr/hdp/current/hadoop-client/conf/ folder and whether /etc/hadoop/conf is created?

# curl -k -u {username}:{password} -H "X-Requested-By:ambari" -i -X PUT -d '{"HostRoles": {"state": "INSTALLED"}}' http://{ambari-host}:{ambari-port}/api/v1/clusters/{clustername}/hosts/{hostname}/host_components/HD...

# curl -k -u {username}:{password} -H "X-Requested-By:ambari" -i -X PUT -d '{"HostRoles": {"state": "INSTALLED"}}' http://{ambari-host}:{ambari-port}/api/v1/clusters/{clustername}/hosts/{hostname}/host_components/YA...

# curl -k -u {username}:{password} -H "X-Requested-By:ambari" -i -X PUT -d '{"HostRoles": {"state": "INSTALLED"}}' http://{ambari-host}:{ambari-port}/api/v1/clusters/{clustername}/hosts/{hostname}/host_components/MA...

# yum install -y hadoop hadoop-hdfs hadoop-libhdfs hadoop-yarn hadoop-mapreduce hadoop-client openssl

In the curl commands, replace {username} with the Ambari username, {password} with the Ambari password, {ambari-host} with the Ambari server hostname, {ambari-port} with the Ambari port (default 8080), and {clustername} and {hostname} with your cluster name and host name.

Thanks,

Aditya

Created 01-10-2018 12:35 AM

I tried running the first three commands using:

{username} as admin

{password} as admin

{ambari-host} as sandbox.hortonworks.com

{ambari-port} as 8080 (the default Ambari port)

{clustername} as Sandbox

{hostname} as sandbox.hortonworks.com

E.g. I ran the following command:

curl -k -u admin:admin -H "X-Requested-By:ambari" -i -X PUT -d '{"HostRoles": {"state": "INSTALLED"}}' http://sandbox.hortonworks.com:8080/api/v1/clusters/Sandbox/hosts/sandbox.hortonworks.com/host_compo...

But I got the following error:

HTTP/1.1 404 Not Found
X-Frame-Options: DENY
X-XSS-Protection: 1; mode=block
X-Content-Type-Options: nosniff
Cache-Control: no-store
Pragma: no-cache
Set-Cookie: AMBARISESSIONID=14jxcimd3368rh42fh6lsxsnx;Path=/;HttpOnly
Expires: Thu, 01 Jan 1970 00:00:00 GMT
User: admin
Content-Type: text/plain
Content-Length: 279

{
"status" : 404,
"message" : "org.apache.ambari.server.controller.spi.NoSuchParentResourceException: Parent Host resource doesn't exist. Host not found, cluster=Sandbox, hostname=sandbox.hortonworks.com. Host not found, cluster=Sandbox, hostname=sandbox.hortonworks.com"

What should I do now?
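
The 404 above says that Ambari has no host registered under that name in that cluster. One way to see which host names Ambari does know is to list the cluster's hosts through the REST API. This is a minimal sketch: `ambari_hosts_url` is a hypothetical helper that only builds the URL, and it assumes the usual `/api/v1/clusters/{clustername}/hosts` collection sits under the same base path as the commands earlier in the thread.

```shell
# ambari_hosts_url AMBARI_HOST AMBARI_PORT CLUSTERNAME
# Builds the URL of the host collection for one cluster (assumed standard Ambari layout).
ambari_hosts_url() {
    printf 'http://%s:%s/api/v1/clusters/%s/hosts\n' "$1" "$2" "$3"
}

# Example with the values used in this thread (needs a reachable Ambari server):
# curl -u admin:admin -H "X-Requested-By: ambari" \
#     "$(ambari_hosts_url sandbox.hortonworks.com 8080 Sandbox)"
```

If the host names returned differ from the one in the failing request, substitute the registered name for {hostname} and retry.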

Created on 01-09-2018 10:18 PM - edited 08-18-2019 01:24 AM

As we can see, the error is related to a missing "core-site.xml":

Exception in thread "main" java.lang.RuntimeException: core-site.xml not found

So it looks like something was deleted from your Sandbox; the HDP Sandbox ships with these files.

Files such as "core-site.xml" and "hdfs-site.xml" are basic files that HDFS components/services and HDFS clients need.

So can you please check/try the following:

1. Please check whether you are able to restart your HDFS services:

Ambari UI --> HDFS --> "Service Actions" (Drop Down) --> "Restart All"

2. If the HDFS services restart successfully, the problem is limited to the HDFS clients.

It may be a missing symlink issue. So please check whether the "core-site.xml" and "hdfs-site.xml" files are present in the following location:

Example:

[root@sandbox ~]# ls -l /etc/hadoop/conf/ | grep core-site.xml
-rw-r--r-- 1 hdfs hadoop 4089 May 13 2017 core-site.xml
[root@sandbox ~]# ls -l /etc/hadoop/conf/ | grep hdfs-site.xml
-rw-r--r-- 1 hdfs hadoop 6820 May 13 2017 hdfs-site.xml

3. As you can see, "/etc/hadoop/conf" contains the required files. You should also see symlinks like the following in your file system, which the client uses to locate the HDFS configs:

[root@sandbox ~]# ls -l /etc/hadoop/conf
lrwxrwxrwx 1 root root 35 Apr 19 2017 /etc/hadoop/conf -> /usr/hdp/current/hadoop-client/conf
[root@sandbox ~]# ls -l /usr/hdp/current/hadoop-client/conf
lrwxrwxrwx 1 root root 23 Apr 19 2017 /usr/hdp/current/hadoop-client/conf -> /etc/hadoop/2.6.0.3-8/0

NOTE: The HDP version directory might be slightly different in your case, but the symlinks should be created as above.

4. Creating the symlinks is the responsibility of the "hdp-select" command while installing the components. So it looks like the symlink creation is somehow failing at your end.

So you might reinstall the HDFS clients on this host (and on any other host) where the HDFS command fails to execute.

HDFS clients can be installed from the Ambari UI as follows:

Log in to the Ambari UI and then navigate to:

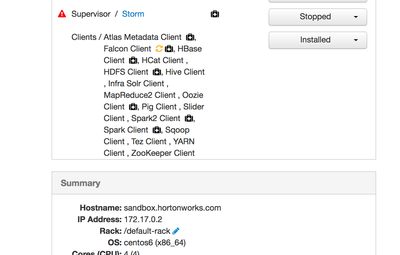

Ambari UI --> Hosts (Tab) --> Click on the Select Hostname link -->Installed (Drop Down) --> Install Clients.
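
The symlink check from step 3 can also be done hop by hop, which shows exactly where a chain breaks. This is a minimal sketch under stated assumptions: `show_link_chain` is a hypothetical helper name, it relies only on `readlink` and `dirname`, and it stops after 20 hops as an arbitrary guard against loops.

```shell
# show_link_chain PATH
# Prints every symlink hop starting at PATH, then reports whether the final target exists.
show_link_chain() {
    slc_path="$1"
    slc_hops=0
    while [ -L "$slc_path" ] && [ "$slc_hops" -lt 20 ]; do
        slc_target=$(readlink "$slc_path")
        echo "$slc_path -> $slc_target"
        case "$slc_target" in
            /*) slc_path="$slc_target" ;;                        # absolute target
            *)  slc_path="$(dirname "$slc_path")/$slc_target" ;; # relative to the link's directory
        esac
        slc_hops=$((slc_hops + 1))
    done
    if [ -e "$slc_path" ]; then
        echo "resolved: $slc_path"
    else
        echo "broken: $slc_path does not exist"
        return 1
    fi
}

# Example with the path from this thread (uncomment to run on the sandbox):
# show_link_chain /etc/hadoop/conf
```

On a healthy sandbox the output should mirror the two `ls -l` listings in step 3 and end with a "resolved:" line; a "broken:" line names the first path that is missing.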


Created 01-10-2018 12:30 AM

I was unable to restart the HDFS services; there was an error.

Also, the folder "/etc/hadoop/conf" is missing.

I also went through the Install Clients option you suggested, but it installed only the HCat client. I think the rest of the clients were pre-installed.

What should I do now?

Created 01-10-2018 12:37 AM

As you mentioned:

Also, the folder "/etc/hadoop/conf" is missing.

This indicates that someone deleted the directory "/etc/hadoop/conf": if the services are visible in Ambari, it means they were installed properly and the directory was deleted later by mistake.

In this case, I suggest that if you have a backup of that directory (or can copy it from another host in the cluster), you try restoring its contents.

Otherwise, if you are using the HDP Sandbox, just create another VM from the Sandbox image to get back to the original state.

Recovering the missing directory will be tough if you do not have a backup. If you want to know who deleted that directory and when, you might want to look at the OS audit log.

Created 01-10-2018 12:40 AM

Created 01-10-2018 12:59 AM

I have tried to create a new Sandbox image in Docker several times before, and similar errors have always cropped up. I feel that there is something wrong with the Hortonworks Sandbox for Docker that has been uploaded to the official website. 😞

I downloaded the Hortonworks Sandbox for Docker from here: https://hortonworks.com/downloads/#