Support Questions

- Cloudera Community


Unable to access HDFS from CDSW session

Created 06-24-2020 07:35 AM


Hi,

I would appreciate any advice on how to solve the following problem: in an HA-enabled CDH 6.3.2 cluster, I am unable to access HDFS from a CDSW CLI session:

!hdfs dfs -ls /

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"WARN","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:37","logger":"hdfs.DFSUtilClient","timezone":"UTC","log":{"message":"Namenode for namenodeHA remains unresolved for ID namenode43. Check your hdfs-site.xml file to ensure namenodes are configured properly."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"WARN","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:37","logger":"hdfs.DFSUtilClient","timezone":"UTC","log":{"message":"Namenode for namenodeHA remains unresolved for ID namenode57. Check your hdfs-site.xml file to ensure namenodes are configured properly."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:38","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 1 failover attempts. Trying to failover after sleeping for 813ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:38","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 2 failover attempts. Trying to failover after sleeping for 1903ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:40","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 3 failover attempts. Trying to failover after sleeping for 2225ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:43","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 4 failover attempts. Trying to failover after sleeping for 9688ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:08:52","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 5 failover attempts. Trying to failover after sleeping for 9501ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:09:02","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 6 failover attempts. Trying to failover after sleeping for 9001ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:09:11","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 7 failover attempts. Trying to failover after sleeping for 13904ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:09:25","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 8 failover attempts. Trying to failover after sleeping for 14567ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:09:39","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 9 failover attempts. Trying to failover after sleeping for 15279ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:09:55","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 10 failover attempts. Trying to failover after sleeping for 10985ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:10:05","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 11 failover attempts. Trying to failover after sleeping for 8394ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:10:14","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 12 failover attempts. Trying to failover after sleeping for 21701ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:10:36","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-03.novalocal:8020 after 13 failover attempts. Trying to failover after sleeping for 16983ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/06/24 13:10:53","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "cdh-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over cdh-control-02.novalocal:8020 after 14 failover attempts. Trying to failover after sleeping for 8437ms."}}

ls: Invalid host name: local host is: (unknown); destination host is: "cdh-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost

The contents of the /etc/hosts file on the CDH and CDSW nodes are:

# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.10.112 cdh-control-02.novalocal

10.10.10.111 cdh-control-01.novalocal

10.10.10.131 cdh-worker-01.novalocal

10.10.10.132 cdh-worker-02.novalocal

10.10.10.122 cdh-edge-02.novalocal

10.10.10.113 cdh-control-03.novalocal

10.10.10.121 cdh-edge-01.novalocal

10.10.10.133 cdh-worker-03.novalocal

10.10.10.110 cdsw-master-01.novalocal

10.10.10.130 cdsw-worker-01.novalocal
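One way to confirm from inside the session that this is a name-resolution failure (the log reports `UnknownHostException` for both NameNodes) is to resolve the hosts with Python's standard `socket` module, whose `getaddrinfo` goes through the session container's system resolver. A minimal sketch, assuming the two NameNode hostnames and port 8020 taken from the log above; `check_resolution` is a hypothetical helper, not part of CDSW or Hadoop:

```python
import socket

# NameNode RPC endpoints as they appear in the UnknownHostException messages above.
NAMENODES = [
    ("cdh-control-02.novalocal", 8020),
    ("cdh-control-03.novalocal", 8020),
]


def check_resolution(endpoints, resolver=socket.getaddrinfo):
    """Return {host: sorted list of resolved IPs, or None if the lookup failed}.

    `resolver` defaults to socket.getaddrinfo and can be swapped for a stub in tests.
    """
    results = {}
    for host, port in endpoints:
        try:
            infos = resolver(host, port, proto=socket.IPPROTO_TCP)
        except OSError:  # socket.gaierror is a subclass of OSError
            results[host] = None
            continue
        # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
        results[host] = sorted({info[4][0] for info in infos})
    return results
```

In a CDSW Python session, a host mapped to `None` by `check_resolution(NAMENODES)` cannot be resolved from that session, which is consistent with the `UnknownHostException` above.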

Created 07-28-2020 02:05 AM


@Marek The only thing I can think of from the logs is an issue with the client configuration. Please check:

echo $PATH

which hdfs

I am wondering whether /opt/cloudera/parcels/CDH/bin is missing from the default PATH set in the session. If so, export /opt/cloudera/parcels/CDH/bin manually and run the HDFS listing again to see whether that works.

Cheers!

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.
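The PATH check suggested above can also be done from the CDSW Python session itself rather than through `!` shell escapes. A minimal sketch; the helper names are hypothetical, and it assumes that shell commands launched afterwards with `!` inherit the Python process's environment (they normally do, since they run as child processes):

```python
import os
import shutil

# Parcel bin directory named in the reply above.
CDH_BIN = "/opt/cloudera/parcels/CDH/bin"


def cdh_bin_on_path(path_value=None, cdh_bin=CDH_BIN):
    """Return True if cdh_bin is an entry of the PATH-style string (default: $PATH)."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    # Ignore empty entries and trailing slashes when comparing directories.
    entries = {p.rstrip("/") for p in path_value.split(os.pathsep) if p}
    return cdh_bin.rstrip("/") in entries


def ensure_cdh_bin_on_path(cdh_bin=CDH_BIN):
    """Append cdh_bin to this process's PATH if missing; return what `which hdfs` finds."""
    if not cdh_bin_on_path(cdh_bin=cdh_bin):
        current = os.environ.get("PATH", "")
        # Join only non-empty parts so an empty PATH does not gain a stray ":" entry.
        os.environ["PATH"] = os.pathsep.join(p for p in (current, cdh_bin) if p)
    return shutil.which("hdfs")
```

If `ensure_cdh_bin_on_path()` returns `None`, no `hdfs` executable was found even after adding the directory, which would point at the parcel not being available in the session rather than at PATH.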

Created on 07-28-2020 04:32 AM - edited 07-28-2020 04:53 AM


Please see the CDSW session command log and the contents of the hdfs-site.xml file below.

!echo $PATH

/usr/lib/jvm/jre-openjdk/bin:/home/cdsw/.local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/opt/conda/bin:/opt/cloudera/parcels/CDH/bin:/home/cdsw/.conda/envs/python3.6/bin

!which hdfs

/opt/cloudera/parcels/CDH/bin/hdfs

!/opt/cloudera/parcels/CDH/bin/hdfs dfs -ls /

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"WARN","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/07/28 11:28:47","logger":"hdfs.DFSUtilClient","timezone":"UTC","log":{"message":"Namenode for namenodeHA remains unresolved for ID namenode43. Check your hdfs-site.xml file to ensure namenodes are configured properly."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"WARN","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/07/28 11:28:47","logger":"hdfs.DFSUtilClient","timezone":"UTC","log":{"message":"Namenode for namenodeHA remains unresolved for ID namenode57. Check your hdfs-site.xml file to ensure namenodes are configured properly."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/07/28 11:28:47","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "blc-control-03.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over blc-control-03.novalocal:8020 after 1 failover attempts. Trying to failover after sleeping for 1424ms."}}

{"type":"log","host":"host_name","category":"HDFS-hdfs-GATEWAY-BASE","level":"INFO","system":"etcd_clcm_std_3C_2E_3W_cdh","time": "20/07/28 11:28:49","logger":"retry.RetryInvocationHandler","timezone":"UTC","log":{"message":"java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "blc-control-02.novalocal":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost, while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over blc-control-02.novalocal:8020 after 2 failover attempts. Trying to failover after sleeping for 2662ms."}}

<?xml version="1.0" encoding="UTF-8"?>

<!--Autogenerated by Cloudera Manager-->

<configuration>

<property>

<name>dfs.nameservices</name>

<value>namenodeHA</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.namenodeHA</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled.namenodeHA</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>blc-control-01.novalocal:2181,blc-control-02.novalocal:2181,blc-control-03.novalocal:2181</value>

</property>

<property>

<name>dfs.ha.namenodes.namenodeHA</name>

<value>namenode43,namenode57</value>

</property>

<property>

<name>dfs.namenode.rpc-address.namenodeHA.namenode43</name>

<value>blc-control-02.novalocal:8020</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.namenodeHA.namenode43</name>

<value>blc-control-02.novalocal:8022</value>

</property>

<property>

<name>dfs.namenode.http-address.namenodeHA.namenode43</name>

<value>blc-control-02.novalocal:9870</value>

</property>

<property>

<name>dfs.namenode.https-address.namenodeHA.namenode43</name>

<value>blc-control-02.novalocal:9871</value>

</property>

<property>

<name>dfs.namenode.rpc-address.namenodeHA.namenode57</name>

<value>blc-control-03.novalocal:8020</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.namenodeHA.namenode57</name>

<value>blc-control-03.novalocal:8022</value>

</property>

<property>

<name>dfs.namenode.http-address.namenodeHA.namenode57</name>

<value>blc-control-03.novalocal:9870</value>

</property>

<property>

<name>dfs.namenode.https-address.namenodeHA.namenode57</name>

<value>blc-control-03.novalocal:9871</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

<property>

<name>dfs.client.use.datanode.hostname</name>

<value>false</value>

</property>

<property>

<name>fs.permissions.umask-mode</name>

<value>022</value>

</property>

<property>

<name>dfs.client.block.write.locateFollowingBlock.retries</name>

<value>7</value>

</property>

<property>

<name>dfs.namenode.acls.enabled</name>

<value>false</value>

</property>

<property>

<name>dfs.client.read.shortcircuit</name>

<value>false</value>

</property>

<property>

<name>dfs.domain.socket.path</name>

<value>/var/run/hdfs-sockets/dn</value>

</property>

<property>

<name>dfs.client.read.shortcircuit.skip.checksum</name>

<value>false</value>

</property>

<property>

<name>dfs.client.domain.socket.data.traffic</name>

<value>false</value>

</property>

<property>

<name>dfs.datanode.hdfs-blocks-metadata.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.client.block.write.replace-datanode-on-failure.enable</name>

<value>true</value>

</property>

<property>

<name>dfs.client.block.write.replace-datanode-on-failure.policy</name>

<value>ALWAYS</value>

</property>

<property>

<name>dfs.client.block.write.replace-datanode-on-failure.best-effort</name>

<value>true</value>

</property>

</configuration>
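The warnings name the NameNode IDs (namenode43, namenode57); each ID is turned into a host and port through the dfs.namenode.rpc-address.<nameservice>.<ID> property, so it helps to list exactly which hosts the client will try to reach and compare them with the /etc/hosts entries shown in the question. A minimal sketch using Python's standard ElementTree; the helper names are hypothetical, and the client configuration path /etc/hadoop/conf/hdfs-site.xml in the usage note is an assumption about this environment:

```python
import xml.etree.ElementTree as ET


def load_properties(xml_text):
    """Parse Hadoop *-site.xml content (str or bytes) into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def split_list(value):
    """Split a comma-separated Hadoop property value, dropping blanks."""
    return [item.strip() for item in value.split(",") if item.strip()]


def namenode_rpc_addresses(props):
    """Return {nameservice: {NameNode ID: (host, port) or None}} for HA nameservices."""
    result = {}
    for ns in split_list(props.get("dfs.nameservices", "")):
        result[ns] = {}
        for nn_id in split_list(props.get(f"dfs.ha.namenodes.{ns}", "")):
            addr = props.get(f"dfs.namenode.rpc-address.{ns}.{nn_id}")
            if not addr:
                result[ns][nn_id] = None  # ID declared but no RPC address configured
                continue
            host, sep, port = addr.rpartition(":")
            result[ns][nn_id] = (host, int(port)) if sep else (addr, None)
    return result
```

For example, `namenode_rpc_addresses(load_properties(open("/etc/hadoop/conf/hdfs-site.xml", "rb").read()))` on the file above would map namenode43 to ("blc-control-02.novalocal", 8020) and namenode57 to ("blc-control-03.novalocal", 8020); those are the hostnames the session has to be able to resolve.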

Created on 08-01-2020 08:39 AM - edited 08-01-2020 08:42 AM


@Marek I tried my best to dig into this issue for you. First of all, I want to clarify that this is not a CDSW issue; it is an HDFS client configuration issue. I found a discrepancy:

- In the hdfs-site.xml file, note the dfs.ha.namenodes.[nameservice ID] property, where you have declared the NameNode IDs as "namenode43,namenode57". This is what causes the issue, as indicated by the message below:

<property>

<name>dfs.ha.namenodes.namenodeHA</name>

<value>namenode43,namenode57</value>

</property>

"Namenode for namenodeHA remains unresolved for ID namenode57. Check your hdfs-site.xml file to ensure namenodes are configured properly."

So you should declare the IP address or FQDN here, because the session cannot resolve this short name: there is no entry for it in the "/etc/hosts" file. Your "/etc/hosts" file contains only entries in the following format:

---- RUN cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

IP address blc-control-02.novalocal

IP address blc-control-01.novalocal

👆 This format is not recommended. Network names should be configured as below:

1.1.1.1 foo-1.example.com foo-1

2.2.2.2 foo-2.example.com foo-2

3.3.3.3 foo-3.example.com foo-3

4.4.4.4 foo-4.example.com foo-4

Ideally, the following conditions should be met:

- Your CDSW session should resolve localhost as follows:

!nslookup localhost

Server: IP address

Address: IP address#53

Name: localhost

Address: 127.0.0.1

Name: localhost

Address: ::1

- Your "/etc/hosts" file should follow the recommended network names configuration.

- Your hdfs-site.xml should use FQDNs or IP addresses.

NOTE: Adding short-name entries for "namenode43,namenode57" to the "/etc/hosts" file might work with your existing hdfs-site.xml, but I would recommend using FQDNs to avoid other issues.

Please make these changes and let me know how it goes; I am looking forward to this issue being resolved 🙂🤞

Cheers!
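The recommended /etc/hosts layout above (IP address, then FQDN, then short name on one line) is easy to check mechanically. A minimal sketch, assuming the file content is available as text; the function names are hypothetical, and it only flags lines whose first hostname is an FQDN without the matching short alias, which is the difference between the two formats discussed above:

```python
def parse_hosts(text):
    """Parse /etc/hosts-style text into a list of (ip, [names]) tuples."""
    entries = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        entries.append((ip, names))
    return entries


def missing_short_alias(entries):
    """Return FQDNs whose line lacks the short alias (e.g. foo-1 for foo-1.example.com)."""
    problems = []
    for _ip, names in entries:
        if not names:
            continue
        canonical = names[0]
        if "." not in canonical:
            continue  # e.g. "localhost": not an FQDN, nothing to check
        short = canonical.split(".", 1)[0]
        if short not in names[1:]:
            problems.append(canonical)
    return problems
```

Run against the hosts file from the question, `missing_short_alias(parse_hosts(open("/etc/hosts").read()))` would flag every cdh-* and cdsw-* entry, because each of those lines carries only the FQDN; the localhost lines are skipped.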


Created on 08-12-2020 01:09 AM - edited 08-12-2020 01:16 AM


@GangWar I am confused: earlier you wrote that CDSW does not care about the /etc/hosts file, and now you say that the short names should be declared in the /etc/hosts file. Which statement is correct?

That said, if the CDSW hosts are managed by Cloudera Manager, shouldn't Cloudera Manager take care of the relevant configuration on all cluster hosts? In other words, if the CDH hosts in the cluster communicate correctly with the HDFS NameNodes based on the hdfs-site.xml config file, why don't the CDSW hosts?

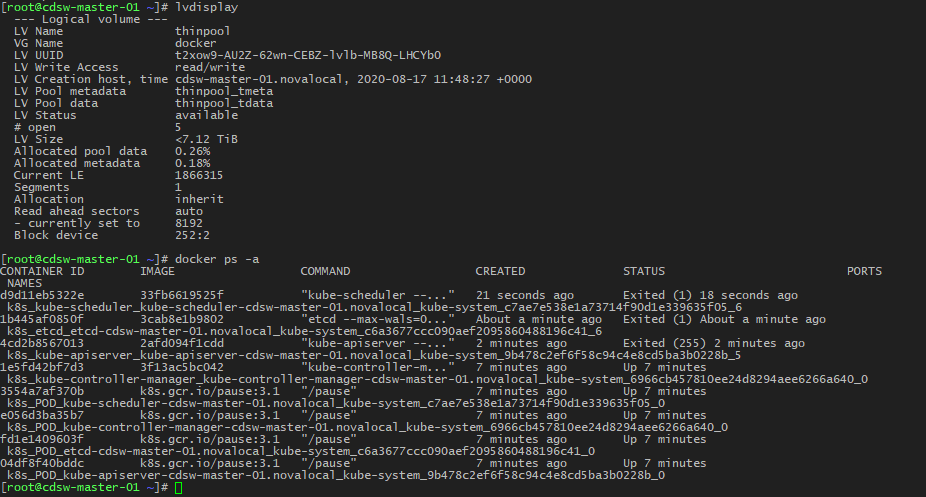

Unfortunately, in the meantime the CDSW master host crashed and I was unable to restore it through Cloudera Manager. I tried to solve this by removing the CDSW service from the cluster, removing the CDSW host completely from the cluster, destroying the old VM and creating a new one for the CDSW master, redeploying the requirements on it, and adding it back to CM and the cluster. However, the problem now is adding the CDSW service back to the cluster: the procedure gets stuck while running /opt/cloudera/parcels/CDSW/scripts/create-docker-thinpool.sh. It hangs at this command:

lvcreate --wipesignatures y -n thinpool docker -l 95%VG

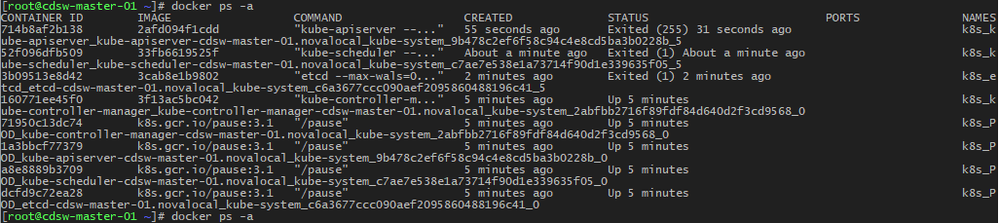

The procedure to add the CDSW service continues and completes only if I manually terminate the hanging lvcreate process from the CLI (kill -2 <pid>). However, the Docker daemon then seems to malfunction, as several service pods do not come up, including the CDSW web GUI.

Created 08-13-2020 11:32 AM


@Marek CDSW doesn't use /etc/hosts for internal communication, i.e. between Kubernetes and the pod hierarchy. Here the issue is on the HDFS side, as stated in my previous comment.

Regarding the lvcreate command, you are hitting a known bug: https://access.redhat.com/solutions/1228673

You have to run the command manually from a terminal (do not kill it) while starting only the Docker role; then start the Master and Application roles, in that order, and see whether CDSW comes up.

Cheers!


Created on 08-17-2020 01:17 AM - edited 08-17-2020 05:15 AM


@GangWar Which command should I run manually from the terminal, on which cluster hosts, and at which point in the overall procedure of adding the CDSW service to the cluster?

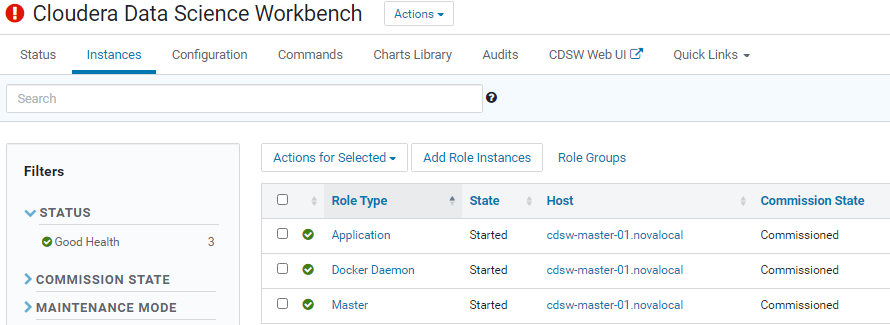

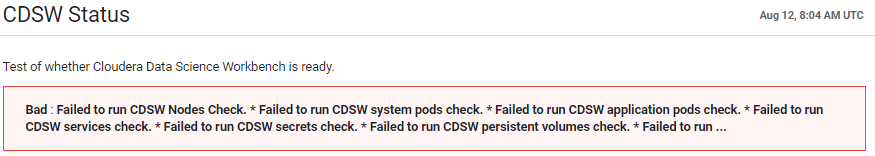

Meanwhile, I removed the CDSW roles and host from the cluster and from Cloudera Manager, created another clean VM, adjusted its configuration to meet the requirements, and added the CDSW service and its roles back on the new host. Unfortunately, the CDSW service reports the same errors as before and the web GUI is not accessible. The docker-thinpool logical volume was created successfully; however, the containers keep crashing/exiting (see the screenshots above).

Created 08-21-2020 11:25 AM


@Marek Try to start CDSW as follows, and then check the logs of any failed pods.

1. Go to CM > CDSW > Stop (to stop CDSW first).

2. Go to CM > CDSW > Action > Run Prepare Node.

3. Go to CM > CDSW > Instances:

i) Select only the Docker role on the Master host and start it.

ii) Select the Master role on the Master host and start it.

iii) Then select the Application role on the Master host and start it.

iv) Select the Docker role on the Worker host and start it.

v) Finally, select all Worker roles on the CDSW hosts and start them.

Cheers!


Created on 08-24-2020 01:59 AM - edited 08-24-2020 02:03 AM


@GangWar I followed the steps jointly with a Cloudera representative (Kamel D). Unfortunately, the problem persists: several containers keep exiting.

Created on 09-02-2020 07:22 AM - edited 09-02-2020 07:22 AM


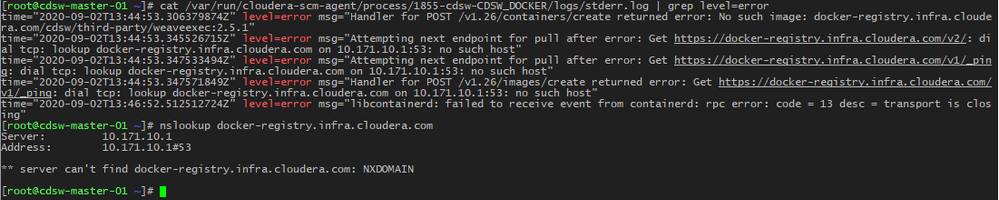

I have done some further troubleshooting. According to the stderr.log of the CDSW master Docker process, there might be a problem with Kubernetes DNS resolution due to missing Weave containers for pod networking. Indeed, DNS lookup cannot resolve the FQDN of one of the container repositories, docker-registry.infra.cloudera.com, which is supposed to hold the Weave containers.

Are you able to verify and confirm whether that is the root cause?

Created 09-02-2020 10:28 AM


@Marek No, that's a false alarm: docker-registry is not supposed to be publicly accessible, so that failure is expected. The more worrisome part is this:

I0831 17:16:26.129073 10863 kubelet_node_status.go:279] Setting node annotation to enable volume controller attach/detach

W0831 17:16:26.129340 10863 kubelet_node_status.go:481] Failed to set some node status fields: failed to validate nodeIP: Node IP: "Public-IP-Address" not found in the host's network interfaces

I0831 17:16:26.131194 10863 kubelet_node_status.go:447] Recording NodeHasSufficientMemory event message for node cdsw-master-01.novalocal

I0831 17:16:26.131229 10863 kubelet_node_status.go:447] Recording NodeHasNoDiskPressure event message for node cdsw-master-01.novalocal

I0831 17:16:26.131239 10863 kubelet_node_status.go:447] Recording NodeHasSufficientPID event message for node cdsw-master-01.novalocal

I0831 17:16:26.131255 10863 kubelet_node_status.go:72] Attempting to register node cdsw-master-01.novalocal

E0831 17:16:26.131750 10863 kubelet_node_status.go:94] Unable to register node "cdsw-master-01.novalocal" with API server: Post https://Public-IP-Address:6443/api/v1/nodes: dial tcp Public-IP-Address:6443: connect: connection refused

E0831 17:16:26.137202 10863 reflector.go:125] k8s.io/kubernetes/pkg/kubelet/kubelet.go:453: Failed to list *v1.Node: Get https://Public-IP-Address:6443/api/v1/nodes?fieldSelector=metadata.name%3Dcdsw-master-01.novalocal&limit=500&resourceVersion=0: dial tcp Public-IP-Address:6443: connect: connection refused

E0831 17:16:26.138323 10863 reflector.go:125] k8s.io/kubernetes/pkg/kubelet/kubelet.go:444: Failed to list *v1.Service: Get https://Public-IP-Address:6443/api/v1/services?limit=500&resourceVersion=0: dial tcp Public-IP-Address:6443: connect: connection refused

E0831 17:16:26.139410 10863 reflector.go:125] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:47: Failed to list *v1.Pod: Get https://Public-IP-Address:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dcdsw-master-01.novalocal&limit=500&resourceVersion=0: dial tcp Public-IP-Address:6443: connect: connection refused

E0831 17:16:26.184663 10863 kubelet.go:2266] node "cdsw-master-01.novalocal" not found

E0831 17:16:26.284919 10863 kubelet.go:2266] node "cdsw-master-01.novalocal" not found

E0831 17:16:26.385174 10863 kubelet.go:2266] node "cdsw-master-01.novalocal" not found

E0831 17:16:26.485392 10863 kubelet.go:2266] node "cdsw-master-01.novalocal" not found

In my cluster, by contrast, searching the process logs for "Successfully registered node" returns matches; in yours it does not.

[DNroot@100.96 process]# rg "Successfully registered node"

2323-cdsw-CDSW_MASTER/logs/stderr.log

4919:I0824 07:47:48.639094 9038 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

2213-cdsw-CDSW_MASTER/logs/stderr.log

4733:I0729 04:19:04.480413 26592 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

2243-cdsw-CDSW_MASTER/logs/stderr.log.2

4629:I0729 04:25:25.114590 18483 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

2182-cdsw-CDSW_MASTER/logs/stderr.log

5140:I0728 10:02:24.590379 11039 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

2108-cdsw-CDSW_MASTER/logs/stderr.log

4761:I0707 03:34:20.010139 8360 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

2123-cdsw-CDSW_MASTER/logs/stderr.log.1

4907:I0708 10:39:33.202393 6672 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

2058-cdsw-CDSW_MASTER/logs/stderr.log

4747:I0703 04:18:25.271379 30286 kubelet_node_status.go:75] Successfully registered node host-10-17-xxx-xx

So again, this points me back to the network issue 🙂

Cheers!
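The `rg` comparison above can be reproduced with a small script that scans the CDSW master stderr.log files for the kubelet registration messages quoted in this thread. A minimal sketch; the regular expressions are written against the log lines shown above, and the default glob pattern assumes the standard Cloudera Manager agent process directory (/var/run/cloudera-scm-agent/process), which may differ on your hosts:

```python
import glob
import re

# Patterns written against the kubelet lines quoted in this thread.
PATTERNS = {
    "registered": re.compile(r"Successfully registered node (\S+)"),
    "unable_to_register": re.compile(r'Unable to register node "([^"]+)"'),
    "bad_node_ip": re.compile(r'failed to validate nodeIP: Node IP: "([^"]+)" not found'),
}


def summarize_kubelet_log(lines):
    """Collect node names / IPs matched by each pattern across the given log lines."""
    summary = {key: set() for key in PATTERNS}
    for line in lines:
        for key, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match:
                summary[key].add(match.group(1))
    return summary


def scan_master_logs(
    pattern="/var/run/cloudera-scm-agent/process/*-cdsw-CDSW_MASTER/logs/stderr.log*",
):
    """Yield (path, summary) for each CDSW master stderr.log matching the (assumed) glob."""
    for path in sorted(glob.glob(pattern)):
        with open(path, errors="replace") as handle:
            yield path, summarize_kubelet_log(handle)
```

Judging by the comparison above, a healthy master should show at least one entry under `registered`; the log excerpt from the failing master would only match `unable_to_register` (cdsw-master-01.novalocal) and `bad_node_ip` (Public-IP-Address).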
