Support Questions


Unable to complete the first installation of Cloudera: problem accessing /cmf/process/287/logs

Labels: Cloudera Manager

Created on 11-05-2019 08:08 AM, last edited on 11-05-2019 08:16 AM by Robert Justice


Hello everyone,

I can't complete the first installation of my cluster on AWS.

When I try to install my instances on my nodes, Cloudera Manager returns the following error:

    HTTP ERROR 502
    Problem accessing /cmf/process/287/logs. Reason:
        Connection refused (Connection refused)
    Could not connect to host.

I know the error looks simple, but I am struggling to find the solution.

My first thought was the /etc/hosts file, which is the following on every host in my cluster:

    127.0.0.1 localhost.localdomain localhost
    13.48.140.49 master.sysdatadigital.it master
    13.48.181.38 slave1.sysdatadigital.it slave1
    13.48.185.39 slave2.sysdatadigital.it slave2
    13.53.62.160 slave3.sysdatadigital.it slave3
    13.48.18.0 slave4.sysdatadigital.it slave4
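A hosts file like this one can be sanity-checked mechanically. The sketch below is a minimal Python 3 example (the function names are hypothetical, not part of any Cloudera tool): it parses /etc/hosts-style text and flags cluster names that map to more than one address or to loopback, two common reasons a service binds to or dials the wrong interface. It only inspects the text it is given and does not query DNS.

```python
import ipaddress

# Names that legitimately point at loopback and may appear on several lines.
LOCAL_NAMES = {"localhost", "localhost.localdomain", "ip6-localhost", "ip6-loopback"}


def parse_hosts(text):
    """Map every hostname and alias in /etc/hosts-style text to its addresses."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, []).append(ip)
    return mapping


def find_problems(mapping):
    """Flag cluster names mapped to several addresses or to loopback."""
    problems = []
    for name, ips in sorted(mapping.items()):
        if name in LOCAL_NAMES:
            continue
        distinct = sorted(set(ips))
        if len(distinct) > 1:
            problems.append(f"{name}: multiple addresses {distinct}")
        for ip in distinct:
            try:
                if ipaddress.ip_address(ip).is_loopback:
                    problems.append(f"{name}: resolves to loopback {ip}")
            except ValueError:
                problems.append(f"{name}: invalid address {ip!r}")
    return problems
```

On each node, something like `print(find_problems(parse_hosts(open("/etc/hosts").read())))` should print an empty list if the file is consistent.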

I also checked the hostname on each node with the following command:

    python -c "import socket; print(socket.getfqdn()); print(socket.gethostbyname(socket.getfqdn()))"

The result on each host is:

Node master:

    master.sysdatadigital.it
    13.48.140.49

Node slave1:

    slave1.sysdatadigital.it
    13.48.181.38

etc.
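The same comparison can be scripted so that it runs identically on every node. This is a sketch, assuming Python 3 and a hand-written `expected` dictionary (hypothetical; fill it from your own hosts file). It reports when the node's FQDN is missing from the map, resolves to a different address, or resolves to loopback. The resolver is injectable so the logic can be tried without touching the network.

```python
import socket


def check_fqdn(expected, fqdn=None, resolve=socket.gethostbyname):
    """Compare a node's FQDN and the address it resolves to with an expected map.

    `expected` maps FQDN -> IPv4 address (for example, built from /etc/hosts).
    `fqdn` and `resolve` default to the live system and can be overridden in tests.
    Returns (fqdn, resolved_address, list_of_issues).
    """
    if fqdn is None:
        fqdn = socket.getfqdn()
    resolved = resolve(fqdn)
    issues = []
    if fqdn not in expected:
        issues.append(f"{fqdn} is not in the expected host map")
    elif expected[fqdn] != resolved:
        issues.append(f"{fqdn} resolves to {resolved}, expected {expected[fqdn]}")
    if resolved.startswith("127."):
        issues.append(f"{fqdn} resolves to loopback {resolved}")
    return fqdn, resolved, issues
```

On a real node, calling `check_fqdn(EXPECTED)` with an `EXPECTED` dictionary that lists every node uses the live `socket.getfqdn()` and `socket.gethostbyname()`.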

All nodes in the cluster use the following /etc/sysconfig/network template:

    NETWORKING=yes
    NOZEROCONF=yes
    HOSTNAME=master.sysdatadigital.it
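Because this file is described as a template, it is worth confirming that HOSTNAME was actually set to each node's own FQDN. A small sketch, assuming Python 3; `parse_kv` and `hostname_matches` are hypothetical helpers. Note that, as far as I know, CentOS/RHEL 7 reads the static hostname from /etc/hostname (set with `hostnamectl set-hostname`) rather than from HOSTNAME in this file, so the value to compare against is what `socket.getfqdn()` reports on that node.

```python
def parse_kv(text):
    """Parse shell-style KEY=VALUE lines such as /etc/sysconfig/network."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and anything that is not KEY=VALUE
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip().strip('"')
    return values


def hostname_matches(network_text, fqdn):
    """True when HOSTNAME in the network file equals the node's FQDN."""
    return parse_kv(network_text).get("HOSTNAME") == fqdn
```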

The firewall (firewalld) is disabled:

    ● firewalld.service - firewalld - dynamic firewall daemon
       Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)
       Active: inactive (dead)
         Docs: man:firewalld(1)

iptables is also disabled on each node:

    ● iptables.service - IPv4 firewall with iptables
       Loaded: loaded (/usr/lib/systemd/system/iptables.service; disabled; vendor preset: disabled)
       Active: inactive (dead)
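Checking service state on five nodes by eye is error-prone, so here is a sketch (Python 3, hypothetical helper names) that pulls the state word out of `systemctl status` output like the one above, plus a direct check with `systemctl is-active --quiet`, which exits with status 0 only when the unit is active. It assumes a systemd host such as CentOS/RHEL 7.

```python
import subprocess


def active_state(status_text):
    """Return the state word after 'Active:' in `systemctl status` output, or None."""
    for line in status_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("Active:"):
            parts = stripped.split()
            return parts[1] if len(parts) > 1 else None
    return None


def unit_is_active(unit):
    """Ask systemd directly; `systemctl is-active --quiet` exits 0 only if active."""
    result = subprocess.run(["systemctl", "is-active", "--quiet", unit])
    return result.returncode == 0
```

For example, `[(u, unit_is_active(u)) for u in ("firewalld", "iptables")]` should report `False` for both units on every node.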

The hosts can reach each other over SSH: my private key is installed as ~/.ssh/id_rsa, and running "ssh slave3" from any node in the cluster connects successfully.

As far as I can tell, every command fails for this reason, so I cannot start, restart, or fix anything in the cluster.

Does anyone have an idea of what I might have missed?

Thanks a lot for your help.

M

Created 11-06-2019 11:30 AM


To validate the ZooKeeper ports, can you run the following snippets and share the output? It seems CDH uses port 4181 for its ZooKeeper; I am from the HDP world!

Using port 2181:

    $ echo "stat" | nc slave3.sysdatadigital.it 2181 | grep Mode
    $ telnet slave3.sysdatadigital.it 2181

Using port 4181:

    $ echo "stat" | nc slave3.sysdatadigital.it 4181 | grep Mode
    $ telnet slave3.sysdatadigital.it 4181
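The same two checks can be done from Python when `nc` or `telnet` is not installed. This is a minimal sketch (Python 3, hypothetical function names): `four_letter_word` sends a ZooKeeper four-letter-word command such as `stat` and reads the reply until the server closes the connection, `parse_mode` extracts the `Mode:` line, and `port_open` mimics the telnet test. Two assumptions worth stating: on newer ZooKeeper releases four-letter words may have to be allowed through `4lw.commands.whitelist`, and, as far as I know, Cloudera Manager's ZooKeeper defaults are client port 2181, quorum port 3181 and election port 4181, so only the client port is expected to answer `stat` (the log quoted later in this thread labels 4181 as the election address).

```python
import socket


def four_letter_word(host, port, cmd="stat", timeout=5.0):
    """Send a ZooKeeper four-letter-word command and return the raw text reply.

    Raises ConnectionRefusedError when nothing listens on host:port, the same
    condition as the "Connection refused" reported by Cloudera Manager.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply):
    """Extract the value of the 'Mode:' line (leader, follower, standalone)."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def port_open(host, port, timeout=3.0):
    """Rough equivalent of the telnet check: True if a TCP connection succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers ConnectionRefusedError, timeouts and DNS failures
        return False
```

On a healthy ensemble, `parse_mode(four_letter_word("slave3.sysdatadigital.it", 2181))` should return `leader` or `follower`.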

Disabling the firewall in the VPC (which is a subnet) doesn't disable the firewall on the hosts, so please verify that the firewall is disabled on every host. Assuming you are on CentOS/RHEL 7, run the following and share the output (adapt it if your OS is different):

    # systemctl status firewalld

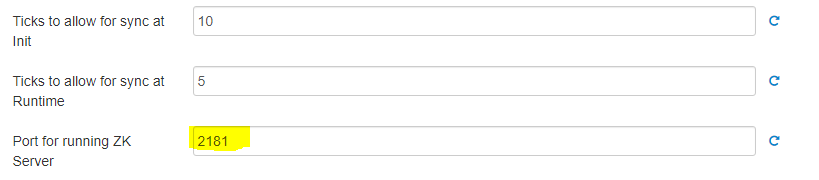

Can you also share a screenshot of the ZooKeeper service from your CM?

Created 11-05-2019 08:15 AM


Also, I checked some log files across my cluster when I try to perform operations.

For example, when I try to restart a service on a single node, I get:

Error! Check the file /var/log/hadoop-hdfs/hadoop-cmf-hdfs-NAMENODE-slave4.sysdatadigital.it.log.out

When I open that file on the node, it contains the following errors:

    2019-11-05 16:05:48,449 WARN org.apache.zookeeper.server.quorum.QuorumCnxManager: Cannot open channel to 2 at election address slave3.sysdatadigital.it/13.53.62.160:4181
    java.net.ConnectException: Connection refused (Connection refused)
        at java.net.PlainSocketImpl.socketConnect(Native Method)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
        at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
        at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
        at java.net.Socket.connect(Socket.java:589)
        at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:554)
        at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:530)
        at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:396)
        at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:368)
        at java.lang.Thread.run(Thread.java:748)
    2019-11-05 16:05:48,453 WARN com.cloudera.cmf.event.publish.EventStorePublisherWithRetry: Failed to publish event: SimpleEvent{attributes={ROLE=[zookeeper-SERVER-ca49cdfef04a282cc441985b3ebaf2c9], HOSTS=[slave4.sysdatadigital.it], ROLE_TYPE=[SERVER], CATEGORY=[LOG_MESSAGE], EVENTCODE=[EV_LOG_EVENT], SERVICE=[zookeeper], SERVICE_TYPE=[ZOOKEEPER], LOG_LEVEL=[WARN], HOST_IDS=[688609d5-69de-4dc2-9b8c-b4360de93ec6], SEVERITY=[IMPORTANT]}, content=Non-optimial configuration, consider an odd number of servers., timestamp=1572969948380}
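When several nodes log this warning, tallying it per peer shows which ZooKeeper server is not accepting election connections; in the excerpt above the failing peer is server id 2 (slave3.sysdatadigital.it) on port 4181. A sketch, assuming Python 3 and that the log lines keep exactly the format shown above (the regular expression is written against this one example, so treat it as a starting point). The odd-number message at the end is a separate, configuration-level warning.

```python
import re
from collections import Counter

# Matches: "Cannot open channel to 2 at election address host/1.2.3.4:4181"
CHANNEL_RE = re.compile(
    r"Cannot open channel to (?P<sid>\d+) at election address "
    r"(?P<host>[^/\s]+)/(?P<ip>[\d.]+):(?P<port>\d+)"
)


def unreachable_peers(log_text):
    """Count 'Cannot open channel' warnings per (server id, host, ip, port)."""
    hits = Counter()
    for match in CHANNEL_RE.finditer(log_text):
        key = (int(match["sid"]), match["host"], match["ip"], int(match["port"]))
        hits[key] += 1
    return hits
```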

I do not know where else to look.

Thanks,

M

Created on 11-05-2019 10:20 AM - edited 11-05-2019 10:33 AM


I am wondering why your ZooKeeper is running on port 4181, as shown in the log. The default ZooKeeper port is 2181; please check your configuration and, after sorting that out, restart the ZooKeeper ensemble.

    2019-11-05 16:05:48,449 WARN org.apache.zookeeper.server.quorum.QuorumCnxManager: Cannot open channel to 2 at election address slave3.sysdatadigital.it/13.53.62.160:4181
    java.net.ConnectException: Connection refused (Connection refused)

The default port for ZooKeeper is 2181. I have attached a screenshot of my ZooKeeper setup; even with an ensemble, I would usually have FQDN:2181 entries, for example:

    slave3.sysdatadigital.it:2181,slave1.sysdatadigital.it:2181,slave2.sysdatadigital.it:2181

Can you make sure your ZooKeeper ensemble is up and running? Further down in the log, it also seems you don't have an odd number of ZooKeeper servers; you need an odd number, in your case at least 3, to avoid split-brain decisions.
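The odd-number advice follows from majority voting: an ensemble of n voting servers needs n // 2 + 1 of them to agree, so 4 servers tolerate one failure, exactly like 3, while adding one more machine that can break. The sketch below (Python 3, hypothetical helper names) computes this and lists the `server.N=host:quorumPort:electionPort` entries from zoo.cfg text so the ensemble size can be checked; the sample ports used in the check are illustrative, not read from this cluster.

```python
def quorum_size(n):
    """Smallest majority of an ensemble of n voting servers."""
    return n // 2 + 1


def tolerated_failures(n):
    """How many servers can fail while a majority can still be formed."""
    return n - quorum_size(n)


def parse_servers(zoo_cfg_text):
    """Collect server.N=host:quorumPort:electionPort entries from zoo.cfg text."""
    servers = {}
    for raw in zoo_cfg_text.splitlines():
        line = raw.strip()
        if not line.startswith("server.") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop an optional ";clientPort" suffix used by newer ZooKeeper config syntax.
        value = value.strip().split(";", 1)[0]
        host, quorum_port, election_port = value.split(":")[:3]
        servers[int(key.strip().split(".", 1)[1])] = (host, int(quorum_port), int(election_port))
    return servers
```

With the servers parsed, `len(servers) % 2 == 1` is the quick check that triggers the "consider an odd number of servers" warning when it fails.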

Happy hadooping

Created 11-05-2019 09:34 PM


Hello,

Thanks for the reply.

Yes, it’s strange and I will change it, but I still can’t do anything because I can’t connect to the hosts...

Does anyone have an idea of what I might have done wrong in the network configuration?

I also edited the config.ini file in the Cloudera folder on each node and set the master's host name to master.sysdatadigital.it instead of the long AWS IP address.
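If the file meant here is the Cloudera Manager agent configuration, it is usually /etc/cloudera-scm-agent/config.ini, where the `[General]` section's `server_host` and `server_port` tell the agent where to reach the Cloudera Manager server (port 7182 by default, to my knowledge). Treat that path, section name and default as assumptions. This Python 3 sketch reads those two values so they can be compared across nodes; the agent typically needs a restart (for example `systemctl restart cloudera-scm-agent`) to pick up a change.

```python
import configparser


def agent_server_host(config_text):
    """Return (server_host, server_port) from agent config.ini text.

    Assumes a [General] section; the 7182 fallback is an assumed default.
    """
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(config_text)
    general = parser["General"]
    return general.get("server_host"), general.getint("server_port", fallback=7182)
```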

Thanks,

M

Created 11-05-2019 10:35 PM


Was your cluster deployed using a CloudFormation template? Apart from that strange port, does your hostname master.sysdatadigital.it resolve to the AWS IP? Remember it's very tricky with internal and public IPs: the hosts in the AWS data center need the internal IPs to communicate with each other, while the public IP is what is accessible to the outside world. If you want to access any Cloudera service in AWS from your own machine, you will need to map the hostname on your local machine to the public IP in AWS. The best solution for testing is to create a firewall inbound rule that only accepts connections from your IP; this is only good for testing, and you should always harden your network security.
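To act on the internal vs. public distinction, a sketch like the following (Python 3 standard-library `ipaddress`; helper names are hypothetical) labels each address in a hosts map as loopback, private or public. Every address in the /etc/hosts shown in the question (13.x.x.x) is public. On EC2, to my understanding, a public or Elastic IP is translated by AWS and is not configured on the instance's own network interface, so a daemon that binds to whatever its hostname resolves to may fail to listen where its peers expect it; mapping cluster hostnames to the private VPC addresses is the usual recommendation, but verify this against your own setup.

```python
import ipaddress


def classify(ip):
    """Label an IPv4/IPv6 address as loopback, private (e.g. RFC 1918) or public."""
    addr = ipaddress.ip_address(ip)
    if addr.is_loopback:
        return "loopback"
    if addr.is_private:
        return "private"
    return "public"


def public_entries(hosts):
    """Return the (name, ip) pairs whose address is public."""
    return [(name, ip) for name, ip in hosts.items() if classify(ip) == "public"]
```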

Created 11-06-2019 01:29 AM


Hello,

Thanks again for following up on my case.

I use Elastic IPs in AWS, so my IP addresses are fixed and do not change.

I created a subnet in AWS where the nodes can communicate with each other, and I disabled the firewall in my VPC.

Question: Was your cluster deployed using a CloudFormation template?

Answer: No. I followed the Cloudera installation guide.

Question: Apart from that strange port, does your hostname master.sysdatadigital.it resolve to the AWS IP?

Answer: Yes. When the cluster is running in AWS, I can type http://master.sysdatadigital.it:7180/ in the browser on my laptop and reach the Cloudera Manager home page.

Thanks again,

M
