Support Questions


Unable to connect to Hive on Cloudera QuickStart VM on VirtualBox

- Labels: Apache Hive, Quickstart VM

Created on 06-12-2018 09:28 AM - edited 09-16-2022 06:20 AM


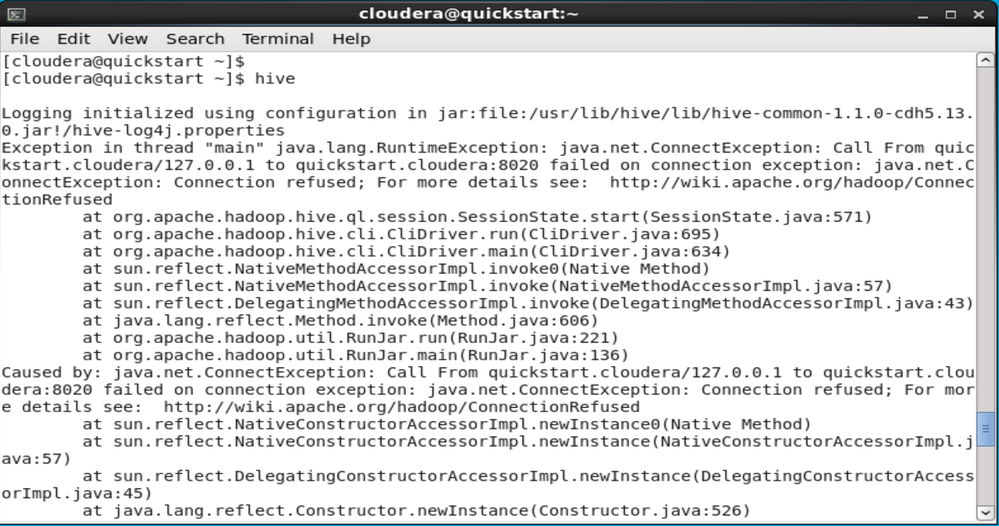

I am trying to launch the Hive command-line interface in VirtualBox (Cloudera QuickStart VM), but it fails with a "connection refused" error.

Here is the error:

Logging initialized using configuration in jar:file:/usr/lib/hive/lib/hive-common-1.1.0-cdh5.14.0.jar!/hive-log4j.properties

Exception in thread "main" java.lang.RuntimeException: java.net.ConnectException: Call From quickstart.cloudera/127.0.0.1 to quickstart.cloudera:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:571)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:695)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:634)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
Caused by: java.net.ConnectException: Call From quickstart.cloudera/127.0.0.1 to quickstart.cloudera:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
    at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)
    at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)
    at org.apache.hadoop.ipc.Client.call(Client.java:1508)
    at org.apache.hadoop.ipc.Client.call(Client.java:1441)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230)
    at com.sun.proxy.$Proxy17.getFileInfo(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:786)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:258)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104)
    at com.sun.proxy.$Proxy18.getFileInfo(Unknown Source)
    at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2154)
    at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1265)
    at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1261)
    at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
    at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1261)
    at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1418)
    at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:665)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:606)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:547)
    ... 8 more
Caused by: java.net.ConnectException: Connection refused
    at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
    at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)
    at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
    at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)
    at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)
    at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:648)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:744)
    at org.apache.hadoop.ipc.Client$Connection.access$3000(Client.java:396)
    at org.apache.hadoop.ipc.Client.getConnection(Client.java:1557)
    at org.apache.hadoop.ipc.Client.call(Client.java:1480)
    ... 28 more
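The innermost cause in the trace is a plain TCP "Connection refused" when the HDFS client (`ClientNamenodeProtocolTranslatorPB.getFileInfo`) calls `quickstart.cloudera:8020`, i.e. nothing is accepting connections at the NameNode RPC address the client is configured to use. A minimal sketch, assuming Python 3 is available and using only the host and port shown in the error, that tests whether anything is listening there before you start the Hive CLI:

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    A refused connection (nothing listening, as in the Hive trace), a timeout,
    or a host name that cannot be resolved all return False.
    """
    try:
        # create_connection resolves the name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # ConnectionRefusedError, socket.timeout and socket.gaierror
        # are all subclasses of OSError.
        return False
```

On the VM, `port_is_open("quickstart.cloudera", 8020)` returning `False` would confirm that the problem lies with the NameNode not listening, not with Hive itself.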

Created on 06-13-2018 03:21 AM - edited 06-13-2018 03:22 AM


Hello @nick23

Thank you for posting your query with us.

From your error description, I can see that the Hive CLI is not able to reach HDFS: its connection to the NameNode at quickstart.cloudera:8020 is being refused. Could you please check whether you are able to access HDFS?

Just try running:

`hdfs dfs -ls /`

Satz

Created 06-15-2018 08:11 AM


Created 06-17-2018 07:54 AM


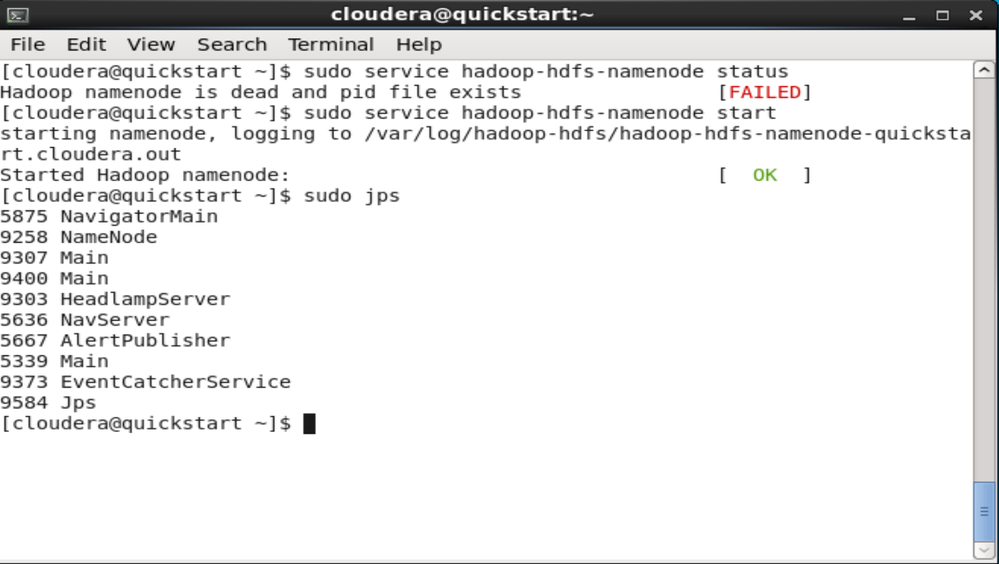

Please verify that your HDFS daemons, such as the NameNode and DataNode, are up and running in the Cloudera Manager web UI.

Alternatively, you can run `sudo jps` from the terminal.

Or use these commands:

- To check the status: `sudo service hadoop-hdfs-namenode status`
- To start the NameNode: `sudo service hadoop-hdfs-namenode start`
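`jps` prints one line per running JVM: the process ID followed by the main class name (for example `NameNode` or `DataNode`). The sketch below parses that output and reports which HDFS daemons are missing. It assumes Python 3.9 or later (which may need to be installed separately on the VM), that `sudo jps` works as suggested above, and that the expected daemon set for a single-node QuickStart VM is `NameNode`, `DataNode` and `SecondaryNameNode`; that set is an assumption, so adjust it for your setup.

```python
import subprocess

# Assumed daemon set for a single-node QuickStart VM; adjust for your cluster.
EXPECTED_HDFS_DAEMONS = {"NameNode", "DataNode", "SecondaryNameNode"}


def running_java_processes(jps_output: str) -> set[str]:
    """Parse `jps` output ("<pid> <MainClass>" per line) into a set of class names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:  # skip blank lines and lines that carry only a PID
            names.add(parts[1])
    return names


def missing_hdfs_daemons(jps_output: str) -> set[str]:
    """Return the expected HDFS daemons that do not appear in the jps output."""
    return EXPECTED_HDFS_DAEMONS - running_java_processes(jps_output)


def check_with_sudo_jps() -> set[str]:
    """Run `sudo jps` and return the expected HDFS daemons that are not running."""
    result = subprocess.run(["sudo", "jps"], capture_output=True, text=True, check=True)
    return missing_hdfs_daemons(result.stdout)
```

If `NameNode` shows up in the set returned by `check_with_sudo_jps()`, that is consistent with the refused connection on port 8020, and the `service ... start` command above is the natural next step.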

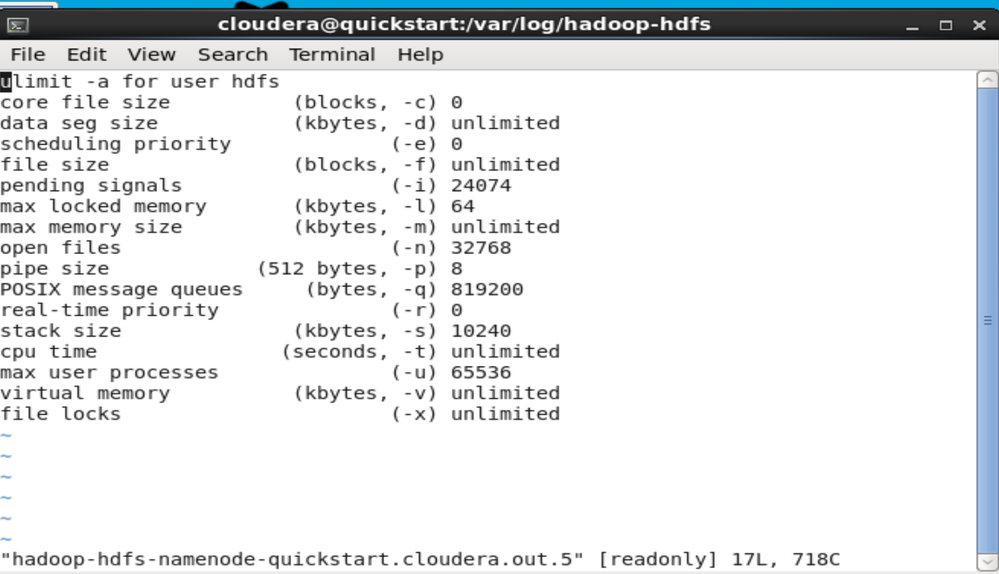

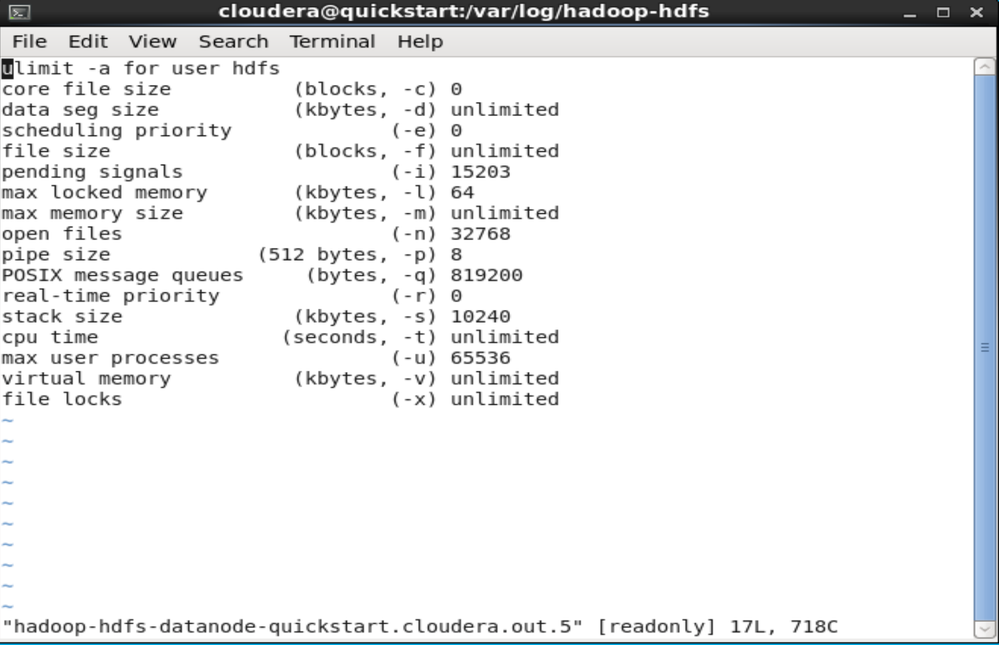

If you want to see what is happening, check the Cloudera Manager agent logs or the HDFS logs:

- `/var/log/hadoop-hdfs/`: NameNode and DataNode logs
- `/var/log/cloudera-scm-agent/`: agent logs
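When digging through the log directories above, the most recently modified NameNode log is usually the first place to look for why the daemon stopped or failed to start. A small sketch that finds that file and prints its last lines; the `*namenode*` file-name pattern is an assumption about how the log files are named on your VM, so adjust it if yours differ (for DataNode logs, pass `"*datanode*"`).

```python
from collections import deque
from pathlib import Path
from typing import Optional

LOG_DIR = Path("/var/log/hadoop-hdfs")  # directory named in the reply above


def newest_log(log_dir: Path, pattern: str = "*namenode*") -> Optional[Path]:
    """Return the most recently modified file in log_dir matching pattern, or None."""
    candidates = [p for p in log_dir.glob(pattern) if p.is_file()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)


def tail(path: Path, lines: int = 50) -> str:
    """Return the last `lines` lines of a text file without loading it all into memory."""
    with path.open(encoding="utf-8", errors="replace") as f:
        # A bounded deque keeps only the final `lines` lines as the file is iterated.
        return "".join(deque(f, maxlen=lines))
```

For example, `log = newest_log(LOG_DIR)` followed by `print(tail(log))` (when `log` is not `None`) shows the end of the latest NameNode log, where a startup failure would typically be reported.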

Created 06-17-2018 01:16 PM
