Unable to move data to a S3 bucket using last CDH (5.14.0)

Labels: HDFS

Created 02-02-2018 12:14 AM

Hi!

I am trying to move data from HDFS to an S3 bucket. I am using the latest version of CM/CDH (5.14.0). I have been able to copy data using the aws tool:

aws s3api put-object

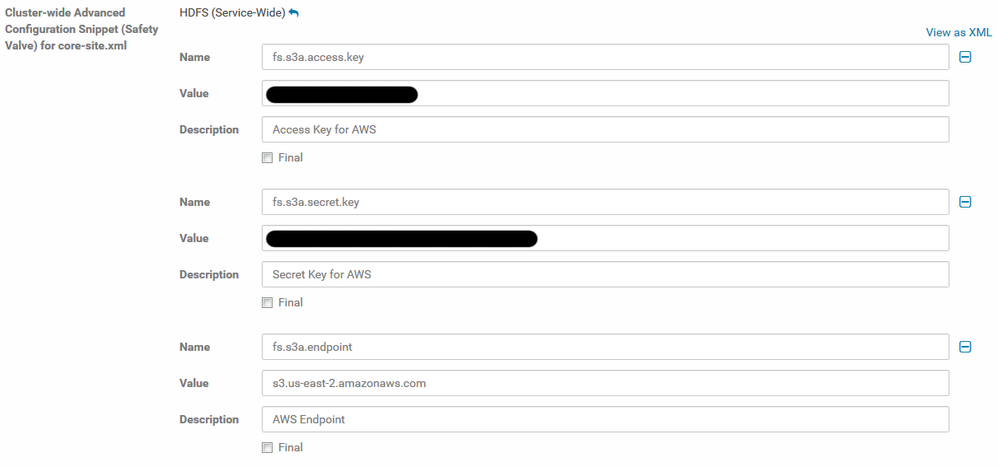

It also works with the Python SDK, but I cannot copy data with hadoop distcp. I have added the following extra properties to core-site.xml in the HDFS service:

<property>
  <name>fs.s3a.access.key</name>
  <value>X</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>X</value>
</property>
<property>
  <name>fs.s3a.endpoint</name>
  <value>s3.us-east-2.amazonaws.com</value>
</property>

Nothing happens when I execute a command like

hadoop distcp /blablabla s3a://bucket-name/

but it hangs for a while (I guess it is retrying several times). The same thing happens when I just try to list the files in the bucket with

hadoop fs -ls s3a://bucket-name

I am sure it is not a credentials problem, since I can connect using the same access and secret keys with the Python SDK and the aws tool.

Is anyone facing a similar issue? Thanks!

Created 02-07-2018 03:59 PM

DistCp can take some time to complete, depending on how much source data you have.

One thing to try would be to list a public bucket. I believe if you have no credentials set you'll see an error, but if you have any valid credentials you should be able to list it:

hadoop fs -ls s3a://landsat-pds/

Also make sure you've deployed your client configs in Cloudera Manager (CM).
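If it helps, the checks above could be scripted roughly like this. It is only a sketch: `probe_endpoint` and `debug_ls` are made-up helper names, the endpoint is the one from the question, and the `curl` probe is an extra step I am assuming is useful for telling a network block apart from a config problem. By default it only prints the listing command; set `RUN=1` on a cluster node to actually run it.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical; endpoint and bucket come from this thread.
S3_ENDPOINT="${S3_ENDPOINT:-s3.us-east-2.amazonaws.com}"

# Returns 0 if any HTTPS response comes back from the endpoint,
# non-zero if the connection is refused, blocked, or times out.
probe_endpoint() {
  curl --silent --show-error --max-time 10 --output /dev/null "https://$1/"
}

# Lists a bucket with client-side debug logging on the console, so retries
# and connection timeouts become visible. Prints the command unless RUN=1.
debug_ls() {
  if [ "${RUN:-0}" = "1" ]; then
    HADOOP_ROOT_LOGGER=DEBUG,console hadoop fs -ls "$1"
  else
    echo "HADOOP_ROOT_LOGGER=DEBUG,console hadoop fs -ls $1"
  fi
}

if [ "${RUN:-0}" = "1" ]; then
  if probe_endpoint "$S3_ENDPOINT"; then
    echo "endpoint reachable from this node"
  else
    echo "endpoint NOT reachable from this node (firewall or proxy?)"
  fi
fi

debug_ls "s3a://landsat-pds/"
```

If the probe fails while the aws tool works from another machine, that points at the network path from the cluster nodes rather than at the S3A settings.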

Created 03-05-2018 03:38 AM

Hi Aaron! Thanks for answering.

In the end it wasn't a problem with Hadoop or the configuration (the credentials were correct and the config files were deployed on all nodes). IT was simply blocking all traffic to the private bucket. Even after I asked them to allow those IPs it didn't work, so I installed Cntlm on all nodes and specified the proxy using:

-Dfs.s3a.proxy.host="localhost" -Dfs.s3a.proxy.port="3128"

After that I was able to move 3 TB in less than a day.
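For anyone landing here later, a full command with those options would look roughly like the sketch below. The proxy host and port are the ones above, the source path and bucket are the placeholders from the question, and `distcp_via_proxy` is just a hypothetical wrapper name. It prints the command by default and only runs it when `RUN=1` is set.

```shell
#!/usr/bin/env bash
# Sketch only: wrapper name is hypothetical; proxy values are the ones from this post.
PROXY_HOST="${PROXY_HOST:-localhost}"
PROXY_PORT="${PROXY_PORT:-3128}"

distcp_via_proxy() {
  local src="$1" dest="$2"
  # The -D options have to come before the source and destination paths.
  set -- hadoop distcp \
    "-Dfs.s3a.proxy.host=${PROXY_HOST}" \
    "-Dfs.s3a.proxy.port=${PROXY_PORT}" \
    "$src" "$dest"
  if [ "${RUN:-0}" = "1" ]; then
    "$@"        # run the copy on a node that has the hadoop client
  else
    echo "$@"   # dry run: just show the command
  fi
}

distcp_via_proxy /blablabla s3a://bucket-name/
```

The same two properties could presumably go into core-site.xml next to the fs.s3a.* settings from the question, so they would not need to be passed on every command.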