Unable to run multiple pyspark sessions

Labels: Apache Spark

Created on 04-07-2018 01:43 PM - edited 09-16-2022 06:04 AM

I am new to Cloudera. I have installed Cloudera Express on a CentOS 7 VM and created a cluster with 4 nodes (another 4 VMs). I ssh to the master node and run: pyspark

This works but only for one session. If I open another console and run pyspark I will get the following error:

WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

And it gets stuck there and does nothing until I close the other session running pyspark! Any idea why this is happening and how I can fix it so that multiple sessions/users can run pyspark? Am I missing some configuration somewhere?

Thanks in advance for your help.

Created 04-09-2018 10:57 AM

In general, a port can be bound by only one session at a time. Your first session binds the default port 4040; your second session tries the same port, hits the bind issue, and then tries the next port, but that is not working.

There are two things you need to check:

1. Please make sure port 4041 is open.

2. In your second session, when you run pyspark, pass an available port as a parameter.

Ex: A long time ago I used spark-shell with a different port as a parameter; please try a similar option for pyspark:

session1: $ spark-shell --conf spark.ui.port=4040

session2: $ spark-shell --conf spark.ui.port=4041

If 4041 does not work, you can try ports up to 4057; I think these are the ports available to Spark by default.
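A minimal sketch of the port check suggested above, assuming Python 3 is available on the node where the shell is launched. The helper name `first_free_port` is hypothetical (it is not part of Spark); it tries to bind each port in the range and returns the first one that succeeds. The 4040 to 4057 range is taken from the reply above and has not been verified against Spark's defaults.

```python
import socket


def first_free_port(start=4040, end=4057, host="0.0.0.0"):
    """Return the first port in [start, end] that can be bound, or None.

    Hypothetical helper for illustration; not part of Spark.
    """
    for port in range(start, end + 1):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            try:
                sock.bind((host, port))
            except OSError:
                continue  # port is in use (or not permitted); try the next one
            return port  # the socket is closed on return, freeing the port
    return None


if __name__ == "__main__":
    port = first_free_port()
    if port is None:
        print("No free port found in the range")
    else:
        # Suggested launch command for the second session
        print(f"pyspark --conf spark.ui.port={port}")
```

Another process could still grab the port between this check and the launch of pyspark, so treat the result as a hint rather than a guarantee.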

Created on 04-09-2018 11:27 AM - edited 04-09-2018 11:29 AM

Thank you for your help. I tried different ports, but it still doesn't work unless I kill the running session and start another one. Could it be that I had a wrong configuration during the Cloudera installation? Or do changes need to be made in some configuration files or somewhere else?

Created 04-09-2018 11:42 AM

Created 04-09-2018 11:44 AM

@saranvisa Sorry forgot to mention that... yes I did. The port is open.

Created 04-09-2018 11:58 AM

Can you try running the second pyspark command from a different user ID?

According to the link below, this seems to be a normal issue.

Created 04-09-2018 12:22 PM

Created 04-09-2018 01:30 PM

It looks like things cannot run in parallel, only in a queue. Maybe I missed or misconfigured something in the installation process.

Created 04-10-2018 09:37 PM

WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

^ This generally means that the problem is beyond the port mapping (i.e., with queue configuration, available resources, or something at the YARN level).

Assuming that you are using Spark 1.6, I'd suggest temporarily changing the shell logging level to INFO to see if that gives a hint. The quick and easy way to do this is to edit /etc/spark/conf/log4j.properties on the node where you are running pyspark and change the log level from WARN to INFO.

# vi /etc/spark/conf/log4j.properties

shell.log.level=INFO

$ spark-shell

....

18/04/10 20:40:50 WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

18/04/10 20:40:50 INFO util.Utils: Successfully started service 'SparkUI' on port 4041.

18/04/10 20:40:50 INFO client.RMProxy: Connecting to ResourceManager at host-xxx.cloudera.com/10.xx.xx.xx:8032

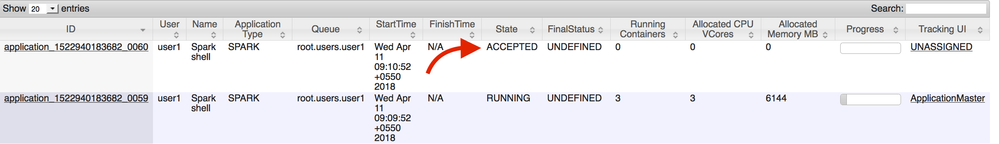

18/04/10 20:40:52 INFO impl.YarnClientImpl: Submitted application application_1522940183682_0060

18/04/10 20:40:54 INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)

18/04/10 20:40:55 INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)

18/04/10 20:40:56 INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)

18/04/10 20:40:57 INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)
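If the INFO output is long, the repeated application reports can be summarized with a small script. This is only a sketch: the function name `latest_app_states` is hypothetical, and the regular expression assumes the report lines look exactly like the ones in the excerpt above.

```python
import re

# Matches lines such as:
# "... INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)"
REPORT_RE = re.compile(r"Application report for (application_\d+_\d+) \(state: (\w+)\)")


def latest_app_states(log_lines):
    """Map each YARN application ID to the last state seen in the log."""
    states = {}
    for line in log_lines:
        match = REPORT_RE.search(line)
        if match:
            app_id, state = match.groups()
            states[app_id] = state  # later lines overwrite earlier ones
    return states


# Lines copied from the log excerpt above
sample = [
    "18/04/10 20:40:52 INFO impl.YarnClientImpl: Submitted application application_1522940183682_0060",
    "18/04/10 20:40:54 INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)",
    "18/04/10 20:40:55 INFO yarn.Client: Application report for application_1522940183682_0060 (state: ACCEPTED)",
]
for app_id, state in latest_app_states(sample).items():
    print(app_id, state)
```

An application whose last reported state stays at ACCEPTED has been submitted to YARN but has not yet been given resources to start.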

Next, open the Resource Manager UI and check the state of the application (i.e., your second invocation of pyspark) to see whether it is registered but stuck in the ACCEPTED state, like this:

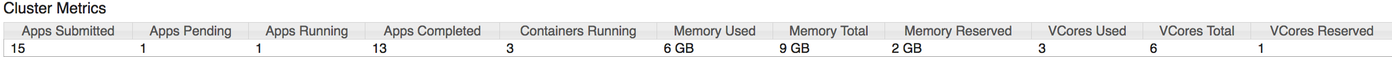

If yes, look at the Cluster Metrics row at the top of the RM UI page and see if there are enough resources available:

Now kill the first pyspark session and check whether the second session changes state to RUNNING in the RM UI. If yes, look at the queue placement rules and stats in Cloudera Manager > YARN > Resource Pools Usage (and Configuration).
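The same resource check can be sketched against the ResourceManager REST API instead of the UI. This assumes the RM web service is reachable and exposes `/ws/v1/cluster/metrics` with the `availableMB` and `availableVirtualCores` fields; the function names and the sample values below are hypothetical and only illustrate the idea.

```python
import json
from urllib.request import urlopen


def fetch_cluster_metrics(rm_url):
    """Fetch cluster metrics from the ResourceManager REST API.

    rm_url is the base URL of the RM web service (an assumption here,
    e.g. "http://<rm-host>:8088" on a default setup).
    """
    with urlopen(f"{rm_url}/ws/v1/cluster/metrics", timeout=10) as resp:
        return json.load(resp)["clusterMetrics"]


def has_capacity(metrics, need_mb, need_vcores):
    """True if the cluster reports at least the requested free memory and vcores."""
    return (metrics["availableMB"] >= need_mb
            and metrics["availableVirtualCores"] >= need_vcores)


# Hand-written metrics dict; the values are made up for illustration.
example = {"availableMB": 512, "availableVirtualCores": 0, "appsPending": 1}
print(has_capacity(example, need_mb=1024, need_vcores=1))
```

Free cluster-wide resources are not the whole story: a queue or resource pool limit can also keep an application in ACCEPTED, which is why the pool configuration is worth checking as well.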

Hopefully, this will give us some more clues. Let us know how it goes. Feel free to share screenshots from the RM UI and the spark-shell INFO logging.

Created 04-15-2018 10:45 PM

Thanks. I really appreciate your response. My advisor actually found out that this will work if we use the following command:

$ pyspark --master local[i]

where i is a number (the number of worker threads for local mode). Using this command, multiple pyspark shells can run concurrently. But why the other solutions did not work, I have no clue!