Unable to start Node Manager

Created on 12-18-2019 08:39 PM - last edited on 12-18-2019 09:44 PM by VidyaSargur


Hi @nsabharwal

Greetings.

Need your inputs and expertise on this topic.

Details:

1. I configured a FAIR_TEST queue and set its ordering policy to FAIR.

2. I added "fair-scheduler.xml" to the default HADOOP_CONF_DIR path (/usr/hdp/3.1.0.0-78/hadoop/conf) and set minResources and maxResources to 4 GB and 8 GB respectively.

3. I changed the scheduler class in Ambari to the Fair Scheduler class and added the parameter "yarn.scheduler.fair.allocation.file", pointing to the above XML file.

When I restart the affected YARN components in Ambari, the NodeManager fails with the error below.

Can you please let me know what is going wrong and how to fix it?

2019-12-19 09:48:17,762 INFO service.AbstractService (AbstractService.java:noteFailure(267)) - Service NodeManager failed in state INITED

java.lang.RuntimeException: java.lang.RuntimeException: class org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler not org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2628)

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.createContainerExecutor(NodeManager.java:347)

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.serviceInit(NodeManager.java:389)

at org.apache.hadoop.service.AbstractService.init(AbstractService.java:164)

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.initAndStartNodeManager(NodeManager.java:933)

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.main(NodeManager.java:1013)

Caused by: java.lang.RuntimeException: class org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler not org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2622)
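One possible reading of this trace: `NodeManager.createContainerExecutor` loads the class named by `yarn.nodemanager.container-executor.class`, so the exception suggests the FairScheduler class name ended up in that NodeManager property instead of in `yarn.resourcemanager.scheduler.class`. The sketch below checks a yarn-site.xml fragment for that mix-up. The sample XML and the helper names `load_properties` and `find_misplaced_scheduler` are hypothetical and were not taken from the cluster in question.

```python
import xml.etree.ElementTree as ET

FAIR_SCHEDULER = (
    "org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler"
)
NM_EXECUTOR_KEY = "yarn.nodemanager.container-executor.class"
RM_SCHEDULER_KEY = "yarn.resourcemanager.scheduler.class"

# Hypothetical yarn-site.xml fragment that reproduces the suspected mix-up.
SAMPLE_YARN_SITE = """
<configuration>
  <property>
    <name>yarn.nodemanager.container-executor.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  </property>
</configuration>
"""


def load_properties(xml_text):
    """Return a {name: value} dict from Hadoop-style <property> elements."""
    root = ET.fromstring(xml_text.strip())
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value", default="")
        if name:
            props[name.strip()] = value.strip()
    return props


def find_misplaced_scheduler(props):
    """List human-readable problems with the two scheduler-related keys."""
    problems = []
    # A scheduler class in the NodeManager executor key cannot be cast to
    # ContainerExecutor, which matches the exception in the log.
    if ".scheduler." in props.get(NM_EXECUTOR_KEY, ""):
        problems.append(f"{NM_EXECUTOR_KEY} holds a scheduler class")
    if props.get(RM_SCHEDULER_KEY) != FAIR_SCHEDULER:
        problems.append(f"{RM_SCHEDULER_KEY} is not set to FairScheduler")
    return problems


if __name__ == "__main__":
    for problem in find_misplaced_scheduler(load_properties(SAMPLE_YARN_SITE)):
        print(problem)
```

If the check flags the executor key on a real yarn-site.xml, moving the FairScheduler class name to the ResourceManager scheduler property in Ambari would be the first thing to try.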

fair-scheduler.xml

<configuration xmlns:xi="http://www.w3.org/2001/XInclude">

<allocations>

<queue name="FAIR-TEST">

<minResources>4096 mb,0vcores</minResources>

<maxResources>8192 mb,0vcores</maxResources>

<maxRunningApps>50</maxRunningApps>

<maxAMShare>0.1</maxAMShare>

<weight>30</weight>

<schedulingPolicy>fair</schedulingPolicy>

</queue>

<queuePlacementPolicy>

<rule name="specified" />

<rule name="default" queue="FAIR-TEST" />

</queuePlacementPolicy>

</allocations>
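A small sanity check for an allocation file like the one above, offered as a sketch only. The Hadoop Fair Scheduler documentation describes `<allocations>` as the root element of the allocation file, so the sample below drops the outer `<configuration>` wrapper, which is an assumption about what the file should look like and not a confirmed fix. The helper names `parse_resources` and `summarize_queues` are hypothetical. Note also that the queue is called FAIR_TEST in the description but FAIR-TEST in the file, which may be worth double-checking.

```python
import re
import xml.etree.ElementTree as ET

# Assumed well-formed variant of the posted file: <allocations> is the root.
ALLOCATIONS = """
<allocations>
  <queue name="FAIR-TEST">
    <minResources>4096 mb,0vcores</minResources>
    <maxResources>8192 mb,0vcores</maxResources>
    <maxRunningApps>50</maxRunningApps>
    <maxAMShare>0.1</maxAMShare>
    <weight>30</weight>
    <schedulingPolicy>fair</schedulingPolicy>
  </queue>
  <queuePlacementPolicy>
    <rule name="specified" />
    <rule name="default" queue="FAIR-TEST" />
  </queuePlacementPolicy>
</allocations>
"""

# Matches the "X mb, Y vcores" resource notation used in the file.
RESOURCE_RE = re.compile(r"^\s*(\d+)\s*mb\s*,\s*(\d+)\s*vcores\s*$", re.IGNORECASE)


def parse_resources(text):
    """Turn '4096 mb,0vcores' into the tuple (4096, 0)."""
    match = RESOURCE_RE.match(text or "")
    if match is None:
        raise ValueError(f"unrecognized resource string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def summarize_queues(xml_text):
    """Return {queue_name: {'min': (mb, vcores), 'max': (mb, vcores)}}."""
    root = ET.fromstring(xml_text.strip())
    if root.tag != "allocations":
        raise ValueError(f"top-level element is <{root.tag}>, expected <allocations>")
    summary = {}
    for queue in root.findall("queue"):
        summary[queue.get("name")] = {
            "min": parse_resources(queue.findtext("minResources")),
            "max": parse_resources(queue.findtext("maxResources")),
        }
    return summary


if __name__ == "__main__":
    print(summarize_queues(ALLOCATIONS))
```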

Created 01-01-2020 09:13 AM


Hi @Shelton ,

Thanks for your inputs. If I understand correctly, there will be 2 fair-scheduler.xml files: one for YARN, kept in $HADOOP_CONF_DIR, and one more for Spark, kept in $SPARK_HOME?

For the fair-scheduler.xml that belongs to Spark, how do I configure the parameter in Ambari?

Also, regarding the queueMaxAMShareDefault or maxAMShare value: it was originally 0.5, but since jobs were not launching because of the "AM resource exceeded" error, I set it to 0.8. I will try setting it to 0.1 and check again.

Please let me know your inputs.

Thanks and Regards,

Sudhindra

Created 01-01-2020 08:19 PM


Hi @Shelton ,

I went through your email again and tried all the options you mentioned, but I am still facing the same issue while running the second job.

Please let me know whether anything else needs to be set or whether this is purely a memory-related issue, and kindly suggest how to fix it.

Here are the relevant screenshots:

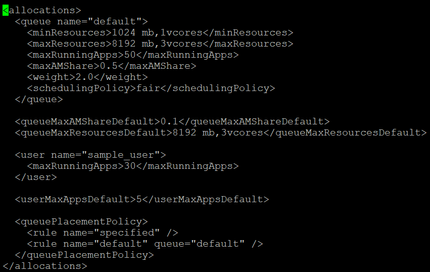

Screenshot 1: YARN Fair Scheduler XML file (I tried setting maxAMShare to 0.1 - but the first spark job didn't start at all - so I had to bump it to 0.5)
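A rough arithmetic sketch of why maxAMShare 0.1 could block the first job while 0.5 lets it start. It assumes, and none of this is confirmed in the thread, that the AM limit is roughly maxAMShare times the queue's share, that the share is bounded by the 8192 MB maxResources, and that the Spark application master occupies a 1024 MB container. The function names are hypothetical.

```python
def am_limit_mb(queue_share_mb, max_am_share):
    """Memory (MB) a queue may spend on application masters, rounded down."""
    return int(queue_share_mb * max_am_share)


def max_concurrent_ams(queue_share_mb, max_am_share, am_container_mb):
    """How many AM containers of the given size fit under the AM limit."""
    return am_limit_mb(queue_share_mb, max_am_share) // am_container_mb


if __name__ == "__main__":
    QUEUE_SHARE_MB = 8192    # assumed: taken from maxResources in the file
    AM_CONTAINER_MB = 1024   # assumed AM container size after YARN rounding
    for share in (0.1, 0.5, 0.8):
        limit = am_limit_mb(QUEUE_SHARE_MB, share)
        count = max_concurrent_ams(QUEUE_SHARE_MB, share, AM_CONTAINER_MB)
        print(f"maxAMShare={share}: AM limit {limit} MB, room for {count} AM(s)")
```

Under these assumptions a share of 0.1 leaves about 819 MB for application masters, less than a single 1024 MB AM container, which would be consistent with the first job not starting.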

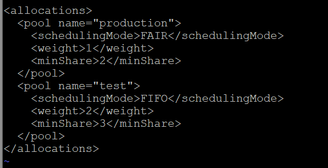

Screenshot 2: Spark Fair Scheduler XML file (this is placed in the $SPARK_HOME/conf directory, i.e. /usr/hdp/3.1.0.0-78/spark2/conf)

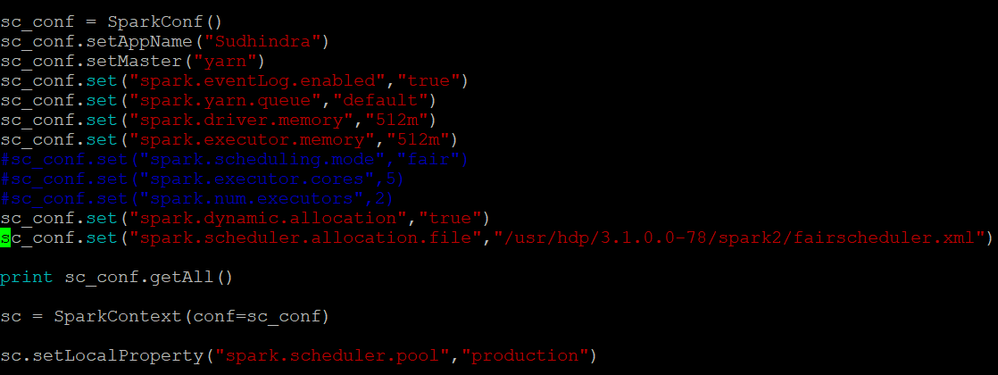

Screenshot 3: Spark Configuration Parameters set through the pyspark program

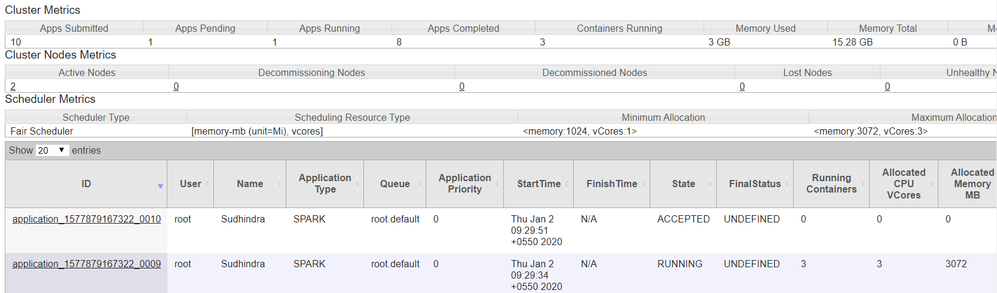

Screenshot 4: YARN Cluster Information (the 2-node cluster has 6 vcores and 15.3 GB of memory in total)

Note: Since this is a Flask application, I believe it launches 2 jobs, one to open port 5000 and another to accept the inputs. The idea behind this exercise is to test how many Spark sessions can run in parallel within a single Spark context.

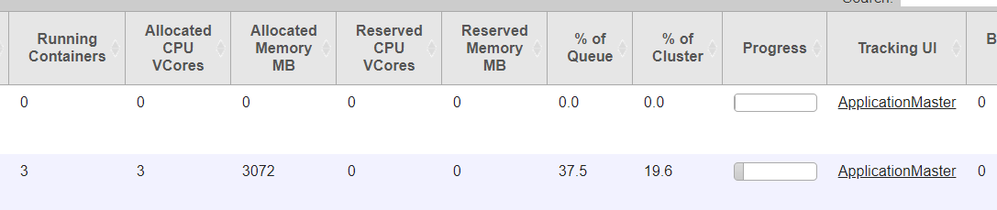

Screenshot 5: This shows the percentage of the queue and of the cluster used by the first job. As we can see, there is sufficient capacity in both the cluster and the queue, but for some reason the second job never gets the resources it needs. I think this could be because the fair scheduler's maximum allocation is set to 3 GB. Can you please let me know how to increase this value? I am also curious: even though maxResources in the fair-scheduler.xml file is set to 8 GB, the fair scheduler's maximum allocation is only 3 GB. Is that because of the maxAMShare value?

Also, I am setting both driver and executor memory to only 512 MB. How is my job occupying 3 GB?
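One combination that would add up to 3 GB, sketched as arithmetic. Spark on YARN adds a memory overhead to each container (by default the larger of 384 MB and 10% of the heap), and YARN rounds each request up to a multiple of its allocation increment. The 1024 MB increment, the 2 executors, and a 512 MB application master are assumptions for illustration and are not read from the screenshots. The function names are hypothetical.

```python
import math


def container_mb(heap_mb, overhead_factor=0.10, min_overhead_mb=384,
                 increment_mb=1024):
    """Container size YARN would grant for a given JVM heap, in MB."""
    overhead = max(min_overhead_mb, int(heap_mb * overhead_factor))
    requested = heap_mb + overhead
    # Round the request up to the next multiple of the allocation increment.
    return math.ceil(requested / increment_mb) * increment_mb


def app_footprint_mb(am_heap_mb, executor_heap_mb, num_executors,
                     increment_mb=1024):
    """Total memory for one AM container plus its executor containers."""
    am = container_mb(am_heap_mb, increment_mb=increment_mb)
    executor = container_mb(executor_heap_mb, increment_mb=increment_mb)
    return am + num_executors * executor


if __name__ == "__main__":
    # 512 MB heap + 384 MB overhead = 896 MB, rounded up to 1024 MB.
    print(container_mb(512))
    # Assumed: one AM and two executors, each with a 512 MB heap.
    print(app_footprint_mb(512, 512, num_executors=2))
```

With these assumed values each 512 MB request becomes a 1024 MB container, so one application master plus two executors comes to 3072 MB.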

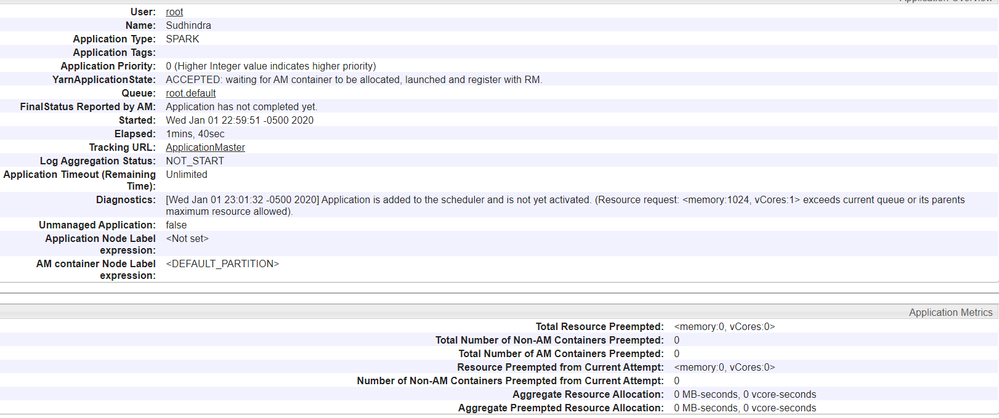

Screenshot 6: This screenshot shows that the job 2 never gets the required amount of resources.

Created 01-05-2020 09:25 AM


Hi @Shelton ,

Can you please help me fix this issue?

With the same memory configuration as mentioned, I am able to run more than 1 spark job with Capacity Scheduler, while it's not possible to run the second Spark job with Fair Scheduler.

I have already sent you the required screenshots. Please let me know your inputs at the earliest.

Thanks and Regards,

Sudhindra

Created 01-07-2020 11:03 PM


Hi @Shelton ,

I was able to achieve my objective of running multiple Spark Sessions under a single Spark context using YARN capacity scheduler and Spark Fair Scheduling.

However, the issue still remains with YARN fair scheduler. The second Spark job is still not running (with the same memory configuration) due to lack of resources.

So, what additional parameters need to be set for YARN fair scheduler to achieve this?

Please help me in fixing this issue.

Thanks and Regards,

Sudhindra
