Unable to start namenode

Labels: Apache Hadoop

Created 02-18-2017 07:17 AM

I tried to start the NameNode several times, but it does not start, so I checked the log file and found the exception below:

2017-02-18 15:05:23,548 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: Failed to start namenode.

java.io.IOException: There appears to be a gap in the edit log. We expected txid 1, but got txid 44.

at org.apache.hadoop.hdfs.server.namenode.MetaRecoveryContext.editLogLoaderPrompt(MetaRecoveryContext.java:94)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:215)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:143)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:843)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:698)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:294)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:975)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:681)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:585)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:645)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:812)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:796)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1493)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1559)

2017-02-18 15:05:23,552 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1

2017-02-18 15:05:23,554 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at aruna/127.0.1.1

************************************************************/

It reports a gap in the edit log. I searched Google but have not found a solution yet.

All the other daemons are running:

aruna@aruna:~/hadoop-2.7.3/sbin$ sudo jps

4177 DataNode

4545 ResourceManager

5042 JobHistoryServer

5605 Jps

4854 NodeManager

4360 SecondaryNameNode
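
For readers unsure what "expected txid 1, but got txid 44" means: the NameNode replays edit log segments starting right after its newest fsimage checkpoint, and it stops if the next segment does not begin at the transaction ID it expects. The Python sketch below is only an illustration of that idea, not Hadoop's own logic. It assumes the usual file names found in the NameNode's current directory (fsimage_N, edits_N-M, edits_inprogress_N); the function name and the sample file list are hypothetical.

```python
import re

# File-name patterns assumed for <dfs.namenode.name.dir>/current.
FSIMAGE_RE = re.compile(r"^fsimage_(\d+)$")
EDITS_RE = re.compile(r"^edits_(\d+)-(\d+)$")
INPROGRESS_RE = re.compile(r"^edits_inprogress_(\d+)$")


def find_edit_log_gap(filenames):
    """Return (expected_txid, found_txid) for the first gap, or None.

    Replay is assumed to start at the txid right after the newest checkpoint.
    """
    image_txids = []
    segments = []  # (first_txid, last_txid), last_txid is None while in progress
    for name in filenames:
        m = FSIMAGE_RE.match(name)
        if m:
            image_txids.append(int(m.group(1)))
            continue
        m = EDITS_RE.match(name)
        if m:
            segments.append((int(m.group(1)), int(m.group(2))))
            continue
        m = INPROGRESS_RE.match(name)
        if m:
            segments.append((int(m.group(1)), None))

    expected = max(image_txids, default=0) + 1
    for first, last in sorted(segments, key=lambda s: s[0]):
        if last is not None and last < expected:
            continue  # segment is already covered by the checkpoint
        if first > expected:
            return (expected, first)  # transactions expected..first-1 are missing
        if last is None:
            return None  # the open segment covers everything after it
        expected = last + 1
    return None


if __name__ == "__main__":
    # Hypothetical directory listing that would match the error in this thread.
    names = [
        "VERSION",
        "seen_txid",
        "fsimage_0000000000000000000",
        "fsimage_0000000000000000000.md5",
        "edits_0000000000000000044-0000000000000000050",
        "edits_inprogress_0000000000000000051",
    ]
    print(find_edit_log_gap(names))
```

With that hypothetical listing the function reports that txid 1 was expected but the first available segment starts at 44, which is the shape of the error in the log above.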

Created 02-18-2017 07:34 AM

Try these steps. Stop the "SecondaryNameNode", then start it again.

1. On the active NameNode, run the following commands:

# su hdfs

# hdfs dfsadmin -safemode enter

# hdfs dfsadmin -saveNamespace

2. Stop HDFS. Keep the Journal Nodes running.

3. Take a backup of the NameNode data directory. For example, if the NameNode data directory is "/hadoop/hdfs/namenode" and the backup location is "/tmp", then run the following:

# cp -prf /hadoop/hdfs/namenode/current /tmp

4. Run "initializeSharedEdits" to sync the edits.

# hdfs namenode -initializeSharedEdits

5. Start the NameNode service that was active last time.

6. Bootstrap Standby NameNode. This command copies the contents of the Active NameNode's metadata directories (including the namespace information and most recent checkpoint) to the Standby NameNode.

# hdfs namenode -bootstrapStandby

7. Start the Standby NameNode and the rest of HDFS.
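
The backup in step 3 is the part most worth scripting, because it is easy to overwrite an earlier copy by accident. Below is a minimal Python sketch of that one step, assuming the same example paths as above. The function name and the timestamp suffix are hypothetical additions so that repeated backups do not collide; they are not part of the original instructions.

```python
import shutil
import time
from pathlib import Path


def backup_namenode_dir(current_dir, backup_root):
    """Copy the NameNode 'current' directory under backup_root; return the new path."""
    src = Path(current_dir)
    if not src.is_dir():
        raise FileNotFoundError(f"not a directory: {src}")
    # Timestamp suffix (an assumption of this sketch) keeps older backups intact.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = Path(backup_root) / f"{src.name}-{stamp}"
    # copytree copies files with copy2 by default, which keeps timestamps and
    # permission bits, similar in spirit to cp -p (ownership is not preserved).
    shutil.copytree(src, dest, symlinks=True)
    return dest


# Example call with the paths used in step 3 (run it while HDFS is stopped):
# backup_namenode_dir("/hadoop/hdfs/namenode/current", "/tmp")
```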

Created 02-18-2017 12:46 PM

Which script should I use to stop the SecondaryNameNode? Normally I use stop-all.sh to stop the daemons.

Created 02-18-2017 12:59 PM

hadoop-daemon.sh [start | stop] [namenode | secondarynamenode | datanode | jobtracker | tasktracker]

For example, to stop the SecondaryNameNode: hadoop-daemon.sh stop secondarynamenode

Created 02-18-2017 02:16 PM

Since I kept running into issues, I deleted the Hadoop folder with rm -rf.

Then I installed it again from the beginning. After starting the daemons, jps shows the following:

aruna@aruna:/tmp$ sudo jps

[sudo] password for aruna:

12282 Jps

11500 DataNode

Then I checked the hadoop-aruna-namenode-aruna.log file:

java.net.BindException: Port in use: 0.0.0.0:50070

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:919)

at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:856)

at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:142)

at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:753)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:639)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:812)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:796)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1493)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1559)

Caused by: java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:463)

at sun.nio.ch.Net.bind(Net.java:455)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:914)

... 8 more

It says the port is already in use. Since I deleted the old Hadoop installation and cleared the temp files, how can the port still be in use?

Created 02-18-2017 02:20 PM

Find the process that is holding the port 50070 and then kill it.

# netstat -tnlpa | grep 50070

tcp 0 0 172.17.0.2:50070 0.0.0.0:* LISTEN 29687/java

# kill -9 29687
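
Deleting the installation directory does not stop a NameNode process that is still running, which is why the port can stay occupied. As a quick cross-check before and after the kill, a small Python sketch like the one below can confirm whether anything still accepts connections on the port. It assumes the listener is reachable on 127.0.0.1; the function name is hypothetical. It cannot tell you which process owns the port, so netstat is still needed for the PID.

```python
import socket


def port_has_listener(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an errno value otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 50070 is the NameNode web UI port from the BindException above.
    print(port_has_listener("127.0.0.1", 50070))
```

If this still prints True after the kill, another process has taken the port or the old one did not exit.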

Created 02-18-2017 02:44 PM

Thanks @Jay SenSharma, that fixed the errors in most of the logs. But hadoop-aruna-datanode-aruna.log still shows a connection issue:

java.net.ConnectException: Call From aruna/127.0.1.1 to localhost:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.GeneratedConstructorAccessor8.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:792)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:732)

at org.apache.hadoop.ipc.Client.call(Client.java:1479)

at org.apache.hadoop.ipc.Client.call(Client.java:1412)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy14.sendHeartbeat(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.DatanodeProtocolClientSideTranslatorPB.sendHeartbeat(DatanodeProtocolClientSideTranslatorPB.java:152)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.sendHeartBeat(BPServiceActor.java:554)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.offerService(BPServiceActor.java:653)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:824)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:744)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:614)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:712)

at org.apache.hadoop.ipc.Client$Connection.access$2900(Client.java:375)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1528)

at org.apache.hadoop.ipc.Client.call(Client.java:1451)

... 8 more

It says the call from 127.0.1.1 to localhost:9000 was refused. How can I resolve this?

Created 02-18-2017 02:44 PM

Check whether the NameNode service is up. It should be listening on port 9000 unless you have changed the value of fs.defaultFS:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

- Check the port. If it is not open, start the service, or check the configuration to confirm that the service is supposed to listen on port 9000:

netstat -tnlpa | grep 9000

See:

https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html

Created 02-18-2017 09:59 PM

This is my core-site.xml:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

In my core-site.xml the property name is "fs.default.name", not "fs.defaultFS".
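
A note on the two names: in Hadoop 2.x, fs.default.name is the older, deprecated name for fs.defaultFS, so either one sets the default filesystem URI. To double-check which host and port a given core-site.xml actually points at, a rough Python sketch follows. The function name is hypothetical, and checking fs.defaultFS before fs.default.name is an assumption of this sketch, not a statement about how Hadoop resolves a file that sets both.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Newer property name first, then the deprecated alias (order is this sketch's choice).
DEFAULT_FS_KEYS = ("fs.defaultFS", "fs.default.name")


def default_fs_endpoint(core_site_xml):
    """Return (host, port) of the default filesystem URI in core-site.xml text, or None."""
    root = ET.fromstring(core_site_xml)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name and value:
            props[name.strip()] = value.strip()
    for key in DEFAULT_FS_KEYS:
        if key in props:
            uri = urlsplit(props[key])
            return (uri.hostname, uri.port)
    return None


if __name__ == "__main__":
    sample = """
    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>
    """
    print(default_fs_endpoint(sample))
```

For the configuration shown in this post, the result is the host localhost and port 9000, which matches the address the DataNode was trying to reach.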

Port 9000 is open. I checked as follows:

aruna@aruna:~/hadoop-2.7.3/sbin$ netstat -tnlpa | grep 9000

(Not all processes could be identified, non-owned process info will not be shown, you would have to be root to see it all.)

tcp 0 0 127.0.0.1:9000 0.0.0.0:* LISTEN 13554/java

tcp 0 0 127.0.0.1:58016 127.0.0.1:9000 TIME_WAIT

Created on 02-20-2017 01:32 AM - edited 08-19-2019 04:09 AM

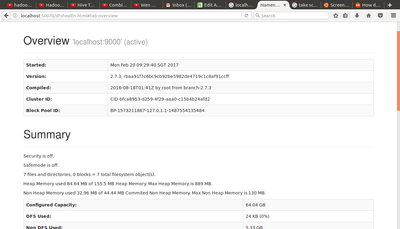

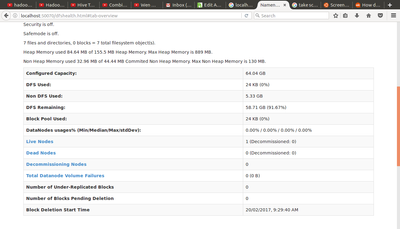

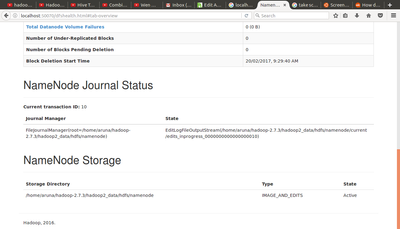

Many thanks for helping me, @Jay SenSharma. I removed Hadoop completely and started again. Now everything seems OK.

aruna@aruna:~/hadoop-2.7.3/sbin$ sudo jps

4256 SecondaryNameNode

3921 NameNode

4437 ResourceManager

4918 Jps

4074 DataNode

4876 JobHistoryServer

4733 NodeManager

1) Anyway, I have one question: when I shut down my machine and start it again, do I need to start the daemons every time?

The attached screenshot shows the http://localhost:50070 page.