Unable to upload csv files from hdfs to hive view

Labels: Apache Hadoop, Apache Hive

Created on 01-03-2017 08:28 AM - edited 08-19-2019 04:39 AM

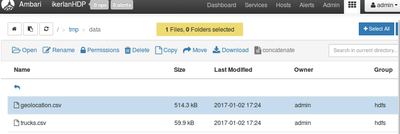

I am trying to upload files that I already put into HDFS through the Hive view. I have granted full permissions on the files, recursively.

HDFS:

HIVE:

The problem is that when I try to upload the file in the Hive view, it throws the following error:

E090 No rows in the file. [NoSuchElementException]

Created 01-03-2017 08:59 AM

This error message suggests that Hive cannot find the file under the given path. Are you using the Sandbox? If so, there should be no permission issues for the admin user in Hive or HDFS.

Can you please check that the path you entered does not contain leading or trailing whitespace: '/tmp/data/geolocation.csv'
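Stray whitespace is hard to spot in a form field, so a small check can help. The following is only a sketch: `whitespace_problems` is a hypothetical helper name, not part of Ambari or Hive, and it simply reports whitespace at either end of a path string.

```python
from typing import List


def whitespace_problems(path: str) -> List[str]:
    """Return the whitespace problems found at the edges of `path`."""
    problems = []
    if path != path.lstrip():
        problems.append("leading whitespace")
    if path != path.rstrip():
        problems.append("trailing whitespace")
    return problems


if __name__ == "__main__":
    # repr() makes invisible characters such as spaces and tabs visible.
    for candidate in ["/tmp/data/geolocation.csv", " /tmp/data/geolocation.csv "]:
        print(repr(candidate), whitespace_problems(candidate))
```

Printing the path with `repr()` is often enough on its own, because the quotes show exactly where the string starts and ends.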

Created 01-03-2017 09:08 AM

I am using Ambari-HDP on a CentOS 7 cluster with 1 master and 2 slaves. I have checked, and the path does not contain any whitespace. It is very strange. Thanks a lot.

Created 01-03-2017 09:16 AM

Can you please share the output of the following command:

$ hdfs dfs -ls /tmp/data/geolocation.csv

- We need to check a few things here:

1. The file exists.

2. The file has proper read permission for the user.

3. Is this the same "Geolocation.zip" (geolocation.csv) file that is used in the demo? http://hortonworks.com/hadoop-tutorial/hello-world-an-introduction-to-hadoop-hcatalog-hive-and-pig/

- I just quickly tested this "geolocation.csv" file on my end and it works fine.
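The existence and permission checks above can also be scripted against the output of `hdfs dfs -ls`. This is a minimal sketch, assuming the usual column order of that output (permissions, replication, owner, group, size, date, time, path). `parse_ls_line` and `readable_by` are hypothetical helper names, and the check looks only at the basic permission bits of the file itself: it ignores ACLs, superuser rules, and the execute permission needed on parent directories.

```python
from typing import NamedTuple, Set


class LsEntry(NamedTuple):
    permissions: str
    owner: str
    group: str
    size: int
    path: str


def parse_ls_line(line: str) -> LsEntry:
    """Parse one file line of `hdfs dfs -ls` output (assumed 8 columns)."""
    # maxsplit=7 keeps a path that contains spaces in one piece.
    fields = line.split(None, 7)
    if len(fields) != 8:
        raise ValueError(f"unexpected ls line: {line!r}")
    permissions, _replication, owner, group, size, _date, _time, path = fields
    return LsEntry(permissions, owner, group, int(size), path)


def readable_by(entry: LsEntry, user: str, groups: Set[str]) -> bool:
    """Check the read bit that applies to `user` (owner, group, or other)."""
    perms = entry.permissions
    if user == entry.owner:
        return perms[1] == "r"
    if entry.group in groups:
        return perms[4] == "r"
    return perms[7] == "r"


if __name__ == "__main__":
    line = "-rwxrwxrwx 3 admin hdfs 526677 2017-01-02 11:24 /tmp/data/geolocation.csv"
    entry = parse_ls_line(line)
    print(entry.path, "readable by hive:", readable_by(entry, "hive", set()))
```

If `hdfs dfs -ls` reports that the path does not exist, there is no line to parse, which already answers the first check.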

Created 01-03-2017 09:18 AM

Yes, the file itself is fine: if I upload it from local, the Hive view detects it and shows the data. The problem is uploading from HDFS. I have given all permissions on that file, and recursively on the directories.

The output is as follows:

    [root@hdp-master1 ~]# hdfs dfs -ls /tmp/data/geolocation.csv
    -rwxrwxrwx 3 admin hdfs 526677 2017-01-02 11:24 /tmp/data/geolocation.csv

Created 01-03-2017 09:28 AM

Another quick test: are you able to cat the file as shown here? That will help us understand whether there is a special character or newline issue.

Example:

    $ hdfs dfs -cat /tmp/data/geolocation.csv | head
    truckid,driverid,event,latitude,longitude,city,state,velocity,event_ind,idling_ind
    A54,A54,normal,38.440467,-122.714431,Santa Rosa,California,17,0,0
    A20,A20,normal,36.977173,-121.899402,Aptos,California,27,0,0
    A40,A40,overspeed,37.957702,-121.29078,Stockton,California,77,1,0
    A31,A31,normal,39.409608,-123.355566,Willits,California,22,0,0
    A71,A71,normal,33.683947,-117.794694,Irvine,California,43,0,0
    A50,A50,normal,38.40765,-122.947713,Occidental,California,0,0,1
    A51,A51,normal,37.639097,-120.996878,Modesto,California,0,0,1
    A19,A19,normal,37.962146,-122.345526,San Pablo,California,0,0,1
    A77,A77,normal,37.962146,-122.345526,San Pablo,California,25,0,0
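Special characters and newline problems are easy to miss when reading terminal output by eye. As a rough sketch, the first bytes of the file could be inspected programmatically. `inspect_csv_sample` is a hypothetical helper, and the field count is naive: it counts delimiters and does not understand quoted fields.

```python
def inspect_csv_sample(sample: bytes, delimiter: bytes = b",") -> dict:
    """Report byte-level oddities in the first lines of a CSV file."""
    # Skip empty lines so a trailing newline does not count as a row.
    lines = [line for line in sample.split(b"\n") if line]
    # Naive field count: delimiters per line plus one (ignores quoting).
    field_counts = {line.count(delimiter) + 1 for line in lines}
    return {
        "has_utf8_bom": sample.startswith(b"\xef\xbb\xbf"),
        "has_carriage_returns": b"\r" in sample,
        "has_nul_bytes": b"\x00" in sample,
        "consistent_field_count": len(field_counts) <= 1,
    }


if __name__ == "__main__":
    sample = (
        b"truckid,driverid,event,latitude,longitude,city,state,"
        b"velocity,event_ind,idling_ind\n"
        b"A54,A54,normal,38.440467,-122.714431,Santa Rosa,California,17,0,0\n"
    )
    print(inspect_csv_sample(sample))
```

The sample bytes could come from saving the output of the `hdfs dfs -cat ... | head` command to a local file and reading it in binary mode.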

Created 01-03-2017 09:33 AM

Yes, I can open it. This is my output:

    [root@hdp-master1 ~]# hdfs dfs -cat /tmp/data/geolocation.csv | head
    truckid,driverid,event,latitude,longitude,city,state,velocity,event_ind,idling_ind
    A54,A54,normal,38.440467,-122.714431,Santa Rosa,California,17,0,0
    A20,A20,normal,36.977173,-121.899402,Aptos,California,27,0,0
    A40,A40,overspeed,37.957702,-121.29078,Stockton,California,77,1,0
    A31,A31,normal,39.409608,-123.355566,Willits,California,22,0,0
    A71,A71,normal,33.683947,-117.794694,Irvine,California,43,0,0
    A50,A50,normal,38.40765,-122.947713,Occidental,California,0,0,1
    A51,A51,normal,37.639097,-120.996878,Modesto,California,0,0,1
    A19,A19,normal,37.962146,-122.345526,San Pablo,California,0,0,1
    A77,A77,normal,37.962146,-122.345526,San Pablo,California,25,0,0
    cat: Unable to write to output stream.

Created 01-03-2017 09:37 AM

Okay, it works now. The simple solution was to disable the firewall. Thank you very much.

Created 03-18-2017 09:44 PM

@Asier Gomez - Do you mean disable the firewall on your local machine/computer or is there a firewall included in the Sandbox?

Created 03-21-2017 01:48 PM

I only disabled the local firewall on the master and on the agent machines, and it works for me.
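Before disabling a firewall entirely, it may be worth checking which ports are actually unreachable between the nodes. The following is a sketch only: `port_open` and `report` are hypothetical helpers, and the thread does not say which ports were blocked. Ports such as 8020 (NameNode RPC) and 50010 (DataNode data transfer) are common defaults on HDP 2.x, but they are assumptions here and should be checked against the cluster configuration.

```python
import socket
from typing import Iterable, List, Tuple


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def report(targets: Iterable[Tuple[str, int]]) -> List[str]:
    """Build one status line per (host, port) pair."""
    lines = []
    for host, port in targets:
        state = "open" if port_open(host, port) else "blocked or closed"
        lines.append(f"{host}:{port} {state}")
    return lines
```

For example, calling `report([("hdp-master1", 8020)])` from the machine that runs the Hive view would show whether that port is reachable. A "blocked or closed" result does not prove that a firewall is the cause, since the service may simply not be listening on that port.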