Cloudera Community: Support Questions

Unable to view/see the filename correctly when storing Filename with chinese characters in HDFS

Labels: Apache Hadoop

Created 11-20-2018 09:00 AM

I am trying to put a file into HDFS whose filename is in Chinese characters.

File: 余宗阳视频审核稿-1024.docx

But in Hadoop the filename shows up garbled as Óà×ÚÑôÊÓƵÉóºË¸å-1024.docx

Any hints to solve this issue?

Created on 11-20-2018 10:52 AM - edited 08-17-2019 04:34 PM

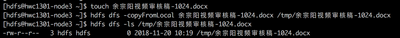

Check your locale on your terminal, if on Linux check "echo $LANG", does it end in UTF-8? You can store in HDFS any data, it only depends how are you going to interpret it for display. HDFS by default supports UTF-8, but can read other encodings as well. Most of Hadoop ecosystem uses UTF-8. Below I tried to replicate this issue but I can see filename in Chinese characters.
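The garbled name in the question appears consistent with the filename's bytes being produced in GBK (a legacy encoding for Simplified Chinese, common on Chinese-locale Windows) and then displayed as Latin-1. That is an interpretation of the symptom, not something confirmed in this thread. A small Python sketch of that mismatch, assuming GBK on the writing side and Latin-1 on the display side:

```python
# The filename exactly as it appears in the question.
name = "余宗阳视频审核稿-1024.docx"

# Assumption: the client produced the name as GBK bytes and the viewer
# decoded those bytes as Latin-1 (one character per byte).
gbk_bytes = name.encode("gbk")
garbled = gbk_bytes.decode("latin-1")
print(garbled)  # a Latin-1 jumble of the same form as in the question

# Latin-1 maps each byte to exactly one character, so reversing the steps
# recovers the original bytes and therefore the original name.
recovered = garbled.encode("latin-1").decode("gbk")
print(recovered == name)  # True

# UTF-8, which HDFS and most of the ecosystem expect, yields different bytes:
# 3 bytes per Chinese character here instead of 2 in GBK.
utf8_bytes = name.encode("utf-8")
print(len(gbk_bytes), len(utf8_bytes))
```

If the recovered name matches, making sure the client sends the name as UTF-8 (for example with a UTF-8 $LANG) should avoid the garbling.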

Created 11-21-2018 09:54 AM

That's working, thanks!

I am trying to put the same file into HDFS using curl via WebHDFS, and I am getting the error "HTTP Status 500 - Illegal character in path at index"

curl -i -H 'content-type:application/octet-stream' -H 'charset:UTF-8' -X PUT -T '余宗阳视频审核稿-1024.docx' 'http://hostname:14000/webhdfs/v1/user/username/余宗阳视频审核稿-1024.docx?op=CREATE&data=true&user.name=username&overwrite=true'

Is there any other header I need to pass so that the Chinese characters are recognized?
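The "Illegal character in path" message looks like a Java URI-parsing error on the raw Chinese characters in the request path, which suggests percent-encoding the path rather than adding a header. A minimal Python sketch that builds the same CREATE URL with the HDFS path percent-encoded as UTF-8; `webhdfs_create_url` is a hypothetical helper name, and `hostname`, `username`, and port 14000 are the placeholders from the command above:

```python
from urllib.parse import quote, urlencode


def webhdfs_create_url(base_url: str, hdfs_path: str, user: str) -> str:
    """Build a WebHDFS CREATE URL whose path is plain ASCII (hypothetical helper)."""
    # quote() encodes the path as UTF-8 and percent-escapes every byte outside
    # the unreserved set; safe="/" keeps the directory separators as-is.
    encoded_path = quote(hdfs_path, safe="/")
    # Same query parameters as the curl command in the question.
    query = urlencode({"op": "CREATE", "data": "true",
                       "user.name": user, "overwrite": "true"})
    return f"{base_url}/webhdfs/v1{encoded_path}?{query}"


url = webhdfs_create_url("http://hostname:14000",
                         "/user/username/余宗阳视频审核稿-1024.docx", "username")
print(url)
```

The printed URL can replace the raw one in the curl -X PUT -T command; the local file name passed to -T does not need encoding.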

Created on 11-21-2018 12:10 PM - edited 08-17-2019 04:34 PM

No specific header is needed other than the content-type and charset headers you already pass in your command. I ran the same command and was able to write the file to HDFS using curl via WebHDFS.

It looks like there may be a space in the path. Could you verify your command again?

Reference: https://hadoop.apache.org/docs/r1.0.4/webhdfs.html

Created 11-21-2018 12:50 PM

After encoding the filename in the URL, it works for me. However, the first command still throws the error "illegal character found at index 62"; index 62 is where the filename starts in the destination path.

I checked $LANG, and it is UTF-8.

What exact output did you get when you ran the first curl command without encoding?

Created on 11-21-2018 01:39 PM - edited 08-17-2019 04:33 PM

Step 1: Submit an HTTP PUT request without the file data and without automatically following redirects. The NameNode responds with 307 TEMPORARY_REDIRECT and a Location header pointing to the DataNode where the data will be written.

Step 2: Submit another HTTP PUT request, with the file data, to the URL in the Location header.

The client then receives a 201 Created response with zero content length and the WebHDFS URI of the file in the Location header.
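The two steps above can be sketched in Python with only the standard library. This sketch assumes simple authentication through the user.name query parameter (no Kerberos) and a NameNode base URL such as http://namenode:50070; the function name `webhdfs_create` and its parameters are hypothetical. It uses http.client because that module never follows redirects on its own, so the 307 from step 1 and its Location header remain visible.

```python
import http.client
import urllib.parse


def webhdfs_create(base_url: str, hdfs_path: str, user: str, data: bytes) -> str:
    """Two-step WebHDFS CREATE (hypothetical helper); returns the final Location."""
    nn = urllib.parse.urlsplit(base_url)
    # Percent-encode the HDFS path as UTF-8 so non-ASCII names are legal in the URL.
    path = "/webhdfs/v1" + urllib.parse.quote(hdfs_path, safe="/")
    query = urllib.parse.urlencode({"op": "CREATE", "user.name": user, "overwrite": "true"})

    # Step 1: PUT with no body to the NameNode; expect a 307 redirect.
    conn = http.client.HTTPConnection(nn.hostname, nn.port)
    try:
        conn.request("PUT", f"{path}?{query}")
        resp = conn.getresponse()
        resp.read()
    finally:
        conn.close()
    location = resp.getheader("Location")
    if resp.status != 307 or not location:
        raise RuntimeError(f"expected 307 TEMPORARY_REDIRECT, got {resp.status}")

    # Step 2: PUT the file data to the DataNode URL from the Location header.
    dn = urllib.parse.urlsplit(location)
    target = dn.path + (f"?{dn.query}" if dn.query else "")
    conn = http.client.HTTPConnection(dn.hostname, dn.port)
    try:
        conn.request("PUT", target, body=data,
                     headers={"Content-Type": "application/octet-stream"})
        resp = conn.getresponse()
        resp.read()
    finally:
        conn.close()
    if resp.status != 201:
        raise RuntimeError(f"expected 201 Created, got {resp.status}")
    return resp.getheader("Location")
```

For example, webhdfs_create("http://namenode:50070", "/user/username/余宗阳视频审核稿-1024.docx", "username", file_bytes) would perform both PUTs against the NameNode and the DataNode it redirects to.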

Please accept the answer you found most useful

Created 11-22-2018 10:47 AM

I found the root cause of the issue: the request should go to the NameNode on port 50070. I was using the edge node, hence the failure.

Thanks!

Created 11-22-2018 10:52 AM

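With a plain PUT, the curl command has to be submitted twice. The command below does it in a single submission: -L makes curl follow the redirect to the DataNode, and --negotiate -u : enables SPNEGO (Kerberos) authentication.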

curl --negotiate -u : -L "http://namenode:50070/webhdfs/v1/user/username/余宗阳视频审核稿-1024.docx?op=CREATE&user.name=username" -T 余宗阳视频审核稿-1024.docx