Cloudera Community: Support Questions


Update and Delete are not working in Hive?

Created on 07-13-2017 11:14 AM - edited 09-16-2022 04:55 AM


Hi all,

I am not able to delete from or update a Hive table.

create table testTableNew(id int ,name string ) clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true');

insert into table testTableNew values(101, 'syri');

select * from testtablenew;

| # | id | name |
|---|-----|------|
| 1 | 102 | syam |
| 2 | 101 | syri |
| 3 | 101 | syri |

delete from testTableNew where id = 101;

- Error while compiling statement: FAILED: SemanticException [Error 10294]: Attempt to do update or delete using transaction manager that does not support these operations.

update testTableNew set name = 'praveen' where id = 101;

- Error while compiling statement: FAILED: SemanticException [Error 10294]: Attempt to do update or delete using transaction manager that does not support these operations.

I have also added the following properties to hive-site.xml:

- hive.support.concurrency = true
- hive.enforce.bucketing = true
- hive.exec.dynamic.partition.mode = nonstrict
- hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager
- hive.compactor.initiator.on = true
- hive.compactor.worker.threads = 2
- hive.in.test = true

Even after restarting the Hive service, I get the same error.

Environment: QuickStart VM 5.8, Hive 1.1.0.

Please help me resolve this issue.

Thanks,

Syam.
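Error 10294 says the session is using a transaction manager that does not support UPDATE/DELETE, so since the error survived a restart, it is worth confirming that the properties listed above actually reached the running service. In Beeline or the Hive CLI, `set hive.txn.manager;` prints the value the current session is using. For the file itself, here is a minimal Python sketch, assuming you have a copy of the hive-site.xml that the Hive service really loads. The names `ACID_SETTINGS`, `read_properties`, and `acid_problems` are hypothetical, values are compared as exact strings against those in the question, and `hive.in.test` is deliberately left out because it is an internal flag for Hive's own test mode. This only rules out a configuration mistake: per the Cloudera reply later in this thread, CDH's Hive does not support transactions even when these values are correct.

```python
import sys
import xml.etree.ElementTree as ET

# Expected values copied from the question (hypothetical checklist).
# hive.in.test is intentionally omitted: it is an internal test-mode flag.
ACID_SETTINGS = {
    "hive.support.concurrency": "true",
    "hive.enforce.bucketing": "true",
    "hive.exec.dynamic.partition.mode": "nonstrict",
    "hive.txn.manager": "org.apache.hadoop.hive.ql.lockmgr.DbTxnManager",
    "hive.compactor.initiator.on": "true",
    "hive.compactor.worker.threads": "2",
}


def read_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style *-site.xml."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value  # if a name appears twice, the last value wins here
    return props


def acid_problems(xml_text, expected=ACID_SETTINGS):
    """List every expected setting that is missing or has a different value."""
    props = read_properties(xml_text)
    problems = []
    for name, want in expected.items():
        got = props.get(name)
        if got is None:
            problems.append(f"{name}: missing (expected {want})")
        elif got != want:
            problems.append(f"{name}: {got} (expected {want})")
    return problems


if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical usage: python check_acid.py /path/to/hive-site.xml
    with open(sys.argv[1], encoding="utf-8") as fh:
        for line in acid_problems(fh.read()) or ["all listed ACID settings present"]:
            print(line)
```

An empty result only means the file matches the list; the `set hive.txn.manager;` check above is still the more direct evidence of what the session is using.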

Created 07-23-2017 01:32 PM


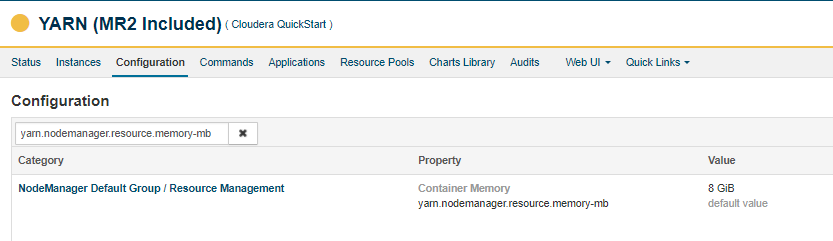

- Go to YARN > Configuration and search for "yarn.nodemanager.resource.memory-mb". If it is set to 1 GB (the default), increase it to 2 GB (2048 MB; the property is expressed in megabytes).

- Save the change and restart YARN.

- You may sometimes need to restart Hue as well (I have not confirmed this).

Then try again.

Created 07-24-2017 10:10 PM


Thanks for the reply, Saranvisa.

I had already increased this setting for another reason, but update and delete still do not work.

Thanks,

Syam.

Created 07-25-2017 06:22 AM


How much did you increase it by? I think that in your case it is not sufficient; increase it further and then retry your update and delete operations.

Created 07-25-2017 06:27 AM


Created on 09-10-2017 01:22 AM - edited 09-10-2017 01:25 AM


Can you try uninstalling Hive, reinstalling it, and then running the same commands again?

Created 09-11-2017 04:36 AM


Hi Ujjwal,

Thanks for the reply.

This is the QuickStart VM 5.8.

I don't think uninstalling is the solution.

Thanks,

Syam

Created 12-10-2017 10:51 PM


Oh, OK Syam, I see. I did the installation manually.

Created 01-09-2018 09:29 AM


The CDH distribution of Hive does not support transactions (HIVE-5317). Currently, transaction support in Hive is an experimental feature that only works with the ORC file format. Cloudera recommends using the Parquet file format, which works across many tools. Merge updates in Hive tables using existing functionality, including statements such as INSERT, INSERT OVERWRITE, and CREATE TABLE AS SELECT.
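As a sketch of the INSERT OVERWRITE approach mentioned above: an UPDATE can be emulated by rewriting the table with a CASE expression on the changed column, and a DELETE by rewriting it with only the rows to keep. The Python helpers `overwrite_update_sql` and `overwrite_delete_sql` are hypothetical; they only build HiveQL strings and do not connect to Hive. They assume a plain, non-transactional table (for example a Parquet copy, as Cloudera suggests), that every column is listed in table order, and that the predicate is trusted, hand-written HiveQL, since it is pasted into the statement verbatim.

```python
def overwrite_update_sql(table, columns, set_column, new_value_sql, where_sql):
    """Build HiveQL emulating
    UPDATE table SET set_column = new_value_sql WHERE where_sql
    by rewriting the whole table with INSERT OVERWRITE (hypothetical helper)."""
    if set_column not in columns:
        raise ValueError(f"unknown column: {set_column}")
    select_list = ", ".join(
        # CASE keeps the old value when the predicate is FALSE or NULL,
        # matching UPDATE, which only changes rows where it is TRUE.
        f"CASE WHEN {where_sql} THEN {new_value_sql} ELSE {col} END AS {col}"
        if col == set_column
        else col
        for col in columns
    )
    return f"INSERT OVERWRITE TABLE {table} SELECT {select_list} FROM {table}"


def overwrite_delete_sql(table, columns, where_sql):
    """Build HiveQL emulating DELETE FROM table WHERE where_sql
    by rewriting the table with only the rows to keep (hypothetical helper)."""
    # COALESCE(..., FALSE) keeps rows whose predicate is NULL, matching DELETE,
    # which only removes rows where the predicate is TRUE.
    return (
        f"INSERT OVERWRITE TABLE {table} SELECT {', '.join(columns)} "
        f"FROM {table} WHERE NOT COALESCE({where_sql}, FALSE)"
    )


# Hypothetical usage with the table from the question:
print(overwrite_update_sql("testTableNew", ["id", "name"], "name", "'praveen'", "id = 101"))
print(overwrite_delete_sql("testTableNew", ["id", "name"], "id = 101"))
```

For the question's table, the first call prints `INSERT OVERWRITE TABLE testTableNew SELECT id, CASE WHEN id = 101 THEN 'praveen' ELSE name END AS name FROM testTableNew`. Rewriting the full table is expensive on large data, which is part of why Cloudera points to Kudu for update-heavy workloads.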

If you require these features, please inquire about Apache Kudu.

Kudu is storage for fast analytics on fast data, providing a combination of fast inserts and updates alongside efficient columnar scans to enable multiple real-time analytic workloads across a single storage layer.

https://www.cloudera.com/products/open-source/apache-hadoop/apache-kudu.html

Created 09-11-2018 06:51 PM


Please check https://hortonworks.com/blog/update-hive-tables-easy-way/. Hope this helps.