Support Questions

- Cloudera Community

- Support

- Support Questions

- Use column values of a csv file to route flow file...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Use column values of a csv file to route flow files

- Labels:

-

Apache NiFi

Created on

12-28-2019

03:02 PM

- last edited on

12-28-2019

05:03 PM

by

ask_bill_brooks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I would like to route flow files based on a column's value of a csv file. i have splitted a csv file into many csv files with the content: header and 1 row of the dataframe. so if the inital csv file consisted of 50 rows i will have 50 new csv files. i did this with the SplitRecord processor and a csv-reader/writer. The i have a UpdateAttribute processor to a UUID to these 50 files. Now, i would like to route those files based on a column's value. is this possible?

Let's say column number 5 has got numeric values and want to route all flow files with a value bigger than 50. How can i achieve that?

Thank you in advance for your help and advice

Created 12-30-2019 06:23 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not working in your Use Case, I tried to show as much of mine as I could, I think you understand the concept. So the next trick is just getting the REGEX matched to your string.

Try a tool here: https://regex101.com

In this tool I quickly matched REGEX to 4th column:

.*?,.*?,.*?,(.*?),.*

to:

test,test,test,25.0,test,test,test

For your example, the above should work. It is not necessary to parse past the column you need, so the .* on end should pickup entire rest of the line.

Created on 12-29-2019 06:42 AM - edited 12-29-2019 06:46 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@CJoe36 couple of things you need to do here:

- Get the value of the column into an attribute.

- Create routes based on the value of attribute.

For #1 there are a few ways. First, you can create a flow that uses a CSV Reader and a known schema. Using this you can translate and parse the columns. This requires multiple processors and CSVReader Controller Service. Two, you can just use ExtractText with regex (one processor).

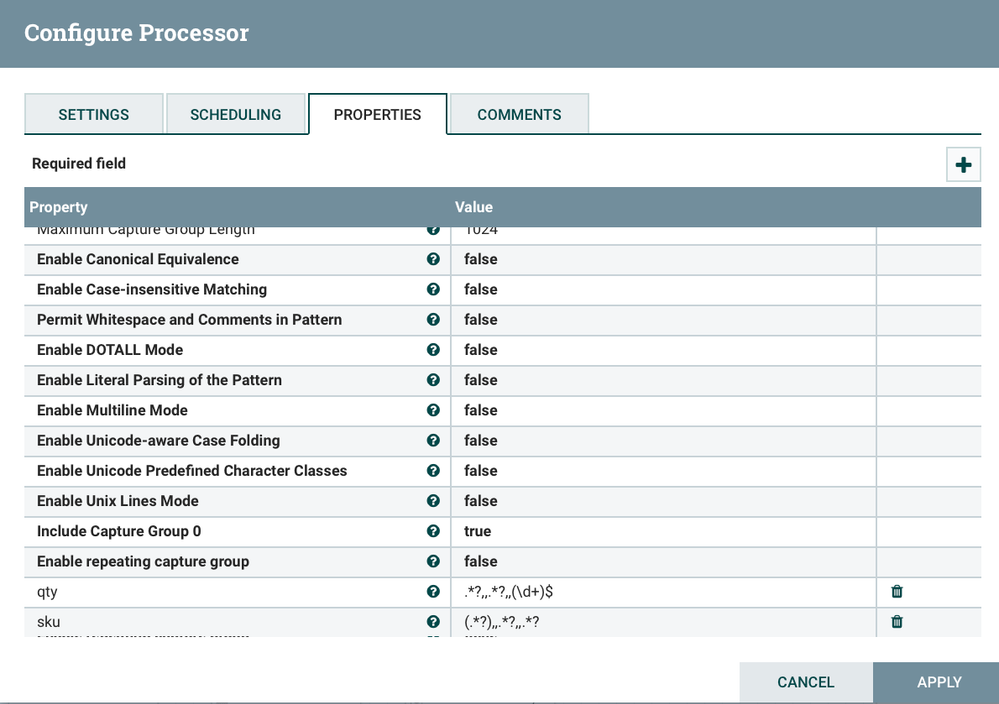

here is example of ExtractText to get a Quantity and SKU from an inventory CSV. Notice the regex codes used with the commas, and the () indicating which field maps to the attribute defined (sku or qty).

qty:

.*?,,.*?,,(\d+)$

sku:

(.*?),,.*?,,.*?

If your CSV is 10 columns, and #5 is your value, you probably want something like this:

,,,,(.*?),,,,,

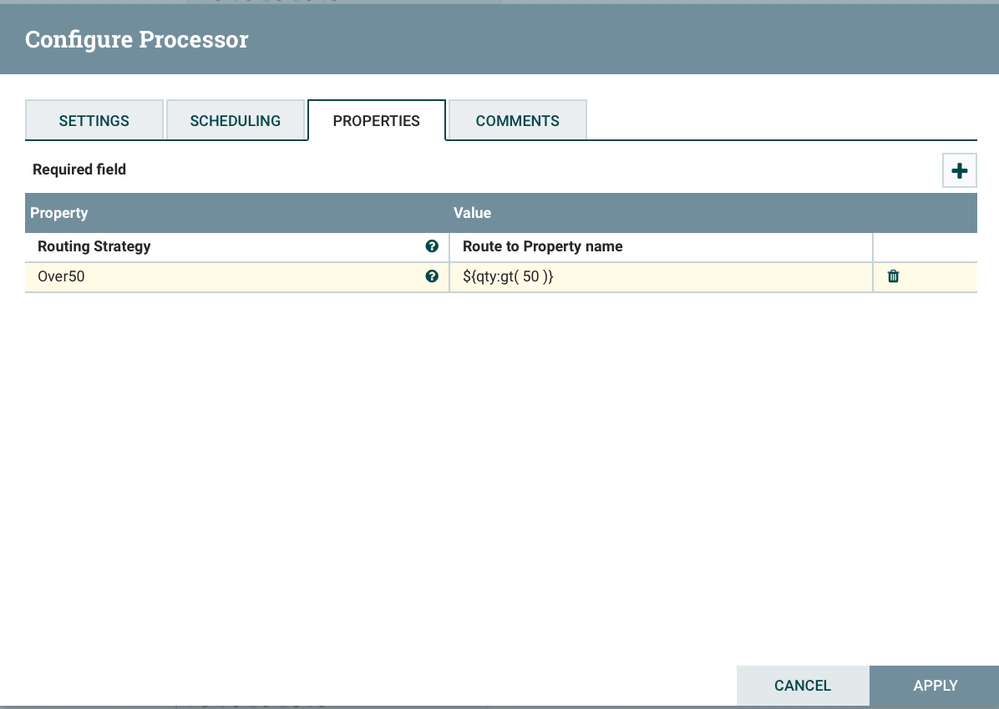

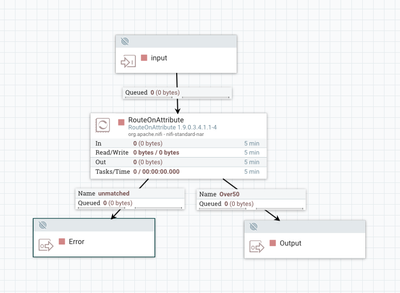

For #2 you want to use RouteOnAttribute Processor with routes defined using NiFi Expression Language. You define a route, then when defined you can chose it for downstream of RouteOnAttribute. Anything else will go to unmatched.

Here is an example:

And Routed:

If this reply helps answer your question, please mark it as a Solution.

Created 12-29-2019 03:34 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@stevenmatison Thanks a lot for your reply. very much appreciated.

I tried it but got stuck in the ExtractText processor.

My real file consists of 13 columns and #4 is the relevant one with the needed value. The input Flow File that feeds the ExtractText processor looks like this:

#4 is value 25.0 (before "cpm")

Meanwhile i removed the headers since i was not sure if that has got an impact or not.

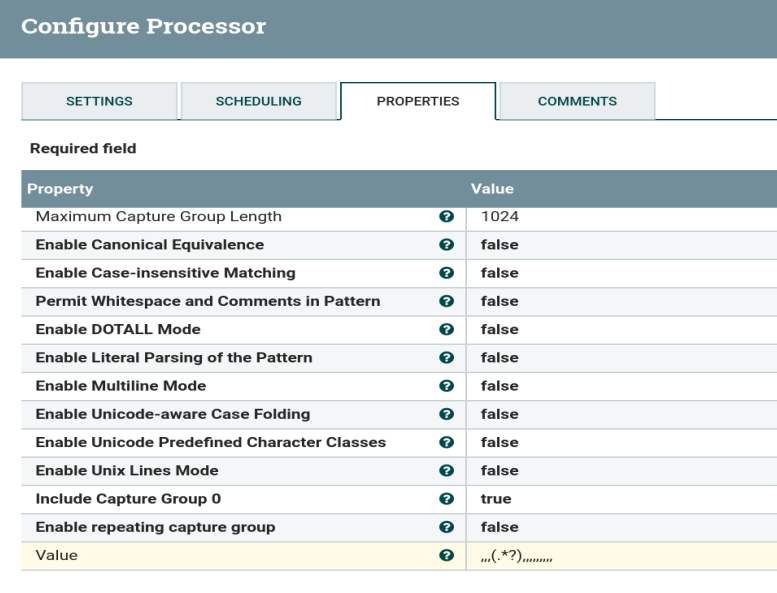

So what i did next in ExtractText processor is creating a new property named Value:

,,,(.*?),,,,,,,,,

But when i run the workflow all the flow files goes unmatched off the ExtractText processor. So there are no matched ones that go to RouteOnAttribute.

I really don't know what went wrong here.

Thank you & Regards

Created 12-30-2019 06:23 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not working in your Use Case, I tried to show as much of mine as I could, I think you understand the concept. So the next trick is just getting the REGEX matched to your string.

Try a tool here: https://regex101.com

In this tool I quickly matched REGEX to 4th column:

.*?,.*?,.*?,(.*?),.*

to:

test,test,test,25.0,test,test,test

For your example, the above should work. It is not necessary to parse past the column you need, so the .* on end should pickup entire rest of the line.

Created 12-30-2019 12:39 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks a lot. it worked with the new regex code.