Support Questions


Version of Python of Pyspark for Spark2 and Zeppelin

Labels: Apache Spark

Created 04-27-2018 11:11 AM


Hi.

I built a cluster with HDP using Ambari version 2.6.1.5, and I am using Anaconda3 as my Python interpreter.

I am having trouble changing the Python version used by Spark2 PySpark in Zeppelin.

When I check the Python version of Spark2 from the pyspark shell, it shows the following, which looks fine to me:

```
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.2.0.2.6.4.0-91
      /_/

Using Python version 3.6.4 (default, Jan 16 2018 18:10:19)
SparkSession available as 'spark'.
>>> import sys
>>> print (sys.path)
['', '/tmp/spark-14a0fb52-5fea-4c1f-bf6b-c0bd0c37eedf/userFiles-54205d05-fbf0-4ec1-b274-4c5a2b78e840', '/usr/hdp/current/spark2-client/python/lib/py4j-0.10.4-src.zip', '/usr/hdp/current/spark2-client/python', '/root', '/root/anaconda3/lib/python36.zip', '/root/anaconda3/lib/python3.6', '/root/anaconda3/lib/python3.6/lib-dynload', '/root/anaconda3/lib/python3.6/site-packages']
>>> print (sys.version)
3.6.4 |Anaconda, Inc.| (default, Jan 16 2018, 18:10:19)
[GCC 7.2.0]
>>> exit()
```

When I check the Python version of Spark2 in Zeppelin, it shows a different result:

```
%spark2.pyspark
print(sc.version)
import sys
print(sys.version)
print()
print(sys.path)
```

Output:

```
2.2.0.2.6.4.0-91
2.7.5 (default, Aug 4 2017, 00:39:18)
[GCC 4.8.5 20150623 (Red Hat 4.8.5-16)]
()
['/tmp', u'/tmp/spark-75f5d1d5-fefa-4dc8-bc9b-c797dec106d7/userFiles-1c25cf01-7758-49dd-a1eb-f1fbd084e9af/py4j-0.10.4-src.zip', u'/tmp/spark-75f5d1d5-fefa-4dc8-bc9b-c797dec106d7/userFiles-1c25cf01-7758-49dd-a1eb-f1fbd084e9af/pyspark.zip', u'/tmp/spark-75f5d1d5-fefa-4dc8-bc9b-c797dec106d7/userFiles-1c25cf01-7758-49dd-a1eb-f1fbd084e9af', '/usr/hdp/current/spark2-client/python/lib/py4j-0.10.4-src.zip', '/usr/hdp/current/spark2-client/python', '/usr/hdp/current/spark2-client/python/lib/py4j-0.8.2.1-src.zip', '/usr/lib64/python27.zip', '/usr/lib64/python2.7', '/usr/lib64/python2.7/plat-linux2', '/usr/lib64/python2.7/lib-tk', '/usr/lib64/python2.7/lib-old', '/usr/lib64/python2.7/lib-dynload', '/usr/lib64/python2.7/site-packages', '/usr/lib64/python2.7/site-packages/gtk-2.0', '/usr/lib/python2.7/site-packages']
```
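A quick way to compare the two environments is to print the interpreter's own path and installation prefix, not only its version, since `sys.path` is derived from the prefix. The sketch below is a hypothetical helper (`interpreter_report` is not part of PySpark or Zeppelin); pasting the function into the pyspark shell and into a `%spark2.pyspark` paragraph and calling it should show which binary each one actually launched. It reports the driver-side interpreter only.

```python
import os
import sys


def interpreter_report():
    """Collect the facts that identify which Python binary is running this code."""
    return {
        "executable": sys.executable,  # full path of the running interpreter binary
        "version": "%d.%d.%d" % sys.version_info[:3],
        "prefix": sys.prefix,  # installation root; the stdlib entries in sys.path live under it
        "PYSPARK_PYTHON": os.environ.get("PYSPARK_PYTHON"),  # None if not set
    }


if __name__ == "__main__":
    for key, value in sorted(interpreter_report().items()):
        print("%s: %s" % (key, value))
```

In a Zeppelin paragraph, define the function and call `print(interpreter_report())` directly. If `executable` points at a system path such as `/usr/bin/python`, the interpreter setting is not being picked up.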

Based on other questions and answers, I tried updating the Zeppelin interpreter settings, for example:

```
export PYSPARK_PYTHON=/root/anaconda3/bin/python
```

I updated both zeppelin-env.sh and the interpreter settings in the Zeppelin GUI, but it didn't work.

I think this happens because Zeppelin's Python path points to /usr/lib64/python2.7, the CentOS system default, but I don't know how to fix it.

If you have any ideas about this problem, please let me know. Any advice would be appreciated.

Thank you.
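When `PYSPARK_PYTHON` appears to be ignored, one thing worth ruling out is whether the configured binary is executable by the account that runs Zeppelin. On CentOS, `/root` is typically not accessible to other users, so a path under it can fail for a service account even though it works for root. The sketch below uses a hypothetical helper, `check_interpreter`; run it as the Zeppelin service user (for example with `sudo -u zeppelin`, assuming that is the account name on your cluster), because running it as root will not reveal a permission problem.

```python
import os
import subprocess
import sys


def check_interpreter(path):
    """Return the version reported by the interpreter at `path`,
    or None if the current user cannot run it."""
    # os.path.isfile returns False when a parent directory cannot be
    # traversed by the current user, e.g. /root for a non-root account.
    if not (os.path.isfile(path) and os.access(path, os.X_OK)):
        return None
    try:
        out = subprocess.check_output(
            [path, "-c", "import sys; print('%d.%d.%d' % sys.version_info[:3])"]
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return out.decode("ascii").strip()


if __name__ == "__main__":
    # Default path is only an example; pass the interpreter you configured instead.
    target = sys.argv[1] if len(sys.argv) > 1 else "/root/anaconda3/bin/python"
    print("%s -> %s" % (target, check_interpreter(target)))
```

A result of `None` for the configured path, while the same path works for root, would point to permissions rather than to Zeppelin's configuration.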

Created on 05-29-2018 02:10 PM - edited 08-17-2019 06:22 PM


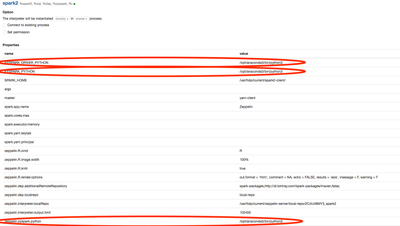

Try installing Anaconda3 under /opt/anaconda3 instead of under /root, and add the following configuration to your interpreter:

With this configuration, the results are:

Important: Since Zeppelin runs the spark2 interpreter in yarn-client mode by default, you need to make sure that /root/anaconda3/bin/python3 is installed on the Zeppelin machine and on all cluster worker nodes.
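The worker-node requirement matters because PySpark checks, when a worker starts, that the worker's Python has the same major.minor version as the driver's, and fails otherwise (in Spark 2.x the error begins "Python in worker has different version ... than that in driver ..."). The standalone sketch below only mirrors that comparison for illustration; `minor_version` and `check_driver_worker` are hypothetical names, not PySpark functions.

```python
import sys


def minor_version(version_info):
    """Reduce a sys.version_info-like tuple to a 'major.minor' string."""
    return "%d.%d" % tuple(version_info[:2])


def check_driver_worker(driver_info, worker_info):
    """Raise if driver and worker Pythons differ in major.minor version,
    modeled on the consistency check PySpark runs at worker startup."""
    driver, worker = minor_version(driver_info), minor_version(worker_info)
    if driver != worker:
        raise RuntimeError(
            "Python in worker has different version %s than that in driver %s"
            % (worker, driver)
        )
    return driver


if __name__ == "__main__":
    print(minor_version(sys.version_info))
```

On a live cluster, the worker-side value could be collected with something like `sc.parallelize([0]).map(lambda _: tuple(__import__('sys').version_info[:3])).collect()[0]` and passed to `check_driver_worker` along with the driver's `sys.version_info`. Patch-level differences (3.6.4 vs 3.6.1) pass; only major.minor must match.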

Additional resources

HTH

*** If you found this answer addressed your question, please take a moment to log in and click the "accept" link on the answer.

Created 04-28-2018 09:49 AM


Hi @Sungwoo Park,

You can have a look at this question; I think it will help you: https://stackoverflow.com/questions/47198678/zeppelin-python-conda-and-python-sql-interpreters-do-no...

Best regards,

Paul

Created 05-29-2018 06:11 AM


Thank you for your comment.

I checked the post you pointed me to and found that its approach, changing the symlink in /bin, is not a good idea.

It might cause problems for the Linux system.

Created 05-29-2018 04:02 PM


Hi @Sungwoo Park, thanks for the input. Could you please elaborate a bit on why the symlink could cause problems, and which ones?

I am very interested, since we have this setting on a demo cluster at a customer.

BR. Paul

Created 05-30-2018 01:34 AM


First of all, my problem was solved by adding the Zeppelin properties that @Felix Albani showed me.

In my case, my cluster is based on CentOS 7.

The OS ships Python 2.7 as its default, and some packages, such as yum, depend on that default Python. The symlink '/bin/python' points to this default Python, and if it is changed, yum no longer works.

Hope this helps.

SW
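The safer alternative to repointing `/bin/python` is to scope the interpreter choice to the processes that need it. This is a minimal sketch under that assumption: the hypothetical `env_with_pyspark_python` builds a copy of the environment with `PYSPARK_PYTHON` set, and only child processes launched with that mapping see it, so yum keeps using the system Python 2.7. In Zeppelin, setting the interpreter path in the interpreter properties or `zeppelin-env.sh` follows the same principle.

```python
import os
import subprocess
import sys


def env_with_pyspark_python(interpreter, base=None):
    """Return a copy of an environment mapping with PYSPARK_PYTHON set.

    The caller's own environment (os.environ) is not modified, and nothing
    on the filesystem, such as /usr/bin/python, is touched.
    """
    env = dict(os.environ if base is None else base)
    env["PYSPARK_PYTHON"] = interpreter
    return env


if __name__ == "__main__":
    # Demonstration: the child process sees the override,
    # while this process's environment stays unchanged.
    child_env = env_with_pyspark_python(sys.executable)
    seen = subprocess.check_output(
        [sys.executable, "-c", "import os; print(os.environ['PYSPARK_PYTHON'])"],
        env=child_env,
    )
    print("child sees PYSPARK_PYTHON=%s" % seen.decode().strip())
    print("parent PYSPARK_PYTHON=%s" % os.environ.get("PYSPARK_PYTHON"))
```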

Created 05-31-2018 09:34 AM


@Felix Albani Hi Felix, you installed 3.6.4, but according to the documentation, Spark2 only supports up to 3.4.x. Could you kindly explain how this works?
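Which Python versions are supported depends on the exact Spark or HDP documentation being cited, which the thread does not link. For checking an interpreter against whatever range you do confirm, a tuple comparison on `sys.version_info` is enough. In this sketch `within_supported_range` is a hypothetical helper; the `(3, 4)` upper bound is taken from the question's reading of the documentation and is not verified here, and the `(2, 7)` lower bound is only an example.

```python
import sys


def within_supported_range(version_info, lowest, highest):
    """True if (major, minor) of version_info lies within [lowest, highest]."""
    return tuple(lowest) <= tuple(version_info[:2]) <= tuple(highest)


if __name__ == "__main__":
    # Illustrative bounds only; replace them with the range your documentation states.
    print(within_supported_range(sys.version_info, (2, 7), (3, 4)))
```

Comparing `(major, minor)` tuples avoids string comparison pitfalls, where "3.10" would sort before "3.4".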