Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: What is the best way to ingest data from HDFS ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

What is the best way to ingest data from HDFS witch contain XML files and then push them into Hive using apache Nifi workFlow !!

- Labels:

-

Apache Hive

-

Apache NiFi

-

HDFS

Created on 03-15-2018 04:46 PM - edited 09-16-2022 05:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll try to explain the problem ! GetHDFS-->ValidateXML--->TransformXMLToJson--->JoltTransformation--->SplitJson--> EvaluteJsonPath---->ReplaceText--->PutHiveQL. Till the 5 step, it works perfectly for me , but when trying to EvaluteJsonPath , it doesn't work So how can I evaluate the hol content of the flowfile cos I need to push it completely in the hive , and please how can I use ReplaceText to insert query into hive. thnks for giving help

Created on 03-17-2018 09:42 AM - edited 08-18-2019 01:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

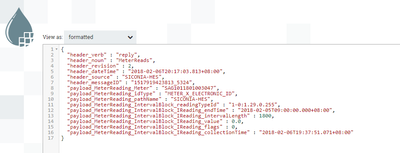

By using EvaluateJsonPath processor you can extract all the values of the json keys and keep them as attributes of the flowfile.

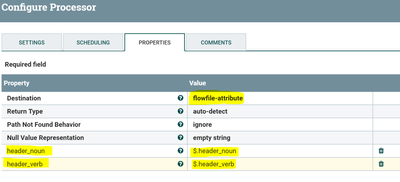

EvaluatejsonPath configs:-

Keep the Destination property as flowfile-attribute and

add all the json keys that you are having in the json message same as shown in the above screenshot.

Change the below property:

Destination

flowfile-attribute

Add these new properties to the processor:

header_noun

$.header_noun

header_verb

$.header_verb

Once you complete configuring this evaluate json path processor then we can create insert statement in ReplaceText processor.

Replace text processor Configs:-

Search Value

(?s)(^.*$)

Replacement value

insert into <db-name>.<table-name> values('${header_verb}','${header_noun}'...);Maximum Buffer Size

1 MB //change if the flowfile size is greater than 1mb

Replacement Strategy

Always Replace

Evaluation Mode

Entire text

in this processor we are going to build insert statements into hive table by adding all the extracted attribute values in the evaluatejson processor.

Then use PutHiveQl processor to execute all the insert statements.

(or)

Method2:-

Easy/Best way to do is by using convert record processor, even this convert record processor accepts array of json messages/objects,

As you are having json message use convert record processor with json reader and avro set writer, in this convert record processor we are converting json array of messages/objects into avro.

Then by using Convert AvroToOrc processor we can convert the avro format to ORC format(as orc is optimized for tez execution engine).

Use PutHDFS processor to store the data into HDFS directory and create a hive table on top of this directory(you can use hive.ddl attribute from convert AVROtoORC processor to create table).

Flow:-

GetHDFS-->ValidateXML--->TransformXMLToJson--->JoltTransformation--->ConvertRecord--->ConvertAvroToORC--->PutHDFS

in case if you want to create hive table in your flow it self then add new processors after putHDFS processor

Replacetext ---> PutHiveQL

References for convertrecord processor

create hive table references

Load data into hive references

Let us know if you are facing any issues..!!

Created 03-17-2018 07:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Jandoubi Chaima 1. when you say, EvaluateJSONPath doesn't works, what is the issue that you are facing? 2. Do you need your final data on HDFS in JSON format? 3. What is the query that you want in ReplaceText? DDL? DML?

Created 03-19-2018 10:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good morning sir @Rahul Soni ^^

thanks for replying me , Mr @Shu gave me the solution I was looking for !

Created on 03-17-2018 09:42 AM - edited 08-18-2019 01:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

By using EvaluateJsonPath processor you can extract all the values of the json keys and keep them as attributes of the flowfile.

EvaluatejsonPath configs:-

Keep the Destination property as flowfile-attribute and

add all the json keys that you are having in the json message same as shown in the above screenshot.

Change the below property:

Destination

flowfile-attribute

Add these new properties to the processor:

header_noun

$.header_noun

header_verb

$.header_verb

Once you complete configuring this evaluate json path processor then we can create insert statement in ReplaceText processor.

Replace text processor Configs:-

Search Value

(?s)(^.*$)

Replacement value

insert into <db-name>.<table-name> values('${header_verb}','${header_noun}'...);Maximum Buffer Size

1 MB //change if the flowfile size is greater than 1mb

Replacement Strategy

Always Replace

Evaluation Mode

Entire text

in this processor we are going to build insert statements into hive table by adding all the extracted attribute values in the evaluatejson processor.

Then use PutHiveQl processor to execute all the insert statements.

(or)

Method2:-

Easy/Best way to do is by using convert record processor, even this convert record processor accepts array of json messages/objects,

As you are having json message use convert record processor with json reader and avro set writer, in this convert record processor we are converting json array of messages/objects into avro.

Then by using Convert AvroToOrc processor we can convert the avro format to ORC format(as orc is optimized for tez execution engine).

Use PutHDFS processor to store the data into HDFS directory and create a hive table on top of this directory(you can use hive.ddl attribute from convert AVROtoORC processor to create table).

Flow:-

GetHDFS-->ValidateXML--->TransformXMLToJson--->JoltTransformation--->ConvertRecord--->ConvertAvroToORC--->PutHDFS

in case if you want to create hive table in your flow it self then add new processors after putHDFS processor

Replacetext ---> PutHiveQL

References for convertrecord processor

create hive table references

Load data into hive references

Let us know if you are facing any issues..!!

Created 03-19-2018 10:36 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hello @Shu it works perfectly 😄

big thanks for you ^^

Created 03-27-2018 08:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shu Hello 😄 from the other side XD

Am so grateful sir , you helped me out !!

Thanks a lot :D I highly recommend you