Support Questions

Why am I having problems starting Hadoop services with Ambari?

- Labels: Hortonworks Data Platform (HDP)

Created 02-26-2016 06:19 PM

Hi all,

Last week I set up a two-node HDP 2.3 cluster on EC2 with one master and one slave. The Ambari installation went smoothly, and it deployed and started the Hadoop services.

I prefer to keep the cluster down when it is not in use, to save costs. Because the public IP changes on reboot, ambari-server could not start the Hadoop services this week. Some services start if I launch them manually in sequence, starting with HDFS, but they do not start on reboot.

I believe the server lost connectivity after the public IP address changed. I have not been able to resolve the problem. I do not think changing the addresses in config and other files is straightforward; they may be embedded in numerous files and locations.

I now have two Elastic IP addresses and have assigned them to the two instances. I want to use the Elastic IP's public DNS name (example: ec2-123.157.1.10.compute-1.amazonaws.com) to connect externally, while using the same name (the Elastic IP DNS name) to let the servers communicate with each other over the internal EC2 network. I won't be charged for network traffic as long as my servers are in the same EC2 availability zone. I am aware there would be a small charge for the time the Elastic IPs are not in use, which may be a few dollars a month. I do not want to use the external Elastic IP address (example: 123.157.1.10) directly for internal server access, as I would be charged for network traffic.

Please advise on the best way to resolve the Hadoop services startup issue. Please note that I am also a Linux newbie, so detailed guidance would be very much appreciated.

Thanks,

Created 03-13-2016 12:43 AM

I am cleaning them out and will attempt a fresh install. I will close this thread and post a new one if required. Thanks, everyone, for the help.

Created 02-29-2016 11:41 PM

No worries. I have manually cleaned them out of the respective directories. I will post again after the install. Thanks.

Created 03-01-2016 07:36 PM

I need some help, please, with making config changes in /etc/sysconfig/network and /etc/hosts. I have named the instances NameNode and DataNode. I have an Elastic IP address, which gets a fixed public DNS name, as follows:

1: ec2-xx.xx.xxx.xx.compute-1.amazonaws.com. The public IP and Elastic IP for this NameNode are the same (xx....)

2: ec2-yy.yy.yyy.yy.compute-1.amazonaws.com

/etc/sysconfig/network

NETWORKING=yes

NOZEROCONF=yes

HOSTNAME=NameNode.hdp.hadoop # NameNode is the name given to an instance ID

HOSTNAME=DataNode.hdp.hadoop # DataNode is the name given to an instance ID

/etc/hosts

I used the public DNS names in the hosts file. I am not sure if this is correct, because ntp, which always used to autostart, no longer does. I tried to enable autostart again, but it still does not start on boot.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

ec2-xx.xx.xxx.xx.compute-1.amazonaws.com NameNode.hdp.hadoop NameNode

ec2-yy.yy.yyy.yy.compute-1.amazonaws.com DataNode.hdp.hadoop DataNode

The idea is that, since the public DNS name is tied to the Elastic IP, using it to let the servers talk to each other internally (EC2 VPC) would address the problem of losing connectivity if the internal IPs were to change for any reason.

Thanks,

Sundar
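A note on the /etc/hosts entries in the post above: each hosts line is expected to begin with an IP address, followed by the names, so a line that begins with a public DNS name will not map the short names as intended. Below is a minimal sketch of a check for this, assuming a POSIX shell with awk. The function name `check_hosts_file` is hypothetical, and the address test is deliberately rough.

```shell
# check_hosts_file FILE  (hypothetical helper, not part of any tool)
# Prints every non-comment, non-blank line whose first field does not look
# like an IPv4 or IPv6 address. Returns 1 if any such line is found.
check_hosts_file() {
    awk '
        # Skip comments and blank lines.
        /^[ \t]*#/ || /^[ \t]*$/ { next }
        # Rough tests: dotted-quad IPv4, or hex digits with a colon (IPv6).
        $1 !~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && $1 !~ /^[0-9A-Fa-f:.]*:[0-9A-Fa-f:.]*$/ {
            printf "line %d: first field is not an IP address: %s\n", NR, $1
            bad = 1
        }
        END { exit (bad ? 1 : 0) }
    ' "$1"
}
```

Running `check_hosts_file /etc/hosts` on each node would flag the two `ec2-...` lines. Which address belongs in the first column depends on the setup. From inside EC2 the public DNS name normally resolves to the instance's private IP, so `getent hosts <public-dns-name>` on a node is one way to see what the other node would actually connect to.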

Created 03-02-2016 02:32 PM

The question is: could the public (EIP) DNS name be used (in /etc/hosts as well as at the HDP node address specification stage) to avoid losing connectivity when the internal IP/DNS changes for any reason, including stop/restart? Using the Elastic IP directly is straightforward, but the nodes would then access each other over the external network. This would incur data transfer/bandwidth charges to/from EC2 at the rate of $0.01/GB and/or $0.09/GB for up to 10 TB transferred out from EC2 to the internet.

Any help and examples to resolve this would be greatly appreciated, as I have not installed Ambari or deployed HDP.

Created 03-03-2016 08:56 AM

Morning all,

Since this post has not received any further response, is it fair to assume that users have not gone down the route of using public DNS names for internal applications within an EC2 VPC? It does address most of the problems one could face: losing the internal IP, charges for using the EIP internally, and stop/restart. I found an article on this subject (linked below). How do Ambari and HDP behave if I provide the public DNS names to Ambari at the cluster deployment stage? Has anyone tried this before?

https://alestic.com/2009/06/ec2-elastic-ip-internal/

Thanks,

Created 03-04-2016 01:47 AM

I managed to install Ambari server 2.2.1. The normal practice is to use the internal IP/DNS addresses for cluster nodes, but I am trying to supply the public (EIP) DNS names for the nodes instead. This does not go through: it failed at the host check stage with "host checks were skipped on 2 hosts that failed to register". I manually installed Ambari and started it on the data node.

I did edit the /etc/hosts file and provided the internal IP and public DNS name on both the master and the slave.

Is there any guidance, or are there any notes, on using the public DNS names?

Created on 03-04-2016 02:30 AM - edited 08-18-2019 04:41 AM

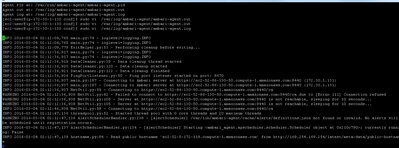

I think they are talking to each other - please see the agent log below:

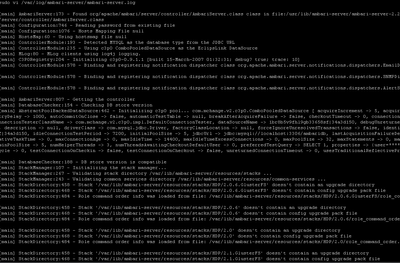

serverlog file:

Serverout

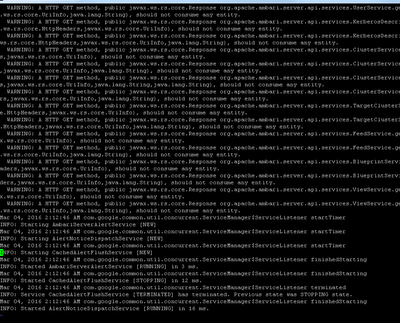

Agent log: it seems to have established a connection, but registration failed at the datanode. Is two-way SSL auth turned off?

Created 03-04-2016 08:00 PM

Could someone please help?

I have not made any progress as I am unable to find the root cause.

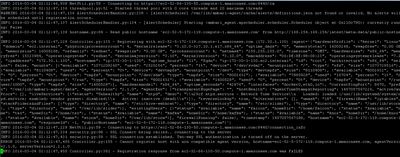

What I did for manual registration:

- /etc/sysconfig/network and /etc/hosts contain the relevant entries

- on stop/start/reboot, Red Hat on EC2 recognises the changed hostnames

- installed, configured and started ambari-agent on the datanode

- It could connect to the server and establish a two-way SSL connection.

- Server refused registration: certs do not match / unknown host: datanode.teg.

- registration failed
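The "unknown host" refusal in the list above suggests checking which names the agent and the server are using for each other. As a sketch only: the function below (hypothetical name `agent_server_hostname`) reads the `hostname=` entry from the `[server]` section of an agent config file. The file is assumed here to be at the default location, /etc/ambari-agent/conf/ambari-agent.ini.

```shell
# agent_server_hostname INI_FILE  (hypothetical helper)
# Prints the value of hostname= found in the [server] section of an
# ambari-agent.ini-style file, with surrounding whitespace removed.
agent_server_hostname() {
    awk -F= '
        # Track whether the current section is [server].
        /^\[/ { in_server = ($0 ~ /^\[server\]/); next }
        # Inside [server], print the first hostname entry and stop.
        in_server && $1 ~ /^[ \t]*hostname[ \t]*$/ {
            val = $2
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            print val
            exit
        }
    ' "$1"
}
```

Running `agent_server_hostname /etc/ambari-agent/conf/ambari-agent.ini` on the datanode shows the server name the agent will try to contact, and `hostname -f` on the same host shows the agent host's own fully qualified name. Each name needs to resolve on the other machine for registration to have a chance of succeeding.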

Automatic install

- clean uninstall of ambari* on datanode

- ambari server is running

- registration failed ..

error:

Permission denied (publickey,gssapi-keyex,gssapi-with-mic).

SSH command execution finished

host=namenode.teg, exitcode=255

Command end time 2016-03-04 19:51:42

ERROR: Bootstrap of host namenode.teg fails because previous action finished with non-zero exit code (255)

ERROR MESSAGE: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).

STDOUT: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).

Please advise. I am unable to troubleshoot this.

Created 03-05-2016 02:37 AM

I believe you have mixed multiple questions here. I will start with the last comment.

1) Make sure that you can ssh without a password from the Ambari server to all the hosts and, importantly, to the Ambari server itself.

On the Ambari server, run ssh localhost. If you cannot log in without a password, then:

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

ssh localhost

2) If you are not using passwordless SSH, make sure that you install the agents manually and register them with the Ambari server.

host=namenode.teg ERROR: Bootstrap of host namenode.teg fails because previous action finished with non-zero exit code (255) ERROR MESSAGE: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).
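Step 1 above can be sketched as a small function, assuming an RSA key pair already exists as id_rsa / id_rsa.pub in the SSH directory. The name `authorize_own_key` is hypothetical. Unlike a plain `cat >>`, it does not append the key a second time, and it tightens permissions, since sshd commonly rejects keys when ~/.ssh or authorized_keys is writable by other users.

```shell
# authorize_own_key [SSH_DIR]  (hypothetical helper)
# Adds SSH_DIR/id_rsa.pub to SSH_DIR/authorized_keys if it is not already
# there, then restricts permissions. SSH_DIR defaults to ~/.ssh.
authorize_own_key() {
    ssh_dir=${1:-"$HOME/.ssh"}
    pub="$ssh_dir/id_rsa.pub"
    auth="$ssh_dir/authorized_keys"

    [ -f "$pub" ] || { echo "no public key at $pub" >&2; return 1; }

    touch "$auth"
    # Append only if this exact key line is not already present.
    grep -qxFf "$pub" "$auth" || cat "$pub" >> "$auth"

    chmod 700 "$ssh_dir"
    chmod 600 "$auth"
}
```

After running `authorize_own_key` as the user Ambari connects with, `ssh localhost` should log in without asking for a password.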

Created 03-05-2016 03:34 AM

Thanks, Neeraj Sabharwal. I have a new problem to face 🙂

I can no longer access my master node from PuTTY, WinSCP or the browser. It throws an error: "Server refused our key" (publickey,gssapi-keyex,gssapi-with-mic). Nothing has changed. I can use the same key to access the other instance! I need to see if I can attach the volume to the second node and check for issues.

I had indeed set up passwordless SSH, as the earlier install was successful before the stop/start created issues; hence the Elastic IP. The questions below are naive. Sorry for asking them!

Do I need to enable this passwordless SSH every time I reboot or start the instances, Ambari server and Hadoop services? Does it mean the Ambari/Hadoop services won't start after a stop/restart? How do I check ssh localhost on the Ambari server? I guess providing the SSH key for automatic registration takes care of it!

Created 03-05-2016 06:15 AM

Do I need to enable this passwordless SSH every time I reboot or start the instances, Ambari server and Hadoop services? No.

Make sure that sshd is running and set to start on boot:

/sbin/chkconfig sshd on

chkconfig ambari-server on

Then log in to the Ambari server and run:

ssh node1

ssh node2

and so on. You should be able to log in to the other servers without a password.
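The login check described above can also be scripted. This is a minimal sketch, assuming the node names resolve from the Ambari server. `check_passwordless_ssh` is a hypothetical helper, and `BatchMode=yes` is used so that ssh fails rather than prompting for a password.

```shell
# check_passwordless_ssh HOST...  (hypothetical helper)
# Tries a key-based login to each host and reports the result.
# Returns 1 if any host could not be reached without a password.
check_passwordless_ssh() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes disables password prompts; "true" is a no-op remote command.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: key-based login failed"
            failed=1
        fi
    done
    return "$failed"
}
```

For example, `check_passwordless_ssh node1 node2`, run on the Ambari server as the user whose key was supplied during host registration.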