Why PutSplunk stopped picking the data from Queue

- Labels: Apache NiFi

Created on 02-24-2017 07:43 AM - edited 08-19-2019 03:19 AM

My use case:

Pick up log data and send it to Splunk using PutSplunk.

The solution I am trying:

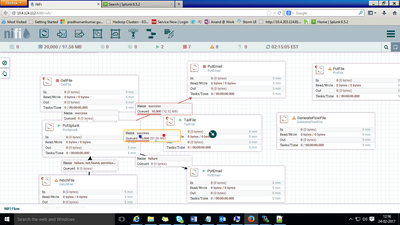

I used a TailFile processor configured with the path of the log file. It keeps fetching newly appended data from this file and puts it into FlowFiles that are routed to PutSplunk.

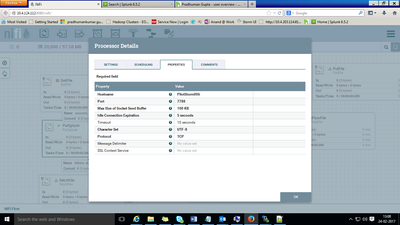

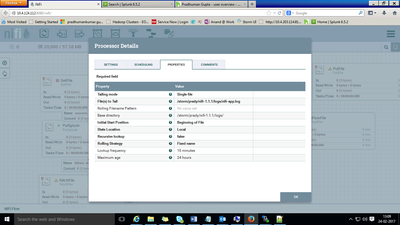

As the attached images show, PutSplunk is no longer picking up data and appears to be hung, even though data is queued and the processors are in the running state. I have also attached a screenshot of the processor configuration.

Created 02-24-2017 12:44 PM

Backpressure has kicked in on your dataflow. By default, every new connection has a backpressure object threshold of 10,000 FlowFiles. When backpressure is applied on a connection, the connection is highlighted in red, and the backpressure bar (left = object threshold, right = size threshold) shows which threshold has reached 100%. Once backpressure is applied, the component (processor) directly upstream of that connection will no longer run. As you can see in your screenshot above, the "success" connection from your PutSplunk processor is applying backpressure. As a result, the NiFi controller is no longer scheduling the PutSplunk processor to run. Since PutSplunk was no longer executing, FlowFiles began to queue on the connection between your TailFile and PutSplunk processors. Once backpressure kicked in there as well, the TailFile processor stopped being scheduled too.
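The chain of events described above can be sketched with a small toy model in Python. This is not NiFi code and does not reflect how the NiFi controller is implemented; the names `Connection`, `run_processor`, and `simulate` are hypothetical, and the threshold is set to 3 instead of 10,000 to keep the run short. It only models the object threshold, with a PutEmail side that never drains the "success" queue.

```python
from collections import deque


class Connection:
    """Toy stand-in for a NiFi connection with an object threshold."""

    def __init__(self, name, object_threshold=10_000):
        self.name = name
        self.object_threshold = object_threshold
        self.queue = deque()

    def backpressure_applied(self):
        # Backpressure applies once the queue reaches the threshold.
        return len(self.queue) >= self.object_threshold


def run_processor(incoming, outgoing):
    """Move one FlowFile downstream; return True if the processor ran.

    incoming=None models a source processor such as TailFile.
    """
    if outgoing.backpressure_applied():
        return False  # not scheduled: downstream connection is full
    if incoming is None:
        flowfile = object()  # source processor creates a new FlowFile
    elif incoming.queue:
        flowfile = incoming.queue.popleft()
    else:
        return False  # nothing to process
    outgoing.queue.append(flowfile)
    return True


def simulate(ticks, threshold=3):
    """TailFile -> PutSplunk -> (success) -> PutEmail, where nothing drains success."""
    tail_to_splunk = Connection("TailFile -> PutSplunk", threshold)
    splunk_success = Connection("PutSplunk success -> PutEmail", threshold)
    for _ in range(ticks):
        run_processor(tail_to_splunk, splunk_success)  # PutSplunk
        run_processor(None, tail_to_splunk)            # TailFile
    return tail_to_splunk, splunk_success


if __name__ == "__main__":
    upstream, success = simulate(ticks=10)
    for conn in (upstream, success):
        print(conn.name, len(conn.queue), conn.backpressure_applied())
```

In this model the "success" queue fills first, PutSplunk stops running, the TailFile-to-PutSplunk queue then fills, and TailFile stops as well. Emptying the "success" queue lets PutSplunk run again.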

If you clear the backpressure on the "success" connection between your PutSplunk and PutEmail processors, your dataflow will start running again.

You can adjust the backpressure threshold by right-clicking on a connection and selecting "configure". (The configure option is only available if the processors on both sides of a connection are stopped.) In addition to adjusting backpressure settings, you also have the option of setting "file expiration" on a connection. File expiration dictates how old a FlowFile in a given connection can be. If the FlowFile has existed in your NiFi (not how long it has been in that specific connection) for longer than the configured time, it is purged from your dataflow. This setting, if set aggressively enough, could help keep your "success" relationship clean enough to avoid backpressure.
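If you would rather script these settings than use the UI, they can, as far as I know, also be changed through the NiFi REST API with a PUT to `/nifi-api/connections/{id}`. The Python sketch below only builds the request body and does not contact a NiFi instance. The field names (`backPressureObjectThreshold`, `backPressureDataSizeThreshold`, `flowFileExpiration`) are my understanding of the connection entity and should be checked against the REST API documentation for your NiFi version. The function name, the connection ID, the revision version, and the chosen values are all placeholders.

```python
import json


def build_connection_update(connection_id, revision_version,
                            object_threshold=10000,
                            data_size_threshold="1 GB",
                            flowfile_expiration="0 sec"):
    """Build a request body for updating a connection's queue settings.

    Assumption: the body follows the NiFi connection entity layout
    (a revision plus a component). Verify against your NiFi version.
    """
    return {
        "revision": {"version": revision_version},
        "component": {
            "id": connection_id,
            "backPressureObjectThreshold": object_threshold,
            "backPressureDataSizeThreshold": data_size_threshold,
            "flowFileExpiration": flowfile_expiration,
        },
    }


if __name__ == "__main__":
    # Placeholder ID and revision; fetch the real values from your instance first.
    body = build_connection_update(
        connection_id="<connection-id>",
        revision_version=0,
        object_threshold=20000,
        flowfile_expiration="1 hour",
    )
    print(json.dumps(body, indent=2))
```

An expiration of "0 sec" is assumed here to mean that FlowFiles never expire, which matches the default shown in the connection settings dialog.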

Thanks,

Matt

Created 02-24-2017 01:51 PM

Can you take a thread dump and provide the output here?

./bin/nifi.sh dump /path/to/output/dump.txt