Support Questions

Why is my cluster memory lower than my physical memory?

Labels: Apache Ambari, Apache YARN

Created 10-27-2017 03:07 AM

We have a 2-node cluster (1 master with 4 CPUs and 16 GB RAM + 1 data node with 8 CPUs and 30 GB RAM). However, in the Ambari console I can see that the total cluster memory is only 22 GB. Is there a way to allocate more cluster memory (around 36 GB) out of the 46 GB of physical memory we have across the master and data node? Moreover, the number of containers is only 5, whereas 8 vcores are already available. I have attached a screenshot for your reference. Please suggest a way to improve the cluster resource utilization. Thank you in advance.

Created on 10-27-2017 06:36 AM - edited 08-18-2019 03:13 AM

I assume the question is about the total YARN memory.

This is because Ambari bases its calculations on the node with the smallest capacity, as it expects a homogeneous cluster.

But in this case, the cluster is heterogeneous: 1 master with 4 CPUs and 16 GB RAM + 1 data node with 8 CPUs and 30 GB RAM.

- Thus, Ambari picks the 16 GB node, assumes the 2nd node is the same size, and calculates YARN's NodeManager (NM) memory from that. I assume that both nodes run a NodeManager.

- I believe you would have 11 GB as the value of YARN/yarn.nodemanager.resource.memory-mb. That gives 22 GB (11 * 2) in total, which is more than the 16 GB of a single node. 16 * 2 = 32 GB, but Ambari reserves memory for processes that run outside YARN (e.g. the ResourceManager (RM), HBase). So a total of less than 32 GB is expected.
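The arithmetic above can be sketched in a few lines of Python. This is a simplified model, not Ambari's actual stack-advisor code: it takes the smallest NodeManager host's RAM, subtracts a fixed reserve, and applies the result to every NM host. The 5 GB reserve is a hypothetical value chosen only so the result matches the 11 GB per node mentioned above.

```python
# Simplified, hypothetical model of the "smallest node" sizing described above.
# Not Ambari's stack-advisor code; reserved_gb is an assumed stand-in for the
# memory kept back for non-YARN processes (OS, RM, HBase, ...).

def homogeneous_yarn_total_gb(host_ram_gb, reserved_gb):
    """Return (per-NM memory, cluster total) when every NM is sized like the smallest host."""
    per_nm = min(host_ram_gb) - reserved_gb   # the smallest host drives the value
    return per_nm, per_nm * len(host_ram_gb)  # same value applied to every NM host

hosts = [16, 30]  # master and data node RAM (GB) from the question
per_nm, total = homogeneous_yarn_total_gb(hosts, reserved_gb=5)
print(per_nm, total)  # 11 22
```

Under this model the 30 GB node offers YARN no more than the 16 GB node does, which is why the extra RAM on the data node does not show up in the total.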

It's a good idea to have homogeneous clusters.

===================================================================

However, you can use Config Groups in Ambari to handle hosts with different hardware profiles.

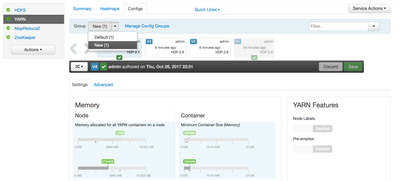

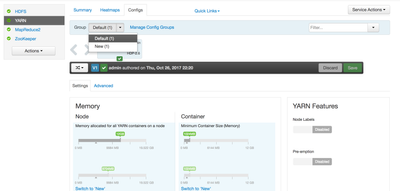

You can create 2 Config Groups (CGs), each containing one node. By default, there is a default CG, visible on the YARN configs page, that contains both nodes.

An example of how to create a CG, using HBase, is here: https://docs.hortonworks.com/HDPDocuments/Ambari-2.1.2.0/bk_Ambari_Users_Guide/content/_using_host_c...

I ran the following test to reduce the memory for one node. You can similarly bump up the memory for the 30 GB node.

- I started with a 2-node cluster where Ambari had given 12 GB to each NM, for a total capacity of 24 GB.

- Created a CG named 'New' and added the 2nd node to it. Then changed YARN/yarn.nodemanager.resource.memory-mb for the 2nd node under 'New' from ~12 GB to ~8 GB.

- Node 1 stayed under the 'default' CG with ~12 GB.

- Restarted the "Affected components" as prompted by Ambari after the above changes.

- The total memory changed from 24 GB to 20 GB.
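The test above comes down to per-group arithmetic: each config group sets its own yarn.nodemanager.resource.memory-mb for the hosts it contains, and the cluster total is the sum over all hosts. The Python sketch below is illustrative only. The host names are hypothetical, it assumes each host belongs to exactly one config group, and values are in MB because the property is expressed in megabytes.

```python
# Hypothetical model of Ambari Config Groups for YARN: each group applies its
# own yarn.nodemanager.resource.memory-mb (in MB) to the hosts it contains.
from typing import Dict

PROP = "yarn.nodemanager.resource.memory-mb"

def cluster_yarn_memory_mb(groups: Dict[str, dict]) -> int:
    """Sum NodeManager memory over every host in every config group."""
    total = 0
    for group in groups.values():
        total += group[PROP] * len(group["hosts"])  # same value for each host in the group
    return total

# Before: both hosts in 'default' at ~12 GB each.
before = {"default": {"hosts": ["node1", "node2"], PROP: 12 * 1024}}

# After: node2 moved to 'New' and lowered to ~8 GB; node1 keeps ~12 GB.
after = {
    "default": {"hosts": ["node1"], PROP: 12 * 1024},
    "New": {"hosts": ["node2"], PROP: 8 * 1024},
}

print(cluster_yarn_memory_mb(before) // 1024)  # 24
print(cluster_yarn_memory_mb(after) // 1024)   # 20
```

For the cluster in the question, the same idea would mean leaving the 16 GB master in 'default' and giving the 30 GB data node its own group with a larger value; how much to give it depends on what else runs on that node.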

Hope this helps.

Created 10-27-2017 03:39 AM

Ideally, Ambari should show your total RAM and CPU information, unless there is an issue with the Ambari agent.

Can you provide the `free -m` output from both nodes and add screenshots of the Ambari cluster information?
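To compare what the OS reports with what Ambari shows, the total memory can be read from `free -m` output. This is a minimal Python sketch; the sample text is made-up, illustrative output, and since the column layout of `free` can vary between versions, it relies only on the first number in the 'Mem:' row.

```python
def free_total_mb(free_m_output: str) -> int:
    """Return the 'total' column of the 'Mem:' row in `free -m` output."""
    for line in free_m_output.splitlines():
        fields = line.split()
        if fields and fields[0] == "Mem:":
            return int(fields[1])  # first number after 'Mem:' is total memory in MB
    raise ValueError("no 'Mem:' row found in free -m output")

# Illustrative (made-up) output for a 16 GB host.
sample = """\
              total        used        free      shared  buff/cache   available
Mem:          15885        6120        2311         402        7453        8990
Swap:          2047           0        2047
"""
print(free_total_mb(sample))  # 15885
```

On a node itself, the text could come from `subprocess.run(["free", "-m"], capture_output=True, text=True).stdout`.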

Created 11-01-2017 02:23 AM

Thanks very much for your detailed reply; it really helps!

Created 11-07-2017 12:38 AM

Thanks. Glad to know that it helped.