Support Questions

Does PutHDFS work with HDFS in HA?

- Labels: Apache Hadoop, Apache NiFi

Created 02-03-2016 03:56 PM

I have a NiFi flow, but the PutHDFS processor returns an error:

failed to invoke @OnScheduled method due to java.lang.IllegalArgumentException: java.net.UnknownHostException: provaha; processor will not be scheduled to run for 30 sec: java.lang.IllegalArgumentException: java.net.UnknownHostException: provaha

provaha is the correct reference (the HA nameservice name) for our HDFS High Availability setup.

Is there any particular configuration PutHDFS needs in order to work with HDFS in HA?

Created 02-03-2016 08:37 PM

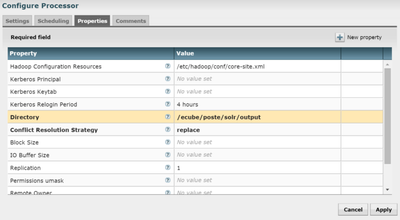

You're missing hdfs-site.xml in the config, which is where the NameNode (NN) HA details are defined. The config requires both hdfs-site.xml and core-site.xml.

That is, set "Hadoop Configuration Resources" to something similar to the following:

/etc/hadoop/2.3.4.0-3485/0/hdfs-site.xml,/etc/hadoop/2.3.4.0-3485/0/core-site.xml

Reference:

A file or comma separated list of files which contains the Hadoop file system configuration. Without this, Hadoop will search the classpath for a 'core-site.xml' and 'hdfs-site.xml' file or will revert to a default configuration.
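To see why core-site.xml alone is not enough, here is a minimal Python sketch (not NiFi's or Hadoop's code) that merges the property entries from a comma-separated list like the one above and reports which HA client keys are missing for a nameservice. The key names (dfs.nameservices, dfs.ha.namenodes.<ns>, dfs.namenode.rpc-address.<ns>.<nn>, dfs.client.failover.proxy.provider.<ns>) are the standard HDFS HA client settings; the function names and the simple "later file wins" merge are assumptions of this sketch, which also ignores final markers.

```python
import xml.etree.ElementTree as ET


def load_hadoop_resources(resources: str) -> dict:
    """Merge <property> name/value pairs from a comma-separated list of
    Hadoop XML files. In this sketch a later file simply overrides an
    earlier one; <final> markers are ignored."""
    props = {}
    for path in (p.strip() for p in resources.split(",")):
        if not path:
            continue
        root = ET.parse(path).getroot()
        for prop in root.iter("property"):
            name = prop.findtext("name")
            value = prop.findtext("value")
            if name is not None and value is not None:
                props[name.strip()] = value.strip()
    return props


def _split(value: str) -> list:
    # List-valued Hadoop settings are comma-separated.
    return [item.strip() for item in value.split(",") if item.strip()]


def missing_ha_keys(props: dict, nameservice: str) -> list:
    """Return the HA client keys that are absent for `nameservice`."""
    missing = []
    if nameservice not in _split(props.get("dfs.nameservices", "")):
        missing.append("dfs.nameservices")
    nn_key = f"dfs.ha.namenodes.{nameservice}"
    nn_ids = _split(props.get(nn_key, ""))
    if not nn_ids:
        missing.append(nn_key)
    for nn in nn_ids:
        rpc_key = f"dfs.namenode.rpc-address.{nameservice}.{nn}"
        if rpc_key not in props:
            missing.append(rpc_key)
    proxy_key = f"dfs.client.failover.proxy.provider.{nameservice}"
    if proxy_key not in props:
        missing.append(proxy_key)
    return missing
```

For example, missing_ha_keys(load_hadoop_resources("/etc/hadoop/conf/core-site.xml"), "provaha") reports dfs.nameservices and the other HA keys as missing when, as is typical, they are defined only in hdfs-site.xml.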

Created 02-03-2016 03:58 PM

Did you try passing /etc/hadoop/conf/core-site.xml to the PutHDFS processor, @Davide Isoardi?

Created on 02-03-2016 04:03 PM - edited 08-19-2019 03:16 AM

Yes @Artem Ervits, I'm passing the correct path to core-site.xml, but PutHDFS doesn't work.

Created 02-03-2016 04:04 PM

Created 06-16-2016 01:39 PM

Please provide the full path starting from the root directory (an absolute path), e.g. /etc/hadoop/conf/core-site.xml; then it should work fine.
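A quick way to check a "Hadoop Configuration Resources" value before entering it is to confirm that every entry is an absolute path to an existing, readable file. The helper below is a hypothetical sketch; run it on the NiFi host (ideally as the user NiFi runs as), since that is where the processor reads the files.

```python
import os


def check_config_resources(value: str) -> list:
    """Return the problems found in a comma-separated list of configuration
    file paths; an empty list means every entry looks usable."""
    problems = []
    for path in (p.strip() for p in value.split(",")):
        if not path:
            continue  # this sketch simply ignores stray commas
        if not os.path.isabs(path):
            problems.append(f"not an absolute path: {path}")
        elif not os.path.isfile(path):
            problems.append(f"not a file: {path}")
        elif not os.access(path, os.R_OK):
            problems.append(f"not readable: {path}")
    return problems
```

For instance, check_config_resources("etc/hadoop/conf/core-site.xml") flags the missing leading slash.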

Created 02-03-2016 04:10 PM

It looks like it cannot resolve the hostname 'provaha' to an IP address. Can you check whether 'provaha' is in your /etc/hosts file, or supply the FQDN instead?
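To run this check from the NiFi host, a short Python sketch can ask the local resolver (which consults /etc/hosts and DNS) whether a name maps to an address; a failed lookup here corresponds roughly to the java.net.UnknownHostException in the error. The function name is hypothetical.

```python
import socket


def resolves(host: str) -> bool:
    """True if the local resolver (/etc/hosts, DNS, ...) maps `host` to an
    IPv4 address; False if the lookup fails."""
    try:
        socket.gethostbyname(host)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False
```

Keep in mind that for an HA nameservice, resolves("provaha") returning False is not by itself a misconfiguration: the HDFS client maps a logical nameservice name to NameNodes using hdfs-site.xml rather than DNS.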

Created 02-03-2016 06:43 PM

That would probably work, but it defeats the purpose of HA. Hadoop is supposed to dynamically resolve 'provaha' to one of the physical nodes in the HA cluster. I wonder whether PutHDFS is failing to resolve the physical node from the HA name, or whether there is an error in the config (core-site.xml).
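The idea in this reply can be illustrated with a simplified Python model. It is not Hadoop's actual implementation, which also requires a dfs.client.failover.proxy.provider.<nameservice> entry and performs the failover between NameNodes itself. The function name and host names such as nn1.example.com are hypothetical.

```python
from urllib.parse import urlparse


def _split(value: str) -> list:
    # List-valued Hadoop settings are comma-separated.
    return [item.strip() for item in value.split(",") if item.strip()]


def namenode_candidates(uri: str, props: dict) -> list:
    """Simplified model of how an HDFS client interprets hdfs://<authority>.

    If the authority is a nameservice listed in dfs.nameservices, return the
    RPC addresses of its NameNodes (a real client then fails over between
    them). Otherwise the authority is treated as an ordinary host[:port],
    which must resolve via /etc/hosts or DNS.
    """
    authority = urlparse(uri).netloc
    if authority in _split(props.get("dfs.nameservices", "")):
        nn_ids = _split(props.get(f"dfs.ha.namenodes.{authority}", ""))
        keys = (f"dfs.namenode.rpc-address.{authority}.{nn}" for nn in nn_ids)
        return [props[key] for key in keys if key in props]
    return [authority]
```

With the HA properties loaded, namenode_candidates("hdfs://provaha", props) yields the two NameNode addresses; with an empty dict (no hdfs-site.xml), it yields ["provaha"], i.e. a plain hostname lookup, which is the lookup that fails with UnknownHostException.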

Created 06-16-2016 07:02 PM

core-site.xml has worked for me.