YARN - VCores max

- Labels: Apache YARN

Created on 12-03-2015 07:26 PM - edited 08-19-2019 05:44 AM

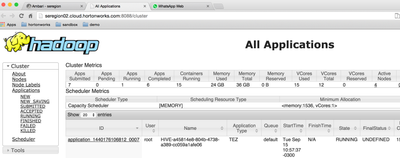

Can YARN allocate more vcores (containers) than the cluster's VCores Total (the sum of yarn.nodemanager.resource.cpu-vcores across all NodeManagers)?

In my tests, only memory limits whether applications are accepted and containers are executed. I expected allocation to be limited by both the memory and the vcores available.

See the attached screenshot, where "VCores used" is greater than "VCores total".

Created 12-03-2015 08:52 PM

DefaultResourceCalculator only takes memory into account. Here is a brief explanation of what you are seeing (relevant part bolded).

Pluggable resource-vector in YARN scheduler

The CapacityScheduler has the concept of a ResourceCalculator – a pluggable layer that is used for carrying out the math of allocations by looking at all the identified resources. This includes utilities to help make the following decisions:

- Does this node have enough resources of each resource-type to satisfy this request?

- How many containers can I fit on this node, when sorting a list of nodes with varying available resources?

There are two kinds of calculators currently available in YARN: the DefaultResourceCalculator and the DominantResourceCalculator.

The DefaultResourceCalculator only takes memory into account when doing its calculations. This is why CPU requirements are ignored when carrying out allocations in the CapacityScheduler by default. All the math of allocations is reduced to just examining the memory required by resource-requests and the memory available on the node that is being looked at during a specific scheduling-cycle.
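To make the effect concrete, here is a minimal Python sketch of the difference between a memory-only fit check and one that also looks at vcores. It is not YARN's actual code: the `Resource` class, the function names, and the node and request sizes are all hypothetical, chosen only to illustrate the behaviour described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """Hypothetical stand-in for a (memory, vcores) resource vector."""
    memory_mb: int
    vcores: int


def fits_memory_only(available: Resource, request: Resource) -> bool:
    # Memory-only check, mimicking what is described for DefaultResourceCalculator.
    return request.memory_mb <= available.memory_mb


def fits_all_resources(available: Resource, request: Resource) -> bool:
    # CPU-aware check: every resource type must fit.
    return (request.memory_mb <= available.memory_mb
            and request.vcores <= available.vcores)


def containers_that_fit(node: Resource, request: Resource, cpu_aware: bool) -> int:
    # Number of identical containers that fit on one node.
    # Assumes the request asks for at least 1 MB and 1 vcore.
    by_memory = node.memory_mb // request.memory_mb
    if not cpu_aware:
        return by_memory
    return min(by_memory, node.vcores // request.vcores)


# Assumed sizes, for illustration only.
node = Resource(memory_mb=16384, vcores=4)
request = Resource(memory_mb=1024, vcores=1)

memory_only_count = containers_that_fit(node, request, cpu_aware=False)
cpu_aware_count = containers_that_fit(node, request, cpu_aware=True)
print(memory_only_count, cpu_aware_count)  # prints "16 4"
```

With the memory-only check, 16 one-vcore containers land on a node that advertises 4 vcores, which is how "VCores used" can exceed "VCores total". In the CapacityScheduler the calculator is chosen with the `yarn.scheduler.capacity.resource-calculator` property in capacity-scheduler.xml; setting it to `org.apache.hadoop.yarn.util.resource.DominantResourceCalculator` makes CPU count as well.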

You can find more on this topic on our blog:

Created 12-03-2015 09:05 PM

Thanks @Shane Kumpf, I had been meaning to get this clarified for a while, and it is totally clear now.

Have you seen people using DominantResourceCalculator? That one makes much more sense to me.