Yarn container size flexible to satisfy what application ask for?

Labels: Apache Spark, Apache YARN

Created 04-25-2016 07:46 PM

I am tuning our YARN cluster, which hosts Tez, MapReduce and Spark-on-YARN.

I observed that during busy hours all YARN cluster memory is used up while lots of cores are free, which leads me to believe that we should decrease the minimum container size.

Meanwhile, lots of applications specify their memory, -xms=4GB etc; considering container overhead memory, would that not require a container size bigger than 4GB? If I set yarn.scheduler.minimum-allocation-mb = 4GB and the Spark executor size is 4GB as well, would YARN actually assign 2 containers/8GB to the Spark executor, or is YARN smart enough to allocate around 5GB?

Even in the HWX tutorial, I think something is not right:

In https://community.hortonworks.com/content/kbentry/14309/demystify-tez-tuning-step-by-step.html

hive.tez.container.size is a multiple of yarn.scheduler.minimum-allocation-mb. Why so?

If yarn.scheduler.maximum-allocation-mb = 24GB,

yarn.scheduler.minimum-allocation-mb = 4GB,

hive.tez.container.size = 5GB, would YARN not be smart enough to assign 5GB to a container to satisfy Tez's needs?

Created 04-26-2016 12:34 AM

First let me explain in a sentence or two how YARN works, then we go through the questions. I think people sometimes get confused by it all.

Essentially YARN provides a set of memory "slots" on the cluster. These are defined by "yarn.scheduler.minimum-allocation-mb". So let's assume we have 100GB of YARN memory: with a 1GB minimum-allocation-mb we have 100 "slots". If we set the minimum allocation to 4GB we have 25 slots.

Now this minimum allocation doesn't change application memory settings per se. Each application gets the memory it asks for, rounded up to the next multiple of the slot size. So if the minimum is 4GB and you ask for 4.5GB you will get 8GB. If you want less rounding error, make the minimum allocation size smaller. Or better, ask for a size that is an exact multiple of the slot size.
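The slot arithmetic described above can be sketched in a few lines of Python. This is only an illustration of the rounding rule as stated in this post; the function names `slot_count` and `normalize_request_mb` are made up for the example and are not part of any YARN API.

```python
import math


def slot_count(total_yarn_mb: int, min_alloc_mb: int) -> int:
    """Number of minimum-allocation 'slots' that fit into the cluster memory."""
    return total_yarn_mb // min_alloc_mb


def normalize_request_mb(requested_mb: int, min_alloc_mb: int, max_alloc_mb: int) -> int:
    """Round a request up to the next multiple of the minimum allocation.

    Hypothetical helper: it mirrors the rule described in the post, not the
    scheduler's actual code.
    """
    if requested_mb > max_alloc_mb:
        raise ValueError("request exceeds yarn.scheduler.maximum-allocation-mb")
    slots = max(1, math.ceil(requested_mb / min_alloc_mb))
    return slots * min_alloc_mb


# 100GB of YARN memory with 1GB and 4GB minimum allocations
print(slot_count(100 * 1024, 1024))  # 100 slots
print(slot_count(100 * 1024, 4096))  # 25 slots

# Asking for 4.5GB with a 4GB minimum allocation yields an 8GB container
print(normalize_request_mb(4608, 4096, 24576))  # 8192
```

With a 1GB minimum the same 4.5GB request would only be rounded up to 5GB, which is the "less rounding error" case.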

Applications then ask for a container size, usually defined as a parameter, AND they separately set the memory they actually use. YARN per se doesn't give a damn about what you do in your container UNLESS the process spawned by the container launcher grows bigger than the allocated container size, in which case it will shoot down this container.

So let's go through this step by step, shall we?

"I observed during busy hour, all Yarn cluster memory is used up while lots of cores are free--- which leads me to believe that we should decrease minimum container size."

What do you mean by cores being free? The ResourceManager settings? Normally cgroups are disabled anyway, so the best way to figure this out is to run top on some of the datanodes during busy hours. If you see CPU utilization below 80% when the cluster is running at full tilt, you should reduce container sizes (assuming your tasks don't need more).

"lots of applications specify their memory, -xms=4GB etc; considering container overhead memory, would not it requires container size bigger than 4G?"

Which applications? Normally you need the heap of the Java process PLUS around 10-25% overhead (the JVM has some off-heap overhead that still counts toward the memory consumption of the Linux process); otherwise YARN will simply shoot down the containers when it sees that the Linux process spawned by the container launcher is bigger than the memory limit for this container. That is the reason pretty much everything running on YARN has a container size in MB (for example 4096) and a Java command-line setting, for example -Xmx3600m.
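As a rough illustration of the heap-plus-overhead rule, the sketch below computes the largest heap that still fits into a container for a given overhead fraction. The helper name `max_heap_mb` is hypothetical, and treating the overhead as a fraction of the heap is an assumption made for the example.

```python
def max_heap_mb(container_mb: int, overhead_fraction: float) -> int:
    """Largest heap (MB) such that heap * (1 + overhead_fraction) <= container_mb.

    Assumption: off-heap overhead is modelled as a fraction of the heap size.
    """
    return int(container_mb / (1 + overhead_fraction))


container_mb = 4096
low = max_heap_mb(container_mb, 0.25)   # conservative: 25% overhead
high = max_heap_mb(container_mb, 0.10)  # optimistic: 10% overhead
print(f"heap between -Xmx{low}m and -Xmx{high}m for a {container_mb} MB container")
```

The -Xmx3600m example for a 4096 MB container falls inside the range this produces.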

"If I set yarn.scheduler.minimum-allocation-mb = 4GB,"

Min allocation by itself doesn't give you anything. As the name says, it just provides the slot size for containers. Essentially containers can be 4, 8, 12, 16 ... GB in size if you use this setting, whatever an application requests. If it requests anything smaller or in between, it gets the next bigger size. So if you request 5GB you get 8GB in your case.

"Spark executor size is 4GB as well, would it actually assign 2 containers/8GB to the Spark executor or yarn is smart enough to allocate around 5GB?"

Two things here:

a) If you want YARN to be able to allocate 5GB, just set "yarn.scheduler.minimum-allocation-mb=1024". In this case YARN could give out 5GB and there would be no effect on other applications. After all, they all have to ask for a specific amount of memory (you can find the sizes they ask for in the MapReduce2 and Hive settings in Ambari).

b) Spark is a bit different from all the others here. Instead of providing a YARN container size and JVM settings, it has something called memoryOverhead, for example spark.yarn.executor.memoryOverhead for the executors. You should essentially set the executor memory AND the memory overhead so that together they fit into one container.

Normally the default for the overhead is 384 MB, I think, so if you require 4096 MB for the executor, Spark will ask for roughly 4500 MB and get an 8GB container. Which, as you have noted, is hardly ideal.

To optimize that you have two options:

a) lower the YARN minimum container size so YARN CAN round up to 5GB

or better

b) set Spark memory AND memoryOverhead so that together they fit exactly into one container slot, similar to the way Tez and MapReduce do it.
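Option b) can be sketched as a small search for the largest executor memory that, together with its overhead, still fits into one slot. The overhead rule used here, max(384 MB, 10% of executor memory), is an assumption based on the figure mentioned elsewhere in this thread; check the default for your Spark version. The helper names are hypothetical.

```python
def spark_overhead_mb(executor_mb: int) -> int:
    """Assumed overhead rule: max(384 MB, 10% of executor memory)."""
    return max(384, executor_mb // 10)


def largest_executor_mb(slot_mb: int) -> int:
    """Largest executor memory whose memory + overhead fits into slot_mb."""
    executor_mb = slot_mb - 384  # overhead is never below 384 MB
    while executor_mb > 0 and executor_mb + spark_overhead_mb(executor_mb) > slot_mb:
        executor_mb -= 1
    return executor_mb


slot_mb = 4096  # yarn.scheduler.minimum-allocation-mb
executor_mb = largest_executor_mb(slot_mb)
print(f"spark.executor.memory={executor_mb}m")
print(f"spark.yarn.executor.memoryOverhead={spark_overhead_mb(executor_mb)}")
```

For a 4096 MB slot this suggests an executor of 3712 MB plus 384 MB overhead, whereas a 4096 MB executor plus overhead spills into a second slot.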

"yarn.scheduler.minimum-allocation-mb = 4GB,

hive.tez.container.size=5GB, would not Yarn smart enough to assign 5GB to a container to satisfy tez needs?"

As written above, no, and it has nothing to do with being smart. It's just that the name "minimum allocation" is a bit misleading. It's effectively the slot size. So if you want YARN to be able to deal out 5GB containers, set the minimum allocation size to 1GB. There is no real downside to this. It does NOT change container sizes for applications (since they all have their request settings anyway). The only downside is that some users in a shared cluster might reduce their container sizes heavily and take more CPU cycles than they should get. But the best way is to simply ask for clean container sizes. In the case of Spark, that means correctly setting the memoryOverhead and memory settings for drivers and executors.

Created 04-26-2016 12:58 AM

Please see my post below, sorry, posted it as answer.

Created 04-26-2016 12:58 AM

Ben,

Thank you for your reply.

"So if you request 5GB you get 8GB in your case."

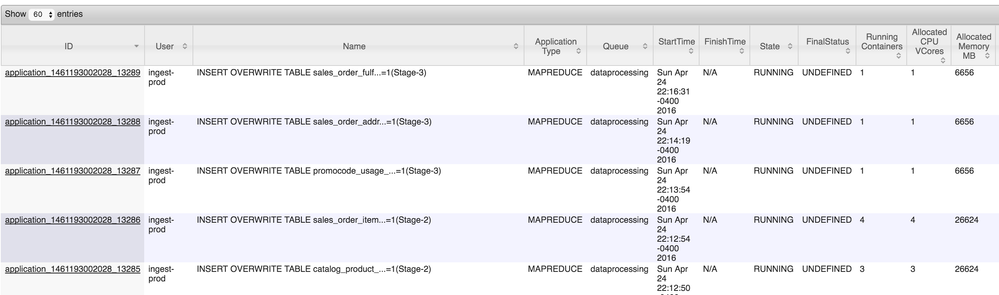

---- this is the most confusing part; I do not think it is true. Please refer to the screenshot I uploaded.

My cluster has yarn.scheduler.minimum-allocation-mb = 6.5GB. Look at the last 2 lines:

4 containers have 26624 MB and 3 containers also have 26624 MB,

meaning each container is 6.5GB and about 8.7GB respectively, not necessarily 6.5GB, 13GB...

Thoughts?

Created 04-26-2016 01:02 AM

To be honest, no idea. Are you sure that it's not just a temporary anomaly (i.e. that it was 4 containers a second ago and one stopped)? The ResourceManager UI sometimes does that.

It is necessarily 6.5/13/19.5/...

If 3 containers have 26GB, either one container got 2 slots or, much more likely in this case, the ResourceManager didn't update the total memory consumption after one of the containers stopped.

Created on 04-26-2016 02:06 AM - edited 08-19-2019 03:59 AM

It is a common case. Look at the first line here: 5 containers take 59GB.

Created 04-26-2016 08:35 AM

We can argue about that till the sun goes down, but YARN allocates containers as multiples of the min allocation size. And it doesn't spread this among containers: each single container gets 1x, 2x, 3x ... of the min allocation size. That's just how it is. (How else do you think YARN can distribute containers across nodes? Each NodeManager has a min and max allocation size, i.e. a number of slots.)

So in this case it allocated 59904 MB. That's exactly 9 slots of 6656 MB. Assuming this is still running and stable, my guess would be that

1) the driver has one slot

2) you have 4 executors that have two slots each

1 + 4 * 2 = 9 slots = 59904 MB.

As I said, change the settings of executor memory AND memoryOverhead so that together they stay below 6656 MB and you will see them use fewer slots.
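The arithmetic behind that guess can be checked quickly. The sketch below assumes the 6.5GB (6656 MB) minimum allocation and the 59904 MB total from this thread; the split into one driver and four executors is the guess made above, not something read from the cluster.

```python
MIN_ALLOC_MB = 6656    # 6.5GB minimum allocation reported in the thread
ALLOCATED_MB = 59904   # "Allocated Memory MB" shown for the 5-container application

# How many minimum-allocation slots does the application occupy?
slots, remainder = divmod(ALLOCATED_MB, MIN_ALLOC_MB)
print(slots, remainder)  # 9 slots, nothing left over

# Guessed layout: 1 slot for the driver, 2 slots for each of 4 executors
driver_slots = 1
executors = 4
slots_per_executor = 2
guessed_slots = driver_slots + executors * slots_per_executor
print(guessed_slots * MIN_ALLOC_MB)  # 59904
```

Five running containers (one driver plus four executors) occupying nine slots is consistent with each executor request being rounded up to two slots.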

Created 11-11-2019 12:00 PM

Which menu option in Ambari shows this information?

Created 04-26-2016 05:22 AM

From my experience, in case of Spark, "Running containers" denotes the requested number of executors plus one (for the driver), while "Allocated Memory MB" denotes the allocated memory required to satisfy the request in a multiple of "minimum-allocation-mb". My example:

minimum-allocation-mb=4096

num-executors: 100

executor-memory: 7G

spark.driver.memory=7G

---- Display ----

Running containers: 101

Allocated Mem MB=827392 (= 202 * 4096)

2*minimum-allocation-mb is used to accommodate 7G plus the overhead, which in the latest versions of Spark is max(384, executor/driver-memory*0.1); in my case about 700M.
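The numbers in this example can be reproduced with a short calculation. This is a sketch under the stated settings (4096 MB minimum allocation, 100 executors and one driver at 7G each) and the overhead rule quoted above; the function name `container_mb` is made up for illustration.

```python
import math

MIN_ALLOC_MB = 4096
MEMORY_MB = 7 * 1024          # executor-memory and spark.driver.memory are both 7G
NUM_CONTAINERS = 100 + 1      # 100 executors plus one driver


def container_mb(memory_mb: int, min_alloc_mb: int) -> int:
    """Memory plus overhead, rounded up to a multiple of the minimum allocation."""
    overhead_mb = max(384, memory_mb // 10)  # overhead rule quoted in the post
    requested_mb = memory_mb + overhead_mb
    return math.ceil(requested_mb / min_alloc_mb) * min_alloc_mb


per_container = container_mb(MEMORY_MB, MIN_ALLOC_MB)
print(per_container)                   # 8192, i.e. 2 * minimum-allocation-mb
print(NUM_CONTAINERS * per_container)  # 827392, matching the displayed value
```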

Created on 04-26-2016 02:04 PM - edited 08-19-2019 03:59 AM

Predrag, thanks!

Your sample explains the Spark screenshot I shared below; however, it cannot explain the Hive job below: in the last 2 lines, 3 containers and 4 containers are allocated the same amount of memory.