Yarn not connect to resource manager

Labels: Cloudera Data Platform (CDP)

Created 07-25-2022 07:44 AM

I'm trying to submit a Spark job to a YARN cluster with this command:

spark-submit --deploy-mode cluster --master yarn main.py

and I get this message:

INFO Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

The server address in the message (0.0.0.0) is not correct, even though yarn-site.xml contains the correct FQDN.

How can I solve this?
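One thing that may help narrow this down: 0.0.0.0 with port 8032 is the Hadoop default for the ResourceManager address, which is what a client falls back to when it does not load the yarn-site.xml that was edited. spark-submit finds the client-side Hadoop configuration through the HADOOP_CONF_DIR or YARN_CONF_DIR environment variables. Below is a minimal Python sketch that reports which ResourceManager address a given yarn-site.xml would yield for a non-HA client. The function names are hypothetical, and the sketch does not expand `${...}` variable references the way Hadoop does.

```python
import os
import xml.etree.ElementTree as ET

# Hadoop defaults that apply when no configuration file overrides them.
DEFAULT_RM_HOSTNAME = "0.0.0.0"
DEFAULT_RM_PORT = 8032


def load_hadoop_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def effective_rm_address(props):
    """Return the host:port a non-HA client would use for the ResourceManager.

    Simplification (assumption): ${...} references are not expanded.
    """
    explicit = props.get("yarn.resourcemanager.address")
    if explicit:
        return explicit
    host = props.get("yarn.resourcemanager.hostname", DEFAULT_RM_HOSTNAME)
    return f"{host}:{DEFAULT_RM_PORT}"


def find_yarn_site(environ=None):
    """Return the yarn-site.xml path implied by the environment, or None."""
    environ = os.environ if environ is None else environ
    for var in ("HADOOP_CONF_DIR", "YARN_CONF_DIR"):
        conf_dir = environ.get(var)
        if conf_dir:
            candidate = os.path.join(conf_dir, "yarn-site.xml")
            if os.path.isfile(candidate):
                return candidate
    return None


if __name__ == "__main__":
    path = find_yarn_site()
    if path is None:
        print("No yarn-site.xml found via HADOOP_CONF_DIR / YARN_CONF_DIR")
    else:
        with open(path, encoding="utf-8") as fh:
            props = load_hadoop_properties(fh.read())
        print(path, "->", effective_rm_address(props))
```

If the script reports that no file was found, or prints 0.0.0.0:8032, the shell running spark-submit is probably not pointing at the directory that holds the edited yarn-site.xml.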

Created 07-25-2022 10:50 AM

Have you added a YARN gateway role on the host where the command is run, so that it gets the basic client configuration needed to connect to the ResourceManager (RM)? If HA is enabled, also check that you have set yarn.resourcemanager.webapp.address.<rm-id>.
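The HA check can be sketched in Python as follows. The sketch assumes the yarn-site.xml properties have already been loaded into a plain dict; the function name and the `per_rm_keys` parameter are hypothetical. By default it only looks for the per-RM web address key mentioned above, and Hadoop may derive some addresses from other settings, so treat a reported key as something to inspect rather than as a definite error.

```python
def missing_ha_settings(props, per_rm_keys=("yarn.resourcemanager.webapp.address",)):
    """List per-RM keys that are absent when ResourceManager HA is enabled.

    props is a {name: value} dict of yarn-site.xml properties.
    per_rm_keys are base names checked as "<base>.<rm-id>" (an assumption
    about which keys matter for a given cluster).
    """
    if props.get("yarn.resourcemanager.ha.enabled", "false").lower() != "true":
        return []  # HA is off, nothing to check

    raw_ids = props.get("yarn.resourcemanager.ha.rm-ids", "")
    rm_ids = [rm_id.strip() for rm_id in raw_ids.split(",") if rm_id.strip()]
    if not rm_ids:
        return ["yarn.resourcemanager.ha.rm-ids"]

    missing = []
    for rm_id in rm_ids:
        for base in per_rm_keys:
            key = f"{base}.{rm_id}"
            if not props.get(key):
                missing.append(key)
    return missing


# Example with made-up host names: rm2 has no web address configured.
example = {
    "yarn.resourcemanager.ha.enabled": "true",
    "yarn.resourcemanager.ha.rm-ids": "rm1,rm2",
    "yarn.resourcemanager.webapp.address.rm1": "rm1.example.internal:8088",
}
print(missing_ha_settings(example))
```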

Created 07-25-2022 11:01 AM

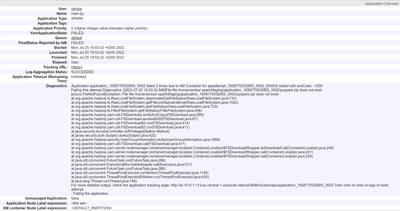

This image may be easier to read.

Created 07-25-2022 10:57 AM

Hi @rki_,

Yes, I have the gateway on a worker.

I tried running the command on the gateway instance, but it fails with this log:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/07/25 19:53:30 INFO SparkContext: Running Spark version 3.2.2

22/07/25 19:53:30 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

22/07/25 19:53:30 INFO ResourceUtils: ==============================================================

22/07/25 19:53:30 INFO ResourceUtils: No custom resources configured for spark.driver.

22/07/25 19:53:30 INFO ResourceUtils: ==============================================================

22/07/25 19:53:30 INFO SparkContext: Submitted application: main.py

22/07/25 19:53:30 INFO ResourceProfile: Default ResourceProfile created, executor resources: Map(cores -> name: cores, amount: 1, script: , vendor: , memory -> name: memory, amount: 1024, script: , vendor: , offHeap -> name: offHeap, amount: 0, script: , vendor: ), task resources: Map(cpus -> name: cpus, amount: 1.0)

22/07/25 19:53:30 INFO ResourceProfile: Limiting resource is cpus at 1 tasks per executor

22/07/25 19:53:30 INFO ResourceProfileManager: Added ResourceProfile id: 0

22/07/25 19:53:30 INFO SecurityManager: Changing view acls to: centos

22/07/25 19:53:30 INFO SecurityManager: Changing modify acls to: centos

22/07/25 19:53:30 INFO SecurityManager: Changing view acls groups to:

22/07/25 19:53:30 INFO SecurityManager: Changing modify acls groups to:

22/07/25 19:53:30 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(centos); groups with view permissions: Set(); users with modify permissions: Set(centos); groups with modify permissions: Set()

22/07/25 19:53:30 INFO Utils: Successfully started service 'sparkDriver' on port 45247.

22/07/25 19:53:30 INFO SparkEnv: Registering MapOutputTracker

22/07/25 19:53:30 INFO SparkEnv: Registering BlockManagerMaster

22/07/25 19:53:30 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/07/25 19:53:30 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/07/25 19:53:30 INFO SparkEnv: Registering BlockManagerMasterHeartbeat

22/07/25 19:53:30 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-9b0423bb-8967-4de8-9a20-2fa0c35025ed

22/07/25 19:53:30 INFO MemoryStore: MemoryStore started with capacity 408.9 MiB

22/07/25 19:53:30 INFO SparkEnv: Registering OutputCommitCoordinator

22/07/25 19:53:30 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/07/25 19:53:30 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://ip-10-0-1-113.eu-central-1.compute.internal:4040

22/07/25 19:53:31 INFO DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at /0.0.0.0:8032

22/07/25 19:53:31 INFO Client: Requesting a new application from cluster with 3 NodeManagers

22/07/25 19:53:31 INFO Configuration: resource-types.xml not found

22/07/25 19:53:31 INFO ResourceUtils: Unable to find 'resource-types.xml'.

22/07/25 19:53:31 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (60399 MB per container)

22/07/25 19:53:31 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

22/07/25 19:53:31 INFO Client: Setting up container launch context for our AM

22/07/25 19:53:31 INFO Client: Setting up the launch environment for our AM container

22/07/25 19:53:31 INFO Client: Preparing resources for our AM container

22/07/25 19:53:31 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

22/07/25 19:53:32 INFO Client: Uploading resource file:/tmp/spark-c0176ed8-8bb8-450e-8010-40b386a4b0c6/__spark_libs__5907380933161926429.zip -> file:/home/centos/.sparkStaging/application_1658770532855_0002/__spark_libs__5907380933161926429.zip

22/07/25 19:53:32 INFO Client: Uploading resource file:/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip -> file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip

22/07/25 19:53:32 INFO Client: Uploading resource file:/usr/local/lib/python3.6/site-packages/pyspark/python/lib/py4j-0.10.9.5-src.zip -> file:/home/centos/.sparkStaging/application_1658770532855_0002/py4j-0.10.9.5-src.zip

22/07/25 19:53:32 INFO Client: Uploading resource file:/tmp/spark-c0176ed8-8bb8-450e-8010-40b386a4b0c6/__spark_conf__6393843623349520327.zip -> file:/home/centos/.sparkStaging/application_1658770532855_0002/__spark_conf__.zip

22/07/25 19:53:32 INFO SecurityManager: Changing view acls to: centos

22/07/25 19:53:32 INFO SecurityManager: Changing modify acls to: centos

22/07/25 19:53:32 INFO SecurityManager: Changing view acls groups to:

22/07/25 19:53:32 INFO SecurityManager: Changing modify acls groups to:

22/07/25 19:53:32 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(centos); groups with view permissions: Set(); users with modify permissions: Set(centos); groups with modify permissions: Set()

22/07/25 19:53:32 INFO Client: Submitting application application_1658770532855_0002 to ResourceManager

22/07/25 19:53:32 INFO YarnClientImpl: Submitted application application_1658770532855_0002

22/07/25 19:53:33 INFO Client: Application report for application_1658770532855_0002 (state: FAILED)

22/07/25 19:53:33 INFO Client:

client token: N/A

diagnostics: Application application_1658770532855_0002 failed 2 times due to AM Container for appattempt_1658770532855_0002_000002 exited with exitCode: -1000

Failing this attempt.Diagnostics: [2022-07-25 19:53:32.848]File file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip does not exist

java.io.FileNotFoundException: File file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip does not exist

at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:733)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:1022)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:723)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:456)

at org.apache.hadoop.yarn.util.FSDownload.verifyAndCopy(FSDownload.java:269)

at org.apache.hadoop.yarn.util.FSDownload.access$000(FSDownload.java:67)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:414)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:411)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:411)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.doDownloadCall(ContainerLocalizer.java:248)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.call(ContainerLocalizer.java:241)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.call(ContainerLocalizer.java:229)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

For more detailed output, check the application tracking page: http://ip-10-0-1-113.eu-central-1.compute.internal:8088/cluster/app/application_1658770532855_0002 Then click on links to logs of each attempt.

. Failing the application.

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1658771612544

final status: FAILED

tracking URL: http://ip-10-0-1-113.eu-central-1.compute.internal:8088/cluster/app/application_1658770532855_0002

user: centos

22/07/25 19:53:33 INFO Client: Deleted staging directory file:/home/centos/.sparkStaging/application_1658770532855_0002

22/07/25 19:53:33 ERROR YarnClientSchedulerBackend: The YARN application has already ended! It might have been killed or the Application Master may have failed to start. Check the YARN application logs for more details.

22/07/25 19:53:33 ERROR SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: Application application_1658770532855_0002 failed 2 times due to AM Container for appattempt_1658770532855_0002_000002 exited with exitCode: -1000

Failing this attempt.Diagnostics: [2022-07-25 19:53:32.848]File file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip does not exist

java.io.FileNotFoundException: File file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip does not exist

at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:733)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:1022)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:723)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:456)

at org.apache.hadoop.yarn.util.FSDownload.verifyAndCopy(FSDownload.java:269)

at org.apache.hadoop.yarn.util.FSDownload.access$000(FSDownload.java:67)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:414)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:411)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:411)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.doDownloadCall(ContainerLocalizer.java:248)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.call(ContainerLocalizer.java:241)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.call(ContainerLocalizer.java:229)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

For more detailed output, check the application tracking page: http://ip-10-0-1-113.eu-central-1.compute.internal:8088/cluster/app/application_1658770532855_0002 Then click on links to logs of each attempt.

. Failing the application.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:97)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:64)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:220)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:581)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.lang.Thread.run(Thread.java:748)

22/07/25 19:53:33 INFO SparkUI: Stopped Spark web UI at http://ip-10-0-1-113.eu-central-1.compute.internal:4040

22/07/25 19:53:33 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

22/07/25 19:53:33 INFO YarnClientSchedulerBackend: Shutting down all executors

22/07/25 19:53:33 INFO YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down

22/07/25 19:53:33 INFO YarnClientSchedulerBackend: YARN client scheduler backend Stopped

22/07/25 19:53:33 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/07/25 19:53:33 INFO MemoryStore: MemoryStore cleared

22/07/25 19:53:33 INFO BlockManager: BlockManager stopped

22/07/25 19:53:33 INFO BlockManagerMaster: BlockManagerMaster stopped

22/07/25 19:53:33 WARN MetricsSystem: Stopping a MetricsSystem that is not running

22/07/25 19:53:33 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/07/25 19:53:33 INFO SparkContext: Successfully stopped SparkContext

Traceback (most recent call last):

File "/home/centos/spark/main.py", line 12, in <module>

spark = SparkSession.builder.getOrCreate()

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip/pyspark/sql/session.py", line 228, in getOrCreate

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip/pyspark/context.py", line 392, in getOrCreate

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip/pyspark/context.py", line 147, in __init__

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip/pyspark/context.py", line 209, in _do_init

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip/pyspark/context.py", line 329, in _initialize_context

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/py4j-0.10.9.5-src.zip/py4j/java_gateway.py", line 1586, in __call__

File "/usr/local/lib/python3.6/site-packages/pyspark/python/lib/py4j-0.10.9.5-src.zip/py4j/protocol.py", line 328, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.

: org.apache.spark.SparkException: Application application_1658770532855_0002 failed 2 times due to AM Container for appattempt_1658770532855_0002_000002 exited with exitCode: -1000

Failing this attempt.Diagnostics: [2022-07-25 19:53:32.848]File file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip does not exist

java.io.FileNotFoundException: File file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip does not exist

at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:733)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:1022)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:723)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:456)

at org.apache.hadoop.yarn.util.FSDownload.verifyAndCopy(FSDownload.java:269)

at org.apache.hadoop.yarn.util.FSDownload.access$000(FSDownload.java:67)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:414)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:411)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:411)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.doDownloadCall(ContainerLocalizer.java:248)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.call(ContainerLocalizer.java:241)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer$FSDownloadWrapper.call(ContainerLocalizer.java:229)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

For more detailed output, check the application tracking page: http://ip-10-0-1-113.eu-central-1.compute.internal:8088/cluster/app/application_1658770532855_0002 Then click on links to logs of each attempt.

. Failing the application.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:97)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:64)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:220)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:581)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.lang.Thread.run(Thread.java:748)

22/07/25 19:53:33 INFO ShutdownHookManager: Shutdown hook called

22/07/25 19:53:33 INFO ShutdownHookManager: Deleting directory /tmp/spark-c0176ed8-8bb8-450e-8010-40b386a4b0c6

22/07/25 19:53:33 INFO ShutdownHookManager: Deleting directory /tmp/spark-17e0ad88-9fa7-421d-913a-ffb898fe2367

I really don't understand what the problem could be.
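One detail in the log above may be worth a closer look: the staging uploads go to file:/home/centos/.sparkStaging/..., which is the local filesystem of the submitting host, and the NodeManager then fails to find pyspark.zip at that same file: path. That pattern would be consistent with the client resolving the default filesystem to the local one (the Hadoop default for fs.defaultFS is file:///) instead of HDFS, for example because core-site.xml was not picked up. This is a reading of the log, not a confirmed diagnosis. A small Python sketch that flags such lines in a saved log follows; the function name is hypothetical.

```python
import re

# Matches Spark's YARN client lines of the form
# "... Uploading resource <source-uri> -> <destination-uri>".
UPLOAD_RE = re.compile(r"Uploading resource (?P<src>\S+) -> (?P<dst>\S+)")


def local_staging_uploads(log_text):
    """Return destination URIs of staging uploads that use the file: scheme."""
    hits = []
    for line in log_text.splitlines():
        match = UPLOAD_RE.search(line)
        if match and match.group("dst").startswith("file:"):
            hits.append(match.group("dst"))
    return hits


# One line copied from the log in this thread.
sample = (
    "22/07/25 19:53:32 INFO Client: Uploading resource "
    "file:/usr/local/lib/python3.6/site-packages/pyspark/python/lib/pyspark.zip -> "
    "file:/home/centos/.sparkStaging/application_1658770532855_0002/pyspark.zip"
)
for uri in local_staging_uploads(sample):
    print("staged on local filesystem:", uri)
```

If every upload destination starts with file:, checking the fs.defaultFS value the client sees in core-site.xml would be a reasonable next step.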

Created 07-26-2022 02:40 AM

Hi,

Are you able to submit the job using YARN client mode (--deploy-mode client)?
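For comparing the two modes side by side, here is a minimal Python sketch that builds the spark-submit command line for each one. The helper name is hypothetical; the flags are the ones already used in this thread. Note that the YarnClientSchedulerBackend frames in the stack trace above suggest that the failing run was already using client mode, so it may be worth confirming which mode each attempt actually ran in.

```python
import shlex


def spark_submit_args(script, deploy_mode):
    """Build the argument list for a YARN submission in the given deploy mode."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError(f"unsupported deploy mode: {deploy_mode!r}")
    return ["spark-submit", "--master", "yarn", "--deploy-mode", deploy_mode, script]


# Print both variants so they can be run one after the other.
for mode in ("client", "cluster"):
    args = spark_submit_args("main.py", mode)
    print(" ".join(shlex.quote(arg) for arg in args))
```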