Yarn queue limits don't apply

- Labels: Apache YARN

Created 01-25-2017 02:22 PM

Hi,

I'm stuck with a problem, and it would be really great if someone could help me!

I'm running an HDP 2.5.0.0 cluster with the Capacity Scheduler. Let's say I have 4 queues defined under root: Q1, Q2, Q3 and Q4. Q1, Q2 and Q3 are leaf queues with identical settings: minimum capacity 20% and maximum capacity 40%. Q4 is a parent queue (minimum capacity 40%, maximum 100%) with 4 leaf queues under it, let's say Q41, Q42, Q43 and Q44 (minimum 25%, maximum 100% for all 4 sub-queues).

All queues have minimum user limit set to 100% and user limit factor set to 1.
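For readers who want to reproduce this layout, here is a minimal sketch that spells the description out as Capacity Scheduler properties. The property names follow the standard `yarn.scheduler.capacity.<queue-path>.*` pattern. The helper names (`queue_props`, `build_config`) are made up for illustration, and the values are only my reading of the description above, not the poster's actual `capacity-scheduler.xml`.

```python
def queue_props(path, capacity, max_capacity, min_user_limit=100, user_limit_factor=1):
    """Return the Capacity Scheduler properties for one queue (hypothetical helper)."""
    prefix = f"yarn.scheduler.capacity.{path}"
    return {
        f"{prefix}.capacity": str(capacity),
        f"{prefix}.maximum-capacity": str(max_capacity),
        f"{prefix}.minimum-user-limit-percent": str(min_user_limit),
        f"{prefix}.user-limit-factor": str(user_limit_factor),
    }


def build_config():
    """Assemble the queue tree described in the question (assumed values)."""
    props = {
        "yarn.scheduler.capacity.root.queues": "Q1,Q2,Q3,Q4",
        "yarn.scheduler.capacity.root.Q4.queues": "Q41,Q42,Q43,Q44",
    }
    # Q1..Q3: identical leaf queues, 20% guaranteed, 40% maximum.
    for name in ("Q1", "Q2", "Q3"):
        props.update(queue_props(f"root.{name}", 20, 40))
    # Q4: parent queue, 40% guaranteed, may grow to 100%.
    props.update(queue_props("root.Q4", 40, 100))
    # Q41..Q44: each gets 25% of Q4 and may grow to 100% of Q4.
    for name in ("Q41", "Q42", "Q43", "Q44"):
        props.update(queue_props(f"root.Q4.{name}", 25, 100))
    return props


if __name__ == "__main__":
    for key, value in sorted(build_config().items()):
        print(f"{key}={value}")
```

Note that the capacities of sibling queues add up to 100 at each level (20 + 20 + 20 + 40 under root, 4 x 25 under Q4).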

Issue:

When users submit jobs to Q1, Q2 and Q41 while the other queues are empty, I would expect Q1 and Q2 to each get at least 20% absolute capacity and Q4 at least 40%: roughly 25% (Q1), 25% (Q2) and 50% (Q41).

But this is not happening.

Q1 and Q2 always stay at 40%, and Q41 (and hence Q4) gets only 10% absolute capacity.

Any idea why this is happening?

Thanks.

Created on 01-26-2017 03:09 PM - edited 08-18-2019 06:28 AM

@Priyan S I tried to reproduce your issue, but couldn't (based on the given capacity configuration). I set all the properties based on your description and left everything else on default. With this setup the scheduler works as you expected.

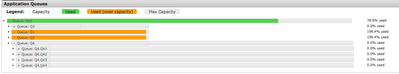

After submitting the first two jobs, they get the maximum available resource per queue (40% each):

Then, after submitting the job for Q41 it starts getting resources (it starts with just the AM container, and then - slowly - gets more and more containers):

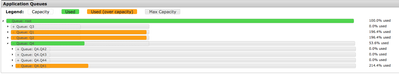

Finally - when preemption is enabled - the apps in Q1 and Q2 start losing resources and Q41 gets even more, and the final distribution will be like 25% - 25% - 50%, as you expected:
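The 40% / 40% and 25% / 25% / 50% figures can be checked with a small calculation. The sketch below is a simplification, only loosely modeled on how the preemption policy balances queues: it assumes every active queue has unlimited demand and splits the cluster among them in proportion to guaranteed capacity, capped at each queue's maximum. It is not the actual YARN code, and the function and variable names are made up for illustration.

```python
# Top-level queues from the question, in percent of the cluster.
GUARANTEED = {"Q1": 20.0, "Q2": 20.0, "Q3": 20.0, "Q4": 40.0}
MAXIMUM = {"Q1": 40.0, "Q2": 40.0, "Q3": 40.0, "Q4": 100.0}


def ideal_shares(guaranteed, maximum, active):
    """Split 100% among active queues, proportional to guaranteed capacity.

    Assumes unlimited demand in every active queue (a simplification).
    Capacity a queue cannot take because of its maximum is re-offered
    to the queues that still have headroom.
    """
    shares = {name: 0.0 for name in guaranteed}
    remaining = 100.0
    open_queues = set(active)
    while remaining > 1e-9 and open_queues:
        total_guaranteed = sum(guaranteed[q] for q in open_queues)
        handed_out = 0.0
        for q in list(open_queues):
            offer = remaining * guaranteed[q] / total_guaranteed
            accepted = min(offer, maximum[q] - shares[q])
            shares[q] += accepted
            handed_out += accepted
            if maximum[q] - shares[q] <= 1e-9:
                open_queues.discard(q)  # queue has reached its maximum
        if handed_out <= 1e-9:
            break
        remaining -= handed_out
    return shares


if __name__ == "__main__":
    # Only Q1 and Q2 busy: both are capped by their 40% maximum.
    print(ideal_shares(GUARANTEED, MAXIMUM, ["Q1", "Q2"]))
    # Q1, Q2 and Q4 (via Q41) busy: 25 / 25 / 50.
    print(ideal_shares(GUARANTEED, MAXIMUM, ["Q1", "Q2", "Q4"]))
```

With Q3 idle, its 20% is shared 1:1:2 among Q1, Q2 and Q4, which gives the 25% / 25% / 50% split described above.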

This means that the problem is most likely not caused by the parameters you've provided in your question. I'd suggest checking other parameters (you can get some of them easily from the ResourceManager Scheduler UI). The most suspicious thing is that the apps in Q41 get so few resources, so I'd start there. Here are a few things that I can think of:

- Max Application Master Resources

  - If you submit many small applications to Q41 (and big applications to Q1 and Q2), this might cause them to hang

  - also: Max Application Master Resources Per User

- User Limit Factor

  - The other way around: if you submit just one giant application to Q41

- Preemption parameters (mentioned in the previous comments)

  - Maybe preemption is not set up properly, although I also tried the same setup with preemption disabled, and it worked exactly the same way, except the balancing phase took longer

  - total_preemption_per_round

  - max_wait_before_kill

  - etc.

- Node labels

  - You didn't mention in your question if you have node labels configured in your cluster. They can change the scheduling quite drastically.
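To make the User Limit Factor point concrete, here is a rough calculation under two assumptions: that a nested queue's absolute capacity is the product of the capacities along its path, and that a single user can take at most the queue's configured capacity times the user limit factor (the documented meaning of `user-limit-factor`). The function names are made up for illustration. Whether this explains the 10% from the question depends on how many distinct users submit to Q41, which the thread does not say.

```python
def absolute_capacity(path_capacities):
    """Absolute capacity (percent of cluster) of a queue.

    path_capacities lists the configured capacity of each queue on the
    path from the first level under root down to the queue itself.
    """
    result = 100.0
    for percent in path_capacities:
        result *= percent / 100.0
    return result


def single_user_cap(queue_abs_capacity, user_limit_factor, queue_abs_max):
    """Upper bound for one user: capacity x factor, never above the queue maximum."""
    return min(queue_abs_capacity * user_limit_factor, queue_abs_max)


if __name__ == "__main__":
    q1 = absolute_capacity([20])        # Q1 sits directly under root
    q41 = absolute_capacity([40, 25])   # Q41 gets 25% of Q4's 40%
    print(f"Q1 absolute capacity:  {q1:.1f}%")
    print(f"Q41 absolute capacity: {q41:.1f}%")
    # With user-limit-factor = 1, one user stays near the configured capacity.
    print(f"Single-user cap in Q1:  {single_user_cap(q1, 1, 40.0):.1f}%")
    print(f"Single-user cap in Q41: {single_user_cap(q41, 1, 100.0):.1f}%")
```

Under these assumptions a single user in Q41 would be held at about 10% of the cluster, however idle the rest of Q4 is. Raising the factor (for example to 4 for Q41) would lift that per-user bound.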

If none of this helps, you could also check the logs of the Capacity Scheduler for further information.
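Besides the UI and the logs, the effective queue values can be read from the ResourceManager REST API at `/ws/v1/cluster/scheduler`. The sketch below flattens that response into one row per queue. The field names (`absoluteCapacity`, `absoluteMaxCapacity`, `absoluteUsedCapacity`, `numApplications`, `userLimitFactor`) are the ones I'd expect in the Capacity Scheduler response, but treat them as assumptions and check them against your version. The host name in the example is a placeholder.

```python
import json
from urllib.request import Request, urlopen


def fetch_scheduler_info(rm_url):
    """Fetch the scheduler tree from the ResourceManager (rm_url is a placeholder)."""
    request = Request(f"{rm_url}/ws/v1/cluster/scheduler",
                      headers={"Accept": "application/json"})
    with urlopen(request) as response:
        return json.load(response)["scheduler"]["schedulerInfo"]


def flatten_queues(info, parent="root"):
    """Walk the nested queue tree and return one dict per queue."""
    rows = []
    children = (info.get("queues") or {}).get("queue", [])
    for queue in children:
        path = f"{parent}.{queue['queueName']}"
        rows.append({
            "path": path,
            "absoluteCapacity": queue.get("absoluteCapacity"),
            "absoluteMaxCapacity": queue.get("absoluteMaxCapacity"),
            "absoluteUsedCapacity": queue.get("absoluteUsedCapacity"),
            "numApplications": queue.get("numApplications"),
            # Usually only reported for leaf queues; None otherwise.
            "userLimitFactor": queue.get("userLimitFactor"),
        })
        rows.extend(flatten_queues(queue, path))
    return rows


if __name__ == "__main__":
    # Replace with your own ResourceManager address.
    info = fetch_scheduler_info("http://resourcemanager.example.com:8088")
    for row in flatten_queues(info):
        print(row)
```

Comparing `absoluteUsedCapacity` with `absoluteCapacity` and `absoluteMaxCapacity` for Q41 while the jobs are running should show whether the queue is stuck at its guaranteed share.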

Created 10-12-2017 08:18 PM

@gnovak @tuxnet Would resource sharing still work if ACLs are configured for separate tenant queues? If ACLs are different for Q1 and Q2, will it still support elasticity and preemption?

Could you also please share the workload/application details that you used for these experiments? I am trying to run similar experiments on elasticity and preemption with the Capacity Scheduler. I am using a simple Spark word count application on a large file, but I am not able to get a feel for resource sharing among queues with this application.

Thanks in advance.
