Zeppelin livy interpreter not working with Kerberos cluster on HDP 2.5.3

Labels: Apache Spark, Apache Zeppelin

Created on 12-30-2016 03:59 AM - edited 08-19-2019 05:00 AM

The cluster runs HDP 2.5.3 and is Kerberized with an MIT KDC, with OpenLDAP as the directory service. Ranger and Atlas are installed and working properly.

Zeppelin is installed on node5 and the Livy server on node1.

I enabled LDAP for user authentication with the following Shiro config. Login with an LDAP user works fine.

    [users]
    # List of users with their password allowed to access Zeppelin.
    # To use a different strategy (LDAP / Database / ...) check the shiro doc at http://shiro.apache.org/configuration.html#Configuration-INISections
    #admin = password1
    #user1 = password2, role1, role2
    #user2 = password3, role3
    #user3 = password4, role2

    # Sample LDAP configuration, for user Authentication, currently tested for single Realm
    [main]
    #activeDirectoryRealm = org.apache.zeppelin.server.ActiveDirectoryGroupRealm
    #activeDirectoryRealm.systemUsername = CN=Administrator,CN=Users,DC=HW,DC=EXAMPLE,DC=COM
    #activeDirectoryRealm.systemPassword = Password1!
    #activeDirectoryRealm.hadoopSecurityCredentialPath = jceks://user/zeppelin/zeppelin.jceks
    #activeDirectoryRealm.searchBase = CN=Users,DC=HW,DC=TEST,DC=COM
    #activeDirectoryRealm.url = ldap://ad-nano.test.example.com:389
    #activeDirectoryRealm.groupRolesMap = ""
    #activeDirectoryRealm.authorizationCachingEnabled = true
    ldapRealm = org.apache.shiro.realm.ldap.JndiLdapRealm
    ldapRealm.userDnTemplate = uid={0},ou=Users,dc=field,dc=hortonworks,dc=com
    ldapRealm.contextFactory.url = ldap://node5:389
    ldapRealm.contextFactory.authenticationMechanism = SIMPLE
    sessionManager = org.apache.shiro.web.session.mgt.DefaultWebSessionManager
    securityManager.sessionManager = $sessionManager
    # 86,400,000 milliseconds = 24 hour
    securityManager.sessionManager.globalSessionTimeout = 86400000
    shiro.loginUrl = /api/login

    [urls]
    # anon means the access is anonymous.
    # authcBasic means Basic Auth Security
    # To enfore security, comment the line below and uncomment the next one
    /api/version = anon
    #/** = anon
    /** = authc

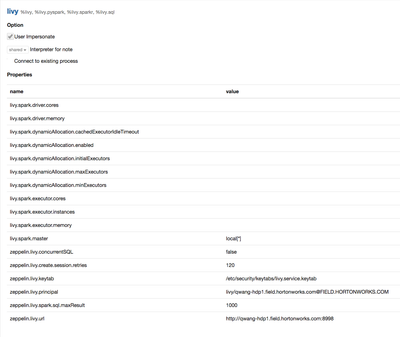

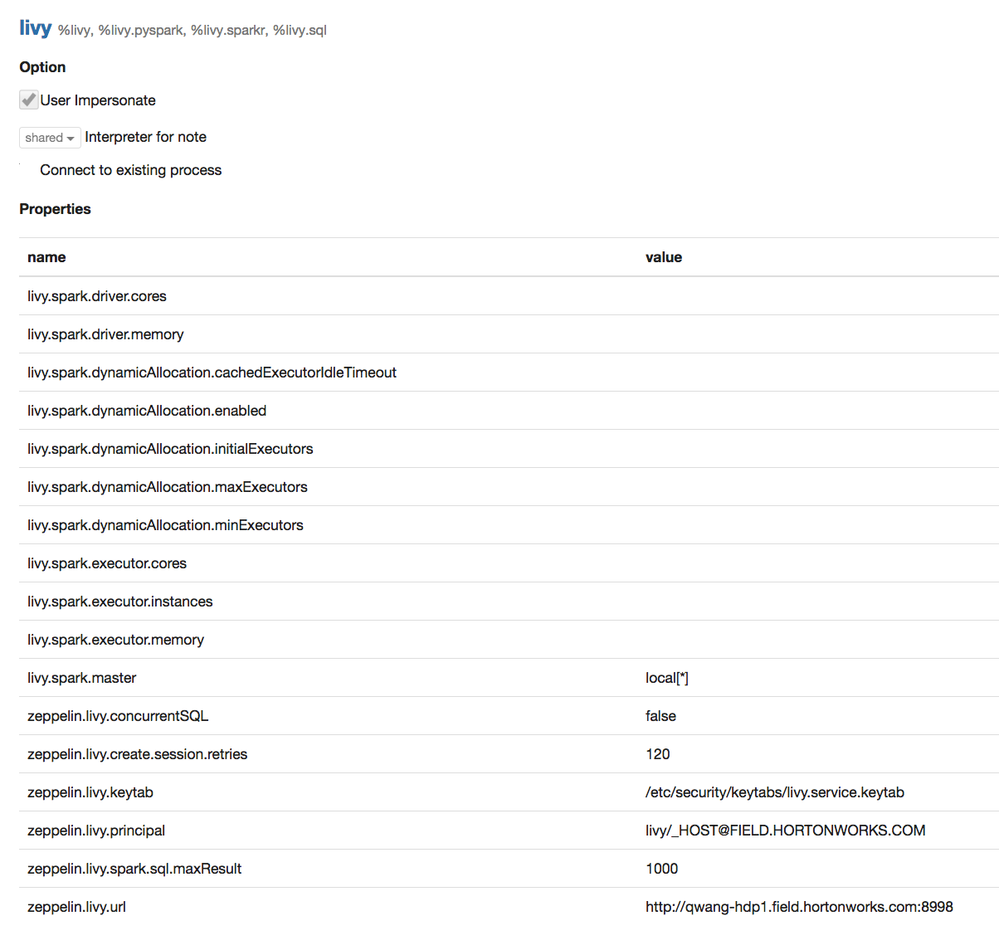

I then tried to configure the Livy interpreter in Zeppelin with the following settings. Please note that the Kerberos keytab was copied from node1, and the principal also uses node1's hostname.

However, I keep getting a Connection refused error in Zeppelin when running sc.version:

    %livy sc.version

    java.net.ConnectException: Connection refused
        at java.net.PlainSocketImpl.socketConnect(Native Method)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
        at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
        at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
        at java.net.Socket.connect(Socket.java:589)
        at org.apache.thrift.transport.TSocket.open(TSocket.java:182)
        at org.apache.zeppelin.interpreter.remote.ClientFactory.create(ClientFactory.java:51)
        at org.apache.zeppelin.interpreter.remote.ClientFactory.create(ClientFactory.java:37)
        at org.apache.commons.pool2.BasePooledObjectFactory.makeObject(BasePooledObjectFactory.java:60)
        at org.apache.commons.pool2.impl.GenericObjectPool.create(GenericObjectPool.java:861)
        at org.apache.commons.pool2.impl.GenericObjectPool.borrowObject(GenericObjectPool.java:435)
        at org.apache.commons.pool2.impl.GenericObjectPool.borrowObject(GenericObjectPool.java:363)
        at org.apache.zeppelin.interpreter.remote.RemoteInterpreterProcess.getClient(RemoteInterpreterProcess.java:189)
        at org.apache.zeppelin.interpreter.remote.RemoteInterpreter.init(RemoteInterpreter.java:173)
        at org.apache.zeppelin.interpreter.remote.RemoteInterpreter.getFormType(RemoteInterpreter.java:338)
        at org.apache.zeppelin.interpreter.LazyOpenInterpreter.getFormType(LazyOpenInterpreter.java:105)
        at org.apache.zeppelin.notebook.Paragraph.jobRun(Paragraph.java:262)
        at org.apache.zeppelin.scheduler.Job.run(Job.java:176)
        at org.apache.zeppelin.scheduler.RemoteScheduler$JobRunner.run(RemoteScheduler.java:328)
        at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)
        at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)

Does this error mean that SSL must be enabled?
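The trace above fails in `TSocket.open`, which is a plain TCP connect, so a refused connection usually means that nothing is listening on the target host and port rather than that SSL is missing. A minimal sketch for checking this from the Zeppelin host is shown below; the function name `can_connect` is made up for illustration, and the host and port you test are assumptions you need to fill in for your own cluster.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```

For example, `can_connect("node1", 8998)` would test the Livy server on node1, assuming it listens on Livy's default port 8998. If this returns False, the problem is at the network or process level, before any TLS or Kerberos negotiation takes place.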

Created 01-04-2017 03:06 AM

I finally found the resolution: because Ranger KMS is installed on this cluster, the livy user also needs to be added as a proxy user for Ranger KMS.

Add the following in Ambari => Ranger KMS => custom core site:

    hadoop.kms.proxyuser.livy.hosts=*
    hadoop.kms.proxyuser.livy.users=*
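The two properties follow the `hadoop.kms.proxyuser.<user>.hosts` / `.users` naming pattern, so the same pair can be generated for any other service user that needs to impersonate end users against KMS. The helper below is only an illustrative sketch; `kms_proxyuser_properties` is a hypothetical name and not part of Ambari or Ranger.

```python
def kms_proxyuser_properties(user: str, hosts: str = "*", users: str = "*") -> dict:
    """Build the two KMS proxy-user properties for a service user."""
    prefix = f"hadoop.kms.proxyuser.{user}"
    return {f"{prefix}.hosts": hosts, f"{prefix}.users": users}


# Print the pair for the livy user in key=value form.
for key, value in kms_proxyuser_properties("livy").items():
    print(f"{key}={value}")
```

The wildcard `*` matches what was used in this thread; passing a specific host name for `hosts` would be a tighter setting if your security policy requires it.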

Created 01-06-2017 07:01 PM

I also ran into several of the issues above, but on 2.5.0. The two major things I changed on top of what is listed above are:

Add these items in Ambari:

livy.conf

livy.server.launch.kerberos.keytab /etc/security/keytabs/livy.service.keytab

livy.server.launch.kerberos.principal livy/_HOST@REALM

livy-env.sh

export HADOOP_HOME=/usr/hdp/current/hadoop-client

I was getting 401 errors from Zeppelin to Livy:

WARN [2017-01-06 11:07:29,393] ({pool-2-thread-4} HttpAuthenticator.java[generateAuthResponse]:207) - NEGOTIATE authentication error: No valid credentials provided (Mechanism level: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7) - UNKNOWN_SERVER))

ERROR [2017-01-06 11:07:29,407] ({pool-2-thread-4} LivyHelper.java[createSession]:128) - Error getting session for user org.springframework.web.client.RestClientException: Error running rest call; nested exception is org.springframework.web.client.HttpClientErrorException: 401 Authentication required
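`UNKNOWN_SERVER` means the KDC has no principal matching the service name the client asked for, which often comes down to a host name mismatch. The sketch below shows how a principal written with the `_HOST` placeholder, such as `livy/_HOST@REALM` above, would resolve on a given machine, assuming the usual Hadoop convention that `_HOST` stands for the lower-cased fully qualified domain name. `expand_principal` is a hypothetical helper, not a Livy or Hadoop API.

```python
import socket
from typing import Optional


def expand_principal(principal: str, fqdn: Optional[str] = None) -> str:
    """Replace the _HOST placeholder with a lower-cased host name."""
    # Fall back to this machine's FQDN when no host name is given.
    host = (fqdn or socket.getfqdn()).lower()
    return principal.replace("_HOST", host)
```

Running `expand_principal("livy/_HOST@REALM")` on the Livy host and comparing the result with the principals stored in the keytab (for example via `klist -kt`) is one way to spot such a mismatch.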

Created 01-26-2017 01:57 PM

I don't really have an answer but I do have some more information.

I see that zeppelin contacts livy, authenticates successfully and that livy replies with a:

Set-Cookie: hadoop.auth="u=zeppelin..."; HttpOnly

However I don't ever see that cookie returned.

As far as I can see the livy interpreter should send the cookie on every call to livy. It uses org.springframework.web.client.RestTemplate to handle communication between itself and the livy server and I can see that that framework can handle cookies but I also see that the cookie is missing.
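To make the expected round trip concrete, here is a small Python sketch of what a client would have to send back after receiving that `Set-Cookie` header. It is not the interpreter's actual code (which is Java and uses `RestTemplate`); it only illustrates the header handling with the standard library, using the truncated cookie value exactly as quoted above.

```python
from http.cookies import SimpleCookie


def cookie_header_from_set_cookie(set_cookie: str) -> str:
    """Turn a Set-Cookie header value into the Cookie header to send back."""
    jar = SimpleCookie()
    jar.load(set_cookie)
    # Attributes such as HttpOnly are not echoed back; only name=value pairs are.
    # coded_value keeps the value as it appeared on the wire, quotes included.
    return "; ".join(f"{name}={morsel.coded_value}" for name, morsel in jar.items())


header = cookie_header_from_set_cookie('hadoop.auth="u=zeppelin..."; HttpOnly')
print(header)
```

If the follow-up requests to Livy carry no `Cookie: hadoop.auth=...` header of this shape, each call has to go through the full SPNEGO negotiation again.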

Created 02-03-2017 02:27 PM

Hello,

Did you figure out the solution?

I have been working on a similar configuration for a couple of weeks and I'm still getting this error:

    %livy.spark sc.version
    Cannot start spark.

I have debug enabled on livy but don't see any specific error.

In zeppelin-interpreter-livy log I see:

ERROR [2017-02-03 15:07:47,491] ({pool-2-thread-7} LivyHelper.java[createSession]:128) - Error getting session for user

java.lang.Exception: Cannot start spark.

Interestingly, I see a Spark job created in the YARN history under my user via livy-session, with the status finished/succeeded most of the time, so from that side it looks correct. Unfortunately, there is still no result in the Zeppelin notebook.

I would really appreciate any suggestions from those who succeeded :)

Kind regards, Michał

Created 02-03-2017 07:11 PM

Michal, my problem was related to the KMS proxy user and was resolved by adding livy as a proxy user. My cluster was secured with Kerberos, Ranger, and KMS. I am not sure whether yours is similar. You may want to start a new thread with more details.

Created 02-04-2017 03:02 PM

The problem isn't in Zeppelin; it is in Livy. Check livy.out: you may see a timeout connecting to Hive.

Created 02-08-2017 12:20 PM

I now have a working Livy, or at least sc.version works.

After trying everything I could find with livy 0.2.0 (the version in 2.5.0) I decided to give 0.3.0 a try. I believe that the problem is caused by a bug in spark 1.6.2 when connecting to the metadata store.

After compiling livy 0.3.0 with hadoop 2.7.3 and spark 2.0.0, and installing it beside 0.2.0 I had problems creating credentials for the HTTP principal. I solved that by using the hadoop jars from livy 0.2.0 instead of those from the build.
