Support Questions


ambari-agent connection refused after host reboot

Labels: Apache Ambari

Created on 05-24-2019 02:18 PM - edited 08-17-2019 03:18 PM


I have a single-node, Ambari-managed cluster that was working correctly: when the system restarted, the Ambari server, the host agent, and all components started automatically.

I switched to a public IP and a different hostname, and after solving the FQDN problems, auto start no longer works after a reboot. The Ambari server and agent start automatically, but the heartbeat is lost and the ambari-agent log shows "connection refused". If I restart ambari-agent manually, it connects correctly and I can start services.

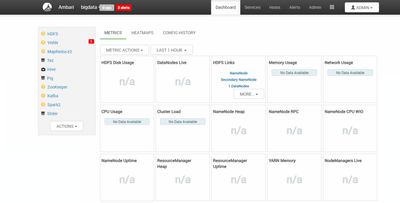

This is the Ambari server UI just after rebooting.

The tail of the ambari-agent log is shown below:

ERROR 2019-05-24 12:28:47,235 script_alert.py:119 - [Alert][hive_metastore_process] Failed with result CRITICAL: ['Metastore on bigdata.es failed (Traceback (most recent call last):\n File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/alerts/alert_hive_metastore.py", line 200, in execute\n timeout_kill_strategy=TerminateStrategy.KILL_PROCESS_TREE,\n File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__\n self.env.run()\n File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run\n self.run_action(resource, action)\n File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action\n provider_action()\n File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 262, in action_run\n tries=self.resource.tries, try_sleep=self.resource.try_sleep)\n File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 72, in inner\n result = function(command, **kwargs)\n File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 102, in checked_call\n tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy)\n File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 150, in _call_wrapper\n result = _call(command, **kwargs_copy)\n File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 303, in _call\n raise ExecutionFailed(err_msg, code, out, err)\nExecutionFailed: Execution of \'export HIVE_CONF_DIR=\'/usr/hdp/current/hive-metastore/conf/conf.server\' ; hive --hiveconf hive.metastore.uris=thrift://bigdata.es:9083 --hiveconf hive.metastore.client.connect.retry.delay=1 --hiveconf hive.metastore.failure.retries=1 --hiveconf hive.metastore.connect.retries=1 --hiveconf hive.metastore.client.socket.timeout=14 --hiveconf hive.execution.engine=mr -e \'show databases;\'\' returned 1. 
Logging initialized using configuration in file:/etc/hive/2.6.3.0-71/0/conf.server/hive-log4j.properties\nException in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient\n\tat org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:547)\n\tat org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)\n\tat org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:625)\n\tat sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\n\tat sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\n\tat sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\n\tat java.lang.reflect.Method.invoke(Method.java:498)\n\tat org.apache.hadoop.util.RunJar.run(RunJar.java:233)\n\tat org.apache.hadoop.util.RunJar.main(RunJar.java:148)\nCaused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient\n\tat org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1566)\n\tat org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:92)\n\tat org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:138)\n\tat org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:110)\n\tat org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3510)\n\tat org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3542)\n\tat org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:528)\n\t... 
8 more\nCaused by: java.lang.reflect.InvocationTargetException\n\tat sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)\n\tat sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)\n\tat sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)\n\tat java.lang.reflect.Constructor.newInstance(Constructor.java:423)\n\tat org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1564)\n\t... 14 more\nCaused by: MetaException(message:Could not connect to meta store using any of the URIs provided. Most recent failure: org.apache.thrift.transport.TTransportException: java.net.ConnectException: Conexi\xc3\xb3n rehusada (Connection refused)\n\tat org.apache.thrift.transport.TSocket.open(TSocket.java:226)\n\tat org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:487)\n\tat org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:282)\n\tat org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:76)\n\tat sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)\n\tat sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)\n\tat sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)\n\tat java.lang.reflect.Constructor.newInstance(Constructor.java:423)\n\tat org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1564)\n\tat org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:92)\n\tat org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:138)\n\tat org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:110)\n\tat org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3510)\n\tat 
org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3542)\n\tat org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:528)\n\tat org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:681)\n\tat org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:625)\n\tat sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\n\tat sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\n\tat sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\n\tat java.lang.reflect.Method.invoke(Method.java:498)\n\tat org.apache.hadoop.util.RunJar.run(RunJar.java:233)\n\tat org.apache.hadoop.util.RunJar.main(RunJar.java:148)\nCaused by: java.net.ConnectException: Conexi\xc3\xb3n rehusada (Connection refused)\n\tat java.net.PlainSocketImpl.socketConnect(Native Method)\n\tat java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)\n\tat java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)\n\tat java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)\n\tat java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)\n\tat java.net.Socket.connect(Socket.java:589)\n\tat org.apache.thrift.transport.TSocket.open(TSocket.java:221)\n\t... 22 more\n)\n\tat org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:534)\n\tat org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:282)\n\tat org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:76)\n\t... 19 more\n)']

INFO 2019-05-24 12:28:58,095 logger.py:71 - call[['test', '-w', '/dev']] {'sudo': True, 'quiet': False, 'timeout': 5}

INFO 2019-05-24 12:28:58,108 logger.py:71 - call returned (0, '')

INFO 2019-05-24 12:28:58,119 logger.py:71 - call[['test', '-w', '/']] {'sudo': True, 'quiet': False, 'timeout': 5}

INFO 2019-05-24 12:28:58,131 logger.py:71 - call returned (0, '')

INFO 2019-05-24 12:28:58,143 logger.py:71 - call[['test', '-w', '/Datos']] {'sudo': True, 'quiet': False, 'timeout': 5}

INFO 2019-05-24 12:28:58,154 logger.py:71 - call returned (0, '')

ERROR 2019-05-24 12:29:39,995 script_alert.py:119 - [Alert][hive_webhcat_server_status] Failed with result CRITICAL: ['Connection failed to http://bigdata.es:50111/templeton/v1/status?user.name=ambari-qa + \nTraceback (most recent call last):\n File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/alerts/alert_webhcat_server.py", line 190, in execute\n url_response = urllib2.urlopen(query_url, timeout=connection_timeout)\n File "/usr/lib/python2.7/urllib2.py", line 154, in urlopen\n return opener.open(url, data, timeout)\n File "/usr/lib/python2.7/urllib2.py", line 429, in open\n response = self._open(req, data)\n File "/usr/lib/python2.7/urllib2.py", line 447, in _open\n \'_open\', req)\n File "/usr/lib/python2.7/urllib2.py", line 407, in _call_chain\n result = func(*args)\n File "/usr/lib/python2.7/urllib2.py", line 1228, in http_open\n return self.do_open(httplib.HTTPConnection, req)\n File "/usr/lib/python2.7/urllib2.py", line 1198, in do_open\n raise URLError(err)\nURLError: <urlopen error [Errno 111] Conexi\xc3\xb3n rehusada>\n']

ERROR 2019-05-24 12:29:40,000 script_alert.py:119 - [Alert][yarn_nodemanager_health] Failed with result CRITICAL: ['Connection failed to http://bigdata.es:8042/ws/v1/node/info (Traceback (most recent call last):\n File "/var/lib/ambari-agent/cache/common-services/YARN/2.1.0.2.0/package/alerts/alert_nodemanager_health.py", line 171, in execute\n url_response = urllib2.urlopen(query, timeout=connection_timeout)\n File "/usr/lib/python2.7/urllib2.py", line 154, in urlopen\n return opener.open(url, data, timeout)\n File "/usr/lib/python2.7/urllib2.py", line 429, in open\n response = self._open(req, data)\n File "/usr/lib/python2.7/urllib2.py", line 447, in _open\n \'_open\', req)\n File "/usr/lib/python2.7/urllib2.py", line 407, in _call_chain\n result = func(*args)\n File "/usr/lib/python2.7/urllib2.py", line 1228, in http_open\n return self.do_open(httplib.HTTPConnection, req)\n File "/usr/lib/python2.7/urllib2.py", line 1198, in do_open\n raise URLError(err)\nURLError: <urlopen error [Errno 111] Conexi\xc3\xb3n rehusada>\n)']

INFO 2019-05-24 13:30:00,155 main.py:96 - loglevel=logging.INFO

INFO 2019-05-24 13:30:00,157 main.py:96 - loglevel=logging.INFO

INFO 2019-05-24 13:30:00,157 main.py:96 - loglevel=logging.INFO

INFO 2019-05-24 13:30:00,159 DataCleaner.py:39 - Data cleanup thread started

INFO 2019-05-24 13:30:00,164 DataCleaner.py:120 - Data cleanup started

INFO 2019-05-24 13:30:00,295 DataCleaner.py:122 - Data cleanup finished

INFO 2019-05-24 13:30:00,314 PingPortListener.py:50 - Ping port listener started on port: 8670

INFO 2019-05-24 13:30:00,314 main.py:132 - Newloglevel=logging.DEBUG

INFO 2019-05-24 13:30:00,314 main.py:405 - Connecting to Ambari server at https://bigdata.es:8440 (10.61.2.10)

DEBUG 2019-05-24 13:30:00,314 NetUtil.py:110 - Trying to connect to https://bigdata.es:8440

INFO 2019-05-24 13:30:00,315 NetUtil.py:67 - Connecting to https://bigdata.es:8440/ca

WARNING 2019-05-24 13:30:00,317 NetUtil.py:98 - Failed to connect to https://bigdata.es:8440/ca due to [Errno 111] Conexión rehusada

WARNING 2019-05-24 13:30:00,317 NetUtil.py:121 - Server at https://bigdata.es:8440 is not reachable, sleeping for 10 seconds...

The ambari-agent.ini configuration is the following:

- `[server]`: `hostname = bigdata.es`, `url_port = 8440`, `secured_url_port = 8441`, `connect_retry_delay = 10`, `max_reconnect_retry_delay = 30`
- `[agent]`: `logdir = /var/log/ambari-agent`, `piddir = /var/run/ambari-agent`, `prefix = /var/lib/ambari-agent/data`, `loglevel = DEBUG`, `data_cleanup_interval = 86400`, `data_cleanup_max_age = 2592000`, `data_cleanup_max_size_mb = 100`, `ping_port = 8670`, `cache_dir = /var/lib/ambari-agent/cache`, `tolerate_download_failures = true`, `run_as_user = root`, `parallel_execution = 0`, `alert_grace_period = 5`, `status_command_timeout = 5`, `alert_kinit_timeout = 14400000`, `system_resource_overrides = /etc/resource_overrides`
- `[security]`: `keysdir = /var/lib/ambari-agent/keys`, `server_crt = ca.crt`, `passphrase_env_var_name = AMBARI_PASSPHRASE`, `ssl_verify_cert = 0`, `credential_lib_dir = /var/lib/ambari-agent/cred/lib`, `credential_conf_dir = /var/lib/ambari-agent/cred/conf`, `credential_shell_cmd = org.apache.hadoop.security.alias.CredentialShell`, `force_https_protocol = PROTOCOL_TLSv1_2`
- `[services]`: `pidlookuppath = /var/run/`
- `[heartbeat]`: `state_interval_seconds = 60`, `dirs = /etc/hadoop,/etc/hadoop/conf,/etc/hbase,/etc/hcatalog,/etc/hive,/etc/oozie, /etc/sqoop, /var/run/hadoop,/var/run/zookeeper,/var/run/hbase,/var/run/templeton,/var/run/oozie, /var/log/hadoop,/var/log/zookeeper,/var/log/hbase,/var/run/templeton,/var/log/hive`, `log_lines_count = 300`, `idle_interval_min = 1`, `idle_interval_max = 10`
- `[logging]`: `syslog_enabled = 0`
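As a quick consistency check (a sketch, not part of Ambari), the `[server]` section above can be read with Python's `configparser` and its `hostname` compared with the machine's FQDN. The helper names `agent_server_settings` and `hostname_mismatch` are hypothetical, and using `socket.getfqdn()` as a stand-in for `hostname -f` is an assumption: both usually consult the same resolver setup, but they can disagree.

```python
import configparser
import socket


def agent_server_settings(ini_text):
    """Return the [server] values the agent uses to reach ambari-server."""
    # interpolation=None: read '%' characters literally instead of as interpolation markers
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    server = parser["server"]
    return {
        "hostname": server.get("hostname"),
        "url_port": server.getint("url_port"),
        "secured_url_port": server.getint("secured_url_port"),
        "connect_retry_delay": server.getint("connect_retry_delay"),
    }


def hostname_mismatch(ini_text, fqdn=None):
    """Return (configured, actual) when they differ, or None when they match.

    fqdn defaults to socket.getfqdn(), used here as an approximation of
    `hostname -f` (assumption).
    """
    configured = agent_server_settings(ini_text)["hostname"]
    actual = fqdn if fqdn is not None else socket.getfqdn()
    return None if configured == actual else (configured, actual)
```

On a typical install the file is `/etc/ambari-agent/conf/ambari-agent.ini`. On this single-node cluster the server and the agent share one host, so a non-`None` result means the agent is configured to reach the server under a name other than the host's current FQDN.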

If I run `sudo ambari-agent restart`, the agent connects and I can start services from the Ambari server UI.
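The agent log above shows it retrying `https://bigdata.es:8440` and getting `[Errno 111]` (connection refused). To check at boot time whether anything is listening on that port, and whether the name resolves as expected, a small probe like the one below could be run, for example from a boot script before restarting the agent. It is a diagnostic sketch, not a fix; `wait_for_port` is a hypothetical helper, and its default retry delay simply mirrors `connect_retry_delay = 10` from the config.

```python
import socket
import time


def wait_for_port(host, port, retry_delay=10.0, max_attempts=30, timeout=3.0):
    """Poll host:port until a TCP connection succeeds.

    Returns the 1-based attempt number that succeeded, or None if every
    attempt was refused, timed out, or failed to resolve.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            # create_connection resolves the name (via /etc/hosts or DNS) and
            # raises OSError (e.g. ConnectionRefusedError) if nothing listens.
            with socket.create_connection((host, port), timeout=timeout):
                return attempt
        except OSError:
            if attempt < max_attempts:
                time.sleep(retry_delay)
    return None
```

For example, `wait_for_port("bigdata.es", 8440)` returning `None` shortly after a reboot would point at the server side (not listening yet, or the name resolving to an unexpected address) rather than at the agent itself.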

Created 05-24-2019 03:02 PM


Changing the hostname in /etc/hosts is not enough; you MUST perform the steps below to get your cluster working again. In Ambari Web > Dashboard, stop all services.
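The same "stop all services" step can also be issued through Ambari's REST API, where stopping a service means setting its state to `INSTALLED`. The sketch below only builds the request with Python's standard library; the server URL `http://bigdata.es:8080`, the cluster name `bigdata`, and the `admin`/`admin` credentials used in the usage example are assumptions to replace with your own.

```python
import base64
import json
import urllib.request


def stop_all_services_request(ambari_url, cluster, user, password):
    """Build (without sending) a PUT that asks Ambari to stop every service."""
    body = {
        "RequestInfo": {"context": "Stop All Services"},
        # In Ambari's service model, "stopped" is the INSTALLED state.
        "Body": {"ServiceInfo": {"state": "INSTALLED"}},
    }
    req = urllib.request.Request(
        f"{ambari_url}/api/v1/clusters/{cluster}/services",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
    )
    # Ambari rejects modifying requests that lack an X-Requested-By header.
    req.add_header("X-Requested-By", "ambari")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    return req
```

Sending it would then be `urllib.request.urlopen(stop_all_services_request("http://bigdata.es:8080", "bigdata", "admin", "admin"))`.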

Stop ambari-server and ambari-agents on all hosts.

`# ambari-server stop`

`# ambari-agent stop`

Create a file named host_names_changes.json containing the hostname changes.

Contents of host_names_changes.json (the top-level key is the cluster name, here `bigdata`, and it maps each old hostname to its new one):

`{"bigdata": {"old_name_here": "bigdata-es"}}`

From the directory where you saved the file, run as root:

`# ambari-server update-host-names host_names_changes.json`
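The host_names_changes.json file maps a cluster name to `{old_host_name: new_host_name}` pairs. A small Python sketch can build that mapping and reject two mistakes that make the rename pointless or impossible: mapping a name to itself, and choosing a new name that Ambari already has registered. `build_host_name_changes` is a hypothetical helper, and `registered_hosts` is an input you would fill in yourself (for example from the Hosts page).

```python
import json


def build_host_name_changes(cluster, renames, registered_hosts=()):
    """Return the {cluster: {old: new}} mapping for `ambari-server update-host-names`."""
    registered = set(registered_hosts)
    for old, new in renames.items():
        if old == new:
            raise ValueError(f"{old!r} is mapped to itself")
        if new in registered:
            # A name already in Ambari's host list would clash with that record.
            raise ValueError(f"{new!r} is already a registered host name")
    return {cluster: dict(renames)}


if __name__ == "__main__":
    changes = build_host_name_changes(
        "bigdata", {"bigdata.es": "bigdatapruebas.es"}, registered_hosts=["bigdata.es"]
    )
    # Redirect this output to host_names_changes.json.
    print(json.dumps(changes, indent=2))
```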

After a successful run, Ambari will have updated the hostnames everywhere it needs to except in ambari.properties, so change the values below yourself.

Change these 3 values in /etc/ambari-server/conf/ambari.properties

- `server.jdbc.rca.url=`
- `server.jdbc.url=jdbc=`
- `server.jdbc.hostname=`
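Finding which ambari.properties entries still carry the old name can be scripted. The sketch below is a simplified reader: it handles only `key=value` lines and `#`/`!` comments, not the full Java properties syntax (which also allows `:` separators and line continuations). Matching is a plain substring test, so a longer name that contains the old one would also be reported. `properties_mentioning` is a hypothetical helper.

```python
def properties_mentioning(properties_text, old_host):
    """Return {key: value} for every property whose value contains old_host."""
    hits = {}
    for raw in properties_text.splitlines():
        line = raw.strip()
        # Skip blanks, comments (# or ! in Java .properties files), and non key=value lines.
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, _, value = line.partition("=")
        if old_host in value:
            hits[key.strip()] = value.strip()
    return hits
```

For example, `properties_mentioning(open("/etc/ambari-server/conf/ambari.properties").read(), "bigdata.es")` would list each key whose value still names `bigdata.es`.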

You will also need to update the database grants for Hive, Oozie, etc.; adjust the database, user, and password to your installation:

# Hive

`grant all privileges on hive.* to 'hive'@'bigdata-es' identified by 'hive_password';`

`grant all privileges on hive.* to 'hive'@'bigdata-es' with grant option;`

# Oozie

`grant all privileges on oozie.* to 'oozie'@'bigdata-es' identified by 'oozie_password';`

`grant all privileges on oozie.* to 'oozie'@'bigdata-es' with grant option;`

# Ranger

`grant all privileges on ranger.* to 'rangeradmin'@'bigdata-es' identified by 'ranger_password';`

`grant all privileges on ranger.* to 'rangeradmin'@'bigdata-es' with grant option;`

# Rangerkms

`grant all privileges on rangerkms.* to 'rangerkms'@'bigdata-es' identified by 'rangerkms_password';`

`grant all privileges on rangerkms.* to 'rangerkms'@'bigdata-es' with grant option;`
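Because these statements differ only in database, user, host, and password, they can be generated rather than hand-edited, which avoids leaving the old hostname in one of them. This is a minimal sketch: `grant_statements` is a hypothetical helper that reproduces the exact statement form used above, and it refuses single quotes instead of attempting SQL escaping.

```python
def grant_statements(database, user, host, password):
    """Return the two MySQL grant statements used above for one service database."""
    for value in (database, user, host, password):
        if "'" in value:
            raise ValueError("single quotes are not escaped by this sketch")
    target = f"'{user}'@'{host}'"
    return [
        f"grant all privileges on {database}.* to {target} identified by '{password}';",
        f"grant all privileges on {database}.* to {target} with grant option;",
    ]


if __name__ == "__main__":
    # Hypothetical values; substitute your installation's database users and passwords.
    for stmt in grant_statements("hive", "hive", "bigdata-es", "hive_password"):
        print(stmt)
```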

After doing the above, you can restart Ambari and the ambari-agent, provided you have also changed the hostname in ambari-agent.ini.
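In ambari-agent.ini the server name is the `hostname` key of the `[server]` section. Rewriting only that line, rather than round-tripping the file through `configparser` (which does not preserve comments), can be sketched as follows. `set_server_hostname` is a hypothetical helper and assumes the usual one-key-per-line layout.

```python
import re


def set_server_hostname(ini_text, new_host):
    """Rewrite `hostname` inside the [server] section only, keeping other lines."""
    out, section = [], None
    for line in ini_text.splitlines(keepends=True):
        header = re.match(r"\s*\[([^\]]+)\]\s*$", line)
        if header:
            # Track which section the following lines belong to.
            section = header.group(1).strip()
        elif section == "server" and re.match(r"\s*hostname\s*=", line):
            ending = "\n" if line.endswith("\n") else ""
            line = f"hostname={new_host}{ending}"
        out.append(line)
    return "".join(out)
```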

Start ambari-server and ambari-agents on all hosts.

`# ambari-server start`

`# ambari-agent start`

Before running Start All for the components in Ambari, make sure Hive, Oozie, Ranger, etc. have the correct hostname in the Ambari UI configs!

Happy Hadooping


Created 05-24-2019 04:16 PM


The above question and the reply thread below were originally posted in the Community Help Track. On Fri May 24 09:14 PDT 2019, a member of the HCC moderation staff moved it to the Cloud & Operations Track. The Community Help Track is intended for questions about using the HCC site itself.


Created 05-27-2019 01:22 PM


Thanks for your detailed answer, @Geoffrey Shelton Okot.

I tried that and changed all the manually editable values you mentioned (including setting the same hostname the machine had before the IP change).

With this last option, everything runs correctly after `ambari-agent restart`, but not after a system `sudo reboot`.

When I tried a different hostname and made the changes you described, I got an error running:

`ambari-server update-host-names host_names_changes.json`

ambari-server.log shows the following trace:

27 may 2019 09:59:38,771 ERROR [main] AmbariJpaLocalTxnInterceptor:180 - [DETAILED ERROR] Rollback reason: Local Exception Stack: Exception [EclipseLink-4002] (Eclipse Persistence Services - 2.6.2.v20151217-774c696): org.eclipse.persistence.exceptions.DatabaseException Internal Exception: org.postgresql.util.PSQLException: ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. Error Code: 0 Call: UPDATE hosts SET host_name = ? WHERE (host_id = ?) bind => [2 parameters bound] at org.eclipse.persistence.exceptions.DatabaseException.sqlException(DatabaseException.java:340) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.processExceptionForCommError(DatabaseAccessor.java:1620) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeDirectNoSelect(DatabaseAccessor.java:900) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeNoSelect(DatabaseAccessor.java:964) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.basicExecuteCall(DatabaseAccessor.java:633) at org.eclipse.persistence.internal.databaseaccess.ParameterizedSQLBatchWritingMechanism.executeBatch(ParameterizedSQLBatchWritingMechanism.java:149) at org.eclipse.persistence.internal.databaseaccess.ParameterizedSQLBatchWritingMechanism.executeBatchedStatements(ParameterizedSQLBatchWritingMechanism.java:134) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.writesCompleted(DatabaseAccessor.java:1845) at org.eclipse.persistence.internal.sessions.AbstractSession.writesCompleted(AbstractSession.java:4300) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.writesCompleted(UnitOfWorkImpl.java:5592) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.acquireWriteLocks(UnitOfWorkImpl.java:1646) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.commitTransactionAfterWriteChanges(UnitOfWorkImpl.java:1614) at 
org.eclipse.persistence.internal.sessions.RepeatableWriteUnitOfWork.commitRootUnitOfWork(RepeatableWriteUnitOfWork.java:285) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.commitAndResume(UnitOfWorkImpl.java:1169) at org.eclipse.persistence.internal.jpa.transaction.EntityTransactionImpl.commit(EntityTransactionImpl.java:134) at org.apache.ambari.server.orm.AmbariJpaLocalTxnInterceptor.invoke(AmbariJpaLocalTxnInterceptor.java:153) at com.google.inject.internal.InterceptorStackCallback$InterceptedMethodInvocation.proceed(InterceptorStackCallback.java:72) at com.google.inject.internal.InterceptorStackCallback.intercept(InterceptorStackCallback.java:52) at org.apache.ambari.server.orm.dao.HostDAO$$EnhancerByGuice$$3e1957eb.merge(<generated>) at org.apache.ambari.server.update.HostUpdateHelper.updateHostsInDB(HostUpdateHelper.java:407) at org.apache.ambari.server.update.HostUpdateHelper.main(HostUpdateHelper.java:548) Caused by: org.postgresql.util.PSQLException: ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2161) at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:1890) at org.postgresql.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:255) at org.postgresql.jdbc2.AbstractJdbc2Statement.execute(AbstractJdbc2Statement.java:559) at org.postgresql.jdbc2.AbstractJdbc2Statement.executeWithFlags(AbstractJdbc2Statement.java:417) at org.postgresql.jdbc2.AbstractJdbc2Statement.executeUpdate(AbstractJdbc2Statement.java:363) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeDirectNoSelect(DatabaseAccessor.java:892) ... 
18 more 27 may 2019 09:59:38,781 ERROR [main] AmbariJpaLocalTxnInterceptor:188 - [DETAILED ERROR] Internal exception (1) : org.postgresql.util.PSQLException: ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2161) at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:1890) at org.postgresql.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:255) at org.postgresql.jdbc2.AbstractJdbc2Statement.execute(AbstractJdbc2Statement.java:559) at org.postgresql.jdbc2.AbstractJdbc2Statement.executeWithFlags(AbstractJdbc2Statement.java:417) at org.postgresql.jdbc2.AbstractJdbc2Statement.executeUpdate(AbstractJdbc2Statement.java:363) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeDirectNoSelect(DatabaseAccessor.java:892) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeNoSelect(DatabaseAccessor.java:964) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.basicExecuteCall(DatabaseAccessor.java:633) at org.eclipse.persistence.internal.databaseaccess.ParameterizedSQLBatchWritingMechanism.executeBatch(ParameterizedSQLBatchWritingMechanism.java:149) at org.eclipse.persistence.internal.databaseaccess.ParameterizedSQLBatchWritingMechanism.executeBatchedStatements(ParameterizedSQLBatchWritingMechanism.java:134) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.writesCompleted(DatabaseAccessor.java:1845) at org.eclipse.persistence.internal.sessions.AbstractSession.writesCompleted(AbstractSession.java:4300) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.writesCompleted(UnitOfWorkImpl.java:5592) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.acquireWriteLocks(UnitOfWorkImpl.java:1646) at 
org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.commitTransactionAfterWriteChanges(UnitOfWorkImpl.java:1614) at org.eclipse.persistence.internal.sessions.RepeatableWriteUnitOfWork.commitRootUnitOfWork(RepeatableWriteUnitOfWork.java:285) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.commitAndResume(UnitOfWorkImpl.java:1169) at org.eclipse.persistence.internal.jpa.transaction.EntityTransactionImpl.commit(EntityTransactionImpl.java:134) at org.apache.ambari.server.orm.AmbariJpaLocalTxnInterceptor.invoke(AmbariJpaLocalTxnInterceptor.java:153) at com.google.inject.internal.InterceptorStackCallback$InterceptedMethodInvocation.proceed(InterceptorStackCallback.java:72) at com.google.inject.internal.InterceptorStackCallback.intercept(InterceptorStackCallback.java:52) at org.apache.ambari.server.orm.dao.HostDAO$$EnhancerByGuice$$3e1957eb.merge(<generated>) at org.apache.ambari.server.update.HostUpdateHelper.updateHostsInDB(HostUpdateHelper.java:407) at org.apache.ambari.server.update.HostUpdateHelper.main(HostUpdateHelper.java:548) 27 may 2019 09:59:38,781 ERROR [main] HostUpdateHelper:564 - Unexpected error, host names update failed javax.persistence.RollbackException: Exception [EclipseLink-4002] (Eclipse Persistence Services - 2.6.2.v20151217-774c696): org.eclipse.persistence.exceptions.DatabaseException Internal Exception: org.postgresql.util.PSQLException: ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. Error Code: 0 Call: UPDATE hosts SET host_name = ? WHERE (host_id = ?) 
bind => [2 parameters bound] at org.eclipse.persistence.internal.jpa.transaction.EntityTransactionImpl.commit(EntityTransactionImpl.java:159) at org.apache.ambari.server.orm.AmbariJpaLocalTxnInterceptor.invoke(AmbariJpaLocalTxnInterceptor.java:153) at org.apache.ambari.server.update.HostUpdateHelper.updateHostsInDB(HostUpdateHelper.java:407) at org.apache.ambari.server.update.HostUpdateHelper.main(HostUpdateHelper.java:548) Caused by: Exception [EclipseLink-4002] (Eclipse Persistence Services - 2.6.2.v20151217-774c696): org.eclipse.persistence.exceptions.DatabaseException Internal Exception: org.postgresql.util.PSQLException: ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. Error Code: 0 Call: UPDATE hosts SET host_name = ? WHERE (host_id = ?) bind => [2 parameters bound] at org.eclipse.persistence.exceptions.DatabaseException.sqlException(DatabaseException.java:340) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.processExceptionForCommError(DatabaseAccessor.java:1620) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeDirectNoSelect(DatabaseAccessor.java:900) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeNoSelect(DatabaseAccessor.java:964) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.basicExecuteCall(DatabaseAccessor.java:633) at org.eclipse.persistence.internal.databaseaccess.ParameterizedSQLBatchWritingMechanism.executeBatch(ParameterizedSQLBatchWritingMechanism.java:149) at org.eclipse.persistence.internal.databaseaccess.ParameterizedSQLBatchWritingMechanism.executeBatchedStatements(ParameterizedSQLBatchWritingMechanism.java:134) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.writesCompleted(DatabaseAccessor.java:1845) at org.eclipse.persistence.internal.sessions.AbstractSession.writesCompleted(AbstractSession.java:4300) at 
org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.writesCompleted(UnitOfWorkImpl.java:5592) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.acquireWriteLocks(UnitOfWorkImpl.java:1646) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.commitTransactionAfterWriteChanges(UnitOfWorkImpl.java:1614) at org.eclipse.persistence.internal.sessions.RepeatableWriteUnitOfWork.commitRootUnitOfWork(RepeatableWriteUnitOfWork.java:285) at org.eclipse.persistence.internal.sessions.UnitOfWorkImpl.commitAndResume(UnitOfWorkImpl.java:1169) at org.eclipse.persistence.internal.jpa.transaction.EntityTransactionImpl.commit(EntityTransactionImpl.java:134) ... 3 more Caused by: org.postgresql.util.PSQLException: ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2161) at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:1890) at org.postgresql.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:255) at org.postgresql.jdbc2.AbstractJdbc2Statement.execute(AbstractJdbc2Statement.java:559) at org.postgresql.jdbc2.AbstractJdbc2Statement.executeWithFlags(AbstractJdbc2Statement.java:417) at org.postgresql.jdbc2.AbstractJdbc2Statement.executeUpdate(AbstractJdbc2Statement.java:363) at org.eclipse.persistence.internal.databaseaccess.DatabaseAccessor.executeDirectNoSelect(DatabaseAccessor.java:892)

Created 05-27-2019 02:31 PM


What values are in your json file?

Can you share a redacted version of your /etc/hosts and the output of `$ hostname -f`?

The trace below shows you are changing to a value that is no different from the old value 🙂

Detail: Key (host_name)=(bigdata.es) already exists. at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2161) at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:1890) at org.postgresql.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:255)

Please let me know.

Created 05-27-2019 02:36 PM


What values do you have in /etc/hosts?

Can you share a redacted version of your /etc/hosts and the output of `$ hostname -f`?

The trace below shows that you are trying to change to a value that is no different from the older one 🙂

Detail: Key (host_name)=(bigdata.es) already exists. at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2161) at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:1890) at org.postgresql.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:255) ERROR: duplicate key value violates unique constraint "uq_hosts_host_name" Detail: Key (host_name)=(bigdata.es) already exists. Error Code: 0 Call: UPDATE hosts SET host_name = ? WHERE (host_id = ?)bind => [2 parameters bound]

Please let me know.

Created on 05-27-2019 06:31 PM - edited 08-17-2019 03:18 PM


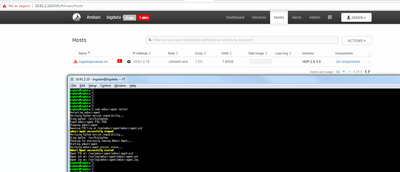

Here are the `hostname -f` and /etc/hosts outputs:

`bigdata@bigdata:~$ hostname -f` returns `bigdatapruebas.es`, and `bigdata@bigdata:~$ sudo cat /etc/hosts` shows:

- `10.61.2.10 bigdatapruebas.es bigdata.adurizenergia.es bigdata.es bigdata`
- `::1 localhost ip6-localhost ip6-loopback`
- `ff02::1 ip6-allnodes`
- `ff02::02 ip6-allrouters`

And the JSON file is the following:

{

"bigdata" : {

"bigdata.es" : "bigdatapruebas.es"

}

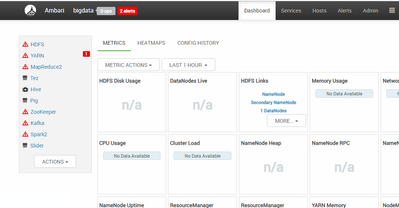

}It did finnaly succeded, but as it's shown on the following picture, heartbeat is still lost after sudo reboot the system. I changed values from different try to make sure names are completly different.

After `sudo ambari-agent restart`, the host is recognized again and shows a Stopped status instead of Heartbeat Lost.

Created 05-27-2019 06:57 PM


You succeeded, but you are still getting Heartbeat Lost because your /etc/hosts entry is wrong 🙂 I really can't understand how you can connect at all 🙂 The entry below is wrong and shouldn't resolve, which somehow explains why you had difficulty running the update with host_names_changes.json:

`bigdata@bigdata:~$ sudo cat /etc/hosts`

`10.61.2.10 bigdatapruebas.es bigdata.adurizenergia.es bigdata.es bigdata`

The /etc/hosts entry should match exactly the output of `$ hostname -f`, which is `bigdatapruebas.es`.

So your /etc/hosts should look like this:

`10.61.2.10 bigdatapruebas.es`

But if you had an FQDN like bigdata.endesa.es, the entry could follow the pattern IP FQDN ALIAS:

`10.61.2.10 bigdata.endesa.es bigdata`

With that entry in /etc/hosts, you can reach Ambari successfully in two ways:

http://bigdata.endesa.es:8080

and

http://bigdata:8080
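The layout recommended here (IP, then the FQDN exactly as `hostname -f` prints it, then optionally a short alias) can be checked mechanically. The sketch below encodes that recommendation as stated in this reply; treating any dotted name after the FQDN as a problem is an assumption drawn from the `IP FQDN ALIAS` example. Both function names are hypothetical.

```python
def hosts_entry_for(hosts_text, ip):
    """Return the names on the first /etc/hosts line for `ip`, or None."""
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments, then skip blank lines
        if not line:
            continue
        fields = line.split()
        if fields[0] == ip:
            return fields[1:]
    return None


def follows_ip_fqdn_alias(hosts_text, ip, fqdn):
    """True if the entry is `ip fqdn [short_alias ...]` as recommended above."""
    names = hosts_entry_for(hosts_text, ip)
    if not names or names[0] != fqdn:
        return False
    # Assumption: aliases are short names such as `bigdata`, without dots.
    return all("." not in alias for alias in names[1:])
```

With the original line `10.61.2.10 bigdatapruebas.es bigdata.adurizenergia.es bigdata.es bigdata` it returns `False`, because dotted names follow the FQDN; with `10.61.2.10 bigdatapruebas.es` it returns `True`.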

Please make the necessary changes and let me know.

Created 05-28-2019 03:46 PM


I've already made the /etc/hosts changes, with this result:

- `bigdata@bigdata:~$ cat /etc/hosts`
- `IP FQDN ALIAS`
- `------------------------------------------------`
- `10.61.2.10 bigdatapruebas.es bigdata.es`
- `#10.61.2.10 bigdatapruebas.es #bigdata.es bigdata bigdata.pruebasenergia.es`
- `::1 localhost ip6-localhost ip6-loopback`
- `ff02::1 ip6-allnodes`
- `ff02::02 ip6-allrouters`
- `bigdata@bigdata:~$ hotname -f` (typo), translated from Spanish: Command «hotname» not found, did you mean: command «hostname» from package «hostname» (main). hotname: command not found
- `bigdata@bigdata:~$ hostname -f`
- `bigdatapruebas.es`

But the same thing happens: after applying the changes it works, but after a reboot it doesn't work until I manually restart the agent 😞