Support Questions


CDSW tcp-ingress controller failing: "grpc_status":14, "os_error":"invalid argument"

Created on 09-17-2019 12:09 PM, last edited on 09-17-2019 10:18 PM by VidyaSargur


All,

While installing CDSW, the tcp-ingress-controller and ds-reconciler pods are failing because they cannot connect to the gRPC server. In the web service logs below, I see the gRPC server failing to bind to its port ("description":"Failed to bind to port","file":"../deps/grpc/src/core/lib/iomgr/tcp_uv.cc","file_line":73,"grpc_status":14,"os_error":"invalid argument"). Any suggestions on how to fix this?

[..]

reated":"@1568398264.632135163","description":"No address added out of total 1 resolved","file":"../deps/grpc/src/core/ext/transport/chttp2/server/chttp2_server.cc","file_line":349,"referenced_errors":[{"created":"@1568398264.632132593","description":"Failed to add port to server","file":"../deps/grpc/src/core/lib/iomgr/tcp_server_custom.cc","file_line":402,"referenced_errors":[{"created":"@1568398264.632131142","description":"Failed to bind to port","file":"../deps/grpc/src/core/lib/iomgr/tcp_uv.cc","file_line":73,"grpc_status":14,"os_error":"invalid argument"}]}]}

E0913 18:11:04.634478860 1 server_secure_chttp2.cc:81] {"created":"@1568398264.634442264","description":"No address added out of total 1 resolved","file":"../deps/grpc/src/core/ext/transport/chttp2/server/chttp2_server.cc","file_line":349,"referenced_errors":[{"created":"@1568398264.634440097","description":"Failed to add port to server","file":"../deps/grpc/src/core/lib/iomgr/tcp_server_custom.cc","file_line":402,"referenced_errors":[{"created":"@1568398264.634439104","description":"Failed to bind to port","file":"../deps/grpc/src/core/lib/iomgr/tcp_uv.cc","file_line":73,"grpc_status":14,"os_error":"invalid argument"}]}]}

[{"code":"EXTRA_REFERENCE_PROPERTIES","message":"Extra JSON Reference properties will be ignored: type, description","path":["definitions","DescribeRunResponse"]},

{"code":"EXTRA_REFERENCE_PROPERTIES","message":"Extra JSON Reference properties will be ignored: type, description","path":["definitions","RepeatRunResponse"]},

{"code":"EXTRA_REFERENCE_PROPERTIES","message":"Extra JSON Reference properties will be ignored: type, description","path":["definitions","Run","properties","submitter"]},

{"code":"UNUSED_DEFINITION","message":"Definition is not used: #/definitions/ValidationError","path":["definitions","ValidationError"]}] 2

2019-09-13 18:12:08.090 1 INFO AppServer.HTTP Req-ff9b1780-d651-11e9-b240-a59f514efc13 GET chd6-cdsw.udl.net /editor/file/NativeFileError.js 304 34.273 ms - -

2019-09-13 18:39:14.744 1 INFO AppServer.HTTP Req-c92f3830-d655-11e9-b240-a59f514efc13 GET chd6-cdsw.udl.net /editor/file/NativeFileError.js 304 4.623 ms - -
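To check outside of CDSW whether a host can bind a TCP socket to the IPv6 wildcard address at all, a minimal probe is sketched below. It assumes the failing gRPC server listens on `[::]`, which is a common default for gRPC servers but is not confirmed by these logs; `probe_ipv6_bind` is a hypothetical helper, not part of CDSW, and the error it reports may differ from the libuv "invalid argument" seen above.

```python
import socket


def probe_ipv6_bind(port: int = 0) -> tuple[bool, str]:
    """Try to bind a TCP socket to the IPv6 wildcard address [::].

    Returns (True, "") on success, or (False, <error text>) if the socket
    cannot be created or the bind fails. Port 0 lets the kernel pick any
    free port, so the probe does not collide with a running service.
    """
    if not socket.has_ipv6:
        return False, "Python was built without IPv6 support"
    try:
        sock = socket.socket(socket.AF_INET6, socket.SOCK_STREAM)
    except OSError as exc:
        # Typically EAFNOSUPPORT when the kernel was booted with ipv6.disable=1.
        return False, f"socket(AF_INET6) failed: {exc}"
    try:
        sock.bind(("::", port))
        return True, ""
    except OSError as exc:
        return False, f"bind([::]:{port}) failed: {exc}"
    finally:
        sock.close()


if __name__ == "__main__":
    ok, err = probe_ipv6_bind()
    print("IPv6 wildcard bind OK" if ok else f"IPv6 wildcard bind FAILED: {err}")
```

If the probe fails on the CDSW hosts but succeeds elsewhere, that points at the host's IPv6 configuration rather than at CDSW itself.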

Created 09-18-2019 09:37 AM


@aahbs We recently observed this with CDSW 1.6 on hosts that have IPv6 disabled. If you're hitting this behaviour, please check dmesg; it will likely show segfaults in the node process. We are working internally to understand the gRPC behaviour and its connection with IPv6, but in the meantime you might want to enable IPv6 following the Red Hat article https://access.redhat.com/solutions/8709#rhel7enable
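For the dmesg check, a small filter like the one below can pull out node segfaults. It assumes the usual kernel report format (`node[<pid>]: segfault at ...`); `segfault_pids` is a hypothetical helper, and reading dmesg usually requires root.

```python
import re

# Kernel segfault reports printed by dmesg usually look like:
#   [ 1234.567890] node[4242]: segfault at 0 ip ... sp ... error 4 in node[...]
SEGFAULT_RE = re.compile(r"(?P<comm>[\w.-]+)\[(?P<pid>\d+)\]: segfault at")


def segfault_pids(dmesg_text: str, process: str = "node") -> list[int]:
    """Return the PIDs of `process` that the kernel reported as segfaulting, in log order."""
    return [
        int(m.group("pid"))
        for m in SEGFAULT_RE.finditer(dmesg_text)
        if m.group("comm") == process
    ]
```

On a CDSW host you could feed it `subprocess.run(["dmesg"], capture_output=True, text=True).stdout`; a non-empty result is consistent with the node crashes described above.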

1. Edit /etc/default/grub and delete the entry ipv6.disable=1 from GRUB_CMDLINE_LINUX, as in the following sample:

GRUB_CMDLINE_LINUX="rd.lvm.lv=rhel/swap crashkernel=auto rd.lvm.lv=rhel/root"

2. Run the grub2-mkconfig command to regenerate the grub.cfg file:

# grub2-mkconfig -o /boot/grub2/grub.cfg

Alternatively, on UEFI systems, run the following:

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

3. Delete the file /etc/sysctl.d/ipv6.conf, which contains entries like:

# To disable for all interfaces
net.ipv6.conf.all.disable_ipv6 = 1
# the protocol can be disabled for specific interfaces as well.
net.ipv6.conf.<interface>.disable_ipv6 = 1

4. Check the contents of /etc/ssh/sshd_config and make sure the AddressFamily line is commented out:

#AddressFamily inet

5. Make sure the following line exists in /etc/hosts, and is not commented out:

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

6. Enable IPv6 support on the Ethernet interface. Double-check /etc/sysconfig/network and /etc/sysconfig/network-scripts/ifcfg-* and make sure IPV6INIT=yes is set. This setting is required for both static and DHCP assignment of IPv6 addresses.

7. Stop the CDSW service.

8. Reboot the CDSW hosts to enable IPv6 support.

9. Start the CDSW service.
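The file edits in steps 1 and 3 to 6 can be double-checked with a small script before and after the reboot. This is a sketch under a few assumptions: the paths are the RHEL 7 defaults named in the steps, `ifcfg-eth0` is only an example interface file (use your own), the extra `/proc/cmdline` check is an addition for confirming the reboot took effect, and all function names are hypothetical.

```python
import re
from pathlib import Path


def kernel_cmdline_disables_ipv6(cmdline: str) -> bool:
    """After the reboot: True if /proc/cmdline still has ipv6.disable=1."""
    return "ipv6.disable=1" in cmdline.split()


def grub_disables_ipv6(grub_default: str) -> bool:
    """Step 1: True if an uncommented GRUB_CMDLINE_LINUX* line still has ipv6.disable=1."""
    for line in grub_default.splitlines():
        stripped = line.strip()
        if stripped.startswith("GRUB_CMDLINE_LINUX") and "ipv6.disable=1" in stripped:
            return True
    return False


SYSCTL_RE = re.compile(r"^\s*net\.ipv6\.conf\.\S+\.disable_ipv6\s*=\s*1\s*$")


def sysctl_disables_ipv6(sysctl_conf: str) -> bool:
    """Step 3: True if an uncommented net.ipv6.conf.<x>.disable_ipv6 = 1 entry remains."""
    return any(SYSCTL_RE.match(line) for line in sysctl_conf.splitlines())


def sshd_forces_ipv4(sshd_config: str) -> bool:
    """Step 4: True if an uncommented 'AddressFamily inet' line is present."""
    for line in sshd_config.splitlines():
        fields = line.split()
        # sshd_config keywords are case-insensitive; "#AddressFamily" never matches.
        if len(fields) >= 2 and fields[0].lower() == "addressfamily" and fields[1] == "inet":
            return True
    return False


def hosts_has_ipv6_localhost(hosts: str) -> bool:
    """Step 5: True if an uncommented '::1 ... localhost ...' entry exists."""
    for line in hosts.splitlines():
        fields = line.split("#", 1)[0].split()
        if fields and fields[0] == "::1" and "localhost" in fields[1:]:
            return True
    return False


def ifcfg_enables_ipv6(ifcfg: str) -> bool:
    """Step 6: True if the interface file sets IPV6INIT=yes (quoted or not)."""
    for line in ifcfg.splitlines():
        key, sep, value = line.strip().partition("=")
        if sep and key.strip() == "IPV6INIT" and value.strip().strip("\"'").lower() == "yes":
            return True
    return False


def _read(path: str) -> str:
    # Missing or unreadable files (sshd_config is often root-only) read as empty.
    try:
        return Path(path).read_text()
    except OSError:
        return ""


def check_host(ifcfg_path: str = "/etc/sysconfig/network-scripts/ifcfg-eth0") -> list[str]:
    """Run every check against the live files and return the problems found."""
    problems = []
    if kernel_cmdline_disables_ipv6(_read("/proc/cmdline")):
        problems.append("/proc/cmdline: kernel still booted with ipv6.disable=1")
    if grub_disables_ipv6(_read("/etc/default/grub")):
        problems.append("/etc/default/grub: remove ipv6.disable=1")
    if sysctl_disables_ipv6(_read("/etc/sysctl.d/ipv6.conf")):
        problems.append("/etc/sysctl.d/ipv6.conf: disable_ipv6 = 1 still set")
    if sshd_forces_ipv4(_read("/etc/ssh/sshd_config")):
        problems.append("/etc/ssh/sshd_config: comment out AddressFamily inet")
    if not hosts_has_ipv6_localhost(_read("/etc/hosts")):
        problems.append("/etc/hosts: no uncommented ::1 localhost entry")
    if not ifcfg_enables_ipv6(_read(ifcfg_path)):
        problems.append(f"{ifcfg_path}: IPV6INIT=yes not found")
    return problems


if __name__ == "__main__":
    for problem in check_host():
        print(problem)
```

Run it as root so sshd_config is readable; an empty output means none of the checked settings still disable IPv6.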


Created 09-18-2019 09:57 AM


Thank you very much for the update. Yes, we are facing this issue with CDSW 1.6.

We will try this option and report back.

Created 09-19-2019 02:54 PM


After enabling IPv6, CDSW is up and running. Thank you, much appreciated!

But when we try to launch a session, it always shows as launching. When we open another window, the session shows as running, but we are not able to run any commands or launch a terminal, so the session is still not ready. Any help will be greatly appreciated.

Thank you.

Created 09-19-2019 08:34 PM


When I try to view the pod logs, I get the following:

Error from server (BadRequest): a container name must be specified for pod 1256prl4sdxs0o6w, choose one of: [engine kinit ssh] or one of the init containers: [engine-deps]

Created 09-20-2019 12:27 AM


@aahbs Good to hear that you are past the node.js segfaults. Regarding the session stuck in the launching state, start by looking at the engine pod logs.

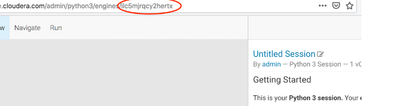

The engine pod name is the ID at the end of the session URL (in this case, ilc5mjrqcy2hertx).
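Pulling that ID out of a copied session URL can be scripted as below. The URL layout in the example (`/<user>/<project>/engines/<id>`) is a hypothetical illustration; the sketch only relies on the ID being the last path segment, as described above.

```python
from urllib.parse import urlparse


def engine_id_from_session_url(url: str) -> str:
    """Return the last non-empty path segment of a session URL (the engine ID)."""
    # Query strings, fragments and a trailing slash are ignored.
    segments = [s for s in urlparse(url).path.split("/") if s]
    if not segments:
        raise ValueError(f"no path segments in {url!r}")
    return segments[-1]
```

For example, `engine_id_from_session_url("http://cdsw.company.com/alice/project1/engines/ilc5mjrqcy2hertx")` gives `ilc5mjrqcy2hertx`.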

You can then run kubectl get pods to find the namespace the pod was launched in:

kubectl get pods --all-namespaces=true | grep -i <engine ID>

Then use kubectl logs to review the logs of the engine and kinit containers:

kubectl logs <engineID> -n <namespace> -c engine

kubectl logs <engineID> -n <namespace> -c kinit
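The namespace lookup and the per-container log commands can be combined in a short helper. This sketch assumes the default table output of `kubectl get pods --all-namespaces`, whose first two columns are NAMESPACE and NAME; `find_namespace` and `logs_commands` are hypothetical names, and the container list defaults to the engine and kinit containers mentioned above.

```python
from typing import Optional, Sequence


def find_namespace(get_pods_output: str, engine_id: str) -> Optional[str]:
    """Return the NAMESPACE of the pod named `engine_id`, or None if it is absent.

    Expects the default table from `kubectl get pods --all-namespaces`,
    whose first two columns are NAMESPACE and NAME.
    """
    for line in get_pods_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 2 and fields[1] == engine_id:
            return fields[0]
    return None


def logs_commands(engine_id: str, namespace: str,
                  containers: Sequence[str] = ("engine", "kinit")) -> list[list[str]]:
    """Build one `kubectl logs` argv per container.

    The engine pod runs several containers, so kubectl needs -c to pick one;
    without it kubectl answers "a container name must be specified".
    """
    return [["kubectl", "logs", engine_id, "-n", namespace, "-c", name] for name in containers]
```

To use it on the CDSW master, capture the table with `subprocess.run(["kubectl", "get", "pods", "--all-namespaces"], capture_output=True, text=True).stdout`, pass it to `find_namespace`, and run each argv from `logs_commands` the same way.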

BTW, is this a new installation or an upgrade of an existing one? Do you use Kerberos and HTTPS? If TLS is enabled, are you using self-signed certificates?

Created 09-20-2019 01:43 AM


This is a new installation with Kerberos enabled but no TLS.

Please see the engine container logs below. They led me to check the livelog pod logs, which show a failure (2019-09-20 08:30:03.593 7 ERROR Livelog.Server message error data = {"addr":"100.66.0.29:51370","err":"websocket: close 1006 (abnormal closure): unexpected EOF"}).

Engine container logs:

Livelog Open: Fri Sep 20 2019 08:30:22 GMT+0000 (UTC)

2019-09-20 08:30:22.431 1 INFO EngineInit.BrowserSvcs 76jt0ox8nexowxq5 Service port has become ready data = {"hostname":"ds-runtime-76jt0ox8nexowxq5.default-user-1.svc.cluster.local","port":8000,"user":"cdsw"}

2019-09-20 08:30:22.431 1 INFO EngineInit.BrowserSvcs 76jt0ox8nexowxq5 Emitting new UI status to LiveLog data = {"message":{"name":"terminal","url":"http://tty-76jt0ox8nexowxq5.chd6-cdsw.udl.net","status":true,"title":"GoTTY"},"user":"cdsw"}

2019-09-20 08:30:22.434 29 INFO Engine 76jt0ox8nexowxq5 Finish Authenticating to livelog: success data = {"secondsSinceStartup":0.271}

2019-09-20 08:30:22.434 29 INFO Engine 76jt0ox8nexowxq5 Start Searching for engine module data = {"secondsSinceStartup":0.272}

2019-09-20 08:30:22.670 29 INFO Engine 76jt0ox8nexowxq5 Finish Searching for engine module: success data = {"engineModule_path":"/usr/local/lib/node_modules/python2-engine"}

2019-09-20 08:30:22.671 29 INFO Engine 76jt0ox8nexowxq5 Start Creating engine data = {"secondsSinceStartup":0.508}

PID of parser IPython process is 80

PID of main IPython process is 83

2019-09-20 08:30:22.812 29 INFO Engine 76jt0ox8nexowxq5 Finish Creating engine data = {"secondsSinceStartup":0.65}

2019-09-20 08:30:24.747 29 INFO Engine 76jt0ox8nexowxq5 Start Registering running status data = {"useHttps":false,"host":"web.default.svc.cluster.local","path":"/api/********/project1/dashboards/76jt0ox8nexowxq5/register-status","senseDomain":"***********}

2019-09-20 08:30:24.762 29 INFO Engine 76jt0ox8nexowxq5 Finish Registering running status: success

2019-09-20 08:30:24.763 29 INFO Engine 76jt0ox8nexowxq5 Pod is ready data = {"secondsSinceStartup":2.6,"engineModuleShare":2.092}

-----------live logs-----

2019-09-20 08:30:03.593 7 ERROR Livelog.Server message error data = {"addr":"100.66.0.29:51370","err":"websocket: close 1006 (abnormal closure): unexpected EOF"}

2019-09-20 08:30:03.593 7 INFO Livelog.Server websocket disconnected data = {"addr":"100.66.0.29:51370"}

2019-09-20 08:30:03.593 7 INFO Livelog.Server unsubscribed on disconnect data = {"addr":"100.66.0.29:51370","stream":4,"topic":"2bkebt3msffbhihr_input"}

2019-09-20 08:30:20.408 7 INFO Livelog.Server websocket connected data = {"addr":"100.66.0.28:56216"}

2019-09-20 08:30:20.408 7 INFO Livelog.Server auth successful data = {"addr":"100.66.0.28:56216"}

2019-09-20 08:30:20.508 7 INFO Livelog.Server websocket connected data = {"addr":"100.66.0.28:56246"}

2019-09-20 08:30:20.509 7 INFO Livelog.Server auth successful data = {"addr":"100.66.0.28:56246"}

2019-09-20 08:30:21.512 7 ERROR Livelog.Server message error data = {"addr":"100.66.0.28:56246","err":"websocket: close 1006 (abnormal closure): unexpected EOF"}

2019-09-20 08:30:21.513 7 INFO Livelog.Server websocket disconnected data = {"addr":"100.66.0.28:56246"}

2019-09-20 08:30:22.423 7 INFO Livelog.Server websocket connected data = {"addr":"100.66.0.28:56288"}

2019-09-20 08:30:22.431 7 INFO Livelog.Server auth successful data = {"addr":"100.66.0.28:56288"}

2019-09-20 08:30:24.762 7 INFO Livelog.Server subscribe data = {"addr":"100.66.0.28:56288","stream":3,"topic":"76jt0ox8nexowxq5_input"}

-----

Created 09-20-2019 08:54 AM


@aahbs These two lines suggest the pod is ready from the Kubernetes perspective:

2019-09-20 08:30:24.762 29 INFO Engine 76jt0ox8nexowxq5 Finish Registering running status: success

2019-09-20 08:30:24.763 29 INFO Engine 76jt0ox8nexowxq5 Pod is ready data = {"secondsSinceStartup":2.6,"engineModuleShare":2.092}

Basically, once the init process in the engine completes and the kernel (e.g. Python) boots up the handler code in the engine, the engine directly updates the livelog status badge to show that it has transitioned from the Starting to the Running state. In this case that transition is broken, which could indicate a problem with websockets.
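The reasoning above (engine reports ready, yet livelog sees websockets dropping) can be applied mechanically to the two log excerpts. This is a rough sketch that only matches the message texts quoted in this thread ("Pod is ready", "ERROR Livelog.Server" with "close 1006"); the function names are hypothetical and other CDSW versions may log differently.

```python
import re

# Client address field in livelog lines such as
#   ... ERROR Livelog.Server message error data = {"addr":"100.66.0.29:51370",...}
ADDR_RE = re.compile(r'"addr":"([^"]+)"')


def engine_reported_ready(engine_log: str) -> bool:
    """True if the engine container log contains the 'Pod is ready' message."""
    return any("Pod is ready" in line for line in engine_log.splitlines())


def abnormal_websocket_closures(livelog_log: str) -> list[str]:
    """Client addresses whose websocket closed with code 1006 (abnormal closure)."""
    addrs = []
    for line in livelog_log.splitlines():
        if "ERROR Livelog.Server" in line and "close 1006" in line:
            match = ADDR_RE.search(line)
            if match:
                addrs.append(match.group(1))
    return addrs


def diagnose(engine_log: str, livelog_log: str) -> str:
    """Summarize which side looks broken, following the reasoning above."""
    ready = engine_reported_ready(engine_log)
    closures = abnormal_websocket_closures(livelog_log)
    if ready and closures:
        return f"engine ready, but {len(closures)} websocket(s) closed abnormally: check the websocket path"
    if ready:
        return "engine ready and no abnormal websocket closures in this excerpt"
    return "engine never reported ready: look at the engine and kinit container logs first"
```

Code 1006 is the WebSocket close code for a connection that dropped without a proper close frame, which is why it is treated here as the websocket-side signal.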

You can open the developer console in the browser to check for websocket errors. To open it in Chrome, click the three-dot menu at the far right of the URL bar, then click More tools -> Developer tools -> Console.

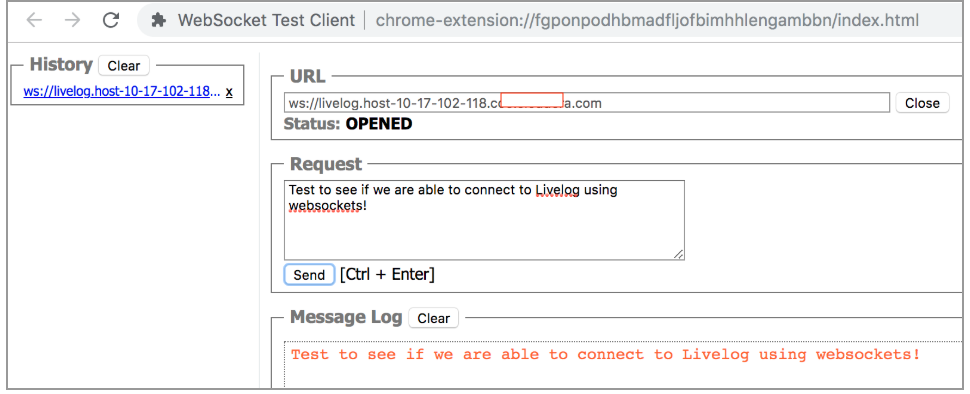

To check whether the browser supports websockets and can connect, use the echo test at https://www.websocket.org/echo.html. You can also use a Chrome extension that lets you connect to the livelog pod from the browser over websockets, to make sure there are no connectivity problems between the browser and CDSW's livelog.
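Where public echo sites are blocked, a websocket opening handshake can also be tested directly with the Python standard library, as sketched below. It implements the RFC 6455 handshake (send `Upgrade: websocket`, expect `101` and a matching `Sec-WebSocket-Accept`) over plain `ws://`, since TLS is not enabled here. The host, port and path are placeholders: take the real livelog URL from the failing ws:// request in the developer console. Note it tests from wherever it runs, so it cannot see a proxy that only the browser goes through.

```python
import base64
import hashlib
import os
import socket

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def expected_accept(key: str) -> str:
    """Sec-WebSocket-Accept value a compliant server must return for `key`."""
    digest = hashlib.sha1((key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def websocket_handshake(host: str, port: int = 80, path: str = "/",
                        timeout: float = 5.0) -> tuple[bool, str]:
    """Attempt a plain ws:// opening handshake and report whether it succeeded."""
    key = base64.b64encode(os.urandom(16)).decode("ascii")
    host_header = host if port == 80 else f"{host}:{port}"
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host_header}\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Key: {key}\r\n"
        "Sec-WebSocket-Version: 13\r\n"
        "\r\n"
    )
    response = b""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(request.encode("ascii"))
            # Read until the end of the HTTP response headers.
            while b"\r\n\r\n" not in response:
                chunk = sock.recv(4096)
                if not chunk:
                    break
                response += chunk
    except OSError as exc:
        return False, f"connection failed: {exc}"

    head = response.split(b"\r\n\r\n", 1)[0].decode("latin-1")
    status_line, *header_lines = head.split("\r\n")
    parts = status_line.split()
    if len(parts) < 2 or parts[1] != "101":
        return False, f"no upgrade: {status_line or 'empty response'}"
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    if headers.get("sec-websocket-accept") != expected_accept(key):
        return False, "101 received but Sec-WebSocket-Accept does not match"
    return True, "websocket handshake OK"
```

A call such as `websocket_handshake("livelog.cdsw.company.com", 80, "/")` (hostname and path hypothetical) returns `(True, ...)` only if something on the path actually performed the upgrade; a `400`/`403` status or a timeout points at a proxy or firewall in between.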

Another thing to check is that you can resolve the wildcard subdomain from both your laptop and the server. For example, if you configured DOMAIN in the CDSW configuration as "cdsw.company.com", then both dig *.cdsw.company.com and dig cdsw.company.com should return the A record correctly from your laptop and from the CDSW host. You might also want to double-check that there are no conflicting environment variables at the global or project level.
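A scripted version of the wildcard check is sketched below: it resolves the base domain plus a random, never-configured label under it, which only works if the `*.` record is in place. It uses the system resolver (`socket.gethostbyname`, so /etc/hosts is consulted too) rather than querying DNS directly like dig; `check_wildcard_dns` is a hypothetical helper and "cdsw.company.com" is the example domain from above.

```python
import socket
import uuid
from typing import Callable


def check_wildcard_dns(domain: str,
                       resolve: Callable[[str], str] = socket.gethostbyname) -> dict[str, str]:
    """Resolve `domain` and a random subdomain of it; return name -> IPv4 address.

    With a working wildcard record (*.domain) both names resolve. Any
    resolution failure is raised (socket.gaierror for the default resolver).
    """
    probe = f"check-{uuid.uuid4().hex[:12]}.{domain}"
    return {domain: resolve(domain), probe: resolve(probe)}
```

Running `print(check_wildcard_dns("cdsw.company.com"))` on both the laptop and the CDSW host should print the same address for both names on both machines.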

Created 09-20-2019 10:43 AM


Created 09-20-2019 10:50 AM


Also, I am not able to open the suggestions below from our browsers:

" https://www.websocket.org/echo.html You can also use a chrome extension"

Our browsers are behind a firewall, so the site is unreachable ("This site can't be reached"). Can you please provide more details on how to fix this?

We have no issue with the domain; dig resolves it fine.