Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: changing temp directory in sqoop import

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

changing temp directory in sqoop import

- Labels:

-

Apache Sqoop

Created on

11-04-2019

05:13 AM

- last edited on

11-04-2019

02:28 PM

by

ask_bill_brooks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A ".temp" directory is getting created in the target directory while importing data using sqoop. I want to change the directory location where the .temp/job_1569164528775_11264/mr/* directories are getting created.

command:

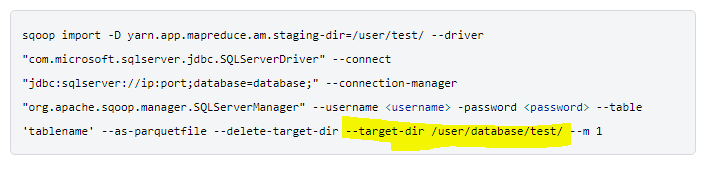

sqoop import -D yarn.app.mapreduce.am.staging-dir=/user/test/ --driver "com.microsoft.sqlserver.jdbc.SQLServerDriver" --connect "jdbc:sqlserver://ip:port;database=database;" --connection-manager "org.apache.sqoop.manager.SQLServerManager" --username <username> -password <password> --table 'tablename' --as-parquetfile --delete-target-dir --target-dir /user/database/test/ --m 1

result now:

hadoop fs -ls -R /user/database/test/

/user/database/test/.temp

/user/database/test/.temp/job_1569164528775_11264/mr/.....*

Expected result:

hadoop fs -ls -R /user/test/

/user/test/.temp/job_1569164528775_11264/mr/.....*

tried the below aswell:

mapred.system.dir

mapreduce.task.output.dir

mapreduce.task.tmp.dir

mapred.work.output.dir

mapreduce.cluster.temp.dir

mapreduce.cluster.local.dir

mapred.temp.dir

mapreduce.jobtracker.system.dir

hadoop.tmp.dir

Created 11-04-2019 07:27 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you tried changing your --target-dir /user/database/test/ --m 1

$ sqoop import -D yarn.app.mapreduce.am.staging-dir=/user/test/ --driver "com.microsoft.sqlserver.jdbc.SQLServerDriver" --connect "jdbc:sqlserver://ip:port;database=database;" --connection-manager "org.apache.sqoop.manager.SQLServerManager" --username <username> -password <password> --table 'tablename' --as-parquetfile --delete-target-dir --target-dir /user/test/ --m 1

While running hive import target-dir argument value controls where the data needs to store temporarily before loading into Hive table, but target-dir doesn't create hive table in that location.

If you want to import to specific directory then use target-dir without hive-import argument and create hive table on top of HDFS directory.

HTH

Created 11-04-2019 09:47 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Shelton

I am not using hive-import or trying to create hive table.

The issue here is that a .temp directory that gets created at the target-dir i specify.

The .temp will be deleted once the execution is complete but my use case is to change the directory where the .temp is getting created during run time.

Created 11-05-2019 12:38 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not using hive-import or trying to create a hive table. but below is your code I can see target -dir

Thanks

Created 11-05-2019 10:23 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The target-dir is specified so that the generated files are placed in that directory.

The problem I am facing here is with the temporary directory (.temp) that gets created during run time (i.e when the mapreduce job is initiated) at the target -dir.

The solution I am looking for is to change the temporary directory(.temp) location.

Created 11-07-2019 08:59 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

You can use this tag "--temporary-rootdir" to make sure that the temporary data goes into this folder.

Example:- [example]

sqoop import --target-dir /<hdfs path>/<import_data_dir> --temporary-rootdir /<dfs path>/<tmp_dir> ...

Regards

Nitish

Created 11-10-2019 10:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 11-10-2019 10:56 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Can you please share the sqoop command that you are running?

Regards

Nitish

Created on 11-10-2019 11:13 PM - edited 11-10-2019 11:14 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

command used :

sqoop import --driver "com.microsoft.sqlserver.jdbc.SQLServerDriver" --connect "jdbc:sqlserver://server:port;database=db_name;" --connection-manager "org.apache.sqoop.manager.SQLServerManager" --username <> -password <> --table 'table_name' --as-parquetfile --delete-target-dir --target-dir /user/test/axe/ --temporary-rootdir /user/test2/ --m 4 --split-by user_id

before mapreduce starts:

hadoop fs -ls -R /user/test/

/user/test/axe

/user/test/axe/.metadata

/user/test/axe/.metadata/descriptor.properties

/user/test/axe/.metadata/schema.avsc

/user/test/axe/.metadata/schemas

/user/test/axe/.metadata/schemas/1.avsc

when the mapreduce job starts:

hadoop fs -ls -R /user/test/

/user/test/.temp

/user/test/.temp/job_1571067970221_0156

/user/test/.temp/job_1571067970221_0156/mr

/user/test/.temp/job_1571067970221_0156/mr/job_1571067970221_0156

/user/test/.temp/job_1571067970221_0156/mr/job_1571067970221_0156/.metadata

/user/test/.temp/job_1571067970221_0156/mr/job_1571067970221_0156/.metadata/descriptor.properties

/user/test/.temp/job_1571067970221_0156/mr/job_1571067970221_0156/.metadata/schema.avsc

/user/test/.temp/job_1571067970221_0156/mr/job_1571067970221_0156/.metadata/schemas

/user/test/.temp/job_1571067970221_0156/mr/job_1571067970221_0156/.metadata/schemas/1.avsc

/user/test/axe

/user/test/axe/.metadata

/user/test/axe/.metadata/descriptor.properties

/user/test/axe/.metadata/schema.avsc

/user/test/axe/.metadata/schemas

/user/test/axe/.metadata/schemas/1.avsc

once the import is complete:

hadoop fs -ls -R /user/test/

/user/test/axe

/user/test/axe/.metadata

/user/test/axe/.metadata/descriptor.properties

/user/test/axe/.metadata/schema.avsc

/user/test/axe/.metadata/schemas

/user/test/axe/.metadata/schemas/1.avsc

/user/test/axe/.signals

/user/test/axe/.signals/unbounded

/user/test/axe/679dadfc-3657-410b-a805-6c98b8d1720b.parquet

/user/test/axe/923bbf06-35d6-4156-8df3-a6e53ebf00f7.parquet

/user/test/axe/aef24eac-15dd-4ebc-a61a-68265d53a320.parquet

/user/test/axe/f903a079-87b3-48a0-bea6-aa02d92c5aac.parquet

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

NOTE:- Parquet is hard coded to write the temporary data in /tmp even though the target directory is different.

Kindly check /tmp for intermediate data, you will see it there.

Regards