failure in starting zookeeper service

Labels: Hortonworks Data Platform (HDP)

Created on 02-26-2017 09:47 PM - edited 08-19-2019 02:59 AM

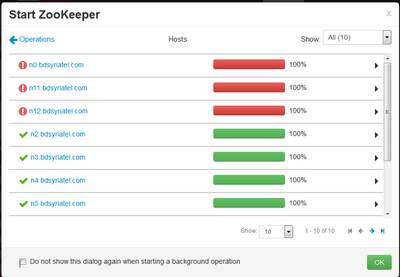

I installed HDP 2.5 successfully on 10 hosts running CentOS 7, but I can't start the ZooKeeper service. I have three ZooKeeper servers and 10 ZooKeeper clients installed. I get the following error:

stderr:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 189, in <module>

ZookeeperServer().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 56, in start

self.configure(env, upgrade_type=upgrade_type)

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 51, in configure

zookeeper(type='server', upgrade_type=upgrade_type)

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper.py", line 42, in zookeeper

conf_select.select(params.stack_name, "zookeeper", params.current_version)

File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/conf_select.py", line 315, in select

shell.checked_call(_get_cmd("set-conf-dir", package, version), logoutput=False, quiet=False, sudo=True)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of 'ambari-python-wrap /usr/bin/conf-select set-conf-dir --package zookeeper --stack-version 2.5.0.0-1245 --conf-version 0' returned 1. Traceback (most recent call last):

File "/usr/bin/conf-select", line 178, in <module>

setConfDir(options.pname, options.sver, options.cver)

File "/usr/bin/conf-select", line 136, in setConfDir

raise Exception("Expected confdir %s to be a symlink." % confdir)

Exception: Expected confdir /usr/hdp/2.5.0.0-1245/zookeeper/conf to be a symlink.

stdout:

2017-02-26 22:55:17,520 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.0.0-1245

2017-02-26 22:55:17,522 - Checking if need to create versioned conf dir /etc/hadoop/2.5.0.0-1245/0

2017-02-26 22:55:17,524 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-26 22:55:17,565 - call returned (1, '/etc/hadoop/2.5.0.0-1245/0 exist already', '')

2017-02-26 22:55:17,566 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-02-26 22:55:17,603 - checked_call returned (0, '')

2017-02-26 22:55:17,604 - Ensuring that hadoop has the correct symlink structure

2017-02-26 22:55:17,604 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-02-26 22:55:17,805 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.0.0-1245

2017-02-26 22:55:17,807 - Checking if need to create versioned conf dir /etc/hadoop/2.5.0.0-1245/0

2017-02-26 22:55:17,809 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-26 22:55:17,848 - call returned (1, '/etc/hadoop/2.5.0.0-1245/0 exist already', '')

2017-02-26 22:55:17,849 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-02-26 22:55:17,885 - checked_call returned (0, '')

2017-02-26 22:55:17,886 - Ensuring that hadoop has the correct symlink structure

2017-02-26 22:55:17,886 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-02-26 22:55:17,887 - Group['livy'] {}

2017-02-26 22:55:17,889 - Group['spark'] {}

2017-02-26 22:55:17,889 - Group['zeppelin'] {}

2017-02-26 22:55:17,889 - Group['hadoop'] {}

2017-02-26 22:55:17,890 - Group['users'] {}

2017-02-26 22:55:17,890 - Group['knox'] {}

2017-02-26 22:55:17,890 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,891 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,892 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,892 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,893 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,894 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-26 22:55:17,895 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,896 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-26 22:55:17,896 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-26 22:55:17,897 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,898 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,898 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,899 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,900 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,900 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-26 22:55:17,901 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,902 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,902 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,903 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,904 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,905 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,905 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,906 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,907 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-26 22:55:17,907 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2017-02-26 22:55:17,909 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2017-02-26 22:55:17,916 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2017-02-26 22:55:17,916 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2017-02-26 22:55:17,917 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2017-02-26 22:55:17,918 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2017-02-26 22:55:17,924 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2017-02-26 22:55:17,924 - Group['hdfs'] {}

2017-02-26 22:55:17,925 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': [u'hadoop', u'hdfs']}

2017-02-26 22:55:17,925 - FS Type:

2017-02-26 22:55:17,925 - Directory['/etc/hadoop'] {'mode': 0755}

2017-02-26 22:55:17,939 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2017-02-26 22:55:17,939 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2017-02-26 22:55:17,954 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2017-02-26 22:55:17,962 - Skipping Execute[('setenforce', '0')] due to not_if

2017-02-26 22:55:17,962 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2017-02-26 22:55:17,965 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2017-02-26 22:55:17,965 - Changing owner for /var/run/hadoop from 1018 to root

2017-02-26 22:55:17,965 - Changing group for /var/run/hadoop from 1004 to root

2017-02-26 22:55:17,966 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2017-02-26 22:55:17,971 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2017-02-26 22:55:17,973 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2017-02-26 22:55:17,974 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2017-02-26 22:55:17,989 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs', 'group': 'hadoop'}

2017-02-26 22:55:17,990 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2017-02-26 22:55:17,991 - File['/usr/hdp/current/hadoop-client/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2017-02-26 22:55:17,997 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2017-02-26 22:55:18,001 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2017-02-26 22:55:18,256 - Checking if need to create versioned conf dir /etc/zookeeper/2.5.0.0-1245/0

2017-02-26 22:55:18,258 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-26 22:55:18,293 - call returned (1, '/etc/zookeeper/2.5.0.0-1245/0 exist already', '')

2017-02-26 22:55:18,293 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

Command failed after 1 tries

The ZooKeeper client started successfully on all nodes, but the ZooKeeper server failed on all three server nodes.

I tried this command on the three server nodes

ln -s /etc/zookeeper/2.5.0.0-1245/0 /usr/hdp/2.5.0.0-1245/zookeeper/conf

but the same error still exists.

Created 02-26-2017 10:20 PM

This looks like a symlink issue. Can you post the output of

ls -l /usr/hdp/2.5.0.0-1245/zookeeper/conf

It should have permissions like

lrwxrwxrwx. 1 root root

Also, to be safe, can you run the ln command manually on all the hosts? Make sure the permissions are correct as well.

Created 02-26-2017 10:36 PM

Dear Namit Maheshwari, thank you for your quick reply. This is the output of the command on the three server hosts:

[root@n12 ~]# ls -l /usr/hdp/2.5.0.0-1245/zookeeper/conf

total 16

lrwxrwxrwx 1 root root 29 Feb 26 21:19 0 -> /etc/zookeeper/2.5.0.0-1245/0

-rw-r--r-- 1 zookeeper hadoop 548 Feb 26 21:09 configuration.xsl

-rw-r--r-- 1 zookeeper hadoop 2444 Feb 26 21:09 log4j.properties

-rw-r--r-- 1 zookeeper hadoop 1048 Feb 26 21:09 zoo.cfg

-rw-r--r-- 1 zookeeper hadoop 310 Feb 26 21:09 zookeeper-env.sh

-rw-r--r-- 1 zookeeper hadoop 0 Feb 26 21:09 zoo_sample.cfg

Created 02-26-2017 10:53 PM

On my current working cluster, the output is something like:

[root@mycluster ~]# ls -l /usr/hdp/2.5.0.0-1245/zookeeper/conf

lrwxrwxrwx. 1 root root 28 Feb 26 01:52 /usr/hdp/2.5.0.0-1245/zookeeper/conf -> /etc/zookeeper/2.5.0.0-1245/0

So the output should show a single symlink, not the other files listed above.

Also, I see the link you have is something like

0 -> /etc/zookeeper/2.5.0.0-1245/0

which does not seem to be correct.
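The nested entry 0 -> /etc/zookeeper/2.5.0.0-1245/0 is consistent with how ln -s behaves when its last argument is an existing directory: the link is created inside that directory, named after the last component of the target, and the directory itself stays a directory. A minimal sketch that reproduces this in a scratch directory (the layout under the temporary directory is a stand-in, not the real cluster paths):

```shell
#!/bin/sh
# Scratch directory standing in for the real layout; all paths are hypothetical.
demo=$(mktemp -d)
mkdir -p "$demo/etc/zookeeper/0" "$demo/hdp/zookeeper/conf"

# conf already exists as a real directory, so ln places the link INSIDE it,
# named after the last path component of the target ("0").
ln -s "$demo/etc/zookeeper/0" "$demo/hdp/zookeeper/conf"

# Listing the directory shows the nested "0 -> ..." entry.
ls -l "$demo/hdp/zookeeper/conf"

# conf itself is still a plain directory, which is what conf-select complains about.
if [ -L "$demo/hdp/zookeeper/conf" ]; then
    conf_kind=symlink
else
    conf_kind=directory
fi
echo "conf is a $conf_kind"
```

On a real host, ls -ld (note the d) or test -L on the conf path reports on the entry itself rather than listing its contents.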

Created 02-27-2017 01:09 AM

Dear Namit Maheshwari, I deleted the directory /usr/hdp/2.5.0.0-1245/zookeeper/conf, but Ambari re-created it along with all of its previous contents.

The ZooKeeper client starts successfully, and on the client hosts that directory has the same contents. For example, on host "n4", which is a ZooKeeper client, I get this:

[root@n4 ~]# ls -l /usr/hdp/2.5.0.0-1245/zookeeper/conf

total 16

-rw-r--r-- 1 zookeeper hadoop 548 Feb 9 01:22 configuration.xsl

-rw-r--r-- 1 zookeeper hadoop 2444 Feb 9 01:22 log4j.properties

-rw-r--r-- 1 zookeeper hadoop 1048 Feb 9 01:22 zoo.cfg

-rw-r--r-- 1 zookeeper hadoop 310 Feb 9 01:22 zookeeper-env.sh

-rw-r--r-- 1 zookeeper hadoop 0 Feb 9 01:22 zoo_sample.cfg

Created 02-27-2017 05:18 AM

Can you try if the below fix works for you:

Created 02-27-2017 11:53 PM

I solved the original problem, but ZooKeeper is still not starting. I solved it by deleting the directory /usr/hdp/2.5.0.0-1245/zookeeper/conf and then creating the correct symbolic link:

[root@n12 ~]# rm -rf /usr/hdp/2.5.0.0-1245/zookeeper/conf

[root@n12 ~]# ln -s /etc/zookeeper/2.5.0.0-1245/0 /usr/hdp/2.5.0.0-1245/zookeeper/conf
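The same replacement can be sketched in a scratch directory so it can be tried safely first. Moving the old directory aside instead of deleting it is an added precaution, not what the post did; it only keeps a copy of the existing config files. All paths below the temporary directory are stand-ins, and the zoo.cfg content is a placeholder.

```shell
#!/bin/sh
# Hypothetical scratch layout mirroring the paths discussed in the thread.
demo=$(mktemp -d)
target="$demo/etc/zookeeper/2.5.0.0-1245/0"
confdir="$demo/usr/hdp/2.5.0.0-1245/zookeeper/conf"
mkdir -p "$target" "$confdir"
echo "tickTime=2000" > "$confdir/zoo.cfg"   # placeholder config file

# If conf is a real directory (not a symlink), move it aside first so that
# ln does not create the link inside it.
if [ -d "$confdir" ] && [ ! -L "$confdir" ]; then
    mv "$confdir" "$confdir.bak"
fi

# Now the link name does not exist, so ln creates conf itself as the symlink.
ln -s "$target" "$confdir"

# Show where conf points.
readlink "$confdir"
```

After this, ls -ld on the conf path should show a single lrwxrwxrwx entry pointing at the versioned directory under /etc/zookeeper.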

During installation I faced the same problem in

But I solved it and the installation was completed successfully on all nodes for all services.

The new problem is as follows:

resource_management.core.exceptions.Fail: Execution of 'source /usr/hdp/current/zookeeper-server/conf/zookeeper-env.sh ; env ZOOCFGDIR=/usr/hdp/current/zookeeper-server/conf ZOOCFG=zoo.cfg /usr/hdp/current/zookeeper-server/bin/zkServer.sh start' returned 127. env: /usr/hdp/current/zookeeper-server/bin/zkServer.sh: No such file or directory

I searched for the file zkServer.sh and found it in /var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/files/

I then copied all the files from that directory into /usr/hdp/2.5.0.0-1245/zookeeper/bin, a directory I created manually.

But now I get the following error, which is about permissions on the directory /usr/hdp/2.5.0.0-1245/zookeeper/bin:

stderr:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 189, in <module>

ZookeeperServer().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 57, in start

zookeeper_service(action='start', upgrade_type=upgrade_type)

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_service.py", line 52, in zookeeper_service

user=params.zk_user

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 273, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of 'source /usr/hdp/current/zookeeper-server/conf/zookeeper-env.sh ; env ZOOCFGDIR=/usr/hdp/current/zookeeper-server/conf ZOOCFG=zoo.cfg /usr/hdp/current/zookeeper-server/bin/zkServer.sh start' returned 126. env: /usr/hdp/current/zookeeper-server/bin/zkServer.sh: Permission denied

stdout:

2017-02-28 01:05:40,203 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.0.0-1245

2017-02-28 01:05:40,207 - Checking if need to create versioned conf dir /etc/hadoop/2.5.0.0-1245/0

2017-02-28 01:05:40,210 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-28 01:05:40,243 - call returned (1, '/etc/hadoop/2.5.0.0-1245/0 exist already', '')

2017-02-28 01:05:40,244 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-02-28 01:05:40,278 - checked_call returned (0, '')

2017-02-28 01:05:40,279 - Ensuring that hadoop has the correct symlink structure

2017-02-28 01:05:40,279 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-02-28 01:05:40,495 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.0.0-1245

2017-02-28 01:05:40,497 - Checking if need to create versioned conf dir /etc/hadoop/2.5.0.0-1245/0

2017-02-28 01:05:40,499 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-28 01:05:40,528 - call returned (1, '/etc/hadoop/2.5.0.0-1245/0 exist already', '')

2017-02-28 01:05:40,528 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-02-28 01:05:40,555 - checked_call returned (0, '')

2017-02-28 01:05:40,556 - Ensuring that hadoop has the correct symlink structure

2017-02-28 01:05:40,556 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-02-28 01:05:40,557 - Group['livy'] {}

2017-02-28 01:05:40,558 - Group['spark'] {}

2017-02-28 01:05:40,559 - Group['zeppelin'] {}

2017-02-28 01:05:40,559 - Group['hadoop'] {}

2017-02-28 01:05:40,559 - Group['users'] {}

2017-02-28 01:05:40,559 - Group['knox'] {}

2017-02-28 01:05:40,560 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,560 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,561 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,562 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,563 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,563 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-28 01:05:40,564 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,565 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-28 01:05:40,565 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-28 01:05:40,566 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,567 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,568 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,568 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,569 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,570 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-02-28 01:05:40,570 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,571 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,572 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,573 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,573 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,574 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,575 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,575 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,576 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-02-28 01:05:40,577 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2017-02-28 01:05:40,578 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2017-02-28 01:05:40,585 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2017-02-28 01:05:40,585 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2017-02-28 01:05:40,586 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2017-02-28 01:05:40,587 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2017-02-28 01:05:40,593 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2017-02-28 01:05:40,593 - Group['hdfs'] {}

2017-02-28 01:05:40,593 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': [u'hadoop', u'hdfs']}

2017-02-28 01:05:40,594 - FS Type:

2017-02-28 01:05:40,594 - Directory['/etc/hadoop'] {'mode': 0755}

2017-02-28 01:05:40,608 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2017-02-28 01:05:40,608 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2017-02-28 01:05:40,623 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2017-02-28 01:05:40,648 - Skipping Execute[('setenforce', '0')] due to not_if

2017-02-28 01:05:40,648 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2017-02-28 01:05:40,651 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2017-02-28 01:05:40,652 - Creating directory Directory['/var/run/hadoop'] since it doesn't exist.

2017-02-28 01:05:40,652 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2017-02-28 01:05:40,663 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2017-02-28 01:05:40,669 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2017-02-28 01:05:40,677 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2017-02-28 01:05:40,699 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs', 'group': 'hadoop'}

2017-02-28 01:05:40,705 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2017-02-28 01:05:40,714 - File['/usr/hdp/current/hadoop-client/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2017-02-28 01:05:40,723 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2017-02-28 01:05:40,728 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2017-02-28 01:05:40,979 - Checking if need to create versioned conf dir /etc/zookeeper/2.5.0.0-1245/0

2017-02-28 01:05:40,981 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-28 01:05:41,007 - call returned (1, '/etc/zookeeper/2.5.0.0-1245/0 exist already', '')

2017-02-28 01:05:41,007 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-02-28 01:05:41,032 - checked_call returned (0, '')

2017-02-28 01:05:41,033 - Ensuring that zookeeper has the correct symlink structure

2017-02-28 01:05:41,033 - Execute[('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245')] {'sudo': True}

2017-02-28 01:05:41,063 - After ('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245'), reloaded module status_params

2017-02-28 01:05:41,066 - After ('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245'), reloaded module params_linux

2017-02-28 01:05:41,067 - After ('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245'), reloaded module params

2017-02-28 01:05:41,067 - Directory['/usr/hdp/current/zookeeper-server/conf'] {'owner': 'zookeeper', 'create_parents': True, 'group': 'hadoop'}

2017-02-28 01:05:41,073 - File['/usr/hdp/current/zookeeper-server/conf/zookeeper-env.sh'] {'owner': 'zookeeper', 'content': InlineTemplate(...), 'group': 'hadoop'}

2017-02-28 01:05:41,073 - Writing File['/usr/hdp/current/zookeeper-server/conf/zookeeper-env.sh'] because contents don't match

2017-02-28 01:05:41,079 - File['/usr/hdp/current/zookeeper-server/conf/zoo.cfg'] {'owner': 'zookeeper', 'content': Template('zoo.cfg.j2'), 'group': 'hadoop', 'mode': None}

2017-02-28 01:05:41,081 - File['/usr/hdp/current/zookeeper-server/conf/configuration.xsl'] {'owner': 'zookeeper', 'content': Template('configuration.xsl.j2'), 'group': 'hadoop', 'mode': None}

2017-02-28 01:05:41,081 - Directory['/var/run/zookeeper'] {'owner': 'zookeeper', 'create_parents': True, 'group': 'hadoop', 'mode': 0755}

2017-02-28 01:05:41,082 - Directory['/var/log/zookeeper'] {'owner': 'zookeeper', 'create_parents': True, 'group': 'hadoop', 'mode': 0755}

2017-02-28 01:05:41,082 - Directory['/hadoop/zookeeper'] {'owner': 'zookeeper', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2017-02-28 01:05:41,083 - File['/hadoop/zookeeper/myid'] {'content': '3', 'mode': 0644}

2017-02-28 01:05:41,086 - File['/usr/hdp/current/zookeeper-server/conf/log4j.properties'] {'content': ..., 'owner': 'zookeeper', 'group': 'hadoop', 'mode': 0644}

2017-02-28 01:05:41,087 - File['/usr/hdp/current/zookeeper-server/conf/zoo_sample.cfg'] {'owner': 'zookeeper', 'group': 'hadoop'}

2017-02-28 01:05:41,096 - Checking if need to create versioned conf dir /etc/zookeeper/2.5.0.0-1245/0

2017-02-28 01:05:41,100 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-02-28 01:05:41,139 - call returned (1, '/etc/zookeeper/2.5.0.0-1245/0 exist already', '')

2017-02-28 01:05:41,140 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-02-28 01:05:41,176 - checked_call returned (0, '')

2017-02-28 01:05:41,177 - Ensuring that zookeeper has the correct symlink structure

2017-02-28 01:05:41,178 - Execute[('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245')] {'sudo': True}

2017-02-28 01:05:41,221 - After ('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245'), reloaded module status_params

2017-02-28 01:05:41,227 - After ('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245'), reloaded module params_linux

2017-02-28 01:05:41,228 - After ('ambari-python-wrap', u'/usr/bin/hdp-select', 'set', 'zookeeper-server', u'2.5.0.0-1245'), reloaded module params

2017-02-28 01:05:41,229 - Execute['source /usr/hdp/current/zookeeper-server/conf/zookeeper-env.sh ; env ZOOCFGDIR=/usr/hdp/current/zookeeper-server/conf ZOOCFG=zoo.cfg /usr/hdp/current/zookeeper-server/bin/zkServer.sh start'] {'not_if': 'ls /var/run/zookeeper/zookeeper_server.pid >/dev/null 2>&1 && ps -p `cat /var/run/zookeeper/zookeeper_server.pid` >/dev/null 2>&1', 'user': 'zookeeper'}

2017-02-28 01:05:41,333 - Execute['find /var/log/zookeeper -maxdepth 1 -type f -name '*' -exec echo '==> {} <==' \; -exec tail -n 40 {} \;'] {'logoutput': True, 'ignore_failures': True, 'user': 'zookeeper'}

Command failed after 1 tries
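The two return codes in this post are worth distinguishing: env exits with 127 when the command cannot be found and with 126 when it is found but cannot be executed. That matches the change from "No such file or directory" to "Permission denied" once the files had been copied in by hand. A small sketch with a stub script in a scratch directory (the stub is hypothetical, not the real zkServer.sh):

```shell
#!/bin/sh
demo=$(mktemp -d)
script="$demo/zkServer.sh"

# Case 1: the file does not exist, so env fails with "No such file or directory".
missing_rc=0
env "$script" start 2>/dev/null || missing_rc=$?

# Case 2: the file exists but has no execute bit, so env fails with "Permission denied".
printf '#!/bin/sh\necho "stub started"\n' > "$script"
chmod 644 "$script"
noexec_rc=0
env "$script" start 2>/dev/null || noexec_rc=$?

echo "missing: $missing_rc, not executable: $noexec_rc"
```

So a 126 here suggests the script is present but lacks execute permission for the zookeeper user (or a parent directory is not traversable), which ls -l on the bin directory would confirm.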

Created 02-28-2017 12:00 AM

I think you need to create a symlink rather than copying files:

https://www.mail-archive.com/dev@ambari.apache.org/msg60487.html

ln -s /usr/hdp/2.5.0.0-1245/zookeeper /usr/hdp/current/zookeeper-server
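A sketch of the suggested link in a scratch directory with a stub zkServer.sh (hypothetical), followed by a check that the path Ambari invokes resolves through the link. The log above shows Ambari calling hdp-select to set zookeeper-server, so the links under /usr/hdp/current appear to be managed by that tool; treat the manual ln as a diagnostic step under that assumption.

```shell
#!/bin/sh
# Hypothetical scratch layout; the real paths live under /usr/hdp.
demo=$(mktemp -d)
versioned="$demo/usr/hdp/2.5.0.0-1245/zookeeper"
current="$demo/usr/hdp/current/zookeeper-server"
mkdir -p "$versioned/bin" "$demo/usr/hdp/current"

# Stub standing in for the packaged start script.
printf '#!/bin/sh\necho "zkServer stub: $1"\n' > "$versioned/bin/zkServer.sh"
chmod 755 "$versioned/bin/zkServer.sh"

# zookeeper-server must not already exist as a directory,
# otherwise the link would be created inside it.
ln -s "$versioned" "$current"

# Confirm the link target and that the script is reachable through it.
resolved=$(readlink "$current")
echo "zookeeper-server -> $resolved"
ls -l "$current/bin/zkServer.sh"
```

If the real /usr/hdp/2.5.0.0-1245/zookeeper has no bin directory at all, the link alone will not help, and the package contents on that host are worth checking.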

Created 02-28-2017 09:20 PM

Were you able to resolve the problem?

Created 03-10-2017 09:36 PM

Checking in to see whether the issue is resolved.