Cloudera Community: Support Questions


failure in starting zookeeper service

Labels: Hortonworks Data Platform (HDP)

Created on 02-26-2017 09:47 PM - edited 08-19-2019 02:59 AM


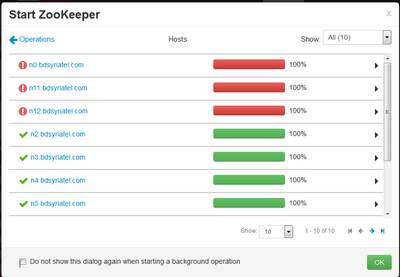

I installed HDP 2.5 successfully on 10 hosts running CentOS 7, but I can't start the ZooKeeper service. I have three ZooKeeper servers and 10 ZooKeeper clients installed. I get the following error:

stderr:

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 189, in <module>
    ZookeeperServer().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 56, in start
    self.configure(env, upgrade_type=upgrade_type)
  File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper_server.py", line 51, in configure
    zookeeper(type='server', upgrade_type=upgrade_type)
  File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk
    return fn(*args, **kwargs)
  File "/var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/scripts/zookeeper.py", line 42, in zookeeper
    conf_select.select(params.stack_name, "zookeeper", params.current_version)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/conf_select.py", line 315, in select
    shell.checked_call(_get_cmd("set-conf-dir", package, version), logoutput=False, quiet=False, sudo=True)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call
    raise Fail(err_msg)
resource_management.core.exceptions.Fail: Execution of 'ambari-python-wrap /usr/bin/conf-select set-conf-dir --package zookeeper --stack-version 2.5.0.0-1245 --conf-version 0' returned 1. Traceback (most recent call last):
  File "/usr/bin/conf-select", line 178, in <module>
    setConfDir(options.pname, options.sver, options.cver)
  File "/usr/bin/conf-select", line 136, in setConfDir
    raise Exception("Expected confdir %s to be a symlink." % confdir)
Exception: Expected confdir /usr/hdp/2.5.0.0-1245/zookeeper/conf to be a symlink.
```

stdout:

```
2017-02-26 22:55:17,520 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.0.0-1245
2017-02-26 22:55:17,522 - Checking if need to create versioned conf dir /etc/hadoop/2.5.0.0-1245/0
2017-02-26 22:55:17,524 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}
2017-02-26 22:55:17,565 - call returned (1, '/etc/hadoop/2.5.0.0-1245/0 exist already', '')
2017-02-26 22:55:17,566 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}
2017-02-26 22:55:17,603 - checked_call returned (0, '')
2017-02-26 22:55:17,604 - Ensuring that hadoop has the correct symlink structure
2017-02-26 22:55:17,604 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf
2017-02-26 22:55:17,805 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.0.0-1245
2017-02-26 22:55:17,807 - Checking if need to create versioned conf dir /etc/hadoop/2.5.0.0-1245/0
2017-02-26 22:55:17,809 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}
2017-02-26 22:55:17,848 - call returned (1, '/etc/hadoop/2.5.0.0-1245/0 exist already', '')
2017-02-26 22:55:17,849 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}
2017-02-26 22:55:17,885 - checked_call returned (0, '')
2017-02-26 22:55:17,886 - Ensuring that hadoop has the correct symlink structure
2017-02-26 22:55:17,886 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf
2017-02-26 22:55:17,887 - Group['livy'] {}
2017-02-26 22:55:17,889 - Group['spark'] {}
2017-02-26 22:55:17,889 - Group['zeppelin'] {}
2017-02-26 22:55:17,889 - Group['hadoop'] {}
2017-02-26 22:55:17,890 - Group['users'] {}
2017-02-26 22:55:17,890 - Group['knox'] {}
2017-02-26 22:55:17,890 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,891 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,892 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,892 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,893 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,894 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}
2017-02-26 22:55:17,895 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,896 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}
2017-02-26 22:55:17,896 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}
2017-02-26 22:55:17,897 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,898 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,898 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,899 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,900 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,900 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}
2017-02-26 22:55:17,901 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,902 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,902 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,903 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,904 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,905 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,905 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,906 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,907 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}
2017-02-26 22:55:17,907 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2017-02-26 22:55:17,909 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2017-02-26 22:55:17,916 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if
2017-02-26 22:55:17,916 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}
2017-02-26 22:55:17,917 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2017-02-26 22:55:17,918 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}
2017-02-26 22:55:17,924 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if
2017-02-26 22:55:17,924 - Group['hdfs'] {}
2017-02-26 22:55:17,925 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': [u'hadoop', u'hdfs']}
2017-02-26 22:55:17,925 - FS Type:
2017-02-26 22:55:17,925 - Directory['/etc/hadoop'] {'mode': 0755}
2017-02-26 22:55:17,939 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2017-02-26 22:55:17,939 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}
2017-02-26 22:55:17,954 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}
2017-02-26 22:55:17,962 - Skipping Execute[('setenforce', '0')] due to not_if
2017-02-26 22:55:17,962 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}
2017-02-26 22:55:17,965 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}
2017-02-26 22:55:17,965 - Changing owner for /var/run/hadoop from 1018 to root
2017-02-26 22:55:17,965 - Changing group for /var/run/hadoop from 1004 to root
2017-02-26 22:55:17,966 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}
2017-02-26 22:55:17,971 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}
2017-02-26 22:55:17,973 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}
2017-02-26 22:55:17,974 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}
2017-02-26 22:55:17,989 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs', 'group': 'hadoop'}
2017-02-26 22:55:17,990 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}
2017-02-26 22:55:17,991 - File['/usr/hdp/current/hadoop-client/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}
2017-02-26 22:55:17,997 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}
2017-02-26 22:55:18,001 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}
2017-02-26 22:55:18,256 - Checking if need to create versioned conf dir /etc/zookeeper/2.5.0.0-1245/0
2017-02-26 22:55:18,258 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}
2017-02-26 22:55:18,293 - call returned (1, '/etc/zookeeper/2.5.0.0-1245/0 exist already', '')
2017-02-26 22:55:18,293 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'zookeeper', '--stack-version', u'2.5.0.0-1245', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

Command failed after 1 tries
```
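The `Fail` above comes from `conf-select` refusing to continue because `/usr/hdp/2.5.0.0-1245/zookeeper/conf` is not a symlink. Before changing anything, it can help to see what that path actually is on each server node. A minimal Python 3 sketch; `describe_conf_dir` is a hypothetical helper that mirrors only the condition named in the exception message, not `conf-select`'s own logic:

```python
import os


def describe_conf_dir(path):
    """Classify `path` the way the conf-select error message implies.

    Hypothetical helper: it only checks what the exception text says
    ("Expected confdir ... to be a symlink"), nothing more.
    """
    if os.path.islink(path):
        # A symlink: report where it points and whether that target exists.
        target = os.readlink(path)
        status = "ok" if os.path.exists(path) else "dangling"
        return "symlink -> %s (%s)" % (target, status)
    if os.path.isdir(path):
        # A real directory: the case that triggers the exception above.
        return "real directory (not a symlink)"
    if os.path.exists(path):
        return "regular file (not a symlink)"
    return "missing"


if __name__ == "__main__":
    print(describe_conf_dir("/usr/hdp/2.5.0.0-1245/zookeeper/conf"))
```

Checking `os.path.islink` before `os.path.isdir` matters: `isdir` follows symlinks, so a correct link to a directory would otherwise be reported as a real directory.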

The ZooKeeper client started successfully on all nodes, but the ZooKeeper server failed on all three server nodes.

I tried this command on the three server nodes:

```
ln -s /etc/zookeeper/2.5.0.0-1245/0 /usr/hdp/2.5.0.0-1245/zookeeper/conf
```

but the same error still exists.
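One likely reason the `ln -s` command did not change the error: when the destination (`/usr/hdp/2.5.0.0-1245/zookeeper/conf`) already exists as a directory, `ln -s TARGET DIR` creates the new link *inside* that directory instead of replacing it, so `conf` stays a real directory. A minimal Python 3 sketch of replacing the directory with a symlink while keeping the old contents as a backup; `replace_dir_with_symlink` and the `.bak` suffix are assumptions for illustration, not part of Ambari or HDP:

```python
import os


def replace_dir_with_symlink(conf_dir, target, backup_suffix=".bak"):
    """Make `conf_dir` a symlink to `target`.

    Hypothetical helper for illustration. A real directory at conf_dir is
    renamed to conf_dir + backup_suffix rather than deleted. Returns the
    backup path, or None if nothing had to be moved aside.
    """
    if os.path.islink(conf_dir):
        return None  # already a symlink; leave it alone
    if not os.path.isdir(target):
        raise FileNotFoundError("link target is not a directory: %s" % target)
    backup = None
    if os.path.isdir(conf_dir):
        backup = conf_dir + backup_suffix
        if os.path.lexists(backup):
            raise FileExistsError("backup path already exists: %s" % backup)
        os.rename(conf_dir, backup)  # move the real directory out of the way
    os.symlink(target, conf_dir)  # creates conf_dir -> target
    return backup
```

With the paths from the post, the call would be `replace_dir_with_symlink("/usr/hdp/2.5.0.0-1245/zookeeper/conf", "/etc/zookeeper/2.5.0.0-1245/0")`, run as root on each server node. Renaming instead of deleting keeps any hand-edited `zoo.cfg` recoverable; compare the backup with the files under the link target before removing it.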

Created 03-06-2017 02:01 PM


Dear Namit Maheshwari

I solved all the errors, but now ZooKeeper starts for about 10 seconds and then stops. There are no logs in the log directory. I tried to start ZooKeeper from the command line, but I get this:

```
[root@n12 ~]# /usr/hdp/2.5.0.0-1245/zookeeper/bin/zkServer.sh start
JMX enabled by default
Using config: /etc/zookeeper/zoo.cfg
Starting zookeeper ... STARTED
[root@n12 ~]# Error: Could not find or load main class org.apache.zookeeper.server.quorum.QuorumPeerMain
```
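The `STARTED` line appears to only mean that `zkServer.sh` launched the Java process in the background; the JVM then exits with `Could not find or load main class ...QuorumPeerMain`, which usually means no jar on its classpath contains that class. A minimal Python 3 sketch for checking which jars under an install directory actually contain it; `jars_containing` is a hypothetical helper, the search root (for example `/usr/hdp/2.5.0.0-1245/zookeeper`) is an assumption, and finding the jar does not prove that `zkServer.sh` puts it on the classpath:

```python
import os
import zipfile

# Path of the class file inside a jar for the main class named in the error.
CLASS_ENTRY = "org/apache/zookeeper/server/quorum/QuorumPeerMain.class"


def jars_containing(root, entry=CLASS_ENTRY):
    """Return sorted paths of .jar files under `root` that contain `entry`."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(".jar"):
                continue
            path = os.path.join(dirpath, name)
            try:
                with zipfile.ZipFile(path) as jar:
                    if entry in jar.namelist():
                        hits.append(path)
            except (zipfile.BadZipFile, OSError):
                # Corrupt jar or dangling symlink: skip it in this check.
                continue
    return sorted(hits)
```

An empty result for the ZooKeeper install directory would point at a missing or broken package rather than a configuration problem.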

Created 11-23-2017 11:02 AM


Please, how did you solve the permission problems?

Created 03-06-2017 02:30 PM


You mentioned that "There are no logs in the log directory." That could be because the log file path is missing the "${zookeeper.log.dir}" prefix.

Please check whether "${zookeeper.log.dir}" is included in the ZooKeeper log file path in your Ambari config:

Ambari UI --> "Zookeeper" --> "Configs" --> Advanced --> "Advanced zookeeper-log4j"


Change from:

```
log4j.appender.ROLLINGFILE.File=zookeeper.log
```

to the following:

```
log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/zookeeper.log
```

After making the change, restart the ZooKeeper service and then please share the log "${zookeeper.log.dir}/zookeeper.log".
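Why the `${zookeeper.log.dir}` prefix matters: log4j substitutes `${...}` variables from Java system properties (ZooKeeper's start script typically passes `-Dzookeeper.log.dir=...`), while a bare relative name such as `zookeeper.log` is resolved against the process's working directory, not the configured log directory. A minimal Python 3 model of that behavior; `expand_placeholders`, `resolve_log_file`, and the `/var/log/zookeeper` value are assumptions for illustration, and log4j's real lookup also falls back to properties defined in the same file:

```python
import os
import re

# Matches ${name} placeholders in log4j-style property values.
_PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def expand_placeholders(value, props):
    """Replace each ${name} with props[name].

    Simplified model: like log4j 1.x, an undefined variable becomes
    an empty string.
    """
    return _PLACEHOLDER.sub(lambda m: props.get(m.group(1), ""), value)


def resolve_log_file(value, props, cwd):
    """Where the log file would land: relative paths resolve against cwd."""
    path = expand_placeholders(value, props)
    return os.path.normpath(os.path.join(cwd, path))


if __name__ == "__main__":
    props = {"zookeeper.log.dir": "/var/log/zookeeper"}  # assumed value
    print(resolve_log_file("zookeeper.log", props, "/root"))
    print(resolve_log_file("${zookeeper.log.dir}/zookeeper.log", props, "/root"))
```

With the bare value, the log would end up in whatever directory the server was started from (here `/root`), which could explain an empty log directory.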


Created 11-23-2017 11:05 AM


I get this error while trying to start ZooKeeper:

```
/usr/hdfp/2.6.3-235/zookeeper/bin/zkServer.sh start
/zkServer.sh: Permission denied
```
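A `Permission denied` when running a script directly usually means the file lacks the execute bit for the invoking user, or sits on a filesystem mounted `noexec`; running it as `bash zkServer.sh start` needs only read permission. A minimal Python 3 sketch that checks the execute permission and can add execute bits; `ensure_executable` is a hypothetical helper, and granting execute only where read is already allowed is a design choice of this sketch:

```python
import os
import stat


def ensure_executable(path, fix=False):
    """Return True if `path` is executable by the current user.

    With fix=True, add the execute bit for each class (user, group,
    other) that already has read permission, then check again.
    """
    if os.access(path, os.X_OK):
        return True
    if not fix:
        return False
    mode = os.stat(path).st_mode
    # Grant execute only to the classes that can already read the file.
    if mode & stat.S_IRUSR:
        mode |= stat.S_IXUSR
    if mode & stat.S_IRGRP:
        mode |= stat.S_IXGRP
    if mode & stat.S_IROTH:
        mode |= stat.S_IXOTH
    os.chmod(path, stat.S_IMODE(mode))
    return os.access(path, os.X_OK)
```

If the function still returns `False` after fixing the mode, a `noexec` mount or a security policy such as SELinux is the next thing to check.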

Created on 03-06-2017 02:58 PM - edited 08-19-2019 02:59 AM


Dear @Bangalore

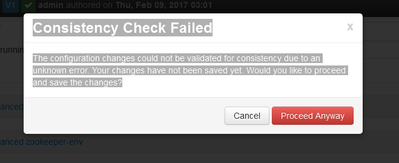

I think there are no logs because ZooKeeper doesn't start. Anyway, I tried to change the property as you recommended. I copied the value ${zookeeper.log.dir}/zookeeper.log and clicked Override; it then said that there is no configuration group and that I have to create a new one. I created a new one, but I get this error:

Consistency Check Failed: The configuration changes could not be validated for consistency due to an unknown error. Your changes have not been saved yet. Would you like to proceed and save the changes?


Created 05-09-2017 06:12 PM


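I could at least start ZooKeeper by doing this (adjust the paths to your environment):

```
# recreate the missing bin directory of the ZooKeeper install
cd /usr/hdp/2.5.3.0-37/zookeeper
mkdir bin
cd bin
# copy the files from the Ambari agent's cached ZooKeeper service package
cp /var/lib/ambari-agent/cache/common-services/ZOOKEEPER/3.4.5/package/files/* .
# point the "current" zookeeper-server link at the ZooKeeper install
ln -s /usr/hdp/2.3.0-37/zookeeper /usr/hdp/current/zookeeper-server
```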
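Note that these commands mix two version strings (`2.5.3.0-37` in the `cd`, `2.3.0-37` in the `ln -s`); if the link target does not exist, `/usr/hdp/current/zookeeper-server` becomes a dangling symlink. A minimal Python 3 sketch that lists entries in a `current`-style directory that do not resolve into one chosen version directory; `mismatched_current_links` is a hypothetical helper, and the assumption that every link should point into a single installed version is this sketch's, not something HDP documents here:

```python
import os


def mismatched_current_links(current_dir, version_dir):
    """List (name, link text, problem) for links in `current_dir` that do
    not resolve into `version_dir`, e.g. /usr/hdp/current vs /usr/hdp/2.5.3.0-37.

    Hypothetical helper; dangling links are reported as "dangling".
    """
    version_root = os.path.realpath(version_dir)
    problems = []
    for name in sorted(os.listdir(current_dir)):
        link = os.path.join(current_dir, name)
        if not os.path.islink(link):
            continue  # only symlinks are of interest
        resolved = os.path.realpath(link)
        if not os.path.exists(resolved):
            problems.append((name, os.readlink(link), "dangling"))
        elif os.path.commonpath([resolved, version_root]) != version_root:
            problems.append((name, os.readlink(link), "other version"))
    return problems
```

For the post above, calling it with `/usr/hdp/current` and `/usr/hdp/2.5.3.0-37` would flag `zookeeper-server` if `/usr/hdp/2.3.0-37/zookeeper` does not exist on that host.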

The source for the last command in this sequence was: https://github.com/KaveIO/AmbariKave/wiki/Detailed-Guides-Troubleshooting
