hdfs-datanode size

Labels: Apache Hive, HDFS

Hello,

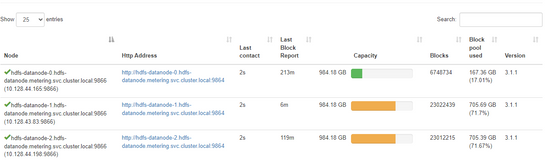

We want to break down the files/data stored on these DataNodes.

Is this the correct command (or path) to check how the data adds up to 705 GB?

hdfs dfs -du -v /operator_metering/storage

Created 09-20-2023 11:52 AM

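Hi,

This command lists each file and directory directly under the given path, with three columns (the -v option prints the column names as a header line):

1. SIZE: the logical size of the data

2. DISK_SPACE_CONSUMED_WITH_ALL_REPLICAS: the raw space used once replication is counted

3. FULL_PATH_NAME: the full path of the file or directory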
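A minimal sketch for totalling the output of the command in the question from a shell. It assumes the three-column layout listed above and that `-h` is not used, so every size is a plain byte count; the function name `sum_du` is hypothetical. Both totals are worth comparing: if the 705 GB figure is raw space on the DataNodes, it would be compared with the replica-inclusive second column rather than with SIZE.

```shell
#!/usr/bin/env bash
# Hypothetical helper: total the columns of `hdfs dfs -du -v <path>` output read on stdin.
# Assumes plain byte counts (no -h) and the three-column layout:
#   SIZE  DISK_SPACE_CONSUMED_WITH_ALL_REPLICAS  FULL_PATH_NAME
sum_du() {
  awk '
    # Skip the -v header line (and anything else whose first field is not a number).
    $1 ~ /^[0-9]+$/ { size += $1; raw += $2 }
    # %.0f instead of %d avoids 32-bit overflow in some awk implementations.
    END { printf "SIZE=%.0f RAW=%.0f\n", size, raw }
  '
}

# Usage on a cluster node:
#   hdfs dfs -du -v /operator_metering/storage | sum_du
```

The same two totals should also be printed on a single line by `hdfs dfs -du -s -v /operator_metering/storage`, since `-s` summarises the whole directory instead of listing its children.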

Lorenzo.

Created 09-21-2023 12:26 AM

Hi @Lorenzo,

I added up the bytes in this command's output, and the total doesn't reach 705 GB.

Is there another way to calculate how it adds up to 705 GB?

Thank you!

Created 09-29-2023 07:25 AM

@Noel_0317 If you want to know how the DataNode got up to 705 GB, you need to run du at the Linux filesystem level on the DataNode's block pool directory.

For example: du -s -h /data/dfs/dn/current/BP-331341740-172.25.35.200-1680172307700/

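/data/dfs/dn/ ==> DataNode data directory (dfs.datanode.data.dir)

BP-... ==> block pool directory used by the DataNode

The above should return 705 GB. The block pool directory contains the subdirectories that hold the file blocks present on this specific DataNode. If the DataNode has several data directories (typically one per disk), each has its own block pool directory, so run du on each one and add the results.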
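The block pool check above can be repeated for every data directory with a small loop. This is a sketch: the function name `dn_blockpool_usage` is hypothetical, and the arguments must be the local paths from `dfs.datanode.data.dir` (for example `/data/dfs/dn`), which `hdfs getconf -confKey dfs.datanode.data.dir` prints on a configured node. It uses `du -sk`, so each figure is allocated disk space in KiB, the same measure as the `du -s -h` command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: on-disk usage of each block pool under the given DataNode data dirs.
# Arguments: local data directories, e.g. /data/dfs/dn /data2/dfs/dn
# (strip any [DISK]-style prefix and file:// scheme from dfs.datanode.data.dir values first).
dn_blockpool_usage() {
  local total=0 dir bp kib
  for dir in "$@"; do
    for bp in "$dir"/current/BP-*; do
      [ -d "$bp" ] || continue          # no block pool here (glob did not match)
      kib=$(du -sk "$bp" | cut -f1)     # allocated space in KiB, like `du -s`
      echo "$kib KiB $bp"
      total=$((total + kib))
    done
  done
  echo "TOTAL $total KiB"
}

# Usage on the DataNode host (as a user that can read the data dirs, e.g. hdfs):
#   dn_blockpool_usage /data/dfs/dn
```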

By contrast, 'hdfs dfs -du' works on the HDFS namespace, so it reports file sizes across the entire cluster rather than the blocks stored on one DataNode.
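To see how much each DataNode holds without logging in to every host, the per-node section of the NameNode report can be filtered. A sketch, assuming the `hdfs dfsadmin -report` layout of recent Hadoop releases, where each DataNode section starts with a `Name:` line and contains a `DFS Used:` line in bytes; the cluster-wide summary at the top has no `Name:` line and is skipped. The function name `dn_dfs_used` is hypothetical, and `dfsadmin -report` normally requires HDFS superuser privileges.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<DFS Used bytes> <DataNode name>" for each DataNode section
# of `hdfs dfsadmin -report` output read on stdin.
dn_dfs_used() {
  awk '
    # Each DataNode section begins with "Name: <ip:port> (<hostname>)".
    /^Name: /     { sub(/^Name: /, ""); name = $0; next }
    # "DFS Used: <bytes> (<human-readable>)"; the cluster summary before any Name: is ignored.
    /^DFS Used: / { if (name != "") print $3, name }
  '
}

# Usage (typically as the hdfs superuser), largest DataNode first:
#   hdfs dfsadmin -report | dn_dfs_used | sort -rn
```

Added together over all DataNodes, these values should be roughly comparable to the replica-inclusive total for `/`, not to the logical SIZE of a single directory.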

Created 09-30-2023 03:58 AM

Hi @Noel_0317

Here are a few HDFS commands for checking usage at the HDFS filesystem level:

hdfs dfs -df /hadoop - Shows the capacity, free space, and used space of the filesystem.

hdfs dfs -df -h /hadoop - Same as above; the -h parameter formats the sizes in a human-readable fashion.

hdfs dfs -du /hadoop/file - Shows the amount of space, in bytes, used by the files that match the specified file pattern.

hdfs dfs -du -s /hadoop/file - Rather than showing the size of each individual file that matches the pattern, shows the total (summary) size.

hdfs dfs -du -h /hadoop/file - Shows the amount of space used by the files that match the specified file pattern, with sizes formatted in a human-readable fashion.
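When comparing a hand-summed byte count with a figure such as 705 GB, it helps to put both in the same unit. The `-h` output of these commands uses powers of 1024, so a `G` suffix means 1024^3 bytes, while dividing the same byte count by 10^9 gives a number about 7% larger. A minimal sketch; the function names `bytes_to_gib` and `bytes_to_gb` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: convert a raw byte count to binary (GiB) and decimal (GB) gigabytes.
bytes_to_gib() {
  # 1 GiB = 1024^3 bytes, the unit behind the "G" suffix of `hdfs dfs -du -h`
  awk -v b="$1" 'BEGIN { printf "%.2f GiB\n", b / (1024 * 1024 * 1024) }'
}

bytes_to_gb() {
  # 1 GB = 10^9 bytes (decimal)
  awk -v b="$1" 'BEGIN { printf "%.2f GB\n", b / 1000000000 }'
}

# Example: 705 GiB expressed both ways
#   bytes_to_gib 756987985920   # 705.00 GiB
#   bytes_to_gb  756987985920   # 756.99 GB
```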

Let me know if this helps.