Support Questions

- Cloudera Community


How to query data from MongoDB with Spark in Zeppelin?

- Labels: Apache Spark, Apache Zeppelin

Created 04-23-2018 09:38 AM


Hello, I recently installed the HDP 2.6 sandbox in VMware. I ran some Spark jobs to transform CSV data and saved the results in MongoDB. Now I want to build some chart dashboards from my MongoDB database, so I added the MongoDB interpreter to Zeppelin, but it does not seem to cope well, since one of my collections is 2 GB. So I decided to work with Spark instead. How can I read data from MongoDB? I need to import some libraries, for example com.mongodb.spark.sql._, com.mongodb.spark.config.ReadConfig and com.mongodb.spark.MongoSpark. How can I do this in Zeppelin?

In addition, the Spark interpreter seems better suited than the MongoDB interpreter in this context.

Thanks in advance

Created 06-01-2018 02:06 PM


There are two approaches you can take: one uses a package, the other uses jars (which you need to download).

Package approach

Add the following property to your Zeppelin Spark interpreter configuration:

spark.jars.packages = org.mongodb.spark:mongo-spark-connector_2.11:2.2.2

For more information, see https://spark-packages.org/package/mongodb/mongo-spark
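The coordinate above has the Maven form groupId:artifactId:version, with the Scala binary version baked into the artifactId (the `_2.11` suffix). spark.jars.packages takes a comma-separated list of such coordinates. A minimal sketch of assembling the value; `connector_package` and `packages_property` are hypothetical helpers, not part of Spark or Zeppelin:

```python
# Hypothetical helpers for building a spark.jars.packages value.
# Assumes the connector keeps the naming shown above:
# org.mongodb.spark:mongo-spark-connector_<scala>:<version>


def connector_package(scala_binary_version: str, connector_version: str) -> str:
    """Return the Maven coordinate for the MongoDB Spark connector."""
    # The Scala suffix must match the Scala version your Spark build uses
    # (2.11 for the Spark 2 shipped with HDP 2.6).
    return (
        "org.mongodb.spark:mongo-spark-connector_"
        f"{scala_binary_version}:{connector_version}"
    )


def packages_property(*coordinates: str) -> str:
    """spark.jars.packages accepts a comma-separated list of coordinates."""
    return ",".join(coordinates)


if __name__ == "__main__":
    value = packages_property(connector_package("2.11", "2.2.2"))
    print(f"spark.jars.packages = {value}")
```

Outside Zeppelin, the same value can be passed to spark-submit as `--packages`; in both cases Spark resolves the connector's transitive dependencies, and the setting only takes effect when the Spark context starts.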

Jar approach

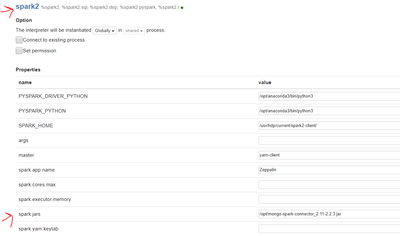

You need to add the MongoDB connector jars to the Spark interpreter configuration.

1. Download the MongoDB connector jar for Spark. Make sure it is built for the Scala version that matches your Spark version (for Spark 2, use the Scala 2.11 build).

2. Add the jars to the Zeppelin Spark interpreter using the spark.jars property:

spark.jars = /location/of/jars
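According to the Spark configuration reference, spark.jars is a comma-separated list of jars, so when more than one jar is needed (for example the connector plus the MongoDB Java driver it depends on) the paths are joined with commas. A small sketch that builds the value; the directory and file names are hypothetical placeholders:

```python
from pathlib import PurePosixPath
from typing import Sequence


def spark_jars_value(jar_dir: str, jar_names: Sequence[str]) -> str:
    """Return a spark.jars value: absolute jar paths joined with commas."""
    if not jar_names:
        raise ValueError("at least one jar is required")
    # PurePosixPath keeps forward slashes, matching the Linux paths on the sandbox.
    return ",".join(str(PurePosixPath(jar_dir) / name) for name in jar_names)


if __name__ == "__main__":
    # Hypothetical location and file names; use the versions you downloaded.
    print("spark.jars = " + spark_jars_value(
        "/opt/zeppelin/jars",
        ["mongo-spark-connector_2.11-2.2.2.jar", "mongo-java-driver-3.6.3.jar"],
    ))
```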

In both cases, you need to save and restart the interpreter.

HTH

*** If you found this answer addressed your question, please take a moment to login and click the "accept" link on the answer.

Created 06-01-2018 12:25 PM


Any updates on this issue? It is very important.


Created on 06-26-2018 08:39 AM - edited 08-18-2019 02:40 AM


@Felix Albani Can you kindly help me with this error?

My code is:

%spark2.pyspark

from pyspark.sql import SparkSession

my_spark = SparkSession.builder.appName("myApp").config("spark.mongodb.input.uri", "mongodb://127.0.0.1/db.col").config("spark.mongodb.output.uri", "mongodb://127.0.0.1/db.col").getOrCreate()

df = my_spark.read.format("com.mongodb.spark.sql.DefaultSource").load()

And the error is:

java.lang.NoClassDefFoundError: com/mongodb/ConnectionString

Here is the jar file I added to the Zeppelin interpreter:
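A NoClassDefFoundError for com/mongodb/ConnectionString usually means the connector classes were found but the MongoDB Java driver they depend on was not: ConnectionString belongs to the driver, not to the connector jar. The package approach pulls the driver in as a transitive dependency; with the jar approach the driver jar has to be listed as well. A hypothetical pre-flight check over jar file names (the prefixes are assumptions based on the usual Maven artifact names):

```python
from typing import Dict, List, Sequence

# Which jar provides what: com.mongodb.spark.* lives in the connector jar,
# com.mongodb.ConnectionString lives in the MongoDB Java driver.
# The file-name prefixes below are assumptions, not an official list.
REQUIRED: Dict[str, Sequence[str]] = {
    "MongoDB Spark connector": ("mongo-spark-connector_",),
    "MongoDB Java driver": ("mongo-java-driver-", "mongodb-driver-core-"),
}


def missing_dependencies(jar_names: Sequence[str]) -> List[str]:
    """Return the required components that no jar in jar_names provides."""
    missing = []
    for component, prefixes in REQUIRED.items():
        if not any(name.startswith(p) for name in jar_names for p in prefixes):
            missing.append(component)
    return missing


if __name__ == "__main__":
    # A classpath with only the connector jar configured.
    print(missing_dependencies(["mongo-spark-connector_2.11-2.2.2.jar"]))
```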

Created 06-01-2018 02:39 PM


Thanks @Felix Albani! I have a question: is the Spark interpreter the best choice in this case? Does Spark work with MongoDB the same way it works with HDFS (in terms of memory and speed)?

Created 06-01-2018 02:47 PM


@chaouki trabelsi The MongoDB connector is built to leverage Spark parallelism, so I think it is a good alternative in this case. If you have further questions on how to use it, or anything else, please open a separate thread. Thanks!
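To put that parallelism in numbers for the 2 GB collection from the question: the connector splits a collection into partitions, and Spark reads each partition as a separate task. Assuming a size-based partitioner with a 64 MB target (to my knowledge the default of the connector's spark.mongodb.input.partitionerOptions.partitionSizeMB option; check the documentation for your connector version), a back-of-the-envelope estimate looks like this. `estimate_partitions` is a hypothetical helper; the real partition count depends on the partitioner and the collection statistics.

```python
import math


def estimate_partitions(collection_size_mb: float, partition_size_mb: float = 64) -> int:
    """Rough number of Spark partitions for a size-based split of a collection."""
    if partition_size_mb <= 0:
        raise ValueError("partition_size_mb must be positive")
    # At least one partition, even for an empty or tiny collection.
    return max(1, math.ceil(collection_size_mb / partition_size_mb))


if __name__ == "__main__":
    print(estimate_partitions(2 * 1024))  # 2 GB collection → 32
```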

Created 09-18-2018 12:48 PM


Guys, I am having the following issue when trying to query MongoDB from Zeppelin with Spark:

java.lang.IllegalArgumentException: Missing collection name. Set via the 'spark.mongodb.input.uri' or 'spark.mongodb.input.collection' property

I have added mongo-spark-connector_2.11:2.2.2 to the dependencies of the spark2 interpreter.

My code is:

%spark2

import com.mongodb.spark._

spark.conf.set("spark.mongodb.input.uri", "mongodb://myip:myport/mydb.collection")

spark.conf.set("spark.mongodb.output.uri", "mongodb://myip:myport/mydb.collection")

val rdd = MongoSpark.load(sc)

I also tried:

%spark2

sc.stop()

import org.apache.spark.sql.SparkSession
import com.mongodb.spark._
import com.mongodb.spark.config._

val spark_custom_session = SparkSession.builder()
  .master("local")
  .appName("ZeplinMongo")
  .config("spark.mongodb.input.database", "mongodb://myip:myport/mydb.collection")
  .config("spark.mongodb.output.uri", "mongodb://myip:myport/mydb.collection")
  .config("spark.mongodb.output.collection", "mongodb://myip:myport/mydb.collection")
  .getOrCreate()

val customRdd = MongoSpark.load(spark_custom_session)

customRdd.count

And:

import com.mongodb.spark.config._

val readConfig = ReadConfig(Map(
  "spark.mongodb.input.uri" -> "mongodb://myip:myport/mydb.collection",
  "spark.mongodb.input.readPreference.name" -> "secondaryPreferred"),
  Some(ReadConfig(sc)))

val customRdd = MongoSpark.load(sc, readConfig)

customRdd.count

Whatever I do, I get:

import org.apache.spark.sql.SparkSession
import com.mongodb.spark._
import com.mongodb.spark.config._
spark_custom_session: org.apache.spark.sql.SparkSession = org.apache.spark.sql.SparkSession@4f9c7e5f
java.lang.IllegalArgumentException: Missing collection name. Set via the 'spark.mongodb.input.uri' or 'spark.mongodb.input.collection' property
  at com.mongodb.spark.config.MongoCompanionConfig$class.collectionName(MongoCompanionConfig.scala:270)
  at com.mongodb.spark.config.ReadConfig$.collectionName(ReadConfig.scala:39)
  at com.mongodb.spark.config.ReadConfig$.apply(ReadConfig.scala:60)
  at com.mongodb.spark.config.ReadConfig$.apply(ReadConfig.scala:39)
  at com.mongodb.spark.config.MongoCompanionConfig$class.apply(MongoCompanionConfig.scala:124)
  at com.mongodb.spark.config.ReadConfig$.apply(ReadConfig.scala:39)
  at com.mongodb.spark.config.MongoCompanionConfig$class.apply(MongoCompanionConfig.scala:113)
  at com.mongodb.spark.config.ReadConfig$.apply(ReadConfig.scala:39)
  at com.mongodb.spark.MongoSpark$Builder.build(MongoSpark.scala:231)
  at com.mongodb.spark.MongoSpark$.load(MongoSpark.scala:84)
  ... 73 elided

PLEASE HELP! 🙂
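The error message names the two settings the connector looks at: the collection must come either from the path of spark.mongodb.input.uri (mongodb://host:port/database.collection) or from spark.mongodb.input.collection. In the second attempt, the URI is set under spark.mongodb.input.database and spark.mongodb.output.collection, keys that expect a bare database or collection name, while neither spark.mongodb.input.uri nor spark.mongodb.input.collection is set. A hypothetical helper (not part of the connector) to sanity-check a URI before handing it to Spark:

```python
from typing import Tuple
from urllib.parse import urlsplit


def uri_namespace(uri: str) -> Tuple[str, str]:
    """Split the database.collection namespace out of a MongoDB URI."""
    parts = urlsplit(uri)
    if parts.scheme not in ("mongodb", "mongodb+srv"):
        raise ValueError(f"expected a mongodb:// URI, got {uri!r}")
    # The path is '/database.collection'; options live in the query string.
    namespace = parts.path.lstrip("/")
    # Database names cannot contain dots, so split on the first one only;
    # the collection name itself may contain dots.
    database, sep, collection = namespace.partition(".")
    if not database or not sep or not collection:
        raise ValueError(f"{uri!r} does not name a collection (expected /database.collection)")
    return database, collection


if __name__ == "__main__":
    print(uri_namespace("mongodb://myip:myport/mydb.collection"))
```

For the first attempt, one plausible explanation (an assumption, not verified in this thread) is that MongoSpark.load(sc) builds its ReadConfig from the SparkContext configuration, which spark.conf.set on an already running session does not change. Putting spark.mongodb.input.uri in the spark2 interpreter properties and restarting the interpreter sidesteps that.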

Created 09-18-2018 01:15 PM


Hello @Daniel Pevni, what are your Spark and MongoDB versions?

Created 09-20-2018 10:20 PM


Spark 2, MongoDB 3.2