Support Questions

How to set the YARN application priority in Hive?

- Labels: Apache Hive, Apache Spark, Apache YARN

Created 11-24-2022 06:11 PM


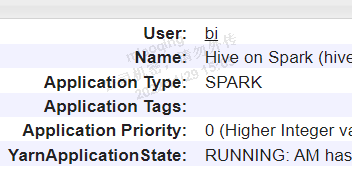

YARN's application priority is shown in the YARN ResourceManager web UI (port 8088).

How does application priority work in YARN?

I am using CDH 6.3.2 (Hadoop 3.0.0, Hive 2.1.1) with Hive on Spark.

Can I use configuration properties to manage application priority?

Some of our Hive SQL queries need a higher application priority: when they are pending, they should run ahead of the other applications instead of running at the same time and sharing compute resources equally.
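As a rough illustration of the behavior described above, here is a minimal Python model of how a priority-aware queue orders pending applications. It assumes the CapacityScheduler with its FIFO ordering policy, which, to our understanding, is where Apache Hadoop applies application priority: higher priority first, then earlier submission. The application names are hypothetical, and the model ignores queue capacities, preemption and containers that are already running.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class App:
    app_id: str        # hypothetical application name
    priority: int      # higher value = higher priority
    submit_order: int  # lower value = submitted earlier


def fifo_priority_order(apps: List[App]) -> List[str]:
    """Order pending apps as a priority-aware FIFO queue would (simplified model):
    higher priority first, ties broken by earlier submission."""
    ranked = sorted(apps, key=lambda a: (-a.priority, a.submit_order))
    return [a.app_id for a in ranked]


if __name__ == "__main__":
    pending = [
        App("etl_batch", priority=0, submit_order=1),
        App("report_sql", priority=0, submit_order=2),
        App("urgent_sql", priority=10, submit_order=3),
    ]
    print(fifo_priority_order(pending))  # ['urgent_sql', 'etl_batch', 'report_sql']
```

With equal priorities the model is plain FIFO; raising one application's priority moves it to the front of the pending list, but in this model it does not take resources back from applications that are already running (preemption is not modeled).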

When I set the following properties in Hive, they do not seem to take effect in YARN:

| Engine | Setting |
| --- | --- |
| MapReduce | `-Dmapreduce.job.priority=xx` |
| Flink | `-yD yarn.application.priority=xx` |
| Spark | `spark.yarn.priority=xx` |

In Hive SQL I ran `set spark.yarn.priority=10;`, but it has no effect.

Created 11-25-2022 06:09 AM


Hi @bulbcat

- The Spark version shipped with CDH 6.3.2 is Spark 2.4.0 [1].

- `spark.yarn.priority` only takes effect from Spark 3.0 onward [2].

[2] https://github.com/apache/spark/pull/27856
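The version reasoning in the answer can be written as a small check. This is a hypothetical helper, not part of Spark or CDH; it assumes version strings like `2.4.0` or a vendor-suffixed form such as `2.4.0-cdh6.3.2`, and it encodes only the Spark 3.0 cutoff stated in the answer.

```python
def spark_honors_yarn_priority(spark_version: str) -> bool:
    """Hypothetical check: True if this Spark version is 3.0 or later,
    the release from which spark.yarn.priority takes effect."""
    # Drop a vendor suffix such as "-cdh6.3.2", then compare major.minor.
    core = spark_version.split("-")[0]
    parts = core.split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return (major, minor) >= (3, 0)


print(spark_honors_yarn_priority("2.4.0-cdh6.3.2"))  # False
print(spark_honors_yarn_priority("3.0.0"))           # True
```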

If you want to update an application's priority at runtime, you can use:

`yarn application -updatePriority 10 -appId application_xxxx_xx`
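If you want to script this step, a minimal Python sketch could wrap the same CLI call. The function names are hypothetical; the sketch assumes the `yarn` client is on `PATH` with access to the cluster, and it only builds and runs the command from the answer, without parsing its output.

```python
import subprocess
from typing import List


def build_update_priority_cmd(app_id: str, priority: int) -> List[str]:
    """Build the argument list for `yarn application -updatePriority`."""
    # Basic sanity check: YARN application IDs start with "application_".
    if not app_id.startswith("application_"):
        raise ValueError(f"not a YARN application ID: {app_id!r}")
    return ["yarn", "application", "-updatePriority", str(priority),
            "-appId", app_id]


def update_priority(app_id: str, priority: int) -> None:
    """Run the command; raises CalledProcessError if yarn exits non-zero."""
    subprocess.run(build_update_priority_cmd(app_id, priority), check=True)
```

For example, `update_priority("application_1669300000000_0001", 10)` (a made-up application ID) would run the same command as in the answer. Building the argument list in a separate function keeps it testable without a cluster.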

Thanks!

Created 11-28-2022 11:09 PM


Thanks a lot.

The command `yarn application -updatePriority 10 -appId application_xxxx_xx` seems to be a YARN-level setting, but it does not work for Spark 2.x on CDH 6.3.2 either.

Is it for the same reason? That is, does application priority require the YARN version and the Spark version to match?
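One possible factor to check, offered as an assumption rather than a confirmed diagnosis: in Apache Hadoop 3.x with the CapacityScheduler, the cluster-wide `yarn.cluster.max-application-priority` property in `yarn-site.xml` caps application priority and defaults to 0, so a request for priority 10 may be lowered to 0. We have not verified how CDH 6.3.2 or the Fair Scheduler handle this. The sketch below models only that cap; `effective_priority` is a hypothetical helper.

```python
def effective_priority(requested: int, cluster_max: int = 0) -> int:
    """Hypothetical model: a requested priority above the cluster maximum
    (yarn.cluster.max-application-priority, default 0) is lowered to it."""
    # Only the upper bound is modeled; queue-level defaults and ACLs are ignored.
    return min(requested, cluster_max)


print(effective_priority(10))                  # 0 (capped by the default maximum)
print(effective_priority(10, cluster_max=20))  # 10
```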