Support Questions

- Cloudera Community

invalid type length for Decimal fields when Impala query on Parquet stored Hive Table

Hello,

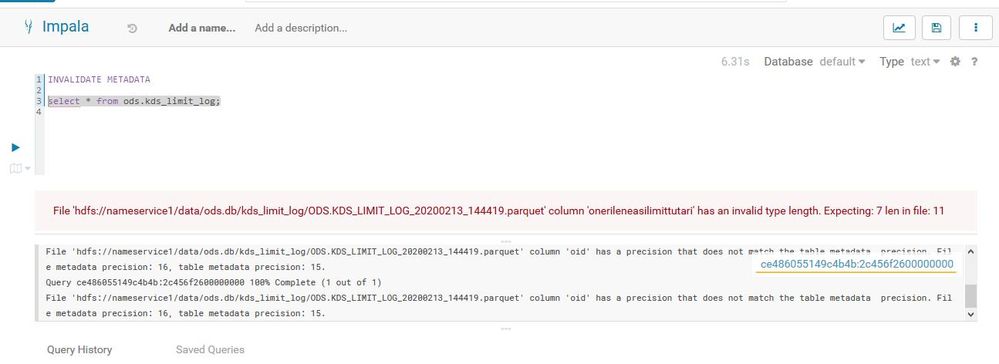

I am building a custom parquet-avro schema for a table that is read from a Kafka topic, using the Java Avro API. The output Parquet file is used with a Hive table. Decimal fields are created as fixed_len_byte_array in the schema. The Hive table can be queried using Hive and Spark, but when an Impala query is used, it fails with an invalid type length error.

I tried to define the byte array size according to the Cloudera Impala documentation:

1 <= precision <= 9, then 4 bytes

10 <= precision <= 18, then 8 bytes

precision > 18, then 16 bytes
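The size rule quoted above can be sketched in Java as follows. This is only an illustration of the three ranges as stated: the class and method names are hypothetical, and the upper bound of 38 is an assumption based on the maximum DECIMAL precision in Hive and Impala. Whether this is the length Impala expects for a fixed_len_byte_array in a Parquet file is exactly the open question in this thread.

```java
/** Sketch of the 4/8/16-byte rule quoted above; names are hypothetical. */
final class DecimalStorageBytes {
    private DecimalStorageBytes() {}

    /** Returns 4, 8, or 16 bytes depending on the decimal precision. */
    static int forPrecision(int precision) {
        // Assumption: valid DECIMAL precision is 1..38.
        if (precision < 1 || precision > 38) {
            throw new IllegalArgumentException("precision must be in 1..38: " + precision);
        }
        if (precision <= 9) {
            return 4;
        }
        if (precision <= 18) {
            return 8;
        }
        return 16;
    }

    public static void main(String[] args) {
        // Print the size at each boundary of the three ranges.
        for (int p : new int[] {1, 9, 10, 18, 19, 38}) {
            System.out.println("precision " + p + " -> " + forPrecision(p) + " bytes");
        }
    }
}
```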

The Impala query screen in Cloudera Hue shows the Hive table description.

I am determining the array size for fixed_len_byte_array according to https://issues.apache.org/jira/browse/IMPALA-2515.

But it still gives the same error. What formula does Impala use to determine the expected array size?
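For comparison, another sizing rule for fixed_len_byte_array decimals is the smallest number of bytes that can hold every unscaled value of a given precision as a two's-complement integer. The sketch below computes that minimum. It is an assumption, not something confirmed in this thread, that Impala versions affected by IMPALA-2515 expect exactly this length; the class and method names are hypothetical.

```java
import java.math.BigInteger;

/** Sketch: minimal two's-complement byte count per decimal precision. Names are hypothetical. */
final class MinimalDecimalBytes {
    private MinimalDecimalBytes() {}

    /** Smallest byte count whose signed range covers +/-(10^precision - 1). */
    static int forPrecision(int precision) {
        // Assumption: valid DECIMAL precision is 1..38.
        if (precision < 1 || precision > 38) {
            throw new IllegalArgumentException("precision must be in 1..38: " + precision);
        }
        // Largest unscaled magnitude for this precision, e.g. 999 for precision 3.
        BigInteger maxUnscaled = BigInteger.TEN.pow(precision).subtract(BigInteger.ONE);
        // Magnitude bits plus one sign bit, rounded up to whole bytes.
        int bits = maxUnscaled.bitLength() + 1;
        return (bits + 7) / 8;
    }

    public static void main(String[] args) {
        for (int p : new int[] {1, 3, 9, 18, 25, 38}) {
            System.out.println("precision " + p + " -> " + forPrecision(p) + " bytes");
        }
    }
}
```

Under this rule a precision of 25 needs 11 bytes and a precision of 3 needs 2 bytes, so the result differs from the 4/8/16-byte rule for most precisions; the two agree at precisions 7 to 9, 17 to 18, and 37 to 38.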

Created 02-10-2020 12:30 PM

Hi @Onur ,

I did a Google search and found this existing Impala bug:

https://issues.apache.org/jira/browse/IMPALA-7087

Do you think maybe you are hitting this one?

Thanks!

Li Wang, Technical Solution Manager

Created 02-13-2020 04:53 AM

Hi Iwang,

Thank you for your response. I tried creating the table with the following Hive table properties:

TBLPROPERTIES ('OBJCAPABILITIES'='EXTREAD,EXTWRITE')

But, as Yongzhi stated, that is not a solution for the fixed-length type.

My Hive table creation script is:

    CREATE TABLE ods.kds_limit_log(
      ONERILENLIMITADI STRING,
      PROPOSEDCOLLATERALTYPE STRING,
      ONERILENMAXVADE STRING,
      ONERILENDOVIZ STRING,
      KDSREFORDER DECIMAL(3,0),
      LASTUPDATED DECIMAL(15,0),
      ONERILENMORTGAGELIMITTUTARI DECIMAL(25,2),
      OID DECIMAL(16,0),
      ONERILENKGFLIMITTUTARI DECIMAL(25,2),
      MEVCUTMAXVADE STRING,
      STATUS DECIMAL(1,0),
      MEVCUTLIMITTUTARI DECIMAL(25,2),
      ENTERANCEDATE DECIMAL(8,0),
      ONERILENLIMITTUTARI DECIMAL(25,2),
      MEVCUTDOVIZ STRING,
      MEVCUTRISKTUTARI DECIMAL(25,2),
      ONERILENLIMITREFERANS STRING,
      ENTERANCETIME DECIMAL(6,0),
      MEVCUTLIMITADI STRING,
      EXISTINGCOLLATERALTYPE STRING,
      ONERILENEASILIMITTUTARI DECIMAL(25,2),
      KDSREFNO STRING,
      MEVCUTLIMITREFERANS STRING)
    STORED AS PARQUET
    LOCATION '/data/ods.db/kds_limit_log/'
    TBLPROPERTIES ('OBJCAPABILITIES'='EXTREAD,EXTWRITE')

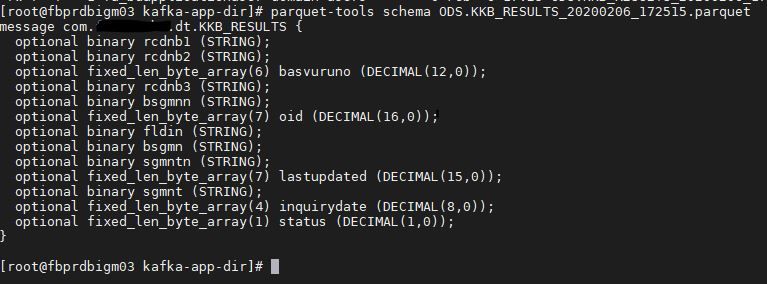

Parquet file schema:

The table can be queried with Hive, but not with Impala.

Best regards

Onur