Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: is there a way to set Hive Parameters in Apach...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

is there a way to set Hive Parameters in Apache Nifi ?

- Labels:

-

Apache Hive

-

Apache NiFi

Created 05-10-2018 08:14 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi All , is there a way to set hive parameters in Apache Nifi . I would want to set some Hive related parameters before ingestion . Is it possible using Apache Nifi ? If yes , could I set these values in say the Update Attribute processor and then direct it to a PutHiveQL or PutHiveStreaming ??

Created 05-10-2018 11:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Controller service doesn't change parameters based on the flowfile attributes, once the controller service is enabled with some parameter then we are going to have that parameter set to each job that we are executing by using same controller.

You can use Jdbc connection string in Hive controller service to add all the parameters that needs to be added by using ;(semicolon) as a delimiter.

Example:-

jdbc:hive2://<connection string>?tez.queue.name=<queue-name>;hive.support.quoted.identifiers=none

in the above connection string i have added two parameters to the connection pool service.

(or)

Connection pool Connection string will be declared without all the parameters.

jdbc:hive2://<connection string>

Keep your attributes to the flowfile before PutHiveQl processor use Replace text processor and replace all your parameters with the statement(ddl/dml..) that you are executing using PutHiveQL processor.

Example:-

While executing the job you can set all the parameters like

set tez.queue.name=<queue-name>;<br>set hive.support.quoted.identifiers=none;<br>insert into final table select * from staging table

in puthiveql processor we are having Statement Delimiter property value as ; which going to execute all the statements at once and for the session that has been initialized we are going to have all these parameters has been set.

For PutHiveStreaming processor supports expression language for Database name,table name.. etc properties so we can change the parameters based on the flowfile attributes.NOTE: If multiple concurrent tasks are configured for this processor, only one table can be written to at any time by a single thread. Additional tasks intending to write to the same table will wait for the current task to finish writing to the table.

For more information please take a look into this jira addressing about dynamic properties for Connection pool.

Created 05-10-2018 11:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Controller service doesn't change parameters based on the flowfile attributes, once the controller service is enabled with some parameter then we are going to have that parameter set to each job that we are executing by using same controller.

You can use Jdbc connection string in Hive controller service to add all the parameters that needs to be added by using ;(semicolon) as a delimiter.

Example:-

jdbc:hive2://<connection string>?tez.queue.name=<queue-name>;hive.support.quoted.identifiers=none

in the above connection string i have added two parameters to the connection pool service.

(or)

Connection pool Connection string will be declared without all the parameters.

jdbc:hive2://<connection string>

Keep your attributes to the flowfile before PutHiveQl processor use Replace text processor and replace all your parameters with the statement(ddl/dml..) that you are executing using PutHiveQL processor.

Example:-

While executing the job you can set all the parameters like

set tez.queue.name=<queue-name>;<br>set hive.support.quoted.identifiers=none;<br>insert into final table select * from staging table

in puthiveql processor we are having Statement Delimiter property value as ; which going to execute all the statements at once and for the session that has been initialized we are going to have all these parameters has been set.

For PutHiveStreaming processor supports expression language for Database name,table name.. etc properties so we can change the parameters based on the flowfile attributes.NOTE: If multiple concurrent tasks are configured for this processor, only one table can be written to at any time by a single thread. Additional tasks intending to write to the same table will wait for the current task to finish writing to the table.

For more information please take a look into this jira addressing about dynamic properties for Connection pool.

Created on 05-10-2018 11:45 PM - edited 08-18-2019 12:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for these details. I will try all this and see if I can get this fixed . The basic issue we are facing here is when we are trying to insert data into a partitioned hive table using "put hive streaming" processor . The issue that we are getting is as follows :-

org.apache.nifi.util.hive.HiveWriter$CommitFailure: Commit of Txn 36315 failed on EndPoint: {metaStoreUri='thrift://caplifs0c.telecom.tcnz.net:9083', database='adhoc', table='nifi_s_order_test1', partitionVals=[2015-10-26] }

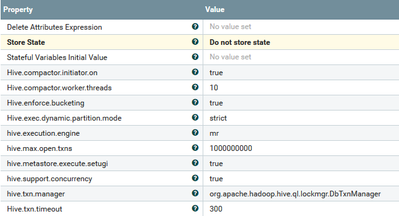

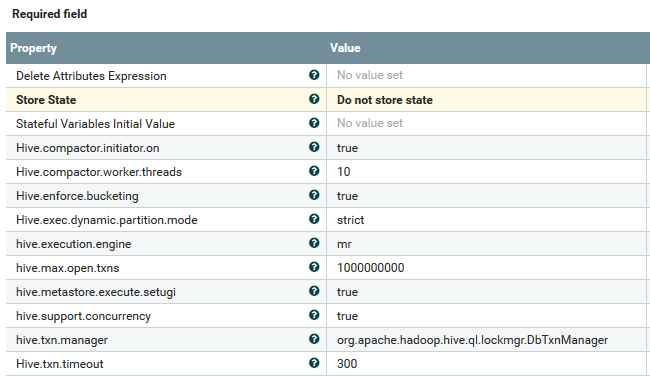

Do you by any chance have an idea how to fix this issue . The "PutHiveStreaming" works fine for a non partitioned table but as soon as we have this set to a partitioned table , we start seeing these issues. Actually , we set all the required hive parameters in the update attribute processor just before the put hive streaming processor. Screen shot below :-

Have you used the put hive streaming processor to insert data into a partitioned table ?

Thanks @Shu . waiting for your response

Created 05-11-2018 01:18 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Shu

Thanks - this is now fixed. We realized the Nifi Nodes werent added to the hadoop topology which is why we were getting that error. This has been added and the data is going through !!

Thanks for all the help .. Have accepted your answer ..