Support Questions


java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable.

Labels: Apache Hive, Apache Storm

Created 08-30-2017 09:49 AM


Hi,

I am having an issue with Storm and Hive streaming. I followed the post https://community.hortonworks.com/questions/96995/storm-hdfs-javalangruntimeexception-error-preparin... but it did not help.

I also looked at the post https://community.hortonworks.com/questions/111874/non-local-session-path-expected-to-be-non-null-tr..., but I did not understand which jar should be included.

Can anybody please help me?

Below is my POM.

    <dependencies>
        <dependency>
            <groupId>joda-time</groupId>
            <artifactId>joda-time</artifactId>
            <version>2.9.9</version>
        </dependency>
        <dependency>
            <groupId>org.apache.storm</groupId>
            <artifactId>storm-core</artifactId>
            <version>1.0.1</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.storm</groupId>
            <artifactId>storm-hive</artifactId>
            <version>1.0.1</version>
        </dependency>
        <dependency>
            <groupId>org.eclipse.paho</groupId>
            <artifactId>org.eclipse.paho.client.mqttv3</artifactId>
            <version>1.1.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.3.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.2.0</version>
            <exclusions>
                <exclusion>
                    <groupId>org.slf4j</groupId>
                    <artifactId>slf4j-log4j12</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.2.0</version>
            <exclusions>
                <exclusion>
                    <groupId>org.slf4j</groupId>
                    <artifactId>slf4j-log4j12</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
    </dependencies>

Below is my build section, including the Maven Shade plugin configuration:

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-source-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
            <!-- Maven shade plugin -->
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>2.4.3</version>
                <configuration>
                    <createDependencyReducedPom>true</createDependencyReducedPom>
                </configuration>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                        <configuration>
                            <!--
                            <artifactSet>
                                <excludes>
                                    <exclude>org.slf4j:slf4j-log4j12:*</exclude>
                                    <exclude>log4j:log4j:jar:</exclude>
                                    <exclude>org.slf4j:slf4j-simple:jar</exclude>
                                    <exclude>org.apache.storm:storm-core</exclude>
                                </excludes>
                            </artifactSet>
                            -->
                            <transformers>
                                <transformer implementation="org.apache.maven.plugins.shade.resource.ServicesResourceTransformer"/>
                                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                    <mainClass></mainClass>
                                </transformer>
                            </transformers>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>

Please see the error below:

    java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:444) ~[stormjar.jar:?]
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.createPartitionIfNotExists(HiveEndPoint.java:358) ~[stormjar.jar:?]
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.<init>(HiveEndPoint.java:276) ~[stormjar.jar:?]
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.<init>(HiveEndPoint.java:243) ~[stormjar.jar:?]
        at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnectionImpl(HiveEndPoint.java:180) ~[stormjar.jar:?]
        at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnection(HiveEndPoint.java:157) ~[stormjar.jar:?]
        at org.apache.storm.hive.common.HiveWriter$5.call(HiveWriter.java:238) ~[stormjar.jar:?]
        at org.apache.storm.hive.common.HiveWriter$5.call(HiveWriter.java:235) ~[stormjar.jar:?]
        at org.apache.storm.hive.common.HiveWriter$9.call(HiveWriter.java:366) ~[stormjar.jar:?]
        at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_77]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) ~[?:1.8.0_77]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) ~[?:1.8.0_77]
        at java.lang.Thread.run(Thread.java:745) [?:1.8.0_77]
    Caused by: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
        at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:529) ~[stormjar.jar:?]
        at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:478) ~[stormjar.jar:?]
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:430) ~[stormjar.jar:?]
        ... 12 more
    2017-08-30 14:51:58.022 o.a.s.d.executor [INFO] BOLT fail TASK: 3 TIME: TUPLE: source: mqttprocesser:4, stream: default, id: {}, [My count is, 11]
    2017-08-30 14:51:58.023 o.a.s.d.executor [INFO] Execute done TUPLE source: mqttprocesser:4, stream: default, id: {}, [My count is, 11] TASK: 3 DELTA:
    2017-08-30 14:51:58.023 o.a.s.d.executor [INFO] Processing received message FOR 3 TUPLE: source: mqttprocesser:4, stream: default, id: {}, [My count is, 12]
    2017-08-30 14:51:58.050 h.metastore [INFO] Trying to connect to metastore with URI thrift://base1.rolta.com:9083
    2017-08-30 14:51:58.052 h.metastore [INFO] Connected to metastore.
    2017-08-30 14:51:58.124 o.a.h.h.q.l.PerfLogger [INFO] <PERFLOG method=Driver.run from=org.apache.hadoop.hive.ql.Driver>
    2017-08-30 14:51:58.124 o.a.h.h.q.l.PerfLogger [INFO] <PERFLOG method=TimeToSubmit from=org.apache.hadoop.hive.ql.Driver>
    2017-08-30 14:51:58.124 o.a.h.h.q.l.PerfLogger [INFO] <PERFLOG method=compile from=org.apache.hadoop.hive.ql.Driver>
    2017-08-30 14:51:58.202 STDIO [ERROR] FAILED: NullPointerException Non-local session path expected to be non-null
    2017-08-30 14:51:58.202 o.a.h.h.q.Driver [ERROR] FAILED: NullPointerException Non-local session path expected to be non-null
    java.lang.NullPointerException: Non-local session path expected to be non-null
        at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)
        at org.apache.hadoop.hive.ql.session.SessionState.getHDFSSessionPath(SessionState.java:590)
        at org.apache.hadoop.hive.ql.Context.<init>(Context.java:129)
        at org.apache.hadoop.hive.ql.Context.<init>(Context.java:116)
        at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:382)
        at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:303)
        at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1067)
        at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1129)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1004)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:994)
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.runDDL(HiveEndPoint.java:404)
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.createPartitionIfNotExists(HiveEndPoint.java:369)
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.<init>(HiveEndPoint.java:276)
        at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.<init>(HiveEndPoint.java:243)
        at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnectionImpl(HiveEndPoint.java:180)
        at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnection(HiveEndPoint.java:157)
        at org.apache.storm.hive.common.HiveWriter$5.call(HiveWriter.java:238)
        at org.apache.storm.hive.common.HiveWriter$5.call(HiveWriter.java:235)
        at org.apache.storm.hive.common.HiveWriter$9.call(HiveWriter.java:366)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)

Created 08-30-2017 12:39 PM


I updated hive-site.xml on the NameNode, setting the scratch dir property (hive.exec.scratchdir) to /tmp/mydir, but the error kept referring to /tmp/hive. I then changed the configuration file on all the DataNodes as well, and the error went away (although another error appeared). So:

1. Is changing the property on all nodes the correct approach?

2. Does that also mean that whenever a Hive property changes, it has to be changed on all the nodes?

Can anybody please let me know?

Created 08-30-2017 11:26 PM


@parag dharmadhikari, the permissions of the /tmp directory on HDFS are not correct.

Typically, /tmp has 777 permissions. Run the command below on your cluster; it will resolve this permission error:

    hdfs dfs -chmod 777 /tmp
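To see why rwx------ is rejected while 777 is accepted, here is a minimal Java sketch of the permission test. It assumes, based on a reading of Hive's SessionState.createRootHDFSDir (the top frame of the "Caused by" trace above), that Hive requires every bit of octal 733 (rwx-wx-wx) on the root scratch dir; verify that mask against your Hive version. The class ScratchDirCheck is hypothetical and only mimics the check with java.nio permission sets; it does not call Hive or HDFS. Note that the check is made on the scratch directory named in the error (/tmp/hive here), so it is that directory's own mode that has to pass.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

public class ScratchDirCheck {
    // Assumption: Hive needs at least rwx-wx-wx (octal 733) on the root scratch dir,
    // i.e. owner rwx plus write/execute for group and others.
    private static final Set<PosixFilePermission> REQUIRED =
            PosixFilePermissions.fromString("rwx-wx-wx");

    /** Returns true if the symbolic permission string grants every bit in REQUIRED. */
    static boolean isWritableScratchDir(String symbolic) {
        return PosixFilePermissions.fromString(symbolic).containsAll(REQUIRED);
    }

    public static void main(String[] args) {
        // Mode reported in the error message (octal 700): group/other write is missing.
        System.out.println("rwx------ -> " + isWritableScratchDir("rwx------"));
        // Mode after "chmod 777" on the directory itself.
        System.out.println("rwxrwxrwx -> " + isWritableScratchDir("rwxrwxrwx"));
    }
}
```

Running it prints `rwx------ -> false` and `rwxrwxrwx -> true`. A mode such as rwxr-xr-x (755) would also fail this check, because it lacks group and other write.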

Created 04-16-2022 07:39 AM


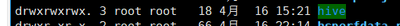

This is the permission of /tmp/hive. I tried granting permission with the chmod command, but I am still getting the error.

Versions: Hadoop 2.7.4, Spark 3.0.0, Hive 2.3.1.

Created 04-18-2022 05:45 AM


Welcome to the community, @ChinaRed-Liu.

As this is an older post, you would have a better chance of receiving a resolution by starting a new thread. That would also give you the opportunity to provide details specific to your environment, which could help others give a more accurate answer to your question.

Cy Jervis, Manager, Community Program
