Support Questions

- Cloudera Community


ConvertJSONToSQL error: None of the fields in the JSON map to the columns defined by the table_name table

- Labels: Apache NiFi

Created on 05-16-2017 05:50 PM - edited 08-18-2019 03:08 AM


Log file error:

```
16 23:15:16,178 ERROR [Timer-Driven Process Thread-9] o.apache.nifi.processors.standard.PutSQL PutSQL[id=0d5dd381-015c-1000-b798-206309b21f43] Failed to update database for [StandardFlowFileRecord[uuid=0f3aef07-2ea4-46aa-8752-b6d440decfc7,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1494956697846-199, container=default, section=199], offset=327246, length=256],offset=0,name=47886655293009.json,size=256]] due to com.sap.db.jdbc.exceptions.JDBCDriverException: SAP DBTech JDBC: [257]: sql syntax error: incorrect syntax near """: line 1 col 2 (at pos 2); it is possible that retrying the operation will succeed, so routing to retry: com.sap.db.jdbc.exceptions.JDBCDriverException: SAP DBTech JDBC: [257]: sql syntax error: incorrect syntax near """: line 1 col 2 (at pos 2)
2017-05-16 23:15:16,178 ERROR [Timer-Driven Process Thread-9] o.apache.nifi.processors.standard.PutSQL
com.sap.db.jdbc.exceptions.JDBCDriverException: SAP DBTech JDBC: [257]: sql syntax error: incorrect syntax near """: line 1 col 2 (at pos 2)
    at com.sap.db.jdbc.exceptions.SQLExceptionSapDB.createException(SQLExceptionSapDB.java:334) ~[na:na]
    at com.sap.db.jdbc.exceptions.SQLExceptionSapDB.generateDatabaseException(SQLExceptionSapDB.java:174) ~[na:na]
    at com.sap.db.jdbc.packet.ReplyPacket.buildExceptionChain(ReplyPacket.java:104) ~[na:na]
    at com.sap.db.jdbc.ConnectionSapDB.execute(ConnectionSapDB.java:1142) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.sendCommand(CallableStatementSapDB.java:1961) ~[na:na]
    at com.sap.db.jdbc.StatementSapDB.sendSQL(StatementSapDB.java:981) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.doParse(CallableStatementSapDB.java:253) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.constructor(CallableStatementSapDB.java:212) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.<init>(CallableStatementSapDB.java:123) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDBFinalize.<init>(CallableStatementSapDBFinalize.java:31) ~[na:na]
    at com.sap.db.jdbc.ConnectionSapDB.prepareStatement(ConnectionSapDB.java:1365) ~[na:na]
    at com.sap.db.jdbc.trace.Connection.prepareStatement(Connection.java:413) ~[na:na]
    at org.apache.commons.dbcp.DelegatingConnection.prepareStatement(DelegatingConnection.java:281) ~[na:na]
    at org.apache.commons.dbcp.PoolingDataSource$PoolGuardConnectionWrapper.prepareStatement(PoolingDataSource.java:313) ~[na:na]
    at org.apache.nifi.processors.standard.PutSQL.getEnclosure(PutSQL.java:569) ~[nifi-standard-processors-1.1.2.jar:1.1.2]
    at org.apache.nifi.processors.standard.PutSQL.onTrigger(PutSQL.java:230) ~[nifi-standard-processors-1.1.2.jar:1.1.2]
    at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) [nifi-api-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1099) [nifi-framework-core-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.1.2.jar:1.1.2]
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_102]
    at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_102]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_102]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_102]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_102]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_102]
    at java.lang.Thread.run(Thread.java:745) [na:1.8.0_102]
2017-05-16 23:15:16,544 INFO [NiFi Web Server-876] o.a.n.controller.StandardProcessorNode Stopping processor: class org.apache.nifi.processors.standard.PutSQL
2017-05-16 23:15:16,544 INFO [StandardProcessScheduler Thread-2] o.a.n.c.s.TimerDrivenSchedulingAgent Stopped scheduling PutSQL[id=0d5dd381-015c-1000-b798-206309b21f43] to run
2017-05-16 23:15:17,126 ERROR [Timer-Driven Process Thread-1] o.apache.nifi.processors.standard.PutSQL PutSQL[id=0d5dd381-015c-1000-b798-206309b21f43] Failed to update database for [StandardFlowFileRecord[uuid=55524bac-37b1-4d82-80d3-8a52964a86fe,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1494956697846-199, container=default, section=199], offset=327502, length=346],offset=0,name=47886670846428.json,size=346]] due to com.sap.db.jdbc.exceptions.JDBCDriverException: SAP DBTech JDBC: [257]: sql syntax error: incorrect syntax near """: line 1 col 2 (at pos 2); it is possible that retrying the operation will succeed, so routing to retry: com.sap.db.jdbc.exceptions.JDBCDriverException: SAP DBTech JDBC: [257]: sql syntax error: incorrect syntax near """: line 1 col 2 (at pos 2)
2017-05-16 23:15:17,126 ERROR [Timer-Driven Process Thread-1] o.apache.nifi.processors.standard.PutSQL
com.sap.db.jdbc.exceptions.JDBCDriverException: SAP DBTech JDBC: [257]: sql syntax error: incorrect syntax near """: line 1 col 2 (at pos 2)
    at com.sap.db.jdbc.exceptions.SQLExceptionSapDB.createException(SQLExceptionSapDB.java:334) ~[na:na]
    at com.sap.db.jdbc.exceptions.SQLExceptionSapDB.generateDatabaseException(SQLExceptionSapDB.java:174) ~[na:na]
    at com.sap.db.jdbc.packet.ReplyPacket.buildExceptionChain(ReplyPacket.java:104) ~[na:na]
    at com.sap.db.jdbc.ConnectionSapDB.execute(ConnectionSapDB.java:1142) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.sendCommand(CallableStatementSapDB.java:1961) ~[na:na]
    at com.sap.db.jdbc.StatementSapDB.sendSQL(StatementSapDB.java:981) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.doParse(CallableStatementSapDB.java:253) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.constructor(CallableStatementSapDB.java:212) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDB.<init>(CallableStatementSapDB.java:123) ~[na:na]
    at com.sap.db.jdbc.CallableStatementSapDBFinalize.<init>(CallableStatementSapDBFinalize.java:31) ~[na:na]
    at com.sap.db.jdbc.ConnectionSapDB.prepareStatement(ConnectionSapDB.java:1365) ~[na:na]
    at com.sap.db.jdbc.trace.Connection.prepareStatement(Connection.java:413) ~[na:na]
    at org.apache.commons.dbcp.DelegatingConnection.prepareStatement(DelegatingConnection.java:281) ~[na:na]
    at org.apache.commons.dbcp.PoolingDataSource$PoolGuardConnectionWrapper.prepareStatement(PoolingDataSource.java:313) ~[na:na]
    at org.apache.nifi.processors.standard.PutSQL.getEnclosure(PutSQL.java:569) ~[nifi-standard-processors-1.1.2.jar:1.1.2]
    at org.apache.nifi.processors.standard.PutSQL.onTrigger(PutSQL.java:230) ~[nifi-standard-processors-1.1.2.jar:1.1.2]
    at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) [nifi-api-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1099) [nifi-framework-core-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.1.2.jar:1.1.2]
    at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.1.2.jar:1.1.2]
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_102]
    at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_102]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_102]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_102]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_102]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_102]
    at java.lang.Thread.run(Thread.java:745) [na:1.8.0_102]
2017-05-16 23:15:17,141 INFO [Flow Service Tasks Thread-2] o.a.nifi.controller.StandardFlowService Saved flow controller org.apache.nifi.controller.FlowController@6ba604a4 // Another save pending = false
2017-05-16 23:15:18,371 INFO [NiFi Web Server-943] o.a.n.controller.StandardProcessorNode Stopping processor: class org.apache.nifi.processors.standard.ConvertJSONToSQL
2017-05-16 23:15:18,386 INFO [StandardProcessScheduler Thread-5] o.a.n.c.s.TimerDrivenSchedulingAgent Stopped scheduling ConvertJSONToSQL[id=102c1958-015c-1000-cbaf-142d83a47629] to run
2017-05-16 23:15:18,815 INFO [Flow Service Tasks Thread-2] o.a.nifi.controller.StandardFlowService Saved flow controller org.apache.nifi.controller.FlowController@6ba604a4 // Another save pending = false
2017-05-16 23:15:28,409 INFO [Provenance Maintenance Thread-3] o.a.n.p.PersistentProvenanceRepository Created new Provenance Event Writers for events starting with ID 293831
2017-05-16 23:15:28,519 INFO [Provenance Repository Rollover Thread-2] o.a.n.p.PersistentProvenanceRepository Successfully merged 16 journal files (1567 records) into single Provenance Log File .\provenance_repository\292264.prov in 126 milliseconds
2017-05-16 23:15:28,519 INFO [Provenance Repository Rollover Thread-2] o.a.n.p.PersistentProvenanceRepository Successfully Rolled over Provenance Event file containing 1624 records. In the past 5 minutes, 1716 events have been written to the Provenance Repository, totaling 844.84 KB
2017-05-16 23:15:31,295 INFO [pool-8-thread-1] o.a.n.c.r.WriteAheadFlowFileRepository Initiating checkpoint of FlowFile Repository
2017-05-16 23:15:32,517 INFO [pool-8-thread-1] org.wali.MinimalLockingWriteAheadLog org.wali.MinimalLockingWriteAheadLog@6915351c checkpointed with 1100 Records and 0 Swap Files in 1224 milliseconds (Stop-the-world time = 485 milliseconds, Clear Edit Logs time = 490 millis), max Transaction ID 174586
2017-05-16 23:15:32,517 INFO [pool-8-thread-1] o.a.n.c.r.WriteAheadFlowFileRepository Successfully checkpointed FlowFile Repository with 1100 records in 1224 milliseconds
2017-05-16 23:15:33,032 INFO [FileSystemRepository Workers Thread-2] o.a.n.c.repository.FileSystemRepository Successfully archived 1 Resource Claims for Container default in 2 millis
```

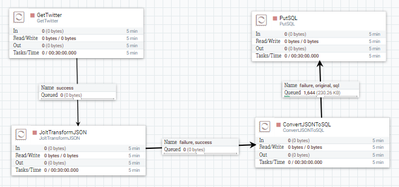

The flow is:

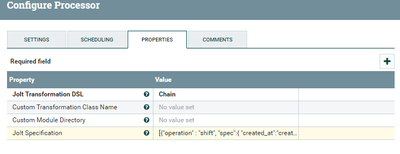

JoltTransformJSON configuration and Jolt specification:

```json
[
  {
    "operation": "shift",
    "spec": {
      "created_at": "created_date",
      "id": "tweet_id",
      "text": "tweet",
      "user": {
        "id": "user_id"
      }
    }
  }
]
```
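To make the effect of this spec concrete, here is a minimal Python sketch of a Jolt-style "shift" that handles only literal keys and nested objects (no wildcards such as `*` or `&`). `apply_shift` is a hypothetical helper, not the Jolt library, and the `lang` and `screen_name` fields in the sample tweet are made-up extras that show how fields not mentioned in the spec are dropped.

```python
import json


def apply_shift(spec: dict, data: dict) -> dict:
    """Minimal sketch of a Jolt-style "shift" for literal keys only.

    A string value in `spec` is the output key to copy the input value to;
    a nested dict means "descend into this input object and apply the nested
    spec". Real Jolt wildcards are deliberately not handled here.
    """
    out = {}
    for in_key, target in spec.items():
        if in_key not in data:
            continue  # keys absent from the input produce no output
        if isinstance(target, dict):
            # Nested spec: recurse into the sub-object and merge its results.
            if isinstance(data[in_key], dict):
                out.update(apply_shift(target, data[in_key]))
        else:
            out[target] = data[in_key]
    return out


# The spec from this post, as a Python dict.
SPEC = {
    "created_at": "created_date",
    "id": "tweet_id",
    "text": "tweet",
    "user": {"id": "user_id"},
}

# A trimmed tweet; "lang" and "screen_name" are hypothetical extra fields.
tweet = {
    "created_at": "Tue May 16 08:47:58 +0000 2017",
    "id": 864402031007715329,
    "text": "The Amazing Spider-Man (2012) Kostenlos Online Anschauen \nLink: https://t.co/L4QGSD0RBQ \n#TheAmazingSpiderMan",
    "lang": "de",
    "user": {"id": 4913539714, "screen_name": "example"},
}

if __name__ == "__main__":
    print(json.dumps(apply_shift(SPEC, tweet)))
```

The output keys come out in spec order (`created_date`, `tweet_id`, `tweet`, `user_id`), which matches the JSON shown further down as the input to ConvertJSONToSQL.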

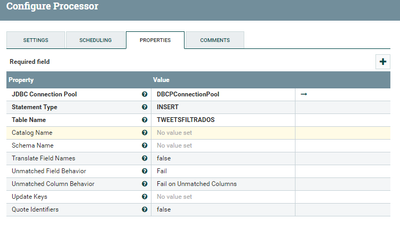

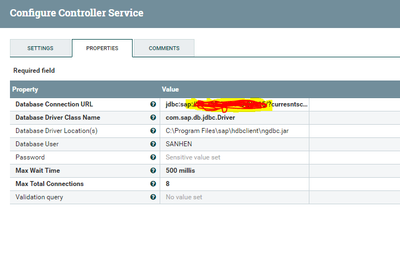

ConvertJSONToSQL configuration (this is the processor where I get the error):

The JSON it receives from JoltTransformJSON is:

```json
{"created_date":"Tue May 16 08:47:58 +0000 2017","tweet_id":864402031007715329,"tweet":"The Amazing Spider-Man (2012) Kostenlos Online Anschauen \nLink: https://t.co/L4QGSD0RBQ \n#TheAmazingSpiderMan","user_id":4913539714}
```
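The error in the title is what ConvertJSONToSQL reports when none of the JSON field names match a column of the target table. Below is a rough Python sketch of that name check. It assumes the processor's "Translate Field Names" option normalizes names by upper-casing them and removing underscores before comparing (my reading of the processor, not verified for every NiFi version), and that the HANA columns were created unquoted and are therefore stored in upper case. The column names and the table name `TWEETS` are hypothetical, since the table screenshot is not reproduced here.

```python
def normalize(name: str, translate_field_names: bool) -> str:
    # Assumption: mirrors "Translate Field Names" by upper-casing the name
    # and removing underscores; otherwise names must match exactly.
    if translate_field_names:
        return name.upper().replace("_", "")
    return name


def matched_columns(fields, columns, translate_field_names=True):
    """Return (json_field, table_column) pairs whose normalized names agree."""
    by_normalized = {normalize(c, translate_field_names): c for c in columns}
    pairs = []
    for field in fields:
        column = by_normalized.get(normalize(field, translate_field_names))
        if column is not None:
            pairs.append((field, column))
    return pairs


def check_mapping(fields, columns, table, translate_field_names=True):
    """Raise an error like the one in the title when nothing matches."""
    pairs = matched_columns(fields, columns, translate_field_names)
    if not pairs:
        raise ValueError(
            f"None of the fields in the JSON map to the columns defined by the {table} table"
        )
    return pairs


# Field names produced by the Jolt spec in this post.
JSON_FIELDS = ["created_date", "tweet_id", "tweet", "user_id"]
# Hypothetical HANA columns, created unquoted and therefore stored upper-case.
HANA_COLUMNS = ["CREATED_DATE", "TWEET_ID", "TWEET", "USER_ID"]

if __name__ == "__main__":
    print(check_mapping(JSON_FIELDS, HANA_COLUMNS, "TWEETS"))
    # With exact (case-sensitive) matching nothing lines up.
    print(matched_columns(JSON_FIELDS, HANA_COLUMNS, translate_field_names=False))
```

Under these assumptions, lowercase JSON keys only line up with upper-case HANA columns when the names are normalized; with exact matching the list of pairs is empty, which is the situation the title error describes.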

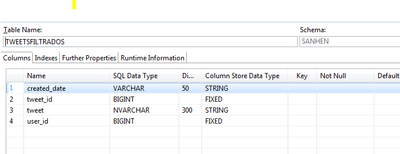

My table structure in HANA:

After the ConvertJSONToSQL processor, I get the following JSON:

```json
{"created_date":"Tue May 16 08:47:58 +0000 2017","tweet_id":864402031007715329,"tweet":"The Amazing Spider-Man (2012) Kostenlos Online Anschauen \nLink: https://t.co/L4QGSD0RBQ \n#TheAmazingSpiderMan","user_id":4913539714}
```
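The HANA error in the log ("incorrect syntax near '"': line 1 col 2") is consistent with PutSQL passing this JSON to the database as if it were SQL: the content starts with `{"`, so the character at line 1, column 2 is a double quote. The Python sketch below shows that, plus a simple heuristic for spotting JSON content before it reaches PutSQL. `looks_like_json` and `char_at` are hypothetical helpers, not part of NiFi.

```python
import json

# The content shown in this post after ConvertJSONToSQL. The raw string keeps
# the JSON "\n" escapes as backslash-n, as they appear in the flowfile.
FLOWFILE_CONTENT = r'{"created_date":"Tue May 16 08:47:58 +0000 2017","tweet_id":864402031007715329,"tweet":"The Amazing Spider-Man (2012) Kostenlos Online Anschauen \nLink: https://t.co/L4QGSD0RBQ \n#TheAmazingSpiderMan","user_id":4913539714}'


def looks_like_json(content: str) -> bool:
    """Heuristic check: True if the content parses as a JSON object or array."""
    stripped = content.lstrip()
    if not stripped.startswith(("{", "[")):
        return False
    try:
        json.loads(stripped)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return False
    return True


def char_at(content: str, line: int, col: int) -> str:
    """Return the character at a 1-based (line, col) position."""
    return content.splitlines()[line - 1][col - 1]


if __name__ == "__main__":
    print(looks_like_json(FLOWFILE_CONTENT))  # the content is JSON, not SQL
    print(repr(char_at(FLOWFILE_CONTENT, 1, 2)))  # the position HANA reports
```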

The connection to the HANA database is fine; I tested it with the QueryDatabaseTable processor, which works.

Any help is appreciated.

Created 05-16-2017 09:17 PM


After ConvertJSONToSQL, the flowfile content should not be JSON; it should be a SQL statement. From the screenshot, you are routing all relationships to PutSQL, when you should route only the "sql" relationship. The "original" and "failure" relationships carry the input JSON; the "sql" relationship carries the corresponding SQL statement(s).
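To illustrate the answer, here is a rough Python sketch of what a flowfile on the "sql" relationship carries: the content is a parameterized INSERT, and the values travel as `sql.args.N.type` / `sql.args.N.value` attributes that PutSQL binds to the `?` placeholders. The table name `TWEETS`, the column names, and the JDBC types are assumptions, and `to_insert` is a hypothetical helper; the exact statement text ConvertJSONToSQL generates (quoting, column order) may differ.

```python
from typing import Dict, Tuple

# java.sql.Types codes for the two column types assumed below.
JDBC_VARCHAR = 12
JDBC_BIGINT = -5

# Hypothetical mapping of JSON field -> (HANA column, JDBC type).
COLUMNS: Dict[str, Tuple[str, int]] = {
    "created_date": ("CREATED_DATE", JDBC_VARCHAR),
    "tweet_id": ("TWEET_ID", JDBC_BIGINT),
    "tweet": ("TWEET", JDBC_VARCHAR),
    "user_id": ("USER_ID", JDBC_BIGINT),
}

# The record produced by the Jolt spec in the question.
RECORD = {
    "created_date": "Tue May 16 08:47:58 +0000 2017",
    "tweet_id": 864402031007715329,
    "tweet": "The Amazing Spider-Man (2012) Kostenlos Online Anschauen \nLink: https://t.co/L4QGSD0RBQ \n#TheAmazingSpiderMan",
    "user_id": 4913539714,
}


def to_insert(table: str, record: dict, columns: Dict[str, Tuple[str, int]]):
    """Build a parameterized INSERT and sql.args.N.* style attributes."""
    names = []
    attrs: Dict[str, str] = {}
    for field, value in record.items():
        if field not in columns:
            continue  # unmatched JSON field: simply skipped in this sketch
        column, jdbc_type = columns[field]
        names.append(column)
        n = len(names)  # parameter indices are 1-based, as in JDBC
        attrs[f"sql.args.{n}.type"] = str(jdbc_type)
        attrs[f"sql.args.{n}.value"] = str(value)
    placeholders = ", ".join("?" for _ in names)
    sql = f"INSERT INTO {table} ({', '.join(names)}) VALUES ({placeholders})"
    return sql, attrs


if __name__ == "__main__":
    sql, attrs = to_insert("TWEETS", RECORD, COLUMNS)
    print(sql)  # content of this kind is what PutSQL expects to execute
    for key in sorted(attrs):
        print(key, "=", attrs[key])
```

Because only content of this kind should reach PutSQL, the "original" and "failure" connections would typically go to a different processor or be auto-terminated rather than feeding PutSQL.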


Created 05-17-2017 07:13 AM


Hi Matt,

Thanks for the reply. Records are now being inserted into the HANA table.