Knox to HiveServer2 call does not work on SSL cluster

Labels: Apache Hive, Apache Knox

Created 03-27-2017 01:16 PM

I am seeing an issue after configuring Knox to work with Hive over SSL, following this doc:

https://hortonworks.com/blog/end-end-wire-encryption-apache-knox/

I am trying to make the following call:

beeline --silent=true -u "jdbc:hive2://<knox_host>:8443/;ssl=true;sslTrustStore=/usr/hdp/current/knox-server/data/security/keystores/gateway.jks;trustStorePassword=knoxsecret;transportMode=http;httpPath=gateway/default/hive;hive.server2.use.SSL=true" -d org.apache.hive.jdbc.HiveDriver -n sam -p sam-password

17/03/27 13:01:12 [main]: ERROR jdbc.HiveConnection: Error opening session
org.apache.thrift.transport.TTransportException: HTTP Response code: 500
    at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:262)
    at org.apache.thrift.transport.THttpClient.flush(THttpClient.java:313)
    at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:73)
    at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:62)
    at org.apache.hive.service.cli.thrift.TCLIService$Client.send_OpenSession(TCLIService.java:154)
    at org.apache.hive.service.cli.thrift.TCLIService$Client.OpenSession(TCLIService.java:146)
    at org.apache.hive.jdbc.HiveConnection.openSession(HiveConnection.java:553)
    at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:171)
    at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:105)
    at java.sql.DriverManager.getConnection(DriverManager.java:664)
    at java.sql.DriverManager.getConnection(DriverManager.java:208)
    at org.apache.hive.beeline.DatabaseConnection.connect(DatabaseConnection.java:146)
    at org.apache.hive.beeline.DatabaseConnection.getConnection(DatabaseConnection.java:211)
    at org.apache.hive.beeline.Commands.close(Commands.java:1016)
    at org.apache.hive.beeline.Commands.closeall(Commands.java:998)
    at org.apache.hive.beeline.BeeLine.close(BeeLine.java:846)
    at org.apache.hive.beeline.BeeLine.begin(BeeLine.java:793)
    at org.apache.hive.beeline.BeeLine.mainWithInputRedirection(BeeLine.java:491)
    at org.apache.hive.beeline.BeeLine.main(BeeLine.java:474)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:233)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:148)

gateway-audit.log

17/03/27 13:01:12 ||1ebe2bff-8ed2-4c68-84fa-13166d10b73f|audit|HIVE||||access|uri|/gateway/default/hive|unavailable|Request method: POST
17/03/27 13:01:12 ||1ebe2bff-8ed2-4c68-84fa-13166d10b73f|audit|HIVE|sam|||authentication|uri|/gateway/default/hive|success|
17/03/27 13:01:12 ||1ebe2bff-8ed2-4c68-84fa-13166d10b73f|audit|HIVE|sam|||authentication|uri|/gateway/default/hive|success|Groups: []
17/03/27 13:01:12 ||1ebe2bff-8ed2-4c68-84fa-13166d10b73f|audit|HIVE|sam|||dispatch|uri|https://<hiveserver>:10001/cliservice?doAs=sam|unavailable|Request method: POST
17/03/27 13:01:12 ||1ebe2bff-8ed2-4c68-84fa-13166d10b73f|audit|HIVE|sam|||dispatch|uri|https://<hiveserver>:10001/cliservice?doAs=sam|failure|3

gateway.log

Caused by: org.apache.shiro.subject.ExecutionException: java.security.PrivilegedActionException: java.io.IOException: Service connectivity error.
    at org.apache.shiro.subject.support.DelegatingSubject.execute(DelegatingSubject.java:385)
    at org.apache.hadoop.gateway.filter.ShiroSubjectIdentityAdapter.doFilter(ShiroSubjectIdentityAdapter.java:72)
    at org.apache.hadoop.gateway.GatewayFilter$Holder.doFilter(GatewayFilter.java:332)
    at org.apache.hadoop.gateway.GatewayFilter$Chain.doFilter(GatewayFilter.java:232)
    at org.apache.shiro.web.servlet.ProxiedFilterChain.doFilter(ProxiedFilterChain.java:61)
    at org.apache.shiro.web.servlet.AdviceFilter.executeChain(AdviceFilter.java:108)
    at org.apache.shiro.web.servlet.AdviceFilter.doFilterInternal(AdviceFilter.java:137)
    ... 48 more
Caused by: java.security.PrivilegedActionException: java.io.IOException: Service connectivity error.
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:415)
    at org.apache.hadoop.gateway.filter.ShiroSubjectIdentityAdapter$CallableChain.call(ShiroSubjectIdentityAdapter.java:138)
    at org.apache.hadoop.gateway.filter.ShiroSubjectIdentityAdapter$CallableChain.call(ShiroSubjectIdentityAdapter.java:75)
    at org.apache.shiro.subject.support.SubjectCallable.doCall(SubjectCallable.java:90)
    at org.apache.shiro.subject.support.SubjectCallable.call(SubjectCallable.java:83)
    at org.apache.shiro.subject.support.DelegatingSubject.execute(DelegatingSubject.java:383)
    ... 54 more
Caused by: java.io.IOException: Service connectivity error.
    at org.apache.hadoop.gateway.dispatch.DefaultDispatch.executeOutboundRequest(DefaultDispatch.java:147)
    at org.apache.hadoop.gateway.dispatch.DefaultDispatch.executeRequest(DefaultDispatch.java:115)
    at org.apache.hadoop.gateway.dispatch.DefaultDispatch.doPost(DefaultDispatch.java:304)
    at org.apache.hadoop.gateway.dispatch.GatewayDispatchFilter$PostAdapter.doMethod(GatewayDispatchFilter.java:130)
    at org.apache.hadoop.gateway.dispatch.GatewayDispatchFilter.doFilter(GatewayDispatchFilter.j

I tried configuring both of the following topologies (https and http). The same operation was working before SSL was enabled:

<service>
    <role>HIVE</role>
    <url>https://<hive_host>:10001/cliservice</url>
</service>

<service>
    <role>HIVE</role>
    <url>http://<hive_host>:10001/cliservice</url>
</service>

Created 03-28-2017 06:42 PM

Hi @Deepak Sharma,

If you are using HDP 2.5, there is a bug when using wire encryption with Hive and accessing it through Knox in a Kerberized cluster. See https://issues.apache.org/jira/browse/KNOX-762. In the Knox Kerberos debug log you will see that Knox is trying to authenticate using the SPNEGO keytab with HTTPS instead of HTTP. To resolve this issue, downgrade the httpclient jar on Knox to httpclient-4.5.1.jar.

Created 03-27-2017 05:39 PM

Hey @Deepak Sharma,

Looks like the connectivity between Knox server and HiveServer2 (HS2) is broken. So,

1. Have you checked that Beeline works fine without Knox & using HS2 (over SSL) directly?

2. Also after enabling SSL for Hive, you need to establish trust between Knox service and HS2 by importing their certificates into each other's truststore. Have you done this?

These two checks should get you closer to the cause. Let us know!
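The first check above (Beeline straight to HS2 over SSL, bypassing Knox) might look roughly like the sketch below. It assumes HS2 is in HTTP transport mode on port 10001 with `cliservice` as the HTTP path, as in the topology URL from the question. The hostname, truststore path, and password are placeholders, and `build_hs2_jdbc_url` is a hypothetical helper written for this example, not part of Hive or Knox.

```shell
# Build a JDBC URL for a direct (no Knox) HTTP-mode connection to HS2 over SSL.
# Arguments: host, port, truststore path, truststore password (all placeholders).
build_hs2_jdbc_url() {
  printf 'jdbc:hive2://%s:%s/;ssl=true;sslTrustStore=%s;trustStorePassword=%s;transportMode=http;httpPath=cliservice' \
    "$1" "$2" "$3" "$4"
}

# Assumed values; replace with the real HS2 host and a truststore that
# contains the HS2 certificate.
url="$(build_hs2_jdbc_url hs2.example.com 10001 /path/to/hs2-truststore.jks changeit)"

# Print the command instead of running it, so it can be reviewed first.
echo "beeline -u \"$url\" -n sam -p sam-password"
```

If this direct connection succeeds while the same query through Knox fails, the problem is more likely on the Knox-to-HS2 leg (trust or dispatch) than in HS2 itself.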

Created 03-27-2017 08:00 PM

Hey, thanks @Vipin Rathor for the reply.

>> 1. Have you checked that Beeline works fine without Knox & using HS2 (over SSL) directly?

Yes, Beeline works using HS2 over SSL.

>> 2. Also after enabling SSL for Hive, you need to establish trust between Knox service and HS2 by importing their certificates into each other's truststore. Have you done this?

I think one-way SSL should be enough here. One-way SSL works for HBase and WebHDFS, and I have not imported the Knox cert into the Hive truststore, so I would expect the behaviour to be the same here.

Created 03-27-2017 08:15 PM

Hey, can you please try importing the Knox cert into the Hive truststore? That would be the logical thing to try if one-way SSL is not working.

Created 03-27-2017 09:11 PM

Vipin, I tried configuring two-way SSL as well and it does not work. The strange part is:

1) the error I am getting does not seem related to wire encryption

2) but whenever I disable SSL for Hive, the Knox to Hive flow starts working

Created 03-27-2017 09:01 PM

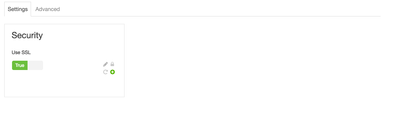

1- Check that the hive.server2.use.SSL property is set to true on the Hive side.

2- Make sure the HS2 host certificate (the one in /etc/security/serverKeys) is imported into cacerts on the Knox host.

3- Make sure the Knox topology refers to https://<hiveserver2_host>:<port>
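Step 2 of this checklist could be sketched as below. This is only an illustration under assumptions: the alias `hiveserver2`, the certificate path, the Java 8 style cacerts location, and the default `changeit` password are placeholders that must be adapted to the JVM Knox actually runs on. `hs2_import_cmd` is a hypothetical helper that prints the `keytool` command rather than running it.

```shell
# Print the keytool command that imports a certificate into a truststore.
# Arguments: alias, certificate file, truststore path, truststore password.
hs2_import_cmd() {
  printf 'keytool -importcert -noprompt -alias %s -file %s -keystore %s -storepass %s' \
    "$1" "$2" "$3" "$4"
}

# Assumed Java 8 layout for the JVM-wide truststore on the Knox host.
cacerts="${JAVA_HOME:-/usr/lib/jvm/java}/jre/lib/security/cacerts"

# Dry run: show the import command for review (certificate path is a placeholder).
hs2_import_cmd hiveserver2 /path/to/hs2-host.crt "$cacerts" changeit
echo

# Command to confirm afterwards that the entry is present in the truststore.
echo "keytool -list -alias hiveserver2 -keystore $cacerts -storepass changeit"
```

Knox most likely needs a restart after the import before it picks up the new trusted certificate; the original poster later suspected that a missing restart was part of the problem.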

Created on 03-27-2017 09:09 PM - edited 08-18-2019 03:07 AM

Yes Surya, it is set to true.

Created 03-28-2017 06:47 PM

dvillarreal, thanks for the reply. The fix for this issue is already in my cluster; I am using Knox 0.12.0.

I have httpclient-4.5.1.jar in the Knox lib directory. The issue you mentioned also affects WebHDFS, but the WebHDFS flow works for me. I am facing the issue only with Hive.

Created 03-30-2017 11:20 AM

Either there was something wrong in my cluster, or I had not restarted Knox after adding the Hive cert to the Knox trusted cacerts. After I lost that cluster, created a new one, and performed the necessary steps, it worked. I am accepting your answer because the problem you described is a real issue that I had faced earlier with WebHDFS, and I was seeing a similar issue here.