nifi UnpackContent source file missing

Labels: Apache NiFi

Created 03-31-2017 04:44 PM

I am trying to use the UnpackContent processor, fed from a FetchHDFS processor, but the source path of the zip file is dropped once the file has been unpacked. Is there a way to retain the path through the processor, or some other method to ensure that the path is added as an attribute to the unpacked flow files?

Created 04-04-2017 02:39 PM

Can you elaborate more on where you see the source path and where it is getting dropped?

Going into FetchHDFS, there should be a flow file whose content is a path to fetch, such as /data/foo.zip. After FetchHDFS, the content of foo.zip has been written to the flow file content, and the filename attribute of the flow file should be foo.zip. The flow file then goes to UnpackContent, which produces multiple child flow files for the unpacked entries, and each one should have segment.original.filename set to foo.zip.

Are you asking to retain the original HDFS path that went into FetchHDFS?

Created 04-04-2017 02:49 PM

From the UnpackContent processor on, the original file and path seem to be lost.

I see the path in the queue going into the UnpackContent processor, but once UnpackContent has generated the new flow files, the source file is no longer in the attributes.

Created 04-04-2017 03:05 PM

OK, what are the exact attribute names that you see in the queue going into UnpackContent that are being lost?

Created 04-04-2017 03:29 PM

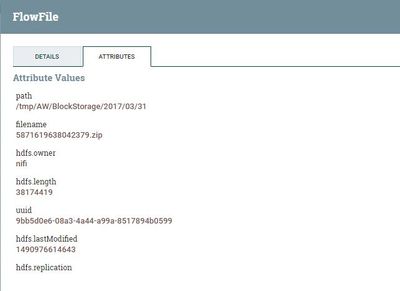

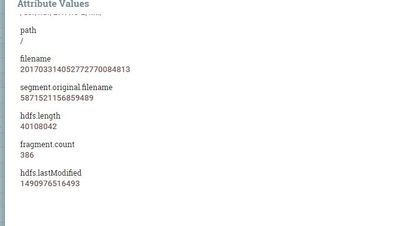

segment.original.filename is gone, and filename and path hold the new values after the extract. I am looking for the file name and path that came from the fetch.

Created on 04-06-2017 02:41 PM - edited 08-18-2019 01:41 AM

Before the unpack, I have the path of the file in HDFS and the actual file name in HDFS.

After the unpack, segment.original.filename contains the filename without its extension, and there is no longer any reference to the source path.

My main issue is that I need that path when feeding the data to Spark to create the relationships.

Created 04-06-2017 04:34 PM

Thanks for uploading the screenshots. I can see in the code that segment.original.filename deliberately has the extension removed, and it appears to have been this way since the initial code for NiFi was open-sourced, so I'm not sure whether this should be considered a bug or a design preference. The path attribute is updated to reflect the path within the archive. There could arguably be a bug here, but I think the behavior makes sense, since the path of the children is not necessarily the path of the original flow file.

In the short term, I think the easiest thing to do is to place an UpdateAttribute processor right before UnpackContent and add two properties that copy the filename and path to new attributes, like this:

archive.filename = ${filename}

archive.path = ${path}

The flow files for the unpacked files should retain these attributes.
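To illustrate why the copied attributes survive, here is a minimal Python sketch of the attribute handling described in this thread. It is a toy model, not NiFi code: the functions `update_attribute`, `unpack_content`, and `source_path` are hypothetical names, the child `path` values are illustrative only, and the sketch assumes that child flow files inherit every parent attribute except the ones UnpackContent overwrites.

```python
import posixpath
from typing import Dict, List


def update_attribute(attrs: Dict[str, str]) -> Dict[str, str]:
    """Toy stand-in for UpdateAttribute with the two properties above."""
    out = dict(attrs)
    out["archive.filename"] = attrs["filename"]  # archive.filename = ${filename}
    out["archive.path"] = attrs["path"]          # archive.path = ${path}
    return out


def unpack_content(parent: Dict[str, str], entries: List[str]) -> List[Dict[str, str]]:
    """Toy stand-in for UnpackContent, following the behavior reported in the thread.

    Assumption: each child starts as a copy of the parent's attributes, then
    filename and path are overwritten with values from inside the archive.
    """
    children = []
    for entry in entries:
        directory, name = posixpath.split(entry)
        child = dict(parent)
        child["filename"] = name
        child["path"] = directory  # illustrative value, not NiFi's exact format
        # The thread reports that the extension is stripped from this attribute.
        child["segment.original.filename"] = posixpath.splitext(parent["filename"])[0]
        children.append(child)
    return children


def source_path(child: Dict[str, str]) -> str:
    """Rebuild the original archive location from the copied attributes."""
    return posixpath.join(child["archive.path"], child["archive.filename"])


# Hypothetical flow file as it might look after FetchHDFS.
fetched = {"filename": "foo.zip", "path": "/data"}
for child in unpack_content(update_attribute(fetched), ["a.csv", "sub/b.csv"]):
    print(child["filename"], "came from", source_path(child))
```

In a real flow, the same reconstruction could probably be done downstream with an expression such as `${archive.path}/${archive.filename}`, assuming `path` held the HDFS directory before the unpack.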

Created 04-06-2017 05:27 PM

Thanks. This ended up being a good workaround for the dropped attributes, and it gave me a couple of ideas on how to extend the information I need.