problem with yarn from ambari dashboard

Labels: Apache Ambari, Apache Hadoop, Apache YARN

Created on 10-25-2017 08:05 AM - edited 08-17-2019 06:13 PM

What could be the reason that YARN memory usage is very high?

Any suggestions on how to verify this?

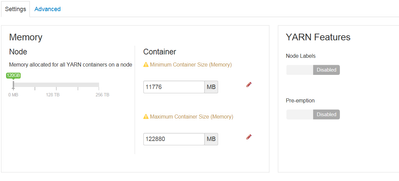

We have these values from Ambari:

yarn.scheduler.capacity.root.default.user-limit-factor=1

yarn.scheduler.minimum-allocation-mb=11776

yarn.scheduler.maximum-allocation-mb=122880

yarn.nodemanager.resource.memory-mb=120G

/usr/bin/yarn application -list -appStates RUNNING | grep RUNNING

Thrift JDBC/ODBC Server SPARK hive default RUNNING UNDEFINED 10%

Thrift JDBC/ODBC Server SPARK hive default RUNNING UNDEFINED 10%

mcMFM SPARK hdfs default RUNNING UNDEFINED 10%

mcMassRepo SPARK hdfs default RUNNING UNDEFINED 10%

mcMassProfiling SPARK hdfs default RUNNING UNDEFINED

free -g

total used free shared buff/cache available

Mem: 31 24 0 1 6 5

Swap: 7 0 7

Created 10-25-2017 08:41 AM

If you have not set this value yourself, then I guess it was calculated by the Stack Advisor script, as follows:

putYarnProperty('yarn.nodemanager.resource.memory-mb', int(round(min(clusterData['containers'] * clusterData['ramPerContainer'], nodemanagerMinRam))))
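To make the quoted expression easier to read, here is a minimal sketch of the same arithmetic as a standalone function. The function name and the input numbers are hypothetical, chosen only for illustration; they are not taken from this cluster or from the Stack Advisor source.

```python
def recommend_nodemanager_memory_mb(containers, ram_per_container_mb, nodemanager_min_ram_mb):
    """Same arithmetic as the quoted line: containers * ramPerContainer,
    capped at nodemanagerMinRam, then rounded to an integer."""
    return int(round(min(containers * ram_per_container_mb, nodemanager_min_ram_mb)))


# Hypothetical inputs (assumptions, not values from this thread).
print(recommend_nodemanager_memory_mb(3, 8192, 32768))   # product is below the cap
print(recommend_nodemanager_memory_mb(10, 8192, 32768))  # product is capped
```

In the first call the product (24576) is below the cap, so it is used as is; in the second call the product exceeds the cap, so the cap (32768) is returned.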

Created 10-25-2017 08:48 AM

Do you mean that I need to change yarn.nodemanager.resource.memory-mb to another value? If yes, how do I calculate this value? (Or maybe we need to increase it, for example to 200G?)

Created 10-25-2017 09:04 AM

Another question: once I change yarn.nodemanager.resource.memory-mb, will the YARN memory value, which is now at 100%, decrease immediately, or do I need to take some other action to refresh it?

Created 10-25-2017 09:06 AM

I will have to check with some Yarn experts. I will check and update.

Created 10-25-2017 09:18 AM

This is the response I got from @pjoseph.

Example:

- "yarn.nodemanager.resource.memory-mb" is the amount of NodeManager memory reserved for YARN tasks.

- So, for example, if the machine has 300GB of RAM and you reserve 50GB for the OS and daemons, you can provide 250GB to YARN.

- So I configure "yarn.nodemanager.resource.memory-mb" to 250GB.

- Say I have 5 nodes; each node registers with the RM once, at startup.

- So the RM will have a total memory of 250 * 5 = 1250GB.

- If I increase yarn.nodemanager.resource.memory-mb to 260GB and restart the NMs, the total becomes 260 * 5 = 1300GB.
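The arithmetic in the example above can be sketched in a few lines. This is only an illustration of the multiplication described in the list; the function name is made up for this sketch and is not part of YARN or Ambari.

```python
def cluster_yarn_memory_gb(per_node_gb, node_count):
    """Total memory the ResourceManager sees: each NodeManager registers
    its own per-node value, and the RM adds them up."""
    return per_node_gb * node_count


# Numbers from the example above: 5 nodes at 250GB, then 260GB after a restart.
print(cluster_yarn_memory_gb(250, 5))
print(cluster_yarn_memory_gb(260, 5))
```

The point of the sketch is that the property is a per-node value, and the cluster total shown by the RM is that value multiplied by the number of NodeManagers.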


Created 10-25-2017 09:22 AM

You can also have a look at the HDP documentation: "The HDP utility script is the recommended method for calculating HDP memory configuration settings".

Created 10-25-2017 09:08 AM

OK, thank you. I am waiting for your answer.

Created 10-25-2017 09:18 AM

I updated the question with more info (such as which applications are running under YARN, and the machine memory).

Created 10-25-2017 09:27 AM

So on each worker machine we have 32G (let's say we allocate 7G to the OS), which leaves 25G. In my system we have 5 workers, so this means 25*5=125, and this is actually what is configured. Am I correct? (The cluster has 3 master machines and 5 worker machines.) In that case we need to increase each worker's memory to at least 50G, am I correct? And after that, set the value of yarn.nodemanager.resource.memory-mb to 260G, for example?
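As a rough sketch of the numbers in this post: the 32G of RAM, the 7G reserved for the OS and the 5 workers all come from the post itself, while the function name is hypothetical. Since yarn.nodemanager.resource.memory-mb is a per-node setting expressed in MB, the sketch converts the per-node figure to MB and computes the cluster total separately.

```python
def nodemanager_memory_mb(physical_ram_gb, reserved_gb):
    """Per-node memory left for YARN after reserving some for the OS, in MB."""
    return (physical_ram_gb - reserved_gb) * 1024


workers = 5
per_node_mb = nodemanager_memory_mb(32, 7)            # per-node value, in MB
cluster_total_gb = per_node_mb * workers // 1024      # what the RM would add up

print(per_node_mb)       # candidate per-node setting
print(cluster_total_gb)  # cluster-wide total across 5 workers
```

Under these assumptions the per-node figure is 25600 MB and the cluster total is 125 GB, which matches the 25*5=125 calculation in the post.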