Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: "Memory Limit Exceeded" error on Impala when i...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

"Memory Limit Exceeded" error on Impala when inserting into a partitioned table

Created on 12-02-2015 09:25 AM - edited 09-16-2022 02:50 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am trying to run the following query on Impala and I'm getting a "Memory Limit Exceeded" error:

```

insert into flattened_impressions

partition(project)

select * from flattened_impressions limit 100000000;

```

I’ve tried prepending `SET MEM_LIMIT=16g` to no avail. The memory per box does not change, as shown below:

```

Query 6d4f783fcda5ee50:1ed49f2c6d07aea1: 5% Complete (57 out of 977)

Memory Limit Exceeded

Process: memory limit exceeded. Limit=1.00 GB Consumption=1.15 GB

RequestPool=root.imacalinao: Consumption=618.70 MB

Query(6d4f783fcda5ee50:1ed49f2c6d07aea1) Limit: Limit=16.00 GB Consumption=618.70 MB

Fragment 6d4f783fcda5ee50:1ed49f2c6d07aea2: Consumption=550.91 MB

EXCHANGE_NODE (id=1): Consumption=0

DataStreamRecvr: Consumption=38.53 MB

HdfsTableSink: Consumption=512.37 MB

Block Manager: Limit=819.20 MB Consumption=0

Fragment 6d4f783fcda5ee50:1ed49f2c6d07aea6: Consumption=67.79 MB

HDFS_SCAN_NODE (id=0): Consumption=67.21 MB

DataStreamSender: Consumption=4.00 KB

WARNING: The following tables are missing relevant table and/or column statistics.

ravenfish.flattened_impressions

Memory Limit Exceeded

Process: memory limit exceeded. Limit=1.00 GB Consumption=1.15 GB

RequestPool=root.imacalinao: Consumption=618.70 MB

Query(6d4f783fcda5ee50:1ed49f2c6d07aea1) Limit: Limit=16.00 GB Consumption=618.70 MB

Fragment 6d4f783fcda5ee50:1ed49f2c6d07aea2: Consumption=550.91 MB

EXCHANGE_NODE (id=1): Consumption=0

DataStreamRecvr: Consumption=38.53 MB

HdfsTableSink: Consumption=512.37 MB

Block Manager: Limit=819.20 MB Consumption=0

Fragment 6d4f783fcda5ee50:1ed49f2c6d07aea6: Consumption=67.79 MB

HDFS_SCAN_NODE (id=0): Consumption=67.21 MB

DataStreamSender: Consumption=4.00 KB

WARNING: The following tables are missing relevant table and/or column statistics.

ravenfish.flattened_impressions

```

Note that "Process: memory limit exceeded. Limit=1.00 GB Consumption=1.15 GB”, despite me asking for 16gb.

The EXPLAIN tells me I need much less:

```

Estimated Per-Host Requirements: Memory=576.00MB VCores=1

WARNING: The following tables are missing relevant table and/or column statistics.

ravenfish.flattened_impressions

WRITE TO HDFS [ravenfish.flattened_impressions, OVERWRITE=false, PARTITION-KEYS=(ravenfish.flattened_impressions.project)]

| partitions=173

|

01:EXCHANGE [UNPARTITIONED]

| limit: 100000000

|

00:SCAN HDFS [ravenfish.flattened_impressions]

partitions=173/173 files=977 size=2.62GB

limit: 100000000

```

I'm trying to duplicate the data that already exists in the tables. Is there a different way of doing this? I am on CDH 5.4.

Also, the parquet files generated by Impala are less than 1MB. Is there a way of making sure the parquet files are larger? This may be related to the issue.

Thanks!

Regards,

Ian

Created 12-02-2015 10:30 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

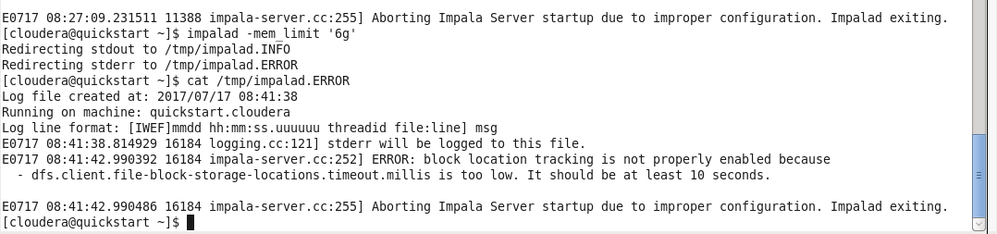

It looks like your Impala's process memory limit is set to 1GB. Queries can't use more than the process memory limit, even if the query memory limit is set higher. To work around this you or your administrator need to restart impala with a higher process memory limit. 1GB is very low and you will run out of memory on many queries.

The process memory limit is set when impala is started with the -mem_limit option to impalad. The default is 80% of the machine's physical memory. The valid options are described in impalad --help

-mem_limit (Process memory limit specified as number of bytes

('<int>[bB]?'), megabytes ('<float>[mM]'), gigabytes ('<float>[gG]'), or

percentage of the physical memory ('<int>%'). Defaults to bytes if no

unit is given) type: string default: "80%"

Created 01-24-2017 09:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

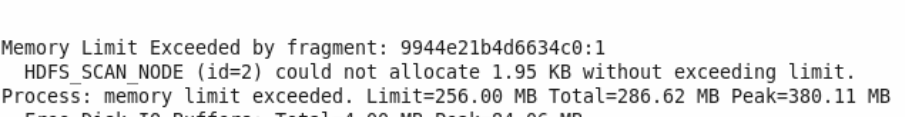

Users are getting *non-deterministic* "Memory limit exceeded" for impala queries. Impala Daemon Memory Limit: 100 GB. spill to disk is enabled

However, a query failed with the above memory with Aggregate Peak Memory Usage: 125 MiB

Explored query profile by CM -> Impala -> Queries -> {failed query "oom=true AND stats_missing=false"}

Want help in narrowing down the cause of the failure: inaccurate stats, congestion, hdfs disk rebalancing, or something else?

Where can I find the detailed of the failure? /var/log/impalad and catalogd state the "Query ID" but not the failure details. For example, impala logs stated the Query ID only: a24fb2eae077b513:45c8d35936a35e9e

impalad.w0575oslphcda11.bell.corp.bce.ca.impala.log.INFO.20170124-031735.13521:I0124 03:57:06.889070 44232 plan-fragment-executor.cc:92] Prepare(): query_id=a24fb2eae077b513:45c8d35936a35e93 instance_id=a24fb2eae077b513:45c8d35936a35e9e

Created 02-08-2017 03:39 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think the key question is why the effective process memory limit is 1GB. In the error you pasted above it says the process limit is 1.00GB: "Process: memory limit exceeded. Limit=1.00 GB Consumption=1.15 GB"

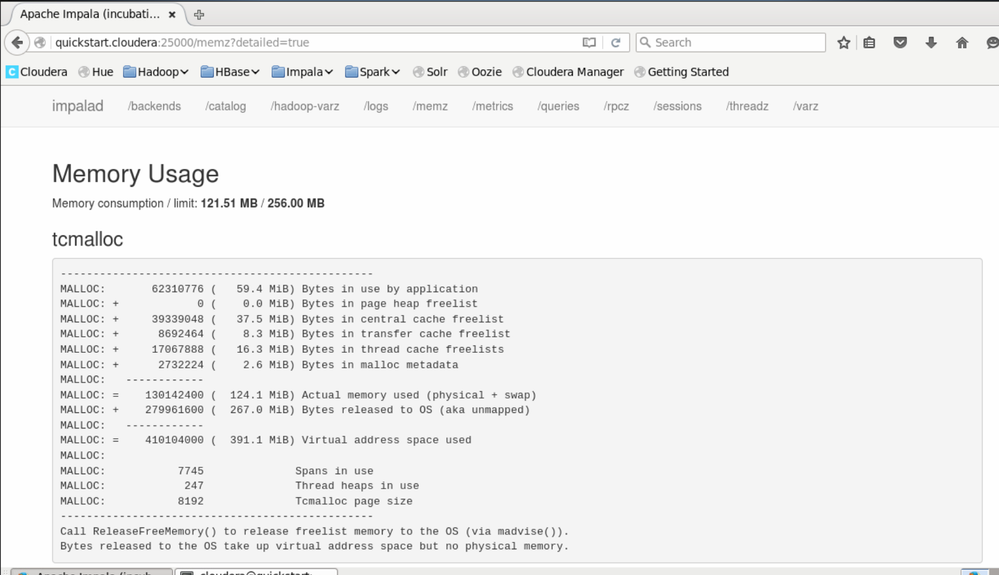

You can look at the memory limits and consumption on an Impala daemon's debug page at http://hostname:25000/memz?detailed=true - that may help identify any problematic Impala daemons.

Even if you're setting the memory limit to 100GB, then on startup the Impala daemon does some additional checks for the amount of memory available. You can look for a couple of things in the logs:

1. If vm overcommit and swapping are both disabled you may see a message in the WARNING and INFO logs along the lines of "This system shows a discrepancy between the available memory and the memory commit limit ..." that will explain what the effective memory limit is

2. Impala's view of the amount of physical memory available, e.g. "I0208 14:54:02.425094 4482 init.cc:220] Physical Memory: 31.33 GB"

Created 02-08-2017 03:42 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There may also be more details about the "memory limit exceeded" error in the log on a different Impala daemon where it did run out of memory.

I should also mention that we've been making a big push to improve the memory consumption and general performance of partitioned inserts - currently with dynamic partitioning memory consumption is very high with a large number of partitions. See https://issues.cloudera.org/browse/IMPALA-2522

Created 07-12-2017 03:02 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I am unable to implement your suggestions. Pl can you elaborate. How do i increase memory of my impala daemon from 256 MB. mem_limit at startup did not work. My queries need more memory. My computer has 10GB of Ram overall.

Created 07-12-2017 03:19 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@adi91 - How did you set --mem_limit? What value did you pass to it? What did http://hostname:25000/memz?detailed=true say after applying --mem_limit to the command line options? Did your value show up there?

Created 07-12-2017 04:20 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I gave impalad --mem_limit '1 gG' as my command

Created 07-17-2017 11:47 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Lars Volker @Tim Armstrong

when I run the impalad- mem_limt to 1gb(6gb etc), I get the error as shown in the image.

Created 07-17-2017 10:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

go inside the IMPALA -SHELL

excute impala-shell in the terminal

and then once you hit the terminal .

fire the command inside the shell

[localhost:21000] > set mem_limit=6g;

let me know if that works for you