Support Questions (Cloudera Community)

sqoop error

Labels: Apache Sqoop

Created 07-06-2016 10:30 AM


I downloaded the JDBC drivers for Sqoop, copied them into /usr/hdp/current/sqoop-client/lib, and configured a Sqoop import from the Oracle E-Business Suite environment on our network.

But when I run the import I get the error below. I have been beating my head against this seemingly easy problem. Any quick solution?

Permission denied: user=sqoop, access=WRITE, inode="/user/sqoop/.staging":hdfs:hdfs:drwxr-xr-x

[sqoop@sandbox lib]$ sqoop import --connect jdbc:oracle:thin:@192.168.0.15:1521/PROD --username sqoop -P --table DEPT_INFO

Warning: /usr/hdp/2.3.2.0-2950/accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

16/07/06 09:39:44 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6.2.3.2.0-2950

Enter password:

16/07/06 09:39:49 INFO oracle.OraOopManagerFactory: Data Connector for Oracle and Hadoop is disabled.

16/07/06 09:39:49 INFO manager.SqlManager: Using default fetchSize of 1000

16/07/06 09:39:49 INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/2.3.2.0-2950/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/2.3.2.0-2950/zookeeper/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/07/06 09:39:51 INFO manager.OracleManager: Time zone has been set to GMT

16/07/06 09:39:51 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM DEPT_INFO t WHERE 1=0

16/07/06 09:39:51 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/2.3.2.0-2950/hadoop-mapreduce

Note: /tmp/sqoop-sqoop/compile/c34f78f377ce385f2582badaf9bd81a8/DEPT_INFO.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

16/07/06 09:39:54 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-sqoop/compile/c34f78f377ce385f2582badaf9bd81a8/DEPT_INFO.jar

16/07/06 09:39:54 INFO manager.OracleManager: Time zone has been set to GMT

16/07/06 09:39:54 INFO manager.OracleManager: Time zone has been set to GMT

16/07/06 09:39:54 INFO mapreduce.ImportJobBase: Beginning import of DEPT_INFO

16/07/06 09:39:55 INFO manager.OracleManager: Time zone has been set to GMT

16/07/06 09:39:58 INFO impl.TimelineClientImpl: Timeline service address: http://sandbox.hortonworks.com:8188/ws/v1/timeline/

16/07/06 09:39:58 INFO client.RMProxy: Connecting to ResourceManager at sandbox.hortonworks.com/192.168.0.104:8050

16/07/06 09:39:59 ERROR tool.ImportTool: Encountered IOException running import job: org.apache.hadoop.security.AccessControlException: Permission denied: user=sqoop, access=WRITE, inode="/user/sqoop/.staging":hdfs:hdfs:drwxr-xr-x
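The error line carries everything needed to diagnose it: the requesting user (sqoop), the access requested (WRITE), the path (/user/sqoop/.staging), and that path's owner, group and mode (hdfs, hdfs, drwxr-xr-x). HDFS tests the owner bits if the user owns the path, otherwise the group bits if the user belongs to the path's group, otherwise the "other" bits; the HDFS superuser skips the check entirely. Below is a minimal bash sketch of that reasoning. `parse_acl_error` and `can_write` are hypothetical helpers, the superuser is assumed to be `hdfs` (the HDP default), and the sqoop user's group list (`hadoop`) is an assumption that does not change the result, since neither the group nor the other bits of drwxr-xr-x allow writes.

```shell
#!/usr/bin/env bash
# Sketch only: parse_acl_error and can_write are hypothetical helpers,
# not part of Hadoop or Sqoop.

# Split an AccessControlException message into acl_* globals.
# Assumes the format: user=U, access=A, inode="PATH":OWNER:GROUP:MODE
parse_acl_error() {
  local msg=$1
  local re='user=([^,]+), access=([A-Z_]+), inode="([^"]+)":([^:]+):([^:]+):([-dlrwxstST]+)'
  [[ $msg =~ $re ]] || return 1
  acl_user=${BASH_REMATCH[1]} acl_access=${BASH_REMATCH[2]} acl_path=${BASH_REMATCH[3]}
  acl_owner=${BASH_REMATCH[4]} acl_group=${BASH_REMATCH[5]} acl_mode=${BASH_REMATCH[6]}
}

# Simplified HDFS-style write check: owner bits, then group bits, then other bits.
# The superuser (assumed to be hdfs) always passes.
can_write() {
  local who=$1 who_groups=$2 owner=$3 group=$4 mode=$5 superuser=${6:-hdfs}
  [[ $who == "$superuser" ]] && return 0
  if [[ $who == "$owner" ]]; then
    [[ ${mode:2:1} == w ]]          # owner write bit
  elif [[ " $who_groups " == *" $group "* ]]; then
    [[ ${mode:5:1} == w ]]          # group write bit
  else
    [[ ${mode:8:1} == w ]]          # other write bit
  fi
}

msg='Permission denied: user=sqoop, access=WRITE, inode="/user/sqoop/.staging":hdfs:hdfs:drwxr-xr-x'
parse_acl_error "$msg"
echo "$acl_user wants $acl_access on $acl_path ($acl_owner:$acl_group $acl_mode)"
# prints: sqoop wants WRITE on /user/sqoop/.staging (hdfs:hdfs drwxr-xr-x)

for u in sqoop hdfs; do
  if can_write "$u" "hadoop" "$acl_owner" "$acl_group" "$acl_mode"; then
    echo "$u: write allowed"
  else
    echo "$u: write denied"
  fi
done
# prints: sqoop: write denied
#         hdfs: write allowed
```

This is why the same import succeeds when run as hdfs: that account both owns the directory and is the superuser.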

Created 07-07-2016 05:37 AM


The code snippet didn't work; see:

[root@sandbox ~]# su - hdfs

[hdfs@sandbox ~]$ hdfs dfs -chown -R sqoop:hdfs /user/root

chown: `/user/root': No such file or directory

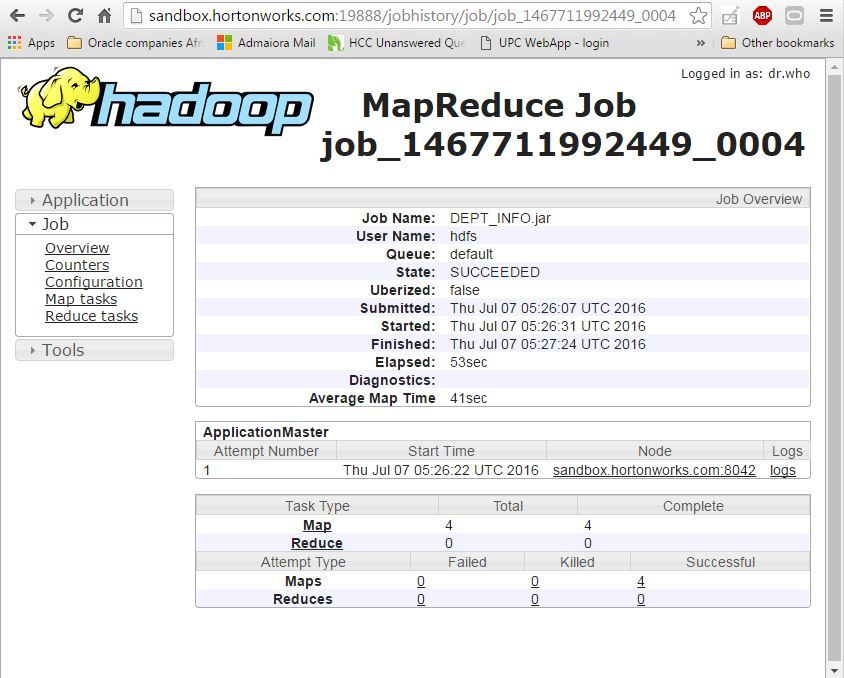

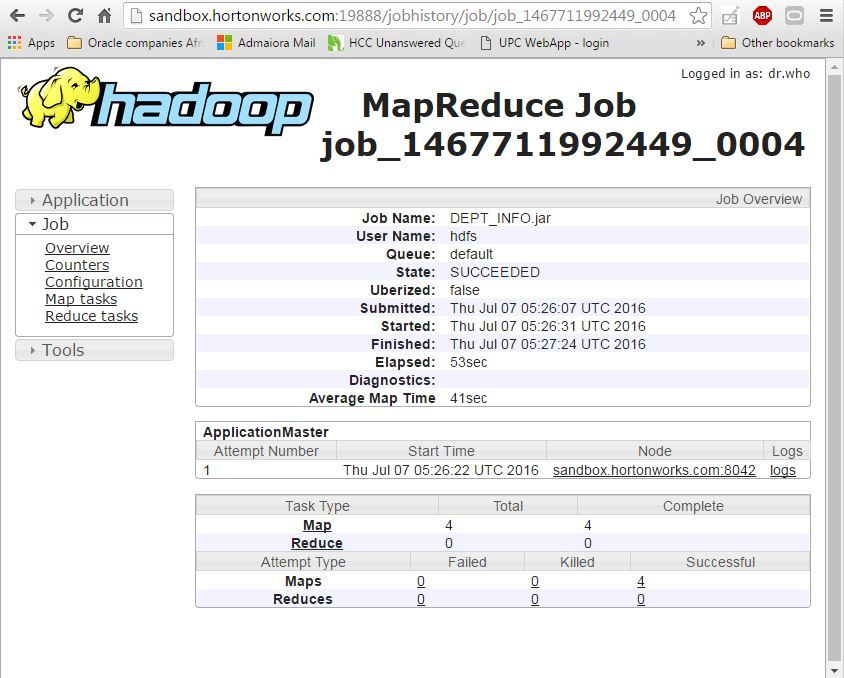

So instead I just ran the sqoop command as hdfs, and it ran successfully:

[hdfs@sandbox ~]$ sudo sqoop import --connect jdbc:oracle:thin:@192.168.0.15:1521/PROD --username sqoop -P --table DEPT_INFO

We trust you have received the usual lecture from the local System

Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

[sudo] password for hdfs:

[hdfs@sandbox ~]$ sqoop import --connect jdbc:oracle:thin:@192.168.0.15:1521/PROD --username sqoop -P --table DEPT_INFO

Warning: /usr/hdp/2.3.2.0-2950/accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

16/07/07 05:25:32 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6.2.3.2.0-2950

Enter password:

16/07/07 05:25:39 INFO oracle.OraOopManagerFactory: Data Connector for Oracle and Hadoop is disabled.

16/07/07 05:25:39 INFO manager.SqlManager: Using default fetchSize of 1000

16/07/07 05:25:39 INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/2.3.2.0-2950/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/2.3.2.0-2950/zookeeper/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/07/07 05:25:52 INFO manager.OracleManager: Time zone has been set to GMT

16/07/07 05:25:53 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM DEPT_INFO t WHERE 1=0

16/07/07 05:25:53 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/2.3.2.0-2950/hadoop-mapreduce

Note: /tmp/sqoop-hdfs/compile/075dc9427b098234773ffaadf17b1b5f/DEPT_INFO.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

16/07/07 05:25:57 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hdfs/compile/075dc9427b098234773ffaadf17b1b5f/DEPT_INFO.jar

16/07/07 05:25:57 INFO manager.OracleManager: Time zone has been set to GMT

16/07/07 05:25:57 INFO manager.OracleManager: Time zone has been set to GMT

16/07/07 05:25:57 INFO mapreduce.ImportJobBase: Beginning import of DEPT_INFO

16/07/07 05:25:58 INFO manager.OracleManager: Time zone has been set to GMT

16/07/07 05:26:00 INFO impl.TimelineClientImpl: Timeline service address: http://sandbox.hortonworks.com:8188/ws/v1/timeline/

16/07/07 05:26:01 INFO client.RMProxy: Connecting to ResourceManager at sandbox.hortonworks.com/192.168.0.104:8050

16/07/07 05:26:06 INFO db.DBInputFormat: Using read commited transaction isolation

16/07/07 05:26:06 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(DEPT_ID), MAX(DEPT_ID) FROM DEPT_INFO

16/07/07 05:26:06 INFO mapreduce.JobSubmitter: number of splits:4

16/07/07 05:26:06 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1467711992449_0004

16/07/07 05:26:07 INFO impl.YarnClientImpl: Submitted application application_1467711992449_0004

16/07/07 05:26:07 INFO mapreduce.Job: The url to track the job: http://sandbox.hortonworks.com:8088/proxy/application_1467711992449_0004/

16/07/07 05:26:07 INFO mapreduce.Job: Running job: job_1467711992449_0004

16/07/07 05:26:32 INFO mapreduce.Job: Job job_1467711992449_0004 running in uber mode : false

16/07/07 05:26:32 INFO mapreduce.Job: map 0% reduce 0%

16/07/07 05:26:55 INFO mapreduce.Job: map 25% reduce 0%

16/07/07 05:27:22 INFO mapreduce.Job: map 50% reduce 0%

16/07/07 05:27:25 INFO mapreduce.Job: map 100% reduce 0%

16/07/07 05:27:26 INFO mapreduce.Job: Job job_1467711992449_0004 completed successfully

16/07/07 05:27:27 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=584380

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=417

HDFS: Number of bytes written=95

HDFS: Number of read operations=16

HDFS: Number of large read operations=0

HDFS: Number of write operations=8

Job Counters

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=164533

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=164533

Total vcore-seconds taken by all map tasks=164533

Total megabyte-seconds taken by all map tasks=41133250

Map-Reduce Framework

Map input records=9

Map output records=9

Input split bytes=417

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=871

CPU time spent (ms)=10660

Physical memory (bytes) snapshot=659173376

Virtual memory (bytes) snapshot=3322916864

Total committed heap usage (bytes)=533200896

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=95

16/07/07 05:27:27 INFO mapreduce.ImportJobBase: Transferred 95 bytes in 87.9458 seconds (1.0802 bytes/sec)

16/07/07 05:27:27 INFO mapreduce.ImportJobBase: Retrieved 9 records.

Created 07-06-2016 10:42 AM


This is due to a permission issue. Please do the following:

su - hdfs

hdfs dfs -chown -R sqoop:hdfs /user/root

Then run the sqoop command.

Thanks and Regards,

Sindhu
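The chown above targets /user/root, but the error in the question names /user/sqoop/.staging, and /user/root does not exist on this sandbox (hence the "No such file or directory" reply). Running the import as hdfs works because hdfs is the HDFS superuser, but it also means the data lands under hdfs's home directory: by default Sqoop imports into a directory named after the table in the running user's HDFS home, here /user/hdfs/DEPT_INFO. Below is a minimal sketch of the same fix aimed at the path from the error. It assumes it is run as root on the sandbox and that hdfs is the HDFS superuser. The `DRY_RUN` switch and the `run_as_hdfs` function are hypothetical conveniences so the commands can be reviewed before they change anything.

```shell
#!/usr/bin/env bash
# Sketch: give the sqoop user ownership of its own HDFS home directory.
# Path taken from the error: inode="/user/sqoop/.staging":hdfs:hdfs:drwxr-xr-x
# Assumes it runs as root and that hdfs is the HDFS superuser.
set -euo pipefail

target_user=sqoop
home_dir="/user/${target_user}"

# Hypothetical switch: DRY_RUN=1 (the default) only prints each command,
# DRY_RUN=0 executes it through su.
DRY_RUN=${DRY_RUN:-1}

run_as_hdfs() {
  if [[ $DRY_RUN == 1 ]]; then
    printf "su - hdfs -c '%s'\n" "$*"
  else
    su - hdfs -c "$*"
  fi
}

# Create the home directory if it is missing (no-op if it already exists).
run_as_hdfs "hdfs dfs -mkdir -p ${home_dir}"
# Hand the directory, including .staging, to the sqoop user.
run_as_hdfs "hdfs dfs -chown -R ${target_user}:hdfs ${home_dir}"
# List the directory to confirm the new owner before re-running the import.
run_as_hdfs "hdfs dfs -ls ${home_dir}"
```

Run it once as is to review the printed commands, then again with `DRY_RUN=0`. After that, the original `sqoop import` should work as the sqoop user without switching to hdfs.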
