sqoop import from oracle to Hadoop not getting completed

Labels: Apache Sqoop

Created on 06-06-2018 02:07 PM - edited 09-16-2022 06:18 AM

Hi All,

I am new to Big Data and am trying to load data from Oracle into Hadoop for the first time.

The import either takes a very long time or never completes.

Hadoop Version

[oracle@ebsoim 11.1.0]$ hdfs version

Hadoop 2.6.0-cdh5.14.2

Subversion http://github.com/cloudera/hadoop -r 5724a4ad7a27f7af31aa725694d3df09a68bb213

Compiled by jenkins on 2018-03-27T20:40Z

Compiled with protoc 2.5.0

From source with checksum 302899e86485742c090f626a828b28

This command was run using /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/hadoop-common-2.6.0-cdh5.14.2.jar

[oracle@ebsoim 11.1.0]$

The job has been running for the last 3 hours, even though the select query returns only one row.

Below is the command I am using to import the data from Oracle into Hadoop:

[hdfs@ebsoim ~]$ sqoop import --connect jdbc:oracle:thin:@192.168.56.101:1526:PROD --query "select person_id from HR.PER_ALL_PEOPLE_F where \$CONDITIONS" --username apps -P --target-dir '/tmp/oracle' -m 1

Warning: /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/bin/../lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/06/05 22:50:39 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2

Enter password:

18/06/05 22:50:41 INFO oracle.OraOopManagerFactory: Data Connector for Oracle and Hadoop is disabled.

18/06/05 22:50:41 INFO manager.SqlManager: Using default fetchSize of 1000

18/06/05 22:50:41 INFO tool.CodeGenTool: Beginning code generation

18/06/05 22:50:41 INFO manager.OracleManager: Time zone has been set to GMT

18/06/05 22:50:41 INFO manager.SqlManager: Executing SQL statement: select person_id from HR.PER_ALL_PEOPLE_F where (1 = 0)

18/06/05 22:50:41 INFO manager.SqlManager: Executing SQL statement: select person_id from HR.PER_ALL_PEOPLE_F where (1 = 0)

18/06/05 22:50:41 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce

Note: /tmp/sqoop-hdfs/compile/43977b74d0f6d3f2adbad6c90968547f/QueryResult.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/06/05 22:50:43 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hdfs/compile/43977b74d0f6d3f2adbad6c90968547f/QueryResult.jar

18/06/05 22:50:43 INFO mapreduce.ImportJobBase: Beginning query import.

18/06/05 22:50:43 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/06/05 22:50:43 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/06/05 22:50:43 INFO client.RMProxy: Connecting to ResourceManager at ebsoim.hdfc.com/192.168.56.101:8032

18/06/05 22:50:47 INFO db.DBInputFormat: Using read commited transaction isolation

18/06/05 22:50:47 INFO mapreduce.JobSubmitter: number of splits:1

18/06/05 22:50:48 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1528221835245_0002

18/06/05 22:50:49 INFO impl.YarnClientImpl: Submitted application application_1528221835245_0002

18/06/05 22:50:49 INFO mapreduce.Job: The url to track the job: http://ebsoim.hdfc.com:8088/proxy/application_1528221835245_0002/

18/06/05 22:50:49 INFO mapreduce.Job: Running job: job_1528221835245_0002

Every time, the job gets stuck at this point.

Please help me with this. I have been trying for the last 6-7 days with no luck.

Thanks and Regards,

Created 07-06-2018 04:03 AM

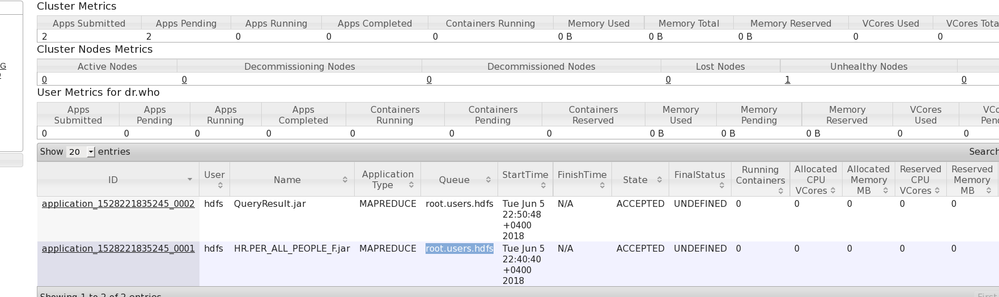

The job hung because it was waiting for resources to become available, due to the unhealthy node shown above.

You need to fix your unhealthy node and make sure it is active before running your job again.
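To confirm this from the command line rather than the ResourceManager web UI, something like the sketch below may help. It is only a sketch: the helper name count_unhealthy is made up for illustration, and it assumes that `yarn node -list -all` prints one row per node with the node state in the second whitespace-separated column, which can differ between Hadoop versions.

```shell
# Hypothetical helper (not part of Hadoop): reads the output of
# `yarn node -list -all` on stdin and prints how many nodes are UNHEALTHY.
# Assumption: the node state is the second whitespace-separated column.
count_unhealthy() {
  awk '$2 == "UNHEALTHY" { n++ } END { print n + 0 }'
}
```

On the cluster it could be used as `yarn node -list -all | count_unhealthy`. A non-zero result would suggest that YARN has a node it cannot schedule containers on, which matches a job that stays at "Running job" without progressing. `yarn node -list -states UNHEALTHY` should list the affected nodes directly.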
