Support Questions


sqoop import is not identifying MySQL tables, showing ClassNotFoundException error

- Labels: Apache Hadoop, Apache Sqoop

Created 10-31-2017 03:20 PM


I have a MySQL table "customers" and tried importing its data from MySQL into an HDFS location using sqoop import. These are the versions installed on my machine:

Sqoop version: 1.4.6

Hive version: 2.3.0

Hadoop version: 2.8.1

Sqoop import command:

sqoop import --connect jdbc:mysql://localhost/localdb --username root --password mnbv@1234 --table customers -m 1 --target-dir /user/hduser/sqoop_import/customers1/

It fails with the following ClassNotFoundException:

Tue Oct 31 09:57:21 IST 2017 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

17/10/31 09:57:21 INFO db.DBInputFormat: Using read commited transaction isolation

17/10/31 09:57:21 INFO mapred.MapTask: Processing split: 1=1 AND 1=1

17/10/31 09:57:21 INFO mapred.LocalJobRunner: map task executor complete.

17/10/31 09:57:22 WARN mapred.LocalJobRunner: job_local1437452057_0001

java.lang.Exception: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class customers not found

at org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:489)

at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:549)

Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class customers not found

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2216)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getInputClass(DBConfiguration.java:403)

at org.apache.sqoop.mapreduce.db.DataDrivenDBInputFormat.createDBRecordReader(DataDrivenDBInputFormat.java:237)

at org.apache.sqoop.mapreduce.db.DBInputFormat.createRecordReader(DBInputFormat.java:263)

at org.apache.hadoop.mapred.MapTask$NewTrackingRecordReader.<init>(MapTask.java:515)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:758)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:270)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: Class customers not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2122)

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2214)

... 12 more

17/10/31 09:57:22 INFO mapreduce.Job: Job job_local1437452057_0001 running in uber mode : false

17/10/31 09:57:22 INFO mapreduce.Job: map 0% reduce 0%

17/10/31 09:57:22 INFO mapreduce.Job: Job job_local1437452057_0001 failed with state FAILED due to: NA

17/10/31 09:57:22 INFO mapreduce.Job: Counters: 0

17/10/31 09:57:22 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead

17/10/31 09:57:22 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 4.105 seconds (0 bytes/sec)

17/10/31 09:57:22 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

17/10/31 09:57:22 INFO mapreduce.ImportJobBase: Retrieved 0 records.

17/10/31 09:57:22 ERROR tool.ImportTool: Error during import: Import job failed!

But when I list the tables with a Sqoop command, it works fine and shows the customers table.

Sqoop command:

sqoop list-tables --connect jdbc:mysql://localhost/localdb --username root --password mnbv@1234;

Output is displayed properly as shown:

17/10/31 10:07:09 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Tue Oct 31 10:07:09 IST 2017 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

customers

What might be the issue? Why does sqoop import not recognize the table from MySQL? Kindly help me with this.

Thanks in Advance.
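Both commands above also log a Connector/J warning asking for useSSL to be set explicitly. That warning is separate from the ClassNotFoundException, but it is easy to silence. A minimal bash sketch, assuming a local MySQL server that does not need SSL; the helper name mysql_connect_string is hypothetical, and its output should be quoted when used because `?` is a glob character in the shell.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a MySQL JDBC connect string that sets useSSL
# explicitly, as the Connector/J warning in the log asks.
# Assumption: useSSL defaults to false for a local server without SSL.
mysql_connect_string() {
  local host="$1" db="$2" use_ssl="${3:-false}"
  printf 'jdbc:mysql://%s/%s?useSSL=%s\n' "$host" "$db" "$use_ssl"
}
```

For example: sqoop list-tables --connect "$(mysql_connect_string localhost localdb)" --username root -P. The -P option makes Sqoop prompt for the password instead of taking it on the command line, where it would be visible in the shell history and the process list.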

Created 10-31-2017 06:58 PM


Can you try adding the --bindir option to your Sqoop command?

--bindir <path to Sqoop home>/lib, e.g. /usr/lib/sqoop/sqoop-1.4.6/lib/
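Some background on why --bindir can matter: for an import, Sqoop generates a Java record class named after the table (here customers), compiles it and packages it into a jar, so "Class customers not found" most likely means the map task could not load that generated class. --bindir sets the output directory for the compiled objects. The bash sketch below wraps the question's import with --bindir; the function name and the fallback to $SQOOP_HOME/lib are assumptions, and the password is prompted for with -P rather than passed inline.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: run the import from the question with --bindir set to
# a fixed directory so the compiled class/jar for the table is written there.
# Assumptions: bindir defaults to $SQOOP_HOME/lib as suggested above;
# -P prompts for the password instead of putting it on the command line.
sqoop_import_with_bindir() {
  local table="$1" target_dir="$2"
  local bindir="${3:-${SQOOP_HOME:?SQOOP_HOME is not set}/lib}"
  sqoop import \
    --bindir "$bindir" \
    --connect "jdbc:mysql://localhost/localdb?useSSL=false" \
    --username root -P \
    --table "$table" -m 1 \
    --target-dir "$target_dir"
}
```

Usage, with the values from the question: sqoop_import_with_bindir customers /user/hduser/sqoop_import/customers1/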

Created 10-31-2017 08:24 PM


HDP 2.6.x

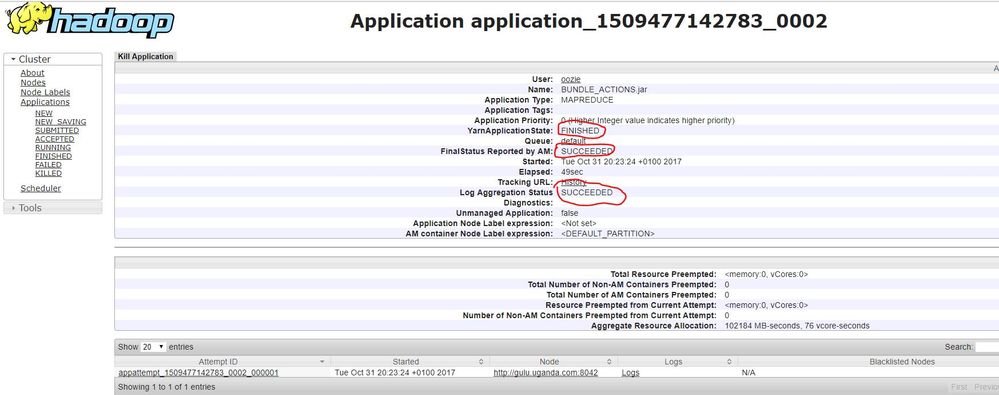

I just tried the same command on my kerberized cluster and it was successful; see the attached sqoop.jpg.

$ sqoop import --connect jdbc:mysql://localhost/oozie --username oozie --password oozie --table BUNDLE_ACTIONS -m 1 --target-dir /user/oozie/sqoop_import/BUNDLE_ACTIONS/

Does the user root have a home directory in HDFS? Check using the command below:

$ hdfs dfs -ls /user

Otherwise, create a dummy directory, or change the permissions on the existing one:

$ hdfs dfs -chmod 777 /user/hduser
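A slightly more defensive version of the same check, as a bash sketch: it creates the directory only when `hdfs dfs -test -d` reports it missing. The function name is hypothetical, and whether you may create directories under /user depends on the user running it (usually the HDFS superuser). Handing the directory to its owner with `hdfs dfs -chown hduser /user/hduser` is a narrower alternative to chmod 777.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure an HDFS directory exists before importing
# into it. Run as a user allowed to create directories under /user.
ensure_hdfs_dir() {
  local dir="$1"
  # `hdfs dfs -test -d` exits 0 only if the path exists and is a directory.
  if hdfs dfs -test -d "$dir"; then
    echo "exists: $dir"
  else
    hdfs dfs -mkdir -p "$dir" || return 1
    echo "created: $dir"
  fi
}
```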

Then run your Sqoop command. I added --class-name (to force creating the customers_part class) and customers3 as the target directory, to create a new one since you had already created customers2:

sqoop import --connect jdbc:mysql://localhost/localdb --username root --password mnbv@1234 --table customers -m 1 --class-name customers_part --target-dir /user/hduser/sqoop_import/customers3/
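If --class-name alone does not help, one workaround is to generate and compile the record class up front with `sqoop codegen`, then pass the resulting jar to `sqoop import` with --jar-file together with the same --class-name, so the import reuses that jar instead of compiling a new class. A bash sketch under assumptions: the function name and the $workdir/src and $workdir/bin layout are hypothetical, and the jar path assumes Sqoop names the jar after --class-name, so check the "Writing jar file:" line in the codegen output for the actual path.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compile the record class once with `sqoop codegen`,
# then import with --jar-file/--class-name so Sqoop reuses that jar.
# Assumption: the jar is written to the --bindir directory as
# <class-name>.jar; verify against the "Writing jar file:" log line.
import_with_pregenerated_class() {
  local table="$1" class_name="$2" target_dir="$3" workdir="$4"
  local conn="jdbc:mysql://localhost/localdb?useSSL=false"
  mkdir -p "$workdir/src" "$workdir/bin" || return 1
  # Step 1: generate the source (--outdir) and compiled output (--bindir).
  sqoop codegen --connect "$conn" --username root -P \
    --table "$table" --class-name "$class_name" \
    --outdir "$workdir/src" --bindir "$workdir/bin" || return 1
  # Step 2: import with the pre-built jar; -P prompts for the password again.
  sqoop import --connect "$conn" --username root -P \
    --table "$table" -m 1 \
    --class-name "$class_name" --jar-file "$workdir/bin/$class_name.jar" \
    --target-dir "$target_dir"
}
```

Usage, with the names from this answer: import_with_pregenerated_class customers customers_part /user/hduser/sqoop_import/customers3/ "$HOME/sqoop-codegen"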

Please let me know

Created 11-01-2017 04:55 AM


I ran the following command:

sqoop import --bindir $SQOOP_HOME/lib --connect jdbc:mysql://localhost/localdb --username root --password mnbv@1234 --table customers -m 1 --class-name customers_part --target-dir /user/hduser/sqoop_import/customers/

and this time it fails with a different error, shown below:

17/11/01 09:46:44 INFO mapred.LocalJobRunner: map task executor complete.

17/11/01 09:46:44 WARN mapred.LocalJobRunner: job_local270107642_0001

java.lang.Exception: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/hduser/sqoop_import/customers/_temporary/0/_temporary/attempt_local270107642_0001_m_000000_0/part-m-00000 could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1733)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:265)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2496)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:828)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:447)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:845)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:788)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2455)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1481)

at org.apache.hadoop.ipc.Client.call(Client.java:1427)

at org.apache.hadoop.ipc.Client.call(Client.java:1337)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:227)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy11.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:440)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:398)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:163)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:155)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:335)

at com.sun.proxy.$Proxy12.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DataStreamer.locateFollowingBlock(DataStreamer.java:1733)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1536)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:658)

17/11/01 09:46:45 INFO mapreduce.Job: Job job_local270107642_0001 running in uber mode : false

17/11/01 09:46:45 INFO mapreduce.Job: map 0% reduce 0%

17/11/01 09:46:45 INFO mapreduce.Job: Job job_local270107642_0001 failed with state FAILED due to: NA

17/11/01 09:46:45 INFO mapreduce.Job: Counters: 8

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=164

17/11/01 09:46:45 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead

17/11/01 09:46:45 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 3.946 seconds (0 bytes/sec)

17/11/01 09:46:45 INFO mapreduce.ImportJobBase: Retrieved 2 records.

17/11/01 09:46:45 ERROR tool.ImportTool: Error during import: Import job failed!

Kindly help me with the above issue.

Created 11-01-2017 04:57 AM


Please run the HDFS Service Check from the Ambari Server UI to see whether all the DataNodes are healthy and running.

java.lang.Exception: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/hduser/sqoop_import/customers/_temporary/0/_temporary/attempt_local270107642_0001_m_000000_0/part-m-00000 could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

The above error indicates that no DataNodes are running, or that the DataNodes are not healthy. So please check whether your Sqoop is using the correct hdfs-site.xml / core-site.xml in its classpath, pointing to valid, running DataNodes.
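Without Ambari (the question uses a plain Apache Hadoop 2.8.1 install), `hdfs dfsadmin -report` lists the DataNodes the NameNode knows about. A bash sketch that extracts the live count, assuming the report contains a "Live datanodes (N):" line as Hadoop 2.x prints it; the function name is hypothetical, and the report may require HDFS superuser rights.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print how many live DataNodes the NameNode reports.
# Assumes the Hadoop 2.x `hdfs dfsadmin -report` format with a
# "Live datanodes (N):" line; prints 0 if no such line is found.
live_datanode_count() {
  local report n
  report="$(hdfs dfsadmin -report 2>/dev/null)" || return 1
  n="$(printf '%s\n' "$report" \
    | sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' \
    | head -n 1)"
  echo "${n:-0}"
}
```

A count of 0 matches the "There are 0 datanode(s) running" message; in that case start the DataNode (for example with start-dfs.sh on a single-machine setup) and check its log before rerunning the import.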


You can also try running your Sqoop command with the "--verbose" option to see the classpath setting and confirm that it includes the correct "hadoop/conf" directory, something like "/usr/hdp/2.6.0.3-8/hadoop/conf".
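Besides reading the --verbose output, you can check the configuration directory itself. A bash sketch, assuming the client takes its configuration from HADOOP_CONF_DIR (the function name is hypothetical): it reports whether core-site.xml and hdfs-site.xml are present there.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a Hadoop configuration directory
# (default: $HADOOP_CONF_DIR) contains core-site.xml and hdfs-site.xml.
# Returns non-zero and lists the missing files on stderr otherwise.
check_hadoop_conf_dir() {
  local dir="${1:-${HADOOP_CONF_DIR:-}}" f missing=0
  if [[ -z "$dir" ]]; then
    echo "no directory given and HADOOP_CONF_DIR is not set" >&2
    return 1
  fi
  for f in core-site.xml hdfs-site.xml; do
    if [[ ! -f "$dir/$f" ]]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

The same directory should normally appear in the output of `hadoop classpath`, and `hdfs getconf -confKey fs.defaultFS` shows which NameNode that configuration points the client at.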


Please check that the DataNode process is running, and try to put some file into HDFS to see whether your HDFS write operations work:

- As root, look for the DataNode process: ps -ef | grep DataNode

- Switch to the hdfs user: su - hdfs

- As hdfs, copy a file into HDFS: hdfs dfs -put /var/log/messages /tmp
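The put test can also clean up after itself. A bash sketch (the function name and default path are hypothetical) that writes a small temporary file to HDFS, reads it back with `hdfs dfs -cat`, and removes it; run it as a user allowed to write to the target path, such as hdfs. While no DataNode is running it would be expected to fail with the same "could only be replicated to 0 nodes" error seen in the import.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a small file to HDFS, read it back, clean up.
# The default remote path is an assumption; pick one the user may write to.
hdfs_smoke_test() {
  local remote="${1:-/tmp/hdfs_smoke_test.txt}" local_file status=0
  local_file="$(mktemp)" || return 1
  echo "hdfs smoke test pid $$" > "$local_file"
  # -put -f overwrites a file left over from an earlier run.
  if hdfs dfs -put -f "$local_file" "$remote" &&
     [[ "$(hdfs dfs -cat "$remote")" == "$(cat "$local_file")" ]]; then
    echo "OK: wrote and read back $remote"
  else
    echo "FAILED: could not write or read back $remote" >&2
    status=1
  fi
  hdfs dfs -rm "$remote" >/dev/null 2>&1 || true
  rm -f "$local_file"
  return "$status"
}
```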
