Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: standby namenode & ZKFailoverController down ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

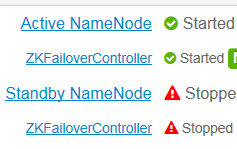

standby namenode & ZKFailoverController down failed to start

Labels: Apache Ambari, Apache Hadoop

Created on 01-22-2018 08:22 PM - edited 08-17-2019 11:39 PM


For some unclear reason, the following services went down, and we have not been able to start them:

Standby NameNode and ZKFailoverController

NameNode log:

ERROR namenode.NameNode (NameNode.java:main(1774)) - Failed to start namenode.

java.lang.IllegalStateException: Could not determine own NN ID in namespace 'hdfsha'. Please ensure that this node is one of the machines listed as an NN RPC address, or configure dfs.ha.namenode.id

at com.google.common.base.Preconditions.checkState(Preconditions.java:172)

017-12-20 18:57:24,771 INFO zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(1019)) - Opening socket connection to server master02.sys56.com/100.4.22.18:2181. Will not attempt to authenticate using SASL (unknown error)

2017-12-21 02:48:29,403 INFO zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(1019)) - Opening socket connection to server master03.sys56.com/100.4.22.18:2181. Will not attempt to authenticate using SASL (unknown error)

CommandLine flags: -XX:CMSInitiatingOccupancyFraction=70 -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=10468982784 -XX:MaxHeapSize=10468982784 -XX:MaxNewSize=1308622848 -XX:MaxTenuringThreshold=6 -XX:NewSize=1308622848 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError

From the ambari-server log:

ERROR [ambari-heartbeat-processor-0] HeartbeatProcessor:554 - Operation failed - may be retried. Service component host: ZKFC, host: master03.sys57.com Action id 475-0 and taskId 1659

ZKFailoverController log:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/zkfc_slave.py", line 230, in <module>

ZkfcSlave().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 314, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/zkfc_slave.py", line 70, in start

ZkfcSlaveDefault.start_static(env, upgrade_type)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/zkfc_slave.py", line 92, in start_static

raise Fail("Could not initialize HA state in zookeeper")

resource_management.core.exceptions.Fail: Could not initialize HA state in zookeeper

2018-01-22 19:48:41,824 - HA state initialization in ZooKeeper failed with 1 error code. Will retry

Command failed after 1 tries

Please advise how to get both services back up.
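The ZKFC failure above ("Could not initialize HA state in zookeeper") involves talking to ZooKeeper, so one basic check is whether each ZooKeeper server is reachable from the failing host. Below is a minimal Python 3 sketch, assuming the quorum string comes from the `ha.zookeeper.quorum` property in core-site.xml in the usual comma-separated `host:port` form. The helper names are hypothetical, 2181 is assumed as the default port when none is given, and a successful TCP connect only shows that the port is open, not that ZooKeeper itself is healthy.

```python
import socket

# Assumption: ZooKeeper's usual client port, used when an entry has no port.
DEFAULT_ZK_PORT = 2181


def parse_quorum(quorum):
    """Split a quorum string like 'host1:2181,host2:2181' into [(host, port), ...]."""
    servers = []
    for entry in quorum.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate stray commas
        host, sep, port = entry.partition(":")
        servers.append((host, int(port) if sep else DEFAULT_ZK_PORT))
    return servers


def check_zk_servers(servers, timeout=3.0):
    """Try a plain TCP connect to each (host, port); return {(host, port): status}."""
    results = {}
    for host, port in servers:
        try:
            # create_connection resolves the name and opens a TCP socket;
            # the with-block closes it again immediately.
            with socket.create_connection((host, port), timeout=timeout):
                results[(host, port)] = "reachable"
        except OSError as exc:  # covers DNS failures, refusals and timeouts
            results[(host, port)] = "unreachable: %s" % exc
    return results
```

For example, `check_zk_servers(parse_quorum("master01.sys57.com:2181,master02.sys57.com:2181,master03.sys57.com:2181"))` returns a dict mapping each server to "reachable" or to the connection error. Those quorum hosts are hypothetical; use the value printed by `grep -A 1 'ha.zookeeper.quorum' /etc/hadoop/conf/core-site.xml`.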

Created 01-22-2018 10:46 PM


Have you recently configured NameNode HA on this cluster?

It looks like your NameNode HA is not configured properly, or there might be a slight difference between the "core-site.xml" and "hdfs-site.xml" files on the Active and Standby NameNodes.

Please check:

# grep -A 1 'dfs.namenode.http-address' /etc/hadoop/conf/hdfs-site.xml


Try copying core-site.xml and hdfs-site.xml from the working Active NameNode to the Standby NameNode machine, or try disabling NameNode HA and then enabling it again.
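Since the suggestion is that the two NameNodes' config files may differ, here is a minimal Python 3 sketch that compares the `<property>` entries of two copies of a Hadoop *-site.xml file instead of comparing them by eye. It assumes both files (for example master01's and master03's hdfs-site.xml) have been copied to one machine. The function names are hypothetical, and only name/value pairs are compared; comments, ordering and `<final>` flags are ignored.

```python
import xml.etree.ElementTree as ET


def load_props(path):
    """Return {name: value} for every <property> in a Hadoop *-site.xml file."""
    root = ET.parse(path).getroot()
    return {
        (p.findtext("name") or "").strip(): (p.findtext("value") or "").strip()
        for p in root.findall("property")
    }


def diff_props(a, b):
    """Return sorted (name, value_in_a, value_in_b) tuples for each differing property.

    A property that exists on only one side is reported with None for the other.
    """
    diffs = []
    for name in sorted(set(a) | set(b)):
        va, vb = a.get(name), b.get(name)
        if va != vb:
            diffs.append((name, va, vb))
    return diffs


def diff_site_files(path_a, path_b):
    """Compare two *-site.xml files property by property."""
    return diff_props(load_props(path_a), load_props(path_b))
```

For example, `diff_site_files("hdfs-site.master01.xml", "hdfs-site.master03.xml")` (hypothetical file names) returns an empty list when every property matches; otherwise each tuple shows the property name and its value on each host.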

Created 01-22-2018 10:51 PM


grep -A 1 'dfs.namenode.http-address' /etc/hadoop/conf/hdfs-site.xml

<name>dfs.namenode.http-address.hdfsha.nn1</name>

<value>master01.sys57.com:50070</value>

--

<name>dfs.namenode.http-address.hdfsha.nn2</name>

<value>master03.sys57.com:50070</value>
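The NameNode error in the question ("Could not determine own NN ID in namespace 'hdfsha'") means the host did not match any of the NameNode addresses configured for the nameservice. The Python 3 sketch below illustrates that idea in simplified form: it is not Hadoop's code, which resolves each configured RPC address and checks whether it belongs to a local interface, whereas this sketch only compares hostnames. It assumes `props` is a dict of hdfs-site.xml name/value pairs, with the NN IDs listed in `dfs.ha.namenodes.<nameservice>` and their RPC addresses in `dfs.namenode.rpc-address.<nameservice>.<nnid>` (the same naming pattern as the `dfs.namenode.http-address.hdfsha.nn1` entries above). The function names and the port 8020 in the test data are assumptions.

```python
import socket


def local_host_names():
    """Names this machine might appear under in the config (short and FQDN)."""
    return {socket.gethostname(), socket.getfqdn()}


def find_own_nn_id(props, nameservice, local_names):
    """Return the NN ID whose RPC host is one of local_names, else None.

    Simplified: compares hostnames case-insensitively instead of resolving
    addresses. Zero or several matches are treated as "cannot determine".
    """
    nn_ids = [
        n.strip()
        for n in props.get("dfs.ha.namenodes." + nameservice, "").split(",")
        if n.strip()
    ]
    wanted = {name.lower() for name in local_names}
    matches = []
    for nn_id in nn_ids:
        addr = props.get("dfs.namenode.rpc-address.%s.%s" % (nameservice, nn_id), "")
        host = addr.rsplit(":", 1)[0].lower()  # drop the ":port" suffix
        if host and host in wanted:
            matches.append(nn_id)
    return matches[0] if len(matches) == 1 else None
```

On the failing host, `find_own_nn_id(props, "hdfsha", local_host_names())` returning `None` would mean that neither configured RPC address matches the machine's own hostname.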

Created 01-22-2018 10:52 PM


Dear Jay, can you explain how to disable NameNode HA and then enable it again?

Created 01-22-2018 11:03 PM


@Jay, do you have any conclusions from the XML?

Created 01-22-2018 11:12 PM


Hi Jay, we checked both XML files on master01 and master03, and they are identical.

Created 01-22-2018 11:24 PM


@Jay, what do you recommend based on the grep output?