Support Questions (Cloudera Community)

What is the best backup and recovery solution for a full Hortonworks production Hadoop deployment (not using third-party backup software)?

Created on 10-19-2017 03:25 PM - edited 09-16-2022 05:25 AM

HDP-2.5.0.0

The Hadoop deployment has the following services:

- HDFS (the NameNode/DataNodes sit on an Isilon, NAS storage)
- YARN
- MapReduce2
- Tez
- Hive
- HAWQ
- PXF
- Pig
- Sqoop
- Oozie
- ZooKeeper
- Ambari Metrics
- Spark

Created 10-19-2017 05:18 PM

You might want to have a look at the following HCC series of articles.

Created 09-28-2018 03:28 PM

The article is very general and does not provide concrete, specific backup and recovery tools.

Created 10-20-2017 10:14 AM

I did see this article, but it carries a disclaimer against production use:

Disclaimer:

1. This article is solely my personal take on disaster recovery in a Hadoop cluster.

2. Disaster recovery is a specialized subject in itself. Do not implement anything based on this article in production until you have a good understanding of what you are implementing.

Does Hortonworks have a formal document on backup and recovery of its Hadoop environments?

If so, where can I find it?

Many thanks

Created on 10-20-2017 02:45 PM - edited 08-17-2019 06:40 PM

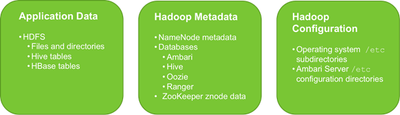

This info might be more helpful to guide you down the road of DR. With HDP in production, you must combine different technologies being offered and tailor these together as your own solution. I've read through many solutions, and the info below is the most critical in my opinion. Remember, preventing data loss is better than recovering from it!

Read these slides first:

https://www.slideshare.net/cloudera/hadoop-backup-and-disaster-recovery

https://www.slideshare.net/hortonworks/ops-workshop-asrunon20150112/72

1. VM Snapshots

- If you're not using VMs, then switch over.

- Nightly VM snapshots of the Ambari server

- VM snapshots of the NameNode

2. Lock down critical directories:

fs.protected.directories: set under the HDFS configs in Ambari

Protect critical directories from deletion. Critical data sets can be deleted by accident, and these catastrophic errors should be avoided by adding appropriate protections. For example, the /user directory is the parent of all user-specific subdirectories, so an attempt to delete the entire /user directory is very likely to be unintentional. To protect against accidental data loss, mark the /user directory as protected. This prevents attempts to delete it unless the directory is already empty.
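After saving `fs.protected.directories` in Ambari and restarting the components it flags, it is worth confirming what the configuration actually contains. A minimal sketch, assuming a POSIX-style shell with the `hdfs` CLI on the PATH: `check_protected` and the `HDFS_CMD` override are my own illustrative names, and `/user` and `/apps/hive/warehouse` are example paths, not a recommended list. `hdfs getconf -confKey` reads the configuration files on the host where it runs, so run it on the NameNode host.

```shell
#!/bin/sh
# Sketch: check that each given directory appears in fs.protected.directories.
# HDFS_CMD is an illustrative override (defaults to the hdfs CLI on PATH).
HDFS_CMD="${HDFS_CMD:-hdfs}"

# check_protected DIR...
# Prints "protected: DIR" or "NOT protected: DIR" for each argument and
# returns non-zero if any directory is missing from the setting.
check_protected() {
  local conf dir missing=0
  # getconf prints the raw comma-separated value from the local config files
  conf="$("$HDFS_CMD" getconf -confKey fs.protected.directories 2>/dev/null)"
  # Drop whitespace so "/user, /apps" and "/user,/apps" compare the same
  conf="$(printf '%s' "$conf" | tr -d '[:space:]')"
  for dir in "$@"; do
    # Surround both sides with commas so /user does not match /user2
    case ",${conf}," in
      *",${dir},"*) echo "protected: ${dir}" ;;
      *) echo "NOT protected: ${dir}"; missing=1 ;;
    esac
  done
  return "$missing"
}
```

`check_protected /user /apps/hive/warehouse` prints one line per directory and returns non-zero if any of them is missing from the setting, so it can also serve as a simple monitoring check.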

3. Backups

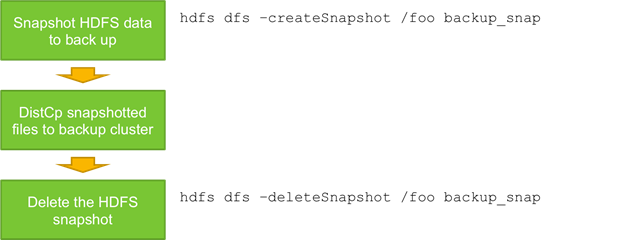

Backups can be automated using tools like Apache Falcon (being deprecated in HDP 3.0; switch to the workflow editor + DistCp) and Apache Oozie.

Using Snapshots

HDFS snapshots can be combined with DistCp to create the basis for an online backup solution. Because a snapshot is a read-only, point-in-time copy of the data, it can be used to back up files while HDFS is still actively serving application clients. Backups can even be automated using tools like Apache Falcon and Apache Oozie.
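The snapshot + DistCp combination described above can be sketched as a small shell function. This is a sketch under stated assumptions, not a Hortonworks-provided tool: it assumes a POSIX-style shell, that the source directory has already been made snapshottable with `hdfs dfsadmin -allowSnapshot`, and that the backup target (for example the hypothetical `hdfs://backup-nn:8020/backups/important-dir`) is reachable from the cluster. `backup_dir`, `HDFS_CMD` and `HADOOP_CMD` are illustrative names.

```shell
#!/bin/sh
# Sketch: snapshot-based online backup. Take a read-only snapshot of SRC_DIR,
# then DistCp the frozen snapshot to TARGET_URI.
# Assumptions: SRC_DIR is already snapshottable (hdfs dfsadmin -allowSnapshot),
# the caller may create snapshots on it, and TARGET_URI is reachable.
# HDFS_CMD / HADOOP_CMD are illustrative overrides for the CLI binaries.
HDFS_CMD="${HDFS_CMD:-hdfs}"
HADOOP_CMD="${HADOOP_CMD:-hadoop}"

# backup_dir SRC_DIR TARGET_URI  (prints the snapshot name on success)
backup_dir() {
  local src="$1" target="$2" snap
  # UTC timestamp: names sort chronologically and do not collide across runs
  snap="backup-$(date -u +%Y%m%d-%H%M%S)"

  "$HDFS_CMD" dfs -createSnapshot "$src" "$snap" >/dev/null || return 1

  # Copy from the read-only .snapshot path rather than the live directory,
  # so files changing during the copy cannot make the backup inconsistent.
  # -update copies the snapshot's contents into TARGET_URI and skips files
  # that already match there.
  "$HADOOP_CMD" distcp -update "${src}/.snapshot/${snap}" "$target" >/dev/null || return 1

  echo "$snap"
}
```

A cron entry or an Oozie shell action could call `backup_dir /tmp/important-dir hdfs://backup-nn:8020/backups/important-dir` nightly. The function never deletes old `backup-*` snapshots, so those need to be removed periodically with `hdfs dfs -deleteSnapshot`.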

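Example:

Make the directory snapshottable and take a snapshot before anything goes wrong:

sudo -u hdfs hdfs dfsadmin -allowSnapshot /tmp/important-dir

sudo -u hdfs hdfs dfs -createSnapshot /tmp/important-dir first-snapshot

“Accidentally” remove the important file:

sudo -u hdfs hdfs dfs -rm -r -skipTrash /tmp/important-dir/important-file.txt

Recover the file from the snapshot:

sudo -u hdfs hdfs dfs -cp /tmp/important-dir/.snapshot/first-snapshot/important-file.txt /tmp/important-dir

sudo -u hdfs hdfs dfs -cat /tmp/important-dir/important-file.txt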
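When you don't know exactly which files were removed, `hdfs snapshotDiff <dir> <snapshot> .` lists what changed between the snapshot and the current state (`.`), marking deleted entries with `-`. A minimal sketch that restores every deleted entry, assuming a POSIX-style shell, paths without whitespace, and that each deleted entry is printed as `-` followed by a `./`-relative path (check this against the output of your Hadoop version). `restore_deleted` and `HDFS_CMD` are illustrative names.

```shell
#!/bin/sh
# Sketch: restore every entry deleted from DIR since snapshot SNAP.
# `hdfs snapshotDiff DIR SNAP .` compares the snapshot with the current
# state ("."); entries deleted since then are reported with a "-" marker.
# Assumes paths contain no whitespace. HDFS_CMD is an illustrative override.
HDFS_CMD="${HDFS_CMD:-hdfs}"

# restore_deleted DIR SNAP
restore_deleted() {
  local dir="$1" snap="$2" rel
  "$HDFS_CMD" snapshotDiff "$dir" "$snap" . |
    awk '$1 == "-" { print $2 }' |
    while read -r rel; do
      rel="${rel#./}"  # diff paths are relative to DIR, e.g. ./important-file.txt
      echo "restoring ${dir}/${rel}"
      # -p preserves timestamps, ownership and permissions; </dev/null keeps
      # the command from reading the loop's input
      "$HDFS_CMD" dfs -cp -p "${dir}/.snapshot/${snap}/${rel}" "${dir}/${rel}" </dev/null
    done
}
```

For the example above, `restore_deleted /tmp/important-dir first-snapshot` would copy `important-file.txt` back. Run it as a user that can read the snapshot and write to the directory (the example uses the `hdfs` user); renamed (`R`), added (`+`) and modified (`M`) entries are left alone.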

HDFS Snapshots Overview

A snapshot is a point-in-time, read-only image of the entire file system or of a subtree of the file system.

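HDFS snapshots are useful for protecting against user error, as in the accidental-delete example above, and, combined with DistCp, as the basis for an online backup solution.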
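Creating a snapshot is cheap, but a snapshot keeps the blocks of any files deleted after it was taken, so snapshots that are never removed keep consuming space. A minimal retention sketch, assuming a POSIX-style shell, that the snapshots to prune share a name prefix followed by a sortable UTC timestamp (a hypothetical convention such as `backup-20240131-020000`), and that `hdfs dfs -ls <dir>/.snapshot` prints one `d...` line per snapshot ending in its path. `prune_snapshots` and `HDFS_CMD` are illustrative names.

```shell
#!/bin/sh
# Sketch: keep the newest KEEP snapshots of DIR whose names start with PREFIX
# and delete the older ones. Assumes names embed a sortable UTC timestamp
# (hypothetical convention, e.g. backup-20240131-020000), so that sorting
# the names also sorts them by age. HDFS_CMD is an illustrative override.
HDFS_CMD="${HDFS_CMD:-hdfs}"

# prune_snapshots DIR PREFIX KEEP
prune_snapshots() {
  local dir="$1" prefix="$2" keep="$3" name
  # -ls on DIR/.snapshot prints one "d..." line per snapshot, path last.
  # Take the basename, keep only names starting with PREFIX, sort newest
  # first, then skip the first KEEP lines so only older snapshots remain.
  "$HDFS_CMD" dfs -ls "${dir}/.snapshot" |
    awk -v p="$prefix" '/^d/ { n = split($NF, parts, "/"); if (index(parts[n], p) == 1) print parts[n] }' |
    sort -r |
    tail -n "+$((keep + 1))" |
    while read -r name; do
      echo "deleting ${dir}/.snapshot/${name}"
      # </dev/null stops the command from reading the loop's input
      "$HDFS_CMD" dfs -deleteSnapshot "$dir" "$name" </dev/null
    done
}
```

`prune_snapshots /tmp/important-dir backup- 7` keeps the seven newest `backup-*` snapshots and deletes the older ones; snapshots with other names, such as `first-snapshot`, are never touched.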

DistCp Overview

Hadoop DistCp (distributed copy) can be used to copy data between Hadoop clusters or within a Hadoop cluster. DistCp can copy just files from a directory or it can copy an entire directory hierarchy. It can also copy multiple source directories to a single target directory.
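For the multi-source case, the source URIs come first and the target last. A minimal sketch, assuming a POSIX-style shell; `distcp_to` and `HADOOP_CMD` are illustrative names, and the NameNode addresses in the usage line are hypothetical. The function deliberately omits `-update`: without it, each source directory is copied as a subdirectory of the target (`/target/first`, `/target/second`), whereas with `-update` or `-overwrite` the sources' contents are merged directly into the target and DistCp aborts if two sources contain an entry with the same name.

```shell
#!/bin/sh
# Sketch: copy one or more source directories into a single target with DistCp.
# HADOOP_CMD is an illustrative override (defaults to the hadoop CLI on PATH).
HADOOP_CMD="${HADOOP_CMD:-hadoop}"

# distcp_to TARGET SRC [SRC...]
distcp_to() {
  if [ "$#" -lt 2 ]; then
    echo "usage: distcp_to TARGET SRC [SRC...]" >&2
    return 2
  fi
  local target="$1"
  shift
  # DistCp runs as a MapReduce job, so the copy is spread across the cluster.
  # Argument order is sources first, target last. Without -update, each
  # source directory ends up as a subdirectory of the target.
  "$HADOOP_CMD" distcp "$@" "$target"
}
```

Copying between clusters works the same way, e.g. `distcp_to hdfs://nn2:8020/target hdfs://nn1:8020/source/first hdfs://nn1:8020/source/second`.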

Created 09-28-2018 04:53 AM

Interesting info. However, the second slide deck you pointed out, Hortonworks Operational Best Practice, is not directly related to the topic.