Support Questions (Cloudera Community)

What setting controls the max # of rows that can be exported from Zeppelin as CSV?

Labels: Apache Zeppelin

Created 09-29-2017 06:26 AM

Created on 10-02-2017 03:20 PM - edited 08-17-2019 10:09 PM

Hello @Raju

The key thing to know when handling dataframes in Zeppelin is that a result set is imported into the notebook when it is queried. That table is also read into memory whenever the notebook is accessed.

To prevent a query from bringing back too much data and crashing the server (this can happen very quickly), each interpreter sets a limit on the number of rows that are brought back to Zeppelin (the default for most interpreters is ~1000).

=> This does not mean that queries returning more than a thousand rows will not be executed, just that only the first 1000 rows are actually shown in Zeppelin.
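To make the distinction concrete, here is a minimal sketch in plain Scala (not Zeppelin's actual interpreter code) of how a row limit behaves: the query still produces every row, but only the first `maxResult` rows are handed back to the notebook. `DisplayedResult` and `takeForDisplay` are hypothetical names used only for illustration.

```scala
// Hypothetical model of an interpreter-side row limit; this is NOT Zeppelin's source code.
final case class DisplayedResult(rows: Vector[Seq[String]], truncated: Boolean)

// Pulls at most maxResult + 1 rows from the (possibly huge) result iterator.
// The one extra row is only used to detect that the result was cut off.
def takeForDisplay(allRows: Iterator[Seq[String]], maxResult: Int): DisplayedResult = {
  require(maxResult >= 0, "maxResult must be non-negative")
  val peekLimit = if (maxResult == Int.MaxValue) maxResult else maxResult + 1
  val peeked = allRows.take(peekLimit).toVector
  DisplayedResult(peeked.take(maxResult), truncated = peeked.size > maxResult)
}

// The "query" below produces 5000 rows; the interpreter only hands back 1000 of them.
val queryResult: Iterator[Seq[String]] =
  Iterator.range(0, 5000).map(i => Seq(i.toString, s"name_$i"))
val shown = takeForDisplay(queryResult, maxResult = 1000)
println(s"rows shown: ${shown.rows.size}, truncated: ${shown.truncated}")
```

Running it prints `rows shown: 1000, truncated: true`: the full query ran, but the notebook only ever holds the first 1000 rows. Reading lazily from an iterator is the point of the design, since the rest of the result never has to be pulled into memory.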

You can adjust the number of rows interpreter by interpreter; look for the "maxResult" properties.

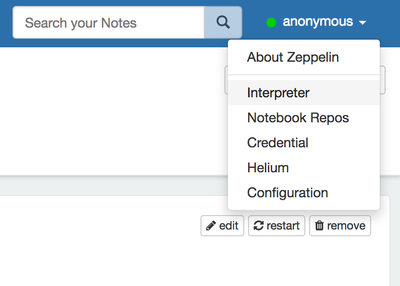

Go to the interpreter configuration page (upper right-hand corner).

For example, for Spark:

zeppelin.spark.maxResult

or for Livy:

zeppelin.livy.spark.sql.maxResult

For the JDBC interpreter (there's always an exception to the rule 🙂):

common.max_count

When using Zeppelin's export-to-CSV feature on a dataframe, you simply export what has been pushed back to Zeppelin. If the max number of rows is set to a thousand, you'll never have more than a thousand rows in your CSV.

=> The actual number of rows in your result set may be larger; it simply hasn't been fully read back into Zeppelin.

This feature is great when working with small result sets. It can, however, be deceiving, as the results are truncated as soon as the max number of rows has been reached.
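The same effect applies to the CSV export. Below is a minimal sketch in plain Scala, assuming the export just serializes whatever rows the notebook already holds; `csvField`, `exportShownRows` and the row counts are hypothetical and not part of Zeppelin's API. However large the query result is, the generated CSV can never contain more than `maxResult` data rows.

```scala
// Hypothetical sketch: the CSV export can only serialize rows the notebook already holds.

// Minimal CSV quoting: wrap a field in quotes (doubling inner quotes) if it contains
// a comma, a quote or a line break.
def csvField(value: String): String =
  if (value.exists(c => c == ',' || c == '"' || c == '\n' || c == '\r'))
    "\"" + value.replace("\"", "\"\"") + "\""
  else value

def exportShownRows(header: Seq[String], shownRows: Seq[Seq[String]]): String =
  (header +: shownRows).map(_.map(csvField).mkString(",")).mkString("\n")

val maxResult = 1000          // e.g. the value of zeppelin.spark.maxResult
val totalRowsInQuery = 250000 // real size of the result set, never seen by the export
// Only the first maxResult rows ever reached the notebook.
val shownRows =
  (0 until math.min(maxResult, totalRowsInQuery)).map(i => Seq(i.toString, s"item $i"))

val csv = exportShownRows(Seq("id", "label"), shownRows)
val dataLines = csv.split("\n").length - 1 // minus the header line
println(s"rows in query: $totalRowsInQuery, rows in CSV: $dataLines")
```

This prints `rows in query: 250000, rows in CSV: 1000`. Nothing in the exported file tells you that 249,000 rows are missing, which is why the export is only trustworthy for small result sets.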

If you're looking to export an entire table or a large subset, you should probably do it programmatically, for example by saving the table to a file system such as HDFS.

For Spark (2.0 and later):

    // coalesce(1) brings all results into a single file; otherwise you get one file per partition.
    // Spark creates /path/to/sample_file.csv as a directory holding that part file.
    dataframe.coalesce(1)
      .write
      .option("header", "true")
      .csv("/path/to/sample_file.csv")

FYI, notebooks are simply JSON objects organized by paragraph. Just open up any notebook to get a sense of the structure.

In HDP they are saved in:

/usr/hdp/current/zeppelin-server/notebook/[id of notebook]/note.json

Created 10-03-2017 04:11 AM

Thanks @Matthieu Lamairesse