Worker (DataNode) disk created by mistake under "/" instead of under "/grid/sdd"

Created on 04-02-2018 02:40 PM - edited 08-17-2019 09:28 PM


Hi all,

We have an Ambari cluster with 165 worker machines.

Each worker has 4 disks (/grid/sda, /grid/sdb, /grid/sdc, /grid/sdd).

on of the workers have only 3 disks insted 4 disks

So after the blueprint installation, on that worker sdd was created under "/" and not under /grid.

This caused the / partition to become nearly 100% full.

So we want to create a new disk on this worker as /grid/sdd

and copy the content under /sdd to /grid/sdd.

The procedure should be like this:

1. create a new disk /grid/sdd, update /etc/fstab and mount /grid/sdd
2. stop all worker components
3. cp -rp /sdd/* /grid/sdd
4. start all worker components

Please advise whether steps 1-4 are the right steps for my problem.

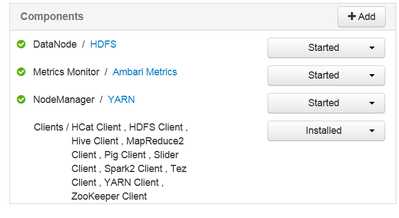

workers components

Created 04-03-2018 07:46 AM


Yes, I think the steps are correct, but for clarity I would add a step between 2 and 3 :-).

That is: mount the new FS and update /etc/fstab before copying the data across from the old location.

Cheers 🙂
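The extra step of mounting the new filesystem and recording it in /etc/fstab might look like the sketch below. The device name /dev/sdd1, the ext4 filesystem type and the mount options are assumptions for illustration and do not come from this thread; the helper name fstab_entry is hypothetical. Check the real device with lsblk before using anything like this.

```shell
#!/bin/sh
# Sketch only: /dev/sdd1, ext4 and "defaults,noatime" are assumed values.

# Print an /etc/fstab entry for a device and mount point.
fstab_entry() {
    device=$1
    mount_point=$2
    fs_type=${3:-ext4}
    printf '%s %s %s defaults,noatime 0 0\n' "$device" "$mount_point" "$fs_type"
}

# Show the line that would be appended; nothing is written here.
fstab_entry /dev/sdd1 /grid/sdd

# On the worker itself (as root) the sequence would be roughly:
#   mkdir -p /grid/sdd
#   fstab_entry /dev/sdd1 /grid/sdd >> /etc/fstab
#   mount /grid/sdd
#   mountpoint -q /grid/sdd && echo "/grid/sdd is mounted"
```

Printing the entry first and appending it by hand keeps a mistyped device name out of /etc/fstab.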

Created 04-02-2018 02:52 PM


Here is an HCC-validated document that should help you carry out your solution successfully:

How to Move or Change HDFS DataNode Directories

Hope that helps 🙂

Created 04-02-2018 02:58 PM


My scenario is a little different: we do not need to change the Ambari configuration, since /grid/sdd is already configured under HDFS --> config (DataNode directories).

Second, by using cp -rp I am copying the folders with their permissions as is.

So can you approve my steps 1-4?
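A small sketch of the copy step, run here against throwaway directories rather than the real /sdd and /grid/sdd. It copies `SRC/.` instead of `SRC/*` because the shell glob `*` skips hidden files; `cp -rp` keeps modes and timestamps, and ownership too when run as root. The function name copy_tree and the file names in the demo are made up for illustration.

```shell
#!/bin/sh
# Copy the contents of one directory into another, keeping modes, timestamps
# and (when run as root) ownership. "src/." also picks up hidden files, which
# "src/*" would skip.
copy_tree() {
    src=$1
    dst=$2
    mkdir -p "$dst" && cp -rp "$src/." "$dst/"
}

# Demonstration on temporary directories instead of /sdd and /grid/sdd.
demo=$(mktemp -d)
mkdir -p "$demo/sdd/hadoop/hdfs/data"
echo block > "$demo/sdd/hadoop/hdfs/data/blk_1"
chmod 640 "$demo/sdd/hadoop/hdfs/data/blk_1"
echo hidden > "$demo/sdd/.marker"

copy_tree "$demo/sdd" "$demo/grid/sdd"

# diff -r exits 0 only when both trees have the same files and contents.
diff -r "$demo/sdd" "$demo/grid/sdd" && echo "trees match"
```

Running a comparison like `diff -r` before deleting anything under /sdd gives a cheap check that the copy is complete.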

Created 04-02-2018 03:45 PM


Your steps look okay, but I still think the updated process below describes it better. If it's a production cluster then you MUST take the necessary precautions, like backing up the data.

1. Create the new disk /grid/sdd, update /etc/fstab and mount /grid/sdd. OK

Make sure the old mount point is accessible, because you will copy data from it to the new mount.

2. Stop the cluster instead of only the DataNodes, as documented. There could be a reason for this, e.g. some processes/jobs writing to those disks.

3. Go to the Ambari HDFS configuration and edit the DataNode directory configuration: remove /hadoop/hdfs/data and /hadoop/hdfs/data1, add /grid/sda,/grid/sdb,/grid/sdc,/grid/sdd, and save.

4. Log in to each DataNode VM and copy the contents of /data_old /data1 into /grid/sda,/grid/sdb,/grid/sdc,/grid/sdd.

5. Change the ownership of /grid/sda,/grid/sdb,/grid/sdc,/grid/sdd and everything under them to “hdfs”.

6. Start the cluster.
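The ownership from step 5 can be checked before anything is started again. The sketch below lists everything under a directory that is not owned by a given user; an empty result means the ownership is consistent. The helper name not_owned_by is hypothetical, and the `hadoop` group in the commented chown is an assumption about a typical layout, not something stated in this thread.

```shell
#!/bin/sh
# List every file or directory under $1 that is NOT owned by user $2
# (a user name or a numeric uid).
not_owned_by() {
    find "$1" ! -user "$2" -print
}

# Demonstration on a temporary directory owned by the current user.
check_dir=$(mktemp -d)
touch "$check_dir/file_a"
leftover=$(not_owned_by "$check_dir" "$(id -u)")
if [ -z "$leftover" ]; then
    echo "ownership is consistent"
fi

# On the worker (as root) the equivalent would be roughly:
#   chown -R hdfs:hadoop /grid/sdd     # group name is an assumption
#   not_owned_by /grid/sdd hdfs        # should print nothing
```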

Created 04-02-2018 10:06 PM


@Geoffrey Shelton Okot, first, thank you.

You said that you prefer to do ambari-server stop instead of stopping the components on the worker machine,

but stopping the whole cluster with ambari-server stop will cause downtime on this cluster!

So I do not feel good about this action.

Stopping the components only on the worker machine is less dramatic than stopping the whole cluster in production.

Second, regarding your step 3: as I said, the DataNode directory is configured correctly, so there is no need to change anything.

Regarding your step 4: why do I need to access each DataNode when the problem is only on one DataNode (worker75)?

As you know, we have 165 worker machines but only worker75 has the problem.

So all the other DataNodes are OK; the only problem is with one worker machine. As I mentioned, sdd was not created under /grid; instead it was created under "/", which is why we want to copy the content under /sdd to /grid/sdd.
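To confirm on worker75 that a directory really sits on the root partition rather than on its own disk, one option is to ask df which mount point backs the path. A minimal sketch; the helper name mount_of is hypothetical.

```shell
#!/bin/sh
# Print the mount point of the filesystem that holds the given path.
# df -P prints one POSIX-format line per filesystem; field 6 is "Mounted on".
mount_of() {
    df -P "$1" | awk 'NR==2 {print $6}'
}

# The root directory is always backed by the "/" mount.
mount_of /

# On the affected worker, "mount_of /sdd" printing "/" would confirm that the
# data is filling the root partition; after the fix, "mount_of /grid/sdd"
# should print "/grid/sdd".
```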

Created 04-02-2018 10:19 PM


Sorry, I was misled by your sentence "on of the workers have only 3 disks insted 4 disks". I think there was a typo: you wrote "on" instead of "one", and that completely changes the meaning of the sentence.

Yes, true: if it's only one DataNode, that should not impact the whole cluster.

The other method would be to decommission the worker node (DataNode), mount the new FS and then recommission it 🙂

It's cool if all worked fine for you.

Created 04-02-2018 10:54 PM


So, finally, are my steps 1-4 correct, or do you want to add something?
