Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: yarn can read/write to hdfs, but cannot execut...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

yarn can read/write to hdfs, but cannot execute file on hdfs( execute permission is given through Ranger

- Labels:

-

Apache Ranger

-

Apache Sqoop

-

Apache YARN

Created on 03-05-2018 12:48 PM - edited 08-17-2019 05:11 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm using HDP-2.6, using Ranger to manager permission of HDFS.

already give all permission(read, write, execute) to user yarn of folder /data

now I want to use sqoop to import data from mysql to hive,

each time I got the following exception

30998 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - Loading data to table ods.test_table 30998 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - Loading data to table ods.test_table 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - Failed with exception org.apache.hadoop.security.AccessControlException: Permission denied: user=yarn, access=EXECUTE, inode="/data/hive/warehouse/ods/test_table/part-m-00000.gz":admin:hadoop:drwx------ 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - Failed with exception org.apache.hadoop.security.AccessControlException: Permission denied: user=yarn, access=EXECUTE, inode="/data/hive/warehouse/ods/test_table/part-m-00000.gz":admin:hadoop:drwx------ 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:353) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:353) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:292) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:292) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:238) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:238) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkDefaultEnforcer(RangerHdfsAuthorizer.java:428) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkDefaultEnforcer(RangerHdfsAuthorizer.java:428) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:365) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:365) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1950) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1950) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1934) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1934) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkOwner(FSDirectory.java:1903) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkOwner(FSDirectory.java:1903) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirAttrOp.setPermission(FSDirAttrOp.java:63) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSDirAttrOp.setPermission(FSDirAttrOp.java:63) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.setPermission(FSNamesystem.java:1850) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.setPermission(FSNamesystem.java:1850) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.setPermission(NameNodeRpcServer.java:821) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.setPermission(NameNodeRpcServer.java:821) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.setPermission(ClientNamenodeProtocolServerSideTranslatorPB.java:465) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.setPermission(ClientNamenodeProtocolServerSideTranslatorPB.java:465) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:640) 31222 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:640) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2351) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2351) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2347) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2347) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at java.security.AccessController.doPrivileged(Native Method) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at java.security.AccessController.doPrivileged(Native Method) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at javax.security.auth.Subject.doAs(Subject.java:422) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at javax.security.auth.Subject.doAs(Subject.java:422) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1869) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1869) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2347) 31223 [Thread-28] INFO org.apache.sqoop.hive.HiveImport - at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2347)

I checked Ranger audit log, found something like this:

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.444","access":"READ","resource":"/data/hive/warehouse/ods/test_table","resType":"path","action":"read","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269952","seq_num":433113,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.446","access":"READ","resource":"/data/hive/warehouse/ods/test_table/part-m-00003.gz","resType":"path","action":"read","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table/part-m-00003.gz","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269953","seq_num":433115,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.446","access":"WRITE","resource":"/data/hive/warehouse/ods/test_table/part-m-00002.gz","resType":"path","action":"write","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269954","seq_num":433117,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.446","access":"WRITE","resource":"/data/hive/warehouse/ods/test_table/part-m-00002.gz","resType":"path","action":"write","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269955","seq_num":433119,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.447","access":"READ","resource":"/data/hive/warehouse/ods/test_table","resType":"path","action":"read","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269956","seq_num":433121,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.447","access":"EXECUTE","resource":"/data/hive/warehouse/ods/test_table/part-m-00000.gz","resType":"path","action":"execute","result":0,"policy":-1,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"hadoop-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269957","seq_num":433123,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.448","access":"EXECUTE","resource":"/data/hive/warehouse/ods/test_table/part-m-00001.gz","resType":"path","action":"execute","result":0,"policy":-1,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"hadoop-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269958","seq_num":433125,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.448","access":"EXECUTE","resource":"/data/hive/warehouse/ods/test_table/part-m-00002.gz","resType":"path","action":"execute","result":0,"policy":-1,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"hadoop-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269959","seq_num":433127,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.449","access":"WRITE","resource":"/data/hive/warehouse/ods/test_table/part-m-00003.gz","resType":"path","action":"write","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269960","seq_num":433129,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.449","access":"WRITE","resource":"/data/hive/warehouse/ods/test_table/part-m-00003.gz","resType":"path","action":"write","result":1,"policy":10,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"ranger-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269961","seq_num":433131,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

{"repoType":1,"repo":"xstudy_hadoop","reqUser":"yarn","evtTime":"2018-03-05 17:33:18.451","access":"EXECUTE","resource":"/data/hive/warehouse/ods/test_table/part-m-00003.gz","resType":"path","action":"execute","result":0,"policy":-1,"reason":"/data/hive/warehouse/ods/test_table","enforcer":"hadoop-acl","cliIP":"10.0.30.2","agentHost":"master","logType":"RangerAudit","id":"727c87c5-eeba-465e-ad8d-f1129c01801f-269962","seq_num":433133,"event_count":1,"event_dur_ms":0,"tags":[],"cluster_name":"xstudy"}

At first I thought that Ranger permission checker is not involved, but from the audit log, yarn just cannot get 'execute' permission, but I'm 100% sure that 'execute' permission is given.

I've two questions:

- Why yarn cannot get 'execute' permission on folder /data?

- Sqoop already imported data, why yarn asked 'execute' permission?

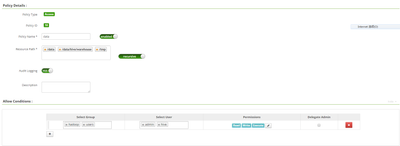

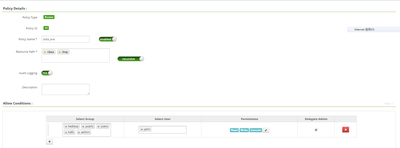

I attached screenshot for Ranger policy:

the policy named 'data' is the one I created at first, for 'yarn' and other users, as it keeps failing, I create second policy named 'data_exe', that's intended for 'yarn' user only

Created 03-07-2018 09:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

by the way, how could I make the job run as 'admin' other than yarn?, as admin is the user submitted the job

Created 03-07-2018 07:43 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I checked out Ranger source code, I saw that Ranger would log some useful information at DEBUG level, do you know how to enable DEBUG level for ranger hdfs-agent, and where to find the log? in namenode log? or in oozie job log?

Created 03-13-2018 08:52 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Jinyu Li,

For Ranger hdfs-agent debug, please change log4j for NameNode and add this:

log4j.logger.org.apache.ranger=DEBUG

Your debug log messages will appear in NameNode log.

Hope this helps !

Created 03-16-2018 10:25 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Jinyu Li

your issue is likely produced by Hive Permission Inheritance.

After creating the tables, the Sqoop app tries to change the owner/mode of the created HDFS files.

Ranger permissions (even rwx) do not give rights to change POSIX owner/mode, which is why the operation fails. Such failure is classified as "EXECUTE" action by Ranger. You can find more details in the HDFS Audit log, stored locally on the NameNode.

Solution: Could you please try to set "hive.warehouse.subdir.inherit.perms" to false and re-run the job? This stops Hive Imports from trying to set permissions, which is fine when Ranger is the primary source of authorization.

see https://cwiki.apache.org/confluence/display/Hive/Permission+Inheritance+in+Hive for more details.

Best, Benjamin

- « Previous

-

- 1

- 2

- Next »