Community Articles

- Cloudera Community

- Support

- Community Articles

- Apache Ambari Workflow Manager View for Apache Ooz...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 02-18-2017 07:36 PM - edited 08-17-2019 02:19 PM

In this tutorial, I will walk you through creating a Sqoop action using WFM on HDP 2.5+.

First we need a table, we're going to use MySQL as source database and table.

create table imported (rowkey int, value varchar(25)); insert into imported (rowkey, value) values (1, "john doe"); insert into imported (rowkey, value) values (2, "jane doe");

I want to make sure that all cluster nodes can access this table and going to grant access to user centos on the LAN, you may have different restrictions on the network and by all means consult your DBAs.

GRANT ALL PRIVILEGES ON *.* TO 'centos'@'172.22.65.%'

IDENTIFIED BY 'password'

WITH GRANT OPTION;

FLUSH PRIVILEGES;

GRANT ALL PRIVILEGES ON *.* TO 'centos'@'localhost'

IDENTIFIED BY 'password'

WITH GRANT OPTION;

FLUSH PRIVILEGES;

I want to make sure user centos can access the table

mysql –u centos –p ➢ password mysql> select * from test.imported; +--------+----------+ | rowkey | value | +--------+----------+ | 1 | john doe | | 2 | jane doe | +--------+----------+

Finally, I'd like to test my sqoop works

sqoop list-tables --connect jdbc:mysql://source-1/test --username centos --password password 17/02/18 15:13:13 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. imported

Also, in case of Oozie with HCatalog and Sqoop, every node that will execute job attempts must have HCat and Sqoop client installed.

I want to save a password in a file so that I could access it w/out a prompt and not in clear text.

echo -n "password" > .password hdfs dfs -put .password /user/$USER/ hdfs dfs -chmod 400 /user/$USER/.password rm .password [centos@source-1 ~]$ hdfs dfs -ls Found 1 items -r-------- 3 centos hdfs 8 2017-02-18 15:13 .password [centos@source-1 ~]$ hdfs dfs -cat .password password[centos@source-1 ~]$

Let's run the list command again referencing the file instead of --password argument

sqoop list-tables --connect jdbc:mysql://source-1/test --username centos --password-file /user/centos/.password 17/02/18 15:14:43 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset. imported

You can find more details in our comprehensive documentation on data movement http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.5.3/bk_data-movement-and-integration/content/sqo...

Also, make sure mysql-connector-java is at an appropriate version. RHEL 6 bundles version 5.1.17 which does not work in later versions of HDP, we bundle 5.1.37 in HDP-UTILS and the only way to active it is to run the following

yum downgrade mysql-connector-java

then in your /usr/share/java directory you should be able to see correct connectors

lrwxrwxrwx. 1 root root 31 Feb 18 15:29 jdbc-mysql.jar -> mysql-connector-java-5.1.37.jar lrwxrwxrwx. 1 root root 31 Feb 18 15:29 mysql-connector-java.jar -> mysql-connector-java-5.1.37.jar

You have a choice to update the Oozie sharelib with this connector or bundle it as part of workflow lib. I'm going to do the latter for time's sake.

Before I start authoring a workflow, I'd like to confirm my sqoop import works, I will execute it on the command line first

sqoop import --connect jdbc:mysql://172.22.65.123/test --username centos --password-file /user/$USER/.password --table imported --hcatalog-table imported --create-hcatalog-table --hcatalog-storage-stanza "STORED AS ORCFILE" --hcatalog-home /usr/hdp/current/hive-webhcat --map-column-hive value=STRING --split-by rowkey

I'm choosing HCatalog import as it is more efficient than --hive-import, in case of latter, it needs to make one extra step of moving imported data from staging directory to hive and spawning an extra container. With --hcatalog-table everything happens in one shot. Also, benefit here is that you can create an ORC table from the command line instead of going into Hive and altering a table to set it to ORC.

Let's see what we got as a result

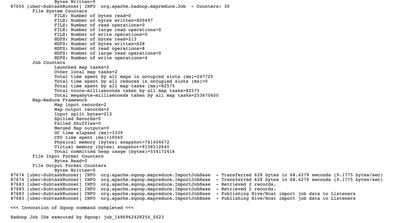

Map-Reduce Framework Map input records=2 Map output records=2 Input split bytes=213 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=837 CPU time spent (ms)=8890 Physical memory (bytes) snapshot=718036992 Virtual memory (bytes) snapshot=9154256896 Total committed heap usage (bytes)=535298048 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 17/02/18 15:32:05 INFO mapreduce.ImportJobBase: Transferred 628 bytes in 70.6267 seconds (8.8918 bytes/sec) 17/02/18 15:32:05 INFO mapreduce.ImportJobBase: Retrieved 2 records. 17/02/18 15:32:05 INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners

Let's see what it looks like in Hive

[centos@source-1 ~]$ beeline Beeline version 1.2.1000.2.6.0.0-493 by Apache Hive beeline> !connect jdbc:hive2://localhost:10000 "" "" Connecting to jdbc:hive2://localhost:10000 Connected to: Apache Hive (version 1.2.1000.2.6.0.0-493) Driver: Hive JDBC (version 1.2.1000.2.6.0.0-493) Transaction isolation: TRANSACTION_REPEATABLE_READ 0: jdbc:hive2://localhost:10000> select * from default.imported; +------------------+-----------------+--+ | imported.rowkey | imported.value | +------------------+-----------------+--+ | 1 | john doe | | 2 | jane doe | +------------------+-----------------+--+ 2 rows selected (6.414 seconds)

Let's truncate the table in order to prepare for Oozie imports and additionally describe the table to demonstrate it is in fact in ORC

0: jdbc:hive2://localhost:10000> truncate table default.imported; No rows affected (0.4 seconds) 0: jdbc:hive2://localhost:10000> describe formatted imported; | SerDe Library: | org.apache.hadoop.hive.ql.io.orc.OrcSerde | NULL | | InputFormat: | org.apache.hadoop.hive.ql.io.orc.OrcInputFormat | NULL | | OutputFormat: | org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat

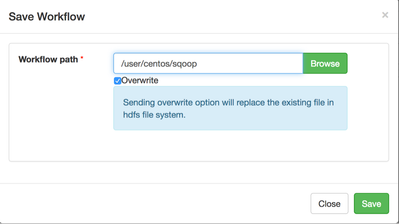

I'm ready to start working on a workflow, let's import sqoop action and save workflow to create a directory for it.

I want to make sure I have a valid directory so I could upload a few files that are necessary for this to complete successfully.

hdfs dfs -mkdir /user/centos/sqoop/lib hdfs dfs -put /usr/share/java/mysql-connector-java-5.1.37.jar /user/centos/sqoop/lib/ hdfs dfs -put /etc/hive/conf/hive-site.xml /user/centos/sqoop/lib/ hdfs dfs -put /etc/tez/conf/tez-site.xml /user/centos/sqoop/lib/

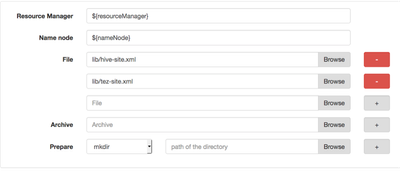

I'm going to use my own MySQL driver than the one in the sharelib and therefore I'm uploading it to my wf, again if you update the sharelib with associated jar you don't have to do that. Secondly, I'm going to include hive-site.xml and tez-site.xml. Until 2.5, you only needed hive-site.xml but now we also need tez-site.xml. It is a small fact that will save you a lot of hours of debugging, trust me I know.

Your wf lib directory should look like so

hdfs dfs -ls /user/centos/sqoop/lib/ Found 3 items -rw-r--r-- 3 centos hdfs 19228 2017-02-18 15:38 /user/centos/sqoop/lib/hive-site.xml -rw-r--r-- 3 centos hdfs 977873 2017-02-18 15:37 /user/centos/sqoop/lib/mysql-connector-java-5.1.37.jar -rw-r--r-- 3 centos hdfs 6737 2017-02-18 15:38 /user/centos/sqoop/lib/tez-site.xml

Finally, I want to modify my sqoop command as I no longer need --create-hcatalog-table command and want to replace $USER argument with my username, you can also use Oozie EL functions for string replacement.

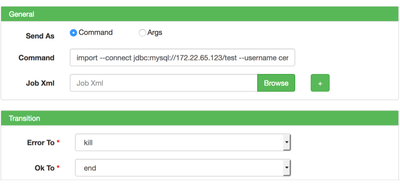

import --connect jdbc:mysql://172.22.65.123/test --username centos --password-file /user/centos/.password --table imported --hcatalog-table imported --hcatalog-home /usr/hdp/current/hive-webhcat --map-column-hive value=STRING --split-by rowkey

That's what my command will look like in Oozie, notice missing "sqoop" command, it's inferred when you select sqoop action in WFM.

Edit the sqoop action on WFM canvas and enter the command in. We are working on refreshing the UI before WFM is released and your dialog box may look slightly different but fields should remain the same.

Let's tell WFM that we also expect tez-site.xml and hive-site.xml files

Finally, we need to tell Oozie that we will pull in HCatalog and Hive jars for this to work

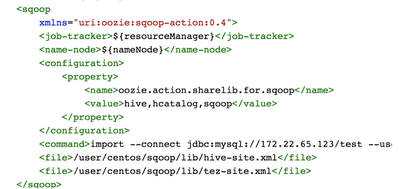

At this point my wf is finished, let's inspect the XML.

When you submit the job, it should succeed and you can look at the results.

Again, this is more efficient and actually works on HDP 2.5+, I highly recommend checking out WFM and hcatalog options in Sqoop.