
- Apache Deep Learning 101: Using Apache MXNet on Ap...


Created on 02-26-2018 09:36 PM - edited 08-17-2019 08:41 AM

This is for people preparing to attend my talk on Deep Learning at DataWorks Summit Berlin 2018 (https://dataworkssummit.com/berlin-2018/#agenda) on Thursday, April 19, 2018 at 11:50 AM Berlin time.

Motivation: Use the Power of Our Massive HDP Hadoop Cluster and YARN to Run My Apache MXNet

For a real-world Deep Learning problem, you would want some GPUs in your cluster, and in the near term YARN 3.0 will allow you to manage those GPU resources. For now, install a few GPUs in a special HDP cluster dedicated to training Data Science jobs. You can make this cluster compute- and RAM-heavy for use by TensorFlow, Apache MXNet, Apache Spark and other YARN workloads.

In my example, we are running Inception against an image. The image could come from a security camera, a drone or another industrial source.

We will dive deeper into industrial IIOT use cases here:

If you are in Philadelphia, please join me. If not, all of the content will be shared on SlideShare, GitHub and here.

To set this up, we will be running Apache MXNet on CentOS 7 HDP 2.6.4 nodes. We are going to run Apache MXNet Python scripts on our Hadoop cluster! Let's get this installed!

git clone https://github.com/apache/incubator-mxnet.git

The installation instructions at Apache MXNet's website (http://mxnet.incubator.apache.org/install/index.html) are amazing. Pick your platform and your style. I am taking the simplest route: the Linux path with pip.

We need OpenCV to handle images in Python, so we install it along with the build tools required to build both OpenCV and Apache MXNet.

Follow the install details here: https://community.hortonworks.com/articles/174227/apache-deep-learning-101-using-apache-mxnet-on-an....

YARN Cluster Submit for Apache MXNet

This requires some additional libraries and the Java JDK to compile the DMLC submission code.

yum install java-1.8.0-openjdk

yum install java-1.8.0-openjdk-devel

pip install kubernetes

git clone https://github.com/dmlc/dmlc-core.git

cd dmlc-core

make

cd tracker/yarn

./build.sh

To run my example that saves to HDFS

export JAVA_HOME=/usr/jdk64/jdk1.8.0_112

pip install pydoop
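The article does not show how analyzeyarn.py writes to HDFS, so the following is only a minimal sketch of one way a script could do it with pydoop. It assumes that `pydoop.hdfs.dump(data, hdfs_path)` is available in the installed pydoop version. The function name `save_result_to_hdfs`, the `write_fn` parameter and the example path are hypothetical and are not taken from the original script.

```python
import json


def save_result_to_hdfs(result, hdfs_path, write_fn=None):
    """Serialize `result` as JSON and write it to `hdfs_path`.

    `write_fn(data, path)` is injectable so the function can be tried
    without a cluster. By default it falls back to pydoop.hdfs.dump
    (an assumption about the installed pydoop version).
    """
    payload = json.dumps(result, sort_keys=True)
    if write_fn is None:
        # Imported lazily so this module still imports where pydoop is absent.
        import pydoop.hdfs as hdfs
        write_fn = hdfs.dump
    write_fn(payload, hdfs_path)
    return payload
```

On a cluster node this might be called as `save_result_to_hdfs({"label": "cat"}, "/tmp/mxnet/result.json")`, where both the record and the path are placeholders. Passing a plain function as `write_fn` lets you check the serialization locally before touching HDFS.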

You will need to match your version of Hadoop and JDK. You will need to be on an HDP node or have an HDP client with all the correct environment variables!
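Before submitting, it can help to confirm that the environment variables used in this article are actually set and point at real directories. The sketch below checks `JAVA_HOME`, `HADOOP_HOME` and `HADOOP_HDFS_HOME`, which are the names exported in this article. The function name `check_hadoop_env` and the choice of which variables to treat as required are assumptions made for illustration.

```python
import os

# Variable names taken from the exports shown in this article.
REQUIRED_VARS = ("JAVA_HOME", "HADOOP_HOME", "HADOOP_HDFS_HOME")


def check_hadoop_env(environ=None, required=REQUIRED_VARS):
    """Return a list of human-readable problems; an empty list means OK."""
    environ = os.environ if environ is None else environ
    problems = []
    for name in required:
        value = environ.get(name)
        if not value:
            problems.append("%s is not set" % name)
        elif not os.path.isdir(value):
            problems.append("%s points to a missing directory: %s" % (name, value))
    return problems


if __name__ == "__main__":
    # Print one line per problem; prints nothing when everything is in place.
    for problem in check_hadoop_env():
        print(problem)
```

This only verifies that the paths exist. It does not verify that the JDK and Hadoop versions match your cluster, which still has to be checked by hand.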

source yarnsubmit.sh

export HADOOP_HOME=/usr/hdp/2.6.4.0-91/hadoop

export HADOOP_HDFS_HOME=/usr/hdp/2.6.4.0-91/hadoop-hdfs

export hdfs_home=/usr/hdp/2.6.4.0-91/hadoop-hdfs

export hadoop_hdfs_home=/usr/hdp/2.6.4.0-91/hadoop-hdfs

/opt/demo/dmlc-core/tracker/dmlc-submit --cluster yarn --num-workers 1 --server-cores 2 --server-memory 1G --log-level DEBUG --log-file /opt/demo/logs/mxnet.log /opt/demo/incubator-mxnet/analyzeyarn.py

We are using the DMLC Job Tracker (https://github.com/dmlc/dmlc-core/tree/master/tracker) for YARN job submission. You will need to git clone the dmlc-core repository to get it.
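If you prefer to drive the submission from Python rather than a shell script, the same dmlc-submit invocation can be assembled as an argument list. This is a sketch only: it uses just the flags that appear in the command above, and the function names `build_dmlc_submit_command` and `submit` are hypothetical helpers, not part of dmlc-core.

```python
import subprocess


def build_dmlc_submit_command(script,
                              submit_path="/opt/demo/dmlc-core/tracker/dmlc-submit",
                              num_workers=1,
                              server_cores=2,
                              server_memory="1G",
                              log_level="DEBUG",
                              log_file=None):
    """Build the dmlc-submit argument list used in this article."""
    cmd = [
        submit_path,
        "--cluster", "yarn",
        "--num-workers", str(num_workers),
        "--server-cores", str(server_cores),
        "--server-memory", server_memory,
        "--log-level", log_level,
    ]
    if log_file:
        cmd += ["--log-file", log_file]
    # The Python script to run on the cluster goes last.
    cmd.append(script)
    return cmd


def submit(script, **kwargs):
    """Run dmlc-submit without a shell; raises CalledProcessError on failure."""
    return subprocess.check_call(build_dmlc_submit_command(script, **kwargs))
```

Keeping command construction separate from execution makes it easy to print or log the exact command before it runs. The Hadoop environment variables still need to be exported in the process that calls `submit`.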

I have installed it on an HDP node as follows: /opt/demo/incubator-mxnet

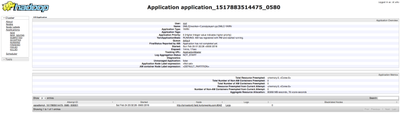

When I run my program, I can examine the Apache YARN logs via the command line tool like so:

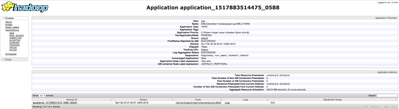

yarn logs -applicationId application_1517883514475_0588
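The aggregated output of this command is long, so it can be handy to split it into one section per container and log type. The sketch below relies only on the `Container:`, `LogType:`, `LogContents:` and `End of LogType:` marker lines visible in the output of this run. The function name `split_yarn_logs` is hypothetical, and other Hadoop versions may format these markers differently.

```python
def split_yarn_logs(text):
    """Split `yarn logs` output into {(container_id, log_type): [lines]}."""
    sections = {}
    container = None
    log_type = None
    in_contents = False
    for line in text.splitlines():
        if line.startswith("End of LogType:"):
            # Closing marker: stop collecting lines for this log.
            log_type = None
            in_contents = False
        elif in_contents:
            sections[(container, log_type)].append(line)
        elif line.startswith("Container: "):
            # Header looks like "Container: <id> on <host>_<port>".
            container = line.split()[1]
        elif line.startswith("LogType:"):
            log_type = line[len("LogType:"):].strip()
        elif line.startswith("LogContents:") and log_type is not None:
            sections[(container, log_type)] = []
            in_contents = True
    return sections
```

You could feed it the text captured from `yarn logs -applicationId <your application id>` and then look up, for example, the `stderr` entry of a given container. The raw output from my run follows.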

18/02/25 02:41:56 INFO client.RMProxy: Connecting to ResourceManager at princeton0.field.hortonworks.com/172.26.200.216:8050

18/02/25 02:41:57 INFO client.AHSProxy: Connecting to Application History server at princeton0.field.hortonworks.com/172.26.200.216:10200

18/02/25 02:42:00 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library

18/02/25 02:42:00 INFO compress.CodecPool: Got brand-new decompressor [.deflate]

Container: container_e01_1517883514475_0588_01_000001 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:directory.info

LogLastModifiedTime:Sun Feb 25 02:40:08 +0000 2018

LogLength:2119

LogContents:

ls -l:

total 20

lrwxrwxrwx. 1 yarn hadoop 101 Feb 25 02:40 analyzeyarn.py -> /hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/11/analyzeyarn.py

-rw-r--r--. 1 yarn hadoop 75 Feb 25 02:40 container_tokens

-rwx------. 1 yarn hadoop 653 Feb 25 02:40 default_container_executor_session.sh

-rwx------. 1 yarn hadoop 707 Feb 25 02:40 default_container_executor.sh

lrwxrwxrwx. 1 yarn hadoop 100 Feb 25 02:40 dmlc-yarn.jar -> /hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/12/dmlc-yarn.jar

-rwx------. 1 yarn hadoop 4808 Feb 25 02:40 launch_container.sh

lrwxrwxrwx. 1 yarn hadoop 98 Feb 25 02:40 launcher.py -> /hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/10/launcher.py

drwx--x---. 2 yarn hadoop 6 Feb 25 02:40 tmp

find -L . -maxdepth 5 -ls:

419611738 4 drwx--x--- 3 yarn hadoop 4096 Feb 25 02:40 .

436305675 0 drwx--x--- 2 yarn hadoop 6 Feb 25 02:40 ./tmp

419611739 4 -rw-r--r-- 1 yarn hadoop 75 Feb 25 02:40 ./container_tokens

419611740 4 -rw-r--r-- 1 yarn hadoop 12 Feb 25 02:40 ./.container_tokens.crc

419611741 8 -rwx------ 1 yarn hadoop 4808 Feb 25 02:40 ./launch_container.sh

419611742 4 -rw-r--r-- 1 yarn hadoop 48 Feb 25 02:40 ./.launch_container.sh.crc

419611743 4 -rwx------ 1 yarn hadoop 653 Feb 25 02:40 ./default_container_executor_session.sh

419611744 4 -rw-r--r-- 1 yarn hadoop 16 Feb 25 02:40 ./.default_container_executor_session.sh.crc

419611745 4 -rwx------ 1 yarn hadoop 707 Feb 25 02:40 ./default_container_executor.sh

419611746 4 -rw-r--r-- 1 yarn hadoop 16 Feb 25 02:40 ./.default_container_executor.sh.crc

394926208 24 -r-x------ 1 yarn hadoop 21427 Feb 25 02:40 ./dmlc-yarn.jar

361654889 4 -r-x------ 1 yarn hadoop 2765 Feb 25 02:40 ./launcher.py

378183873 4 -r-x------ 1 yarn hadoop 3815 Feb 25 02:40 ./analyzeyarn.py

broken symlinks(find -L . -maxdepth 5 -type l -ls):

End of LogType:directory.info

*******************************************************************************

Container: container_e01_1517883514475_0588_01_000001 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:launch_container.sh

LogLastModifiedTime:Sun Feb 25 02:40:08 +0000 2018

LogLength:4808

LogContents:

#!/bin/bash

set -o pipefail -e

export PRELAUNCH_OUT="/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/prelaunch.out"

exec >"${PRELAUNCH_OUT}"

export PRELAUNCH_ERR="/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/prelaunch.err"

exec 2>"${PRELAUNCH_ERR}"

echo "Setting up env variables"

export PATH="/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/var/lib/ambari-agent"

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/usr/hdp/2.6.4.0-91/hadoop/conf"}

export DMLC_NUM_SERVER="0"

export MAX_APP_ATTEMPTS="2"

export DMLC_WORKER_CORES="1"

export DMLC_WORKER_MEMORY_MB="1024"

export DMLC_SERVER_MEMORY_MB="1024"

export JAVA_HOME=${JAVA_HOME:-"/usr/jdk64/jdk1.8.0_112"}

export LANG="en_US.UTF-8"

export APP_SUBMIT_TIME_ENV="1519526407793"

export NM_HOST="princeton0.field.hortonworks.com"

export DMLC_JOB_ARCHIVES=""

export DMLC_SERVER_CORES="2"

export LOGNAME="root"

export JVM_PID="$$"

export DMLC_TRACKER_PORT="9091"

export PWD="/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001"

export LOCAL_DIRS="/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588"

export APPLICATION_WEB_PROXY_BASE="/proxy/application_1517883514475_0588"

export NM_HTTP_PORT="8042"

export DMLC_TRACKER_URI="172.26.200.216"

export LOG_DIRS="/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001"

export NM_AUX_SERVICE_mapreduce_shuffle="AAA0+gAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA=

"

export NM_PORT="45454"

export USER="root"

export HADOOP_YARN_HOME=${HADOOP_YARN_HOME:-"/usr/hdp/2.6.4.0-91/hadoop-yarn"}

export CLASSPATH="$CLASSPATH:.:*:/usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop/*:/usr/hdp/2.6.4.0-91/hadoop/lib/*:/usr/hdp/current/hadoop-hdfs-client/*:/usr/hdp/current/hadoop-hdfs-client/lib/*:/usr/hdp/current/hadoop-yarn-client/*:/usr/hdp/current/hadoop-yarn-client/lib/*:/usr/hdp/current/ext/hadoop/*"

export DMLC_NUM_WORKER="1"

export DMLC_JOB_CLUSTER="yarn"

export HADOOP_TOKEN_FILE_LOCATION="/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/container_tokens"

export NM_AUX_SERVICE_spark_shuffle=""

export LOCAL_USER_DIRS="/hadoop/yarn/local/usercache/root/"

export HADOOP_HOME="/usr/hdp/2.6.4.0-91/hadoop"

export HOME="/home/"

export NM_AUX_SERVICE_spark2_shuffle=""

export CONTAINER_ID="container_e01_1517883514475_0588_01_000001"

export MALLOC_ARENA_MAX="4"

echo "Setting up job resources"

ln -sf "/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/12/dmlc-yarn.jar" "dmlc-yarn.jar"

ln -sf "/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/10/launcher.py" "launcher.py"

ln -sf "/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/11/analyzeyarn.py" "analyzeyarn.py"

echo "Copying debugging information"

# Creating copy of launch script

cp "launch_container.sh" "/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/launch_container.sh"

chmod 640 "/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/launch_container.sh"

# Determining directory contents

echo "ls -l:" 1>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/directory.info"

ls -l 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/directory.info"

echo "find -L . -maxdepth 5 -ls:" 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/directory.info"

find -L . -maxdepth 5 -ls 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/directory.info"

echo "broken symlinks(find -L . -maxdepth 5 -type l -ls):" 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/directory.info"

find -L . -maxdepth 5 -type l -ls 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/directory.info"

echo "Launching container"

exec /bin/bash -c "$JAVA_HOME/bin/java -Xmx900m org.apache.hadoop.yarn.dmlc.ApplicationMaster -file "hdfs:/tmp/temp-dmlc-yarn-application_1517883514475_0588/launcher.py#launcher.py" -file "hdfs:/tmp/temp-dmlc-yarn-application_1517883514475_0588/analyzeyarn.py#analyzeyarn.py" -file "hdfs:/tmp/temp-dmlc-yarn-application_1517883514475_0588/dmlc-yarn.jar#dmlc-yarn.jar" ./launcher.py ./analyzeyarn.py 1>/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/stdout 2>/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000001/stderr"

End of LogType:launch_container.sh

************************************************************************************

End of LogType:stdout

***********************************************************************

Container: container_e01_1517883514475_0588_01_000001 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:prelaunch.out

LogLastModifiedTime:Sun Feb 25 02:40:08 +0000 2018

LogLength:100

LogContents:

Setting up env variables

Setting up job resources

Copying debugging information

Launching container

End of LogType:prelaunch.out

******************************************************************************

Container: container_e01_1517883514475_0588_01_000001 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:stderr

LogLastModifiedTime:Sun Feb 25 02:40:23 +0000 2018

LogLength:18617

LogContents:

18/02/25 02:40:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/02/25 02:40:12 WARN shortcircuit.DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.

18/02/25 02:40:12 INFO dmlc.ApplicationMaster: Start AM as user=yarn

18/02/25 02:40:12 INFO dmlc.ApplicationMaster: Try to start 0 Servers and 1 Workers

18/02/25 02:40:12 INFO client.RMProxy: Connecting to ResourceManager at princeton0.field.hortonworks.com/172.26.200.216:8030

18/02/25 02:40:13 INFO impl.NMClientAsyncImpl: Upper bound of the thread pool size is 500

18/02/25 02:40:13 INFO impl.ContainerManagementProtocolProxy: yarn.client.max-cached-nodemanagers-proxies : 0

18/02/25 02:40:13 INFO dmlc.ApplicationMaster: [DMLC] ApplicationMaster started

18/02/25 02:40:15 INFO impl.AMRMClientImpl: Received new token for : princeton0.field.hortonworks.com:45454

18/02/25 02:40:15 INFO dmlc.ApplicationMaster: {launcher.py=resource { scheme: "hdfs" port: -1 file: "/tmp/temp-dmlc-yarn-application_1517883514475_0588/launcher.py" } size: 2765 timestamp: 1519526407236 type: FILE visibility: APPLICATION, analyzeyarn.py=resource { scheme: "hdfs" port: -1 file: "/tmp/temp-dmlc-yarn-application_1517883514475_0588/analyzeyarn.py" } size: 3815 timestamp: 1519526407703 type: FILE visibility: APPLICATION, dmlc-yarn.jar=resource { scheme: "hdfs" port: -1 file: "/tmp/temp-dmlc-yarn-application_1517883514475_0588/dmlc-yarn.jar" } size: 21427 timestamp: 1519526407738 type: FILE visibility: APPLICATION}

18/02/25 02:40:15 INFO dmlc.ApplicationMaster: {PYTHONPATH=${PYTHONPATH}:., DMLC_NUM_SERVER=0, DMLC_NODE_HOST=princeton0.field.hortonworks.com, DMLC_ROLE=worker, DMLC_WORKER_CORES=1, DMLC_WORKER_MEMORY_MB=1024, DMLC_SERVER_MEMORY_MB=1024, DMLC_TRACKER_URI=172.26.200.216, CLASSPATH=${CLASSPATH}:./*:/usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop//azure-data-lake-store-sdk-2.1.4.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-annotations-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-annotations.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-auth-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-auth.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-aws-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-aws.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure-datalake-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure-datalake.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common-2.7.3.2.6.4.0-91-tests.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common-tests.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-nfs-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-nfs.jar:/usr/hdp/2.6.4.0-91/hadoop//:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-core-asl-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//ranger-hdfs-plugin-shim-0.7.0.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//nimbus-jose-jwt-3.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//ranger-plugin-classloader-0.7.0.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-databind-2.2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//ranger-yarn-plugin-shim-0.7.0.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//activation-1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jetty-6.1.26.hwx.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-jaxrs-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//apacheds-kerberos-codec
-2.0.0-M15.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jetty-sslengine-6.1.26.hwx.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jetty-util-6.1.26.hwx.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//api-util-1.0.0-M20.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//asm-3.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//avro-1.7.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//joda-time-2.9.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//aws-java-sdk-core-1.10.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jsch-0.1.54.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//aws-java-sdk-kms-1.10.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//json-smart-1.1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//aws-java-sdk-s3-1.10.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//azure-keyvault-core-0.8.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jsp-api-2.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//azure-storage-5.4.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jsr305-3.0.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-beanutils-1.7.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jersey-core-1.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-beanutils-core-1.8.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-cli-1.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//junit-4.11.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-codec-1.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jersey-json-1.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-collections-3.2.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//log4j-1.2.17.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-compress-1.4.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jersey-server-1.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-configuration-1.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//mockito-all-1.8.5.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-digester-1.8.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-io-2.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-lang-2.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//netty-3.6.2.Final.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-lang3-3.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//paranamer-2.3.jar:/usr/hdp
/2.6.4.0-91/hadoop/lib//commons-logging-1.1.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//protobuf-java-2.5.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-math3-3.1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-net-3.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//servlet-api-2.5.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//curator-client-2.7.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//slf4j-api-1.7.10.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//curator-framework-2.7.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//slf4j-log4j12-1.7.10.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//curator-recipes-2.7.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//gson-2.2.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//guava-11.0.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//snappy-java-1.0.4.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//hamcrest-core-1.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jets3t-0.9.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//htrace-core-3.1.0-incubating.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//httpclient-4.5.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//httpcore-4.4.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jettison-1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-annotations-2.2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//stax-api-1.0-2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-core-2.2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-xc-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//xmlenc-0.52.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//java-xmlbuilder-0.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jaxb-api-2.2.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//zookeeper-3.4.6.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jaxb-impl-2.2.3-1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//xz-1.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jcip-annotations-1.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-2.7.3.2.6.4.0-91-tests.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-nfs-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-nfs.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-tests.jar:/usr/hdp/curr
ent/hadoop-hdfs-client//hadoop-hdfs.jar:/usr/hdp/current/hadoop-hdfs-client//:/usr/hdp/current/hadoop-hdfs-client/lib//asm-3.2.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-cli-1.2.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-codec-1.4.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-daemon-1.0.13.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-io-2.4.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-lang-2.6.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-logging-1.1.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//guava-11.0.2.jar:/usr/hdp/current/hadoop-hdfs-client/lib//htrace-core-3.1.0-incubating.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-annotations-2.2.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-core-2.2.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-core-asl-1.9.13.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-databind-2.2.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-mapper-asl-1.9.13.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jersey-core-1.9.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jersey-server-1.9.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jetty-6.1.26.hwx.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jetty-util-6.1.26.hwx.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jsr305-3.0.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//leveldbjni-all-1.8.jar:/usr/hdp/current/hadoop-hdfs-client/lib//log4j-1.2.17.jar:/usr/hdp/current/hadoop-hdfs-client/lib//netty-3.6.2.Final.jar:/usr/hdp/current/hadoop-hdfs-client/lib//netty-all-4.0.52.Final.jar:/usr/hdp/current/hadoop-hdfs-client/lib//okhttp-2.4.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//okio-1.4.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//protobuf-java-2.5.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//servlet-api-2.5.jar:/usr/hdp/current/hadoop-hdfs-client/lib//xercesImpl-2.9.1.jar:/usr/hdp/current/hadoop-hdfs-client/lib//xml-apis-1.3.04.jar:/usr/hdp/current/hadoop-hdfs-client/lib//xmlenc-0.52.jar:/usr/hdp/current/hadoop-hdfs-c
lient/lib//:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-api-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-api.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-distributedshell-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-distributedshell.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-unmanaged-am-launcher-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-unmanaged-am-launcher.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-client-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-client.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-common-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-common.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-registry-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-registry.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-applicationhistoryservice-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-applicationhistoryservice.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-common-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-common.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-nodemanager-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-nodemanager.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-resourcemanager-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-resourcemanager.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-sharedcachemanager-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-sharedcachemanager.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-tests-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-tests.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-timeline-plugins
torage-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-timeline-pluginstorage.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-web-proxy-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-web-proxy.jar:/usr/hdp/current/hadoop-yarn-client//:/usr/hdp/current/hadoop-yarn-client/lib//activation-1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//aopalliance-1.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-guice-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//apacheds-i18n-2.0.0-M15.jar:/usr/hdp/current/hadoop-yarn-client/lib//javassist-3.18.1-GA.jar:/usr/hdp/current/hadoop-yarn-client/lib//apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-json-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//api-asn1-api-1.0.0-M20.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-server-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//api-util-1.0.0-M20.jar:/usr/hdp/current/hadoop-yarn-client/lib//asm-3.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//avro-1.7.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//javax.inject-1.jar:/usr/hdp/current/hadoop-yarn-client/lib//azure-keyvault-core-0.8.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jets3t-0.9.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//azure-storage-5.4.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//log4j-1.2.17.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-beanutils-1.7.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jaxb-api-2.2.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-beanutils-core-1.8.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-cli-1.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//leveldbjni-all-1.8.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-codec-1.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//jaxb-impl-2.2.3-1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-collections-3.2.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//metrics-core-3.0.1.jar:/usr/hdp/current/hadoop-yarn-cli
ent/lib//commons-compress-1.4.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//json-smart-1.1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-configuration-1.6.jar:/usr/hdp/current/hadoop-yarn-client/lib//netty-3.6.2.Final.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-digester-1.8.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-io-2.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-lang-2.6.jar:/usr/hdp/current/hadoop-yarn-client/lib//nimbus-jose-jwt-3.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-lang3-3.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//objenesis-2.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-logging-1.1.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//paranamer-2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-math3-3.1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-net-3.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//protobuf-java-2.5.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//curator-client-2.7.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//servlet-api-2.5.jar:/usr/hdp/current/hadoop-yarn-client/lib//curator-framework-2.7.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//snappy-java-1.0.4.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//curator-recipes-2.7.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//fst-2.24.jar:/usr/hdp/current/hadoop-yarn-client/lib//gson-2.2.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//guava-11.0.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//guice-3.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//stax-api-1.0-2.jar:/usr/hdp/current/hadoop-yarn-client/lib//guice-servlet-3.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jcip-annotations-1.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//htrace-core-3.1.0-incubating.jar:/usr/hdp/current/hadoop-yarn-client/lib//httpclient-4.5.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//httpcore-4.4.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-client-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-annotations-2.2.3.jar:/usr/h
dp/current/hadoop-yarn-client/lib//xmlenc-0.52.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-core-2.2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//xz-1.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-core-asl-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//zookeeper-3.4.6.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-databind-2.2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//zookeeper-3.4.6.2.6.4.0-91-tests.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-jaxrs-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-core-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-mapper-asl-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-xc-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//java-xmlbuilder-0.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//jettison-1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//jetty-6.1.26.hwx.jar:/usr/hdp/current/hadoop-yarn-client/lib//jsp-api-2.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//jetty-sslengine-6.1.26.hwx.jar:/usr/hdp/current/hadoop-yarn-client/lib//jetty-util-6.1.26.hwx.jar:/usr/hdp/current/hadoop-yarn-client/lib//jsch-0.1.54.jar:/usr/hdp/current/hadoop-yarn-client/lib//jsr305-3.0.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//:/usr/hdp/current/ext/hadoop//, DMLC_NUM_WORKER=1, DMLC_JOB_CLUSTER=yarn, DMLC_JOB_ARCHIVES=, LD_LIBRARY_PATH=, DMLC_SERVER_CORES=2, DMLC_NUM_ATTEMPT=0, DMLC_TRACKER_PORT=9091, DMLC_TASK_ID=0}

18/02/25 02:40:15 INFO impl.NMClientAsyncImpl: Processing Event EventType: START_CONTAINER for Container container_e01_1517883514475_0588_01_000002

18/02/25 02:40:15 INFO impl.ContainerManagementProtocolProxy: Opening proxy : princeton0.field.hortonworks.com:45454

18/02/25 02:40:15 INFO dmlc.ApplicationMaster: onContainerStarted Invoked

18/02/25 02:40:23 INFO dmlc.ApplicationMaster: Application completed. Stopping running containers

18/02/25 02:40:23 INFO impl.NMClientAsyncImpl: NM Client is being stopped.

18/02/25 02:40:23 INFO impl.NMClientAsyncImpl: Waiting for eventDispatcherThread to be interrupted.

18/02/25 02:40:23 INFO impl.NMClientAsyncImpl: eventDispatcherThread exited.

18/02/25 02:40:23 INFO impl.NMClientAsyncImpl: Stopping NM client.

18/02/25 02:40:23 INFO impl.NMClientImpl: Clean up running containers on stop.

18/02/25 02:40:23 INFO impl.NMClientImpl: Stopping container_e01_1517883514475_0588_01_000002

18/02/25 02:40:23 INFO impl.NMClientImpl: ok, stopContainerInternal.. container_e01_1517883514475_0588_01_000002

18/02/25 02:40:23 INFO impl.ContainerManagementProtocolProxy: Opening proxy : princeton0.field.hortonworks.com:45454

18/02/25 02:40:23 INFO impl.NMClientImpl: Running containers cleaned up. Stopping NM proxies.

18/02/25 02:40:23 INFO impl.NMClientImpl: Stopped all proxies.

18/02/25 02:40:23 INFO impl.NMClientAsyncImpl: NMClient stopped.

18/02/25 02:40:23 INFO dmlc.ApplicationMaster: Diagnostics., num_tasks1, finished=1, failed=0

18/02/25 02:40:23 INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

End of LogType:stderr

***********************************************************************

End of LogType:prelaunch.err

******************************************************************************

Container: container_e01_1517883514475_0588_01_000002 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:directory.info

LogLastModifiedTime:Sun Feb 25 02:40:15 +0000 2018

LogLength:2127

LogContents:

ls -l:

total 32

lrwxrwxrwx. 1 yarn hadoop 101 Feb 25 02:40 analyzeyarn.py -> /hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/11/analyzeyarn.py

-rw-r--r--. 1 yarn hadoop 94 Feb 25 02:40 container_tokens

-rwx------. 1 yarn hadoop 653 Feb 25 02:40 default_container_executor_session.sh

-rwx------. 1 yarn hadoop 707 Feb 25 02:40 default_container_executor.sh

lrwxrwxrwx. 1 yarn hadoop 100 Feb 25 02:40 dmlc-yarn.jar -> /hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/12/dmlc-yarn.jar

-rwx------. 1 yarn hadoop 19213 Feb 25 02:40 launch_container.sh

lrwxrwxrwx. 1 yarn hadoop 98 Feb 25 02:40 launcher.py -> /hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/10/launcher.py

drwx--x---. 2 yarn hadoop 6 Feb 25 02:40 tmp

find -L . -maxdepth 5 -ls:

453027956 4 drwx--x--- 3 yarn hadoop 4096 Feb 25 02:40 .

470001419 0 drwx--x--- 2 yarn hadoop 6 Feb 25 02:40 ./tmp

453027961 4 -rw-r--r-- 1 yarn hadoop 94 Feb 25 02:40 ./container_tokens

453027962 4 -rw-r--r-- 1 yarn hadoop 12 Feb 25 02:40 ./.container_tokens.crc

453027963 20 -rwx------ 1 yarn hadoop 19213 Feb 25 02:40 ./launch_container.sh

453027964 4 -rw-r--r-- 1 yarn hadoop 160 Feb 25 02:40 ./.launch_container.sh.crc

453027965 4 -rwx------ 1 yarn hadoop 653 Feb 25 02:40 ./default_container_executor_session.sh

453027966 4 -rw-r--r-- 1 yarn hadoop 16 Feb 25 02:40 ./.default_container_executor_session.sh.crc

453027967 4 -rwx------ 1 yarn hadoop 707 Feb 25 02:40 ./default_container_executor.sh

453418137 4 -rw-r--r-- 1 yarn hadoop 16 Feb 25 02:40 ./.default_container_executor.sh.crc

394926208 24 -r-x------ 1 yarn hadoop 21427 Feb 25 02:40 ./dmlc-yarn.jar

361654889 4 -r-x------ 1 yarn hadoop 2765 Feb 25 02:40 ./launcher.py

378183873 4 -r-x------ 1 yarn hadoop 3815 Feb 25 02:40 ./analyzeyarn.py

broken symlinks(find -L . -maxdepth 5 -type l -ls):

End of LogType:directory.info

*******************************************************************************

Container: container_e01_1517883514475_0588_01_000002 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:prelaunch.out

LogLastModifiedTime:Sun Feb 25 02:40:15 +0000 2018

LogLength:100

LogContents:

Setting up env variables

Setting up job resources

Copying debugging information

Launching container

End of LogType:prelaunch.out

******************************************************************************

Container: container_e01_1517883514475_0588_01_000002 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:stdout

LogLastModifiedTime:Sun Feb 25 02:40:23 +0000 2018

LogLength:411

LogContents:

{"top1pct": "67.6", "top5": "n03485794 handkerchief, hankie, hanky, hankey", "top4": "n04590129 window shade", "top3": "n03938244 pillow", "top2": "n04589890 window screen", "top1": "n02883205 bow tie, bow-tie, bowtie", "top2pct": "11.5", "imagefilename": "/opt/demo/incubator-mxnet/nanotie7.png", "top3pct": "4.5", "uuid": "mxnet_uuid_img_20180225024017", "top4pct": "2.8", "top5pct": "2.8", "runtime": "5.0"}

End of LogType:stdout

***********************************************************************

End of LogType:prelaunch.err

******************************************************************************

Container: container_e01_1517883514475_0588_01_000002 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:launch_container.sh

LogLastModifiedTime:Sun Feb 25 02:40:15 +0000 2018

LogLength:19213

LogContents:

#!/bin/bash

set -o pipefail -e

export PRELAUNCH_OUT="/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/prelaunch.out"

exec >"${PRELAUNCH_OUT}"

export PRELAUNCH_ERR="/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/prelaunch.err"

exec 2>"${PRELAUNCH_ERR}"

echo "Setting up env variables"

export PATH="/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/var/lib/ambari-agent"

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/usr/hdp/2.6.4.0-91/hadoop/conf"}

export DMLC_NUM_SERVER="0"

export DMLC_NODE_HOST="princeton0.field.hortonworks.com"

export DMLC_WORKER_CORES="1"

export DMLC_WORKER_MEMORY_MB="1024"

export DMLC_SERVER_MEMORY_MB="1024"

export JAVA_HOME=${JAVA_HOME:-"/usr/jdk64/jdk1.8.0_112"}

export LANG="en_US.UTF-8"

export NM_HOST="princeton0.field.hortonworks.com"

export DMLC_JOB_ARCHIVES=""

export LD_LIBRARY_PATH=""

export DMLC_SERVER_CORES="2"

export DMLC_NUM_ATTEMPT="0"

export LOGNAME="root"

export JVM_PID="$$"

export DMLC_TRACKER_PORT="9091"

export PWD="/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002"

export LOCAL_DIRS="/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588"

export PYTHONPATH="${PYTHONPATH}:."

export DMLC_ROLE="worker"

export NM_HTTP_PORT="8042"

export DMLC_TRACKER_URI="172.26.200.216"

export LOG_DIRS="/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002"

export NM_AUX_SERVICE_mapreduce_shuffle="AAA0+gAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA=

"

export NM_PORT="45454"

export USER="root"

export HADOOP_YARN_HOME=${HADOOP_YARN_HOME:-"/usr/hdp/2.6.4.0-91/hadoop-yarn"}

export CLASSPATH="${CLASSPATH}:./*:/usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop//azure-data-lake-store-sdk-2.1.4.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-annotations-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-annotations.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-auth-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-auth.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-aws-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-aws.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure-datalake-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure-datalake.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-azure.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common-2.7.3.2.6.4.0-91-tests.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common-tests.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-common.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-nfs-2.7.3.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop//hadoop-nfs.jar:/usr/hdp/2.6.4.0-91/hadoop//:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-core-asl-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//ranger-hdfs-plugin-shim-0.7.0.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//nimbus-jose-jwt-3.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//ranger-plugin-classloader-0.7.0.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-databind-2.2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//ranger-yarn-plugin-shim-0.7.0.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//activation-1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jetty-6.1.26.hwx.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-jaxrs-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jetty-sslengine-6.1.26.hwx.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jetty-util-6.1.26.hwx.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//api-util-1.0.0-M20.jar:/usr/hdp/2.6.4
.0-91/hadoop/lib//asm-3.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//avro-1.7.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//joda-time-2.9.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//aws-java-sdk-core-1.10.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jsch-0.1.54.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//aws-java-sdk-kms-1.10.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//json-smart-1.1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//aws-java-sdk-s3-1.10.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//azure-keyvault-core-0.8.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jsp-api-2.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//azure-storage-5.4.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jsr305-3.0.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-beanutils-1.7.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jersey-core-1.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-beanutils-core-1.8.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-cli-1.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//junit-4.11.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-codec-1.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jersey-json-1.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-collections-3.2.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//log4j-1.2.17.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-compress-1.4.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jersey-server-1.9.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-configuration-1.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//mockito-all-1.8.5.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-digester-1.8.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-io-2.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-lang-2.6.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//netty-3.6.2.Final.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-lang3-3.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//paranamer-2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-logging-1.1.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//protobuf-java-2.5.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-math3-3.1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//commons-net-3.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//servlet-api-2.5.ja
r:/usr/hdp/2.6.4.0-91/hadoop/lib//curator-client-2.7.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//slf4j-api-1.7.10.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//curator-framework-2.7.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//slf4j-log4j12-1.7.10.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//curator-recipes-2.7.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//gson-2.2.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//guava-11.0.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//snappy-java-1.0.4.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//hamcrest-core-1.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jets3t-0.9.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//htrace-core-3.1.0-incubating.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//httpclient-4.5.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//httpcore-4.4.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jettison-1.1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-annotations-2.2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//stax-api-1.0-2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-core-2.2.3.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jackson-xc-1.9.13.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//xmlenc-0.52.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//java-xmlbuilder-0.4.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jaxb-api-2.2.2.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//zookeeper-3.4.6.2.6.4.0-91.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jaxb-impl-2.2.3-1.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//xz-1.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//jcip-annotations-1.0.jar:/usr/hdp/2.6.4.0-91/hadoop/lib//:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-2.7.3.2.6.4.0-91-tests.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-nfs-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-nfs.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs-tests.jar:/usr/hdp/current/hadoop-hdfs-client//hadoop-hdfs.jar:/usr/hdp/current/hadoop-hdfs-client//:/usr/hdp/current/hadoop-hdfs-client/lib//asm-3.2.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-cli-1.2.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-codec-1.4.jar:/usr/hdp/
current/hadoop-hdfs-client/lib//commons-daemon-1.0.13.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-io-2.4.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-lang-2.6.jar:/usr/hdp/current/hadoop-hdfs-client/lib//commons-logging-1.1.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//guava-11.0.2.jar:/usr/hdp/current/hadoop-hdfs-client/lib//htrace-core-3.1.0-incubating.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-annotations-2.2.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-core-2.2.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-core-asl-1.9.13.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-databind-2.2.3.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jackson-mapper-asl-1.9.13.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jersey-core-1.9.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jersey-server-1.9.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jetty-6.1.26.hwx.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jetty-util-6.1.26.hwx.jar:/usr/hdp/current/hadoop-hdfs-client/lib//jsr305-3.0.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//leveldbjni-all-1.8.jar:/usr/hdp/current/hadoop-hdfs-client/lib//log4j-1.2.17.jar:/usr/hdp/current/hadoop-hdfs-client/lib//netty-3.6.2.Final.jar:/usr/hdp/current/hadoop-hdfs-client/lib//netty-all-4.0.52.Final.jar:/usr/hdp/current/hadoop-hdfs-client/lib//okhttp-2.4.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//okio-1.4.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//protobuf-java-2.5.0.jar:/usr/hdp/current/hadoop-hdfs-client/lib//servlet-api-2.5.jar:/usr/hdp/current/hadoop-hdfs-client/lib//xercesImpl-2.9.1.jar:/usr/hdp/current/hadoop-hdfs-client/lib//xml-apis-1.3.04.jar:/usr/hdp/current/hadoop-hdfs-client/lib//xmlenc-0.52.jar:/usr/hdp/current/hadoop-hdfs-client/lib//:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-api-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-api.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-distributedshell-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hado
op-yarn-client//hadoop-yarn-applications-distributedshell.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-unmanaged-am-launcher-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-applications-unmanaged-am-launcher.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-client-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-client.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-common-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-common.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-registry-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-registry.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-applicationhistoryservice-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-applicationhistoryservice.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-common-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-common.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-nodemanager-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-nodemanager.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-resourcemanager-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-resourcemanager.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-sharedcachemanager-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-sharedcachemanager.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-tests-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-tests.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-timeline-pluginstorage-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-timeline-pluginstorage.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-web-proxy-2.7.3.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client//hadoop-yarn-server-web-proxy.
jar:/usr/hdp/current/hadoop-yarn-client//:/usr/hdp/current/hadoop-yarn-client/lib//activation-1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//aopalliance-1.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-guice-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//apacheds-i18n-2.0.0-M15.jar:/usr/hdp/current/hadoop-yarn-client/lib//javassist-3.18.1-GA.jar:/usr/hdp/current/hadoop-yarn-client/lib//apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-json-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//api-asn1-api-1.0.0-M20.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-server-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//api-util-1.0.0-M20.jar:/usr/hdp/current/hadoop-yarn-client/lib//asm-3.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//avro-1.7.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//javax.inject-1.jar:/usr/hdp/current/hadoop-yarn-client/lib//azure-keyvault-core-0.8.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jets3t-0.9.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//azure-storage-5.4.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//log4j-1.2.17.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-beanutils-1.7.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jaxb-api-2.2.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-beanutils-core-1.8.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-cli-1.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//leveldbjni-all-1.8.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-codec-1.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//jaxb-impl-2.2.3-1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-collections-3.2.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//metrics-core-3.0.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-compress-1.4.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//json-smart-1.1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-configuration-1.6.jar:/usr/hdp/current/hadoop-yarn-client/lib//netty-3.6.2.Final.jar:/usr/hdp/current/hadoop-yarn-cli
ent/lib//commons-digester-1.8.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-io-2.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-lang-2.6.jar:/usr/hdp/current/hadoop-yarn-client/lib//nimbus-jose-jwt-3.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-lang3-3.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//objenesis-2.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-logging-1.1.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//paranamer-2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-math3-3.1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//commons-net-3.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//protobuf-java-2.5.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//curator-client-2.7.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//servlet-api-2.5.jar:/usr/hdp/current/hadoop-yarn-client/lib//curator-framework-2.7.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//snappy-java-1.0.4.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//curator-recipes-2.7.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//fst-2.24.jar:/usr/hdp/current/hadoop-yarn-client/lib//gson-2.2.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//guava-11.0.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//guice-3.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//stax-api-1.0-2.jar:/usr/hdp/current/hadoop-yarn-client/lib//guice-servlet-3.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jcip-annotations-1.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//htrace-core-3.1.0-incubating.jar:/usr/hdp/current/hadoop-yarn-client/lib//httpclient-4.5.2.jar:/usr/hdp/current/hadoop-yarn-client/lib//httpcore-4.4.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-client-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-annotations-2.2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//xmlenc-0.52.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-core-2.2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//xz-1.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-core-asl-1.9.13.jar:/usr/hdp/current/hadoop-yarn
-client/lib//zookeeper-3.4.6.2.6.4.0-91.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-databind-2.2.3.jar:/usr/hdp/current/hadoop-yarn-client/lib//zookeeper-3.4.6.2.6.4.0-91-tests.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-jaxrs-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//jersey-core-1.9.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-mapper-asl-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//jackson-xc-1.9.13.jar:/usr/hdp/current/hadoop-yarn-client/lib//java-xmlbuilder-0.4.jar:/usr/hdp/current/hadoop-yarn-client/lib//jettison-1.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//jetty-6.1.26.hwx.jar:/usr/hdp/current/hadoop-yarn-client/lib//jsp-api-2.1.jar:/usr/hdp/current/hadoop-yarn-client/lib//jetty-sslengine-6.1.26.hwx.jar:/usr/hdp/current/hadoop-yarn-client/lib//jetty-util-6.1.26.hwx.jar:/usr/hdp/current/hadoop-yarn-client/lib//jsch-0.1.54.jar:/usr/hdp/current/hadoop-yarn-client/lib//jsr305-3.0.0.jar:/usr/hdp/current/hadoop-yarn-client/lib//:/usr/hdp/current/ext/hadoop//"

export DMLC_NUM_WORKER="1"

export DMLC_JOB_CLUSTER="yarn"

export HADOOP_TOKEN_FILE_LOCATION="/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/container_tokens"

export NM_AUX_SERVICE_spark_shuffle=""

export LOCAL_USER_DIRS="/hadoop/yarn/local/usercache/root/"

export HADOOP_HOME="/usr/hdp/2.6.4.0-91/hadoop"

export DMLC_TASK_ID="0"

export HOME="/home/"

export NM_AUX_SERVICE_spark2_shuffle=""

export CONTAINER_ID="container_e01_1517883514475_0588_01_000002"

export MALLOC_ARENA_MAX="4"

echo "Setting up job resources"

ln -sf "/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/12/dmlc-yarn.jar" "dmlc-yarn.jar"

ln -sf "/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/10/launcher.py" "launcher.py"

ln -sf "/hadoop/yarn/local/usercache/root/appcache/application_1517883514475_0588/filecache/11/analyzeyarn.py" "analyzeyarn.py"

echo "Copying debugging information"

# Creating copy of launch script

cp "launch_container.sh" "/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/launch_container.sh"

chmod 640 "/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/launch_container.sh"

# Determining directory contents

echo "ls -l:" 1>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/directory.info"

ls -l 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/directory.info"

echo "find -L . -maxdepth 5 -ls:" 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/directory.info"

find -L . -maxdepth 5 -ls 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/directory.info"

echo "broken symlinks(find -L . -maxdepth 5 -type l -ls):" 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/directory.info"

find -L . -maxdepth 5 -type l -ls 1>>"/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/directory.info"

echo "Launching container"

exec /bin/bash -c "./launcher.py ./analyzeyarn.py 1>/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/stdout 2>/hadoop/yarn/log/application_1517883514475_0588/container_e01_1517883514475_0588_01_000002/stderr"

End of LogType:launch_container.sh

************************************************************************************

Container: container_e01_1517883514475_0588_01_000002 on princeton0.field.hortonworks.com_45454

LogAggregationType: AGGREGATED

===============================================================================================

LogType:stderr

LogLastModifiedTime:Sun Feb 25 02:40:17 +0000 2018

LogLength:393

LogContents:

[02:40:17] src/nnvm/legacy_json_util.cc:209: Loading symbol saved by previous version v0.8.0. Attempting to upgrade...

[02:40:17] src/nnvm/legacy_json_util.cc:217: Symbol successfully upgraded!

/usr/lib/python2.7/site-packages/mxnet/module/base_module.py:65: UserWarning: Data provided by label_shapes don't match names specified by label_names ([] vs. ['softmax_label'])

warnings.warn(msg)

End of LogType:stderr

***********************************************************************

[root@princeton0 demo]# cat logs/mxnet.log

2018-02-25 01:49:11,667 INFO start listen on 172.26.200.216:9091

2018-02-25 01:54:05,445 INFO start listen on 172.26.200.216:9091

2018-02-25 02:17:52,685 INFO start listen on 172.26.200.216:9091

2018-02-25 02:29:46,873 INFO start listen on 172.26.200.216:9091

2018-02-25 02:29:48,076 DEBUG Submit job with 1 workers and 0 servers

2018-02-25 02:29:48,078 DEBUG java -cp /usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop/lib/*:/usr/hdp/2.6.4.0-91/hadoop/.//*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/./:/usr/hdp/2.6.4.0-91/hadoop-hdfs/lib/*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/.//*:/usr/hdp/2.6.4.0-91/hadoop-yarn/lib/*:/usr/hdp/2.6.4.0-91/hadoop-yarn/.//*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/lib/*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/.//*::mysql-connector-java.jar:/usr/hdp/2.6.4.0-91/tez/*:/usr/hdp/2.6.4.0-91/tez/lib/*:/usr/hdp/2.6.4.0-91/tez/conf:/opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar org.apache.hadoop.yarn.dmlc.Client -file /opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar -file /opt/demo/dmlc-core/tracker/dmlc_tracker/launcher.py -file /opt/demo/incubator-mxnet/analyzeyarn.py -jobname DMLC[nworker=1]:analyzeyarn.py -tempdir /tmp -queue default ./launcher.py ./analyzeyarn.py

2018-02-25 02:33:24,463 INFO start listen on 172.26.200.216:9091

2018-02-25 02:33:25,633 DEBUG Submit job with 1 workers and 0 servers

2018-02-25 02:33:25,634 DEBUG java -cp /usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop/lib/*:/usr/hdp/2.6.4.0-91/hadoop/.//*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/./:/usr/hdp/2.6.4.0-91/hadoop-hdfs/lib/*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/.//*:/usr/hdp/2.6.4.0-91/hadoop-yarn/lib/*:/usr/hdp/2.6.4.0-91/hadoop-yarn/.//*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/lib/*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/.//*::mysql-connector-java.jar:/usr/hdp/2.6.4.0-91/tez/*:/usr/hdp/2.6.4.0-91/tez/lib/*:/usr/hdp/2.6.4.0-91/tez/conf:/opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar org.apache.hadoop.yarn.dmlc.Client -file /opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar -file /opt/demo/dmlc-core/tracker/dmlc_tracker/launcher.py -file /opt/demo/incubator-mxnet/analyzeyarn.py -jobname DMLC[nworker=1]:analyzeyarn.py -tempdir /tmp -queue default ./launcher.py ./analyzeyarn.py

2018-02-25 02:40:00,993 INFO start listen on 172.26.200.216:9091

2018-02-25 02:40:02,067 DEBUG Submit job with 1 workers and 0 servers

2018-02-25 02:40:02,068 DEBUG java -cp /usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop/lib/*:/usr/hdp/2.6.4.0-91/hadoop/.//*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/./:/usr/hdp/2.6.4.0-91/hadoop-hdfs/lib/*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/.//*:/usr/hdp/2.6.4.0-91/hadoop-yarn/lib/*:/usr/hdp/2.6.4.0-91/hadoop-yarn/.//*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/lib/*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/.//*::mysql-connector-java.jar:/usr/hdp/2.6.4.0-91/tez/*:/usr/hdp/2.6.4.0-91/tez/lib/*:/usr/hdp/2.6.4.0-91/tez/conf:/opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar org.apache.hadoop.yarn.dmlc.Client -file /opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar -file /opt/demo/dmlc-core/tracker/dmlc_tracker/launcher.py -file /opt/demo/incubator-mxnet/analyzeyarn.py -jobname DMLC[nworker=1]:analyzeyarn.py -tempdir /tmp -queue default ./launcher.py ./analyzeyarn.py

The Run Logs

2018-02-26 18:51:04,613 INFO start listen on 172.26.200.216:9091

2018-02-26 18:51:51,143 INFO start listen on 172.26.200.216:9091

2018-02-26 18:51:52,336 DEBUG Submit job with 1 workers and 0 servers

2018-02-26 18:51:52,337 DEBUG /usr/jdk64/jdk1.8.0_112/bin/java -cp /usr/hdp/2.6.4.0-91/hadoop/conf:/usr/hdp/2.6.4.0-91/hadoop/lib/*:/usr/hdp/2.6.4.0-91/hadoop/.//*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/./:/usr/hdp/2.6.4.0-91/hadoop-hdfs/lib/*:/usr/hdp/2.6.4.0-91/hadoop-hdfs/.//*:/usr/hdp/2.6.4.0-91/hadoop-yarn/lib/*:/usr/hdp/2.6.4.0-91/hadoop-yarn/.//*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/lib/*:/usr/hdp/2.6.4.0-91/hadoop-mapreduce/.//*::mysql-connector-java.jar:/usr/hdp/2.6.4.0-91/tez/*:/usr/hdp/2.6.4.0-91/tez/lib/*:/usr/hdp/2.6.4.0-91/tez/conf:/opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar org.apache.hadoop.yarn.dmlc.Client -file /opt/demo/dmlc-core/tracker/dmlc_tracker/../yarn/dmlc-yarn.jar -file /opt/demo/dmlc-core/tracker/dmlc_tracker/launcher.py -file /opt/demo/incubator-mxnet/analyzeyarn.py -jobname DMLC[nworker=1]:analyzeyarn.py -tempdir /tmp -queue default ./launcher.py ./analyzeyarn.py

Below are some YARN UI screens to show you the application run results.

This Python script will look familiar, as it is the one we have been using throughout this series. To make it easier to run in YARN, I have combined the external Inception predict functions and some Pydoop commands that write data to HDFS into one large Python script.
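The launch_container.sh log shown earlier exports several DMLC_* environment variables (DMLC_ROLE, DMLC_TASK_ID, DMLC_NUM_WORKER, DMLC_NUM_SERVER, DMLC_TRACKER_URI, DMLC_TRACKER_PORT) before the script starts. As a minimal sketch, a script running in the container could read them like this. The helper name `dmlc_context` and the fallback defaults are my own assumptions for illustration and are not part of dmlc-core or the original script.

```python
import os


def dmlc_context(env=None):
    """Collect the DMLC_* settings exported by the YARN launch script.

    `env` defaults to os.environ; passing a plain dict makes this easy to test.
    The fallback values are illustrative assumptions for running outside YARN.
    """
    env = os.environ if env is None else env
    return {
        "role": env.get("DMLC_ROLE", "unknown"),
        "task_id": int(env.get("DMLC_TASK_ID", "0")),
        "num_workers": int(env.get("DMLC_NUM_WORKER", "1")),
        "num_servers": int(env.get("DMLC_NUM_SERVER", "0")),
        "tracker": "{0}:{1}".format(
            env.get("DMLC_TRACKER_URI", ""), env.get("DMLC_TRACKER_PORT", "")
        ),
    }


if __name__ == "__main__":
    # Inside the container this prints the values from launch_container.sh.
    print(dmlc_context())
```

Logging this dictionary at the top of a run is one way to confirm which worker produced a given output file when you scale past one worker.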

The example output in HDFS

hdfs dfs -ls /mxnetyarn

Found 5 items

-rw-r--r-- 3 root hdfs 416 2018-02-26 18:45 /mxnetyarn/mxnet_uuid_json_20180226184514.json

-rw-r--r-- 3 root hdfs 417 2018-02-26 18:46 /mxnetyarn/mxnet_uuid_json_20180226184643.json

-rw-r--r-- 3 root hdfs 417 2018-02-26 18:47 /mxnetyarn/mxnet_uuid_json_20180226184707.json

-rw-r--r-- 3 yarn hdfs 417 2018-02-26 18:52 /mxnetyarn/mxnet_uuid_json_20180226185209.json

-rw-r--r-- 3 yarn hdfs 417 2018-02-26 22:08 /mxnetyarn/mxnet_uuid_json_20180226220806.json

We could ingest these HDFS files with Apache NiFi and convert them to Hive tables, or you could do that directly with Apache Hive.
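Before loading these records into a table, it can help to flatten each JSON document into one row per prediction. The sketch below does that with only the standard library, using the record printed in the container stdout log earlier in this article. The function name `flatten` and the column names (`rank`, `synset_id`, `label`, `pct`) are my own choices for illustration and do not come from NiFi or Hive.

```python
import json

# Record copied from the container stdout log shown earlier in this article.
record_json = (
    '{"top1pct": "67.6", "top1": "n02883205 bow tie, bow-tie, bowtie", '
    '"top2pct": "11.5", "top2": "n04589890 window screen", '
    '"top3pct": "4.5", "top3": "n03938244 pillow", '
    '"top4pct": "2.8", "top4": "n04590129 window shade", '
    '"top5pct": "2.8", "top5": "n03485794 handkerchief, hankie, hanky, hankey", '
    '"imagefilename": "/opt/demo/incubator-mxnet/nanotie7.png", '
    '"uuid": "mxnet_uuid_img_20180225024017", "runtime": "5.0"}'
)
record = json.loads(record_json)


def flatten(rec, n=5):
    """Turn one topN record into n rows, one per ranked prediction."""
    rows = []
    for rank in range(1, n + 1):
        # Each label is "<synset id> <comma-separated names>".
        synset_id, _, label = rec["top{0}".format(rank)].partition(" ")
        rows.append({
            "uuid": rec["uuid"],
            "rank": rank,
            "synset_id": synset_id,
            "label": label,
            "pct": float(rec["top{0}pct".format(rank)]),
        })
    return rows


if __name__ == "__main__":
    for row in flatten(record):
        print(json.dumps(row, sort_keys=True))
```

One row per prediction makes it straightforward to filter by rank or confidence later, whichever tool ends up doing the ingest.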

Source Code:

https://github.com/tspannhw/nifi-mxnet-yarn

https://github.com/tspannhw/ApacheBigData101

Python Script

#!/bin/python
# fork of previous ones forked from Apache MXNet examples
# https://github.com/tspannhw/mxnet_rpi/blob/master/analyze.py
# Runs under Python 2.7 on the cluster (urllib.urlretrieve is the Python 2 API)
import json
import time
import urllib
from collections import namedtuple
from time import gmtime, strftime

import cv2
import mxnet as mx
import numpy as np
import pydoop.hdfs as hdfs

Batch = namedtuple('Batch', ['data'])

# Load the symbols for the networks
with open('/opt/demo/incubator-mxnet/synset.txt', 'r') as f:
    synsets = [l.rstrip() for l in f]

# Load the network parameters
sym, arg_params, aux_params = mx.model.load_checkpoint('/opt/demo/incubator-mxnet/Inception-BN', 0)

# Load the network into an MXNet module and bind the corresponding parameters
mod = mx.mod.Module(symbol=sym, context=mx.cpu())
mod.bind(for_training=False, data_shapes=[('data', (1, 3, 224, 224))])
mod.set_params(arg_params, aux_params)


def predict(filename, mod, synsets, N=5):
    '''
    Function to predict objects by giving the model a pointer to an image file
    and running a forward pass through the model.

    inputs:
    filename = jpeg file of image to classify objects in
    mod = the module object representing the loaded model
    synsets = the list of symbols representing the model
    N = Optional parameter denoting how many predictions to return (default is top 5)

    outputs:
    python list of top N predicted objects and corresponding probabilities
    '''
    img = cv2.imread(filename)
    # cv2.imread returns None for a missing or unreadable file, so check
    # before converting the color space
    if img is None:
        return None
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (224, 224))
    # Reorder from height x width x channel to channel x height x width
    img = np.swapaxes(img, 0, 2)
    img = np.swapaxes(img, 1, 2)
    img = img[np.newaxis, :]

    mod.forward(Batch([mx.nd.array(img)]))
    prob = mod.get_outputs()[0].asnumpy()
    prob = np.squeeze(prob)

    # Sort class indices by descending probability and keep the top N
    topN = []
    a = np.argsort(prob)[::-1]
    for i in a[0:N]:
        topN.append((prob[i], synsets[i]))
    return topN


# Code to download an image from the internet and run a prediction on it
def predict_from_url(url, N=5):
    filename = url.split("/")[-1]
    urllib.urlretrieve(url, filename)
    img = cv2.imread(filename)
    if img is None:
        print("Failed to download")
    else:
        return predict(filename, mod, synsets, N)


# Code to predict on a local file
def predict_from_local_file(filename, N=5):
    return predict(filename, mod, synsets, N)


start = time.time()

# Create a unique name for the JSON output file
uniqueid = 'mxnet_uuid_{0}_{1}.json'.format('json', strftime("%Y%m%d%H%M%S", gmtime()))
filename = '/opt/demo/incubator-mxnet/nanotie7.png'
topn = []

# Run inception prediction on image
try:
    topn = predict_from_local_file(filename, N=5)
except Exception:
    print("Error")

try:
    # 5 MXNET Analysis
    top1 = str(topn[0][1])
    top1pct = str(round(topn[0][0], 3) * 100)
    top2 = str(topn[1][1])
    top2pct = str(round(topn[1][0], 3) * 100)
    top3 = str(topn[2][1])
    top3pct = str(round(topn[2][0], 3) * 100)
    top4 = str(topn[3][1])
    top4pct = str(round(topn[3][0], 3) * 100)
    top5 = str(topn[4][1])
    top5pct = str(round(topn[4][0], 3) * 100)

    end = time.time()

    row = {'uuid': uniqueid,
           'top1pct': top1pct, 'top1': top1,
           'top2pct': top2pct, 'top2': top2,
           'top3pct': top3pct, 'top3': top3,
           'top4pct': top4pct, 'top4': top4,
           'top5pct': top5pct, 'top5': top5,
           'imagefilename': filename,
           'runtime': str(round(end - start))}
    json_string = json.dumps(row)
    print(json_string)

    # Write the JSON record to HDFS with Pydoop
    hdfs.hdfs(host="princeton0.field.hortonworks.com", port=50090, user="root")
    hdfs.dump(json_string + "\n", "/mxnetyarn/" + uniqueid, mode="at")

    # Append the same record to a local log; the with block closes the file
    with open("/opt/demo/logs/mxnetyarn.log", "a") as fh:
        fh.write('{0}\n'.format(json_string))
except Exception:
    print("{\"message\": \"Failed to run\"}")
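The script builds its output row from ten separate top-N variables and formats each percentage with `str(round(p, 3) * 100)`, which on some Python versions can expose floating-point noise in the string. As a hedged alternative sketch, the row could be built in a loop with a fixed-precision format. The helper name `build_row` is hypothetical, and the sample probabilities below are illustrative values chosen to match the percentages in the stdout log, not actual model output.

```python
def build_row(topn, uniqueid, filename, runtime_seconds):
    """Build the output record from a list of (probability, label) pairs."""
    row = {
        "uuid": uniqueid,
        "imagefilename": filename,
        # One decimal place, matching the "5.0" style seen in the log.
        "runtime": "{0:.1f}".format(runtime_seconds),
    }
    for rank, (prob, label) in enumerate(topn, start=1):
        row["top{0}".format(rank)] = str(label)
        # Fixed-precision formatting avoids strings like 7.000000000000001.
        row["top{0}pct".format(rank)] = "{0:.1f}".format(float(prob) * 100.0)
    return row


if __name__ == "__main__":
    sample = [
        (0.676, "n02883205 bow tie, bow-tie, bowtie"),
        (0.115, "n04589890 window screen"),
    ]
    print(build_row(sample, "mxnet_uuid_json_example.json", "nanotie7.png", 5.0))
```

The loop also works unchanged if you ask for a different N, since the keys are generated from the rank.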

We are running with this image, a pretty standard picture of a cat with a necktie holding a Raspberry Pi with a Rainbow HAT on it.

See: https://raw.githubusercontent.com/tspannhw/ApacheBigData101/master/nanotie7.png

References:

https://github.com/dmlc/dmlc-core/tree/master/tracker/yarn

https://www.slideshare.net/AmazonWebServices/deep-learning-for-developers-86885654

https://mxnet.incubator.apache.org/tutorials/embedded/wine_detector.html

https://github.com/apache/incubator-mxnet/tree/master/example/image-classification

https://mxnet.incubator.apache.org/how_to/cloud.html

http://dmlc-core.readthedocs.io/en/latest/

https://community.hortonworks.com/articles/42995/yarn-application-monitoring-with-nifi.html